Normalization in NLP

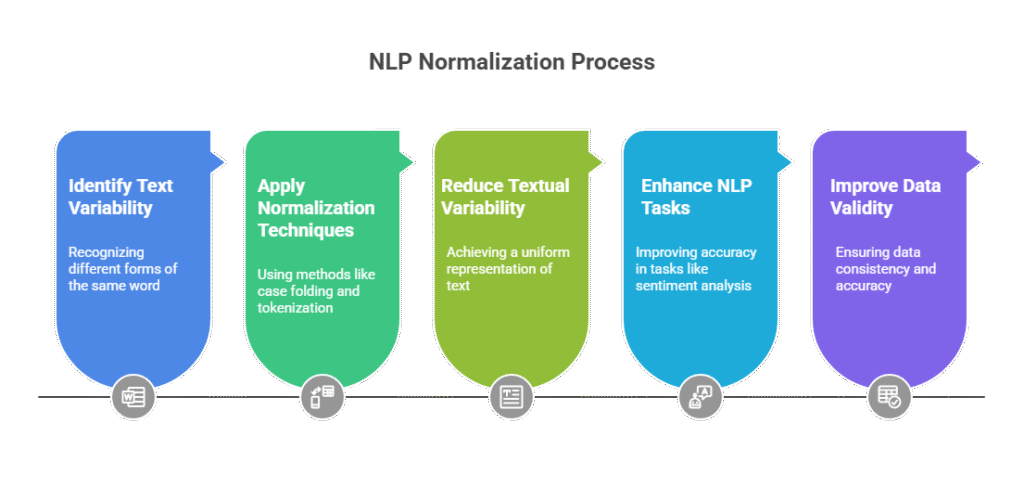

Normalization in NLP standardizes text before further processing. As part of careful data preparation, this phase is essential to the NLP pipeline.

The main objective of normalization is to reduce textual variability, so that different written forms of the same token or concept can be mapped to a single, canonical form. This helps models generalize across a variety of tasks.

Kinds and contexts of normalization in NLP:

Text Normalization (General): This is an umbrella term for a number of preprocessing steps applied to text.

- Its goal is to transform text into a standard format.

- Typical tasks include tokenizing (segmenting) words, normalizing word formats, and segmenting sentences.

- One example is handling non-standard words such as numbers, dates, and abbreviations by mapping them to a special vocabulary (such as “0.0”, “AAA”, “!NUM”, and “!DATE”). This keeps the vocabulary small and can improve the accuracy of language modelling tasks.

- Case folding is a popular form of normalization that aids generalization in tasks such as speech recognition and information retrieval. It maps all text to lowercase (for example, “Woodchuck” and “woodchuck” both become “woodchuck”). It can be a poor choice, though, for tasks where case carries meaning (such as “US” for the country versus “us” the pronoun).

- Text normalization can be performed with rules or with encoder-decoder models. Rule-based approaches typically tokenize the text first and then use regular expressions and other patterns to identify non-standard words.

- A simple baseline for handling negation in sentiment analysis is to prepend a “NOT_” prefix, during text normalization, to every word that follows a negation marker up to the next punctuation mark. This creates new “words” such as “NOT_like”.
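The steps in the list above can be sketched in Python as a small rule-based pipeline: tokenize, map non-standard words to placeholders, case-fold, then mark negation. This is a minimal illustration under stated assumptions: the regular expressions, the use of `!NUM`/`!DATE` as the only placeholder classes, and the negation word list are hypothetical choices rather than a standard rule set, and real normalizers cover many more kinds of non-standard words.

```python
import re

# Hypothetical rules (not a standard inventory): dates are checked before numbers.
PLACEHOLDER_RULES = [
    (re.compile(r"^\d{1,2}/\d{1,2}/\d{2,4}$"), "!DATE"),  # e.g. 12/05/2021
    (re.compile(r"^\d+(?:[.,]\d+)*$"), "!NUM"),           # e.g. 3, 3.14, 1,000
]
NEGATIONS = {"not", "no", "never", "didn't", "don't", "isn't", "can't"}  # hypothetical list
PUNCTUATION = {".", ",", "!", "?", ";", ":"}

def tokenize(text):
    # Rough regex tokenizer: numbers/dates, words with an optional apostrophe part, or punctuation.
    return re.findall(r"\d+(?:[/.,]\d+)*|\w+(?:'\w+)?|[^\w\s]", text)

def map_placeholders(tokens):
    # Replace each non-standard word with the placeholder of the first rule it matches.
    out = []
    for tok in tokens:
        for pattern, placeholder in PLACEHOLDER_RULES:
            if pattern.match(tok):
                out.append(placeholder)
                break
        else:
            out.append(tok)
    return out

def case_fold(tokens):
    # Lowercase everything except placeholders; note this also merges "US" and "us".
    return [t if t.startswith("!") else t.lower() for t in tokens]

def mark_negation(tokens):
    # Prefix NOT_ to every token after a negation word, up to the next punctuation mark.
    out, negating = [], False
    for tok in tokens:
        if tok in PUNCTUATION:
            negating = False
            out.append(tok)
        elif negating:
            out.append("NOT_" + tok)
        else:
            out.append(tok)
            negating = tok in NEGATIONS
    return out

def normalize(text):
    return mark_negation(case_fold(map_placeholders(tokenize(text))))

print(normalize("I didn't like this movie, but I watched it 3 times on 12/05/2021."))
```

The order matters in this sketch: placeholders are assigned before case folding so that `!NUM` and `!DATE` keep their form, and negation marking runs last so that it sees lowercased tokens.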

Morphological Normalization: This type of normalization aims to collapse different forms of a word into a single stem or root.

- Stemming is a popular form of morphological normalization in information retrieval (IR). It reduces a word to its stem, usually by stripping suffixes, and the stem is then used in place of the original word. The resulting stem is not always a meaningful word. Grouping words by their stem can improve the rate at which documents are successfully matched to queries.

- Like stemming, lemmatization maps several inflected forms of a word to a common base form, called a lemma: “sang,” “sung,” and “sings” all map to “sing.” The main difference is that the lemma is the dictionary headword, so it is usually a real, familiar word. Lemmatization links morphological variants to their lemma, which carries the word’s invariant syntactic and semantic information as recorded in a dictionary. It is crucial for processing morphologically complex languages and helps in building a vocabulary of valid lemmas.
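To make the contrast between stemming and lemmatization concrete, here is a toy sketch. The suffix list and lemma table are hypothetical and deliberately tiny: `naive_stem` is a simple longest-suffix stripper, not the Porter stemmer or any other published algorithm, and a real lemmatizer would rely on a morphological lexicon (usually with part-of-speech information) rather than a hand-written dictionary.

```python
# Hypothetical suffixes, tried longest-first; a toy stripper, not the Porter algorithm.
SUFFIXES = ["ational", "ization", "ness", "ing", "ies", "ed", "es", "s"]

# Hypothetical hand-written lemma table; real lemmatizers use a morphological lexicon.
LEMMAS = {"sang": "sing", "sung": "sing", "sings": "sing", "singing": "sing",
          "was": "be", "were": "be", "mice": "mouse"}

def naive_stem(word, min_stem=3):
    """Strip the first matching suffix, keeping at least `min_stem` characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

def lemmatize(word):
    """Look the word up in the lemma table; fall back to the lowercased word."""
    return LEMMAS.get(word.lower(), word.lower())

words = ["connected", "connecting", "connects", "happiness", "sang", "sung", "sings"]
print([naive_stem(w) for w in words])
print([lemmatize(w) for w in ["sang", "sung", "sings"]])
```

The stemmer groups “connected,” “connecting,” and “connects” under one stem and produces the non-word stem “happi,” but it cannot relate the irregular forms “sang” and “sung” to “sings”; only the lemma lookup does.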

Named Entity Normalization: This task deals specifically with ambiguity and variation in the way named entities are referred to in text.

- One example is Gene Normalization (GN) in BioNLP, which links textual mentions of a gene to a specific database record. This is necessary because authors often use several names or symbols for the same gene, which creates ambiguity. For example, several different symbols are used to refer to the Brca1 gene.
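A minimal sketch of the lookup step in gene normalization, assuming a hypothetical alias table: the identifier `GENE_DB:0001` is made up and stands in for a real database record, and `Xyz9` is an invented unknown mention. The sketch only folds case, whitespace, and hyphens so that spelling variants reach the same entry. Real GN systems also need synonym lists drawn from gene databases and context-based disambiguation; case folding alone would, for instance, merge the human (BRCA1) and mouse (Brca1) naming conventions, which refer to genes in different species.

```python
import re

# Hypothetical alias table: normalized surface form -> made-up database ID.
# A real table would be built from a gene database's official symbols and synonyms.
GENE_ALIASES = {"brca1": "GENE_DB:0001"}

def surface_key(mention):
    # Case-fold and drop whitespace and hyphens so spelling variants collide.
    return re.sub(r"[\s\-]+", "", mention.lower())

def normalize_gene(mention):
    """Return the (hypothetical) database ID for a gene mention, or None if unknown."""
    return GENE_ALIASES.get(surface_key(mention))

for m in ["Brca1", "BRCA1", "BRCA-1", "brca 1", "Xyz9"]:
    print(m, "->", normalize_gene(m))
```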

Temporal Normalization: Temporal expressions (such as dates, times, and durations) are mapped to a standard format, such as the ISO 8601 standard, so that each one denotes a specific point in time or length of time.
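A small Python sketch of temporal normalization for calendar dates, assuming a handful of input formats and an anchor date (for example, the document’s creation date) for resolving relative expressions. The format list and the relative-expression table are assumptions; note that this format list reads `03/05/2021` month-first, which is exactly the kind of ambiguity a real temporal tagger must resolve. Times and durations are not covered.

```python
from datetime import date, datetime, timedelta

# Assumed input formats, tried in order; "%m/%d/%Y" reads slash dates month-first.
DATE_FORMATS = ["%Y-%m-%d", "%B %d, %Y", "%d %B %Y", "%m/%d/%Y"]

# Hypothetical relative expressions, as day offsets from the anchor date.
RELATIVE_OFFSETS = {"today": 0, "yesterday": -1, "tomorrow": 1}

def normalize_date(expr, anchor):
    """Map a date expression to an ISO 8601 date string (YYYY-MM-DD), or None."""
    text = expr.strip()
    if text.lower() in RELATIVE_OFFSETS:
        # Relative expressions only make sense with respect to an anchor date.
        return (anchor + timedelta(days=RELATIVE_OFFSETS[text.lower()])).isoformat()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next assumed format
    return None  # unrecognized expression

doc_date = date(2021, 3, 5)  # hypothetical document creation date
for e in ["March 5, 2021", "5 March 2021", "03/05/2021", "yesterday", "next fortnight"]:
    print(e, "->", normalize_date(e, doc_date))
```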

Normalization in Statistical/Machine Learning Contexts: Normalization is also used in the more general statistical and machine learning methods applied in NLP.

- In probabilistic models such as n-gram language models, normalizing means dividing counts by a total count so that the resulting probabilities lie between 0 and 1. This is part of probability estimation. Smoothing techniques often adjust the counts before they are normalized into probabilities (for example, adding one to every count in Laplace smoothing), and smoothed estimates may need to be renormalized.

- In information retrieval (IR), normalization is part of term-weighting schemes such as cosine normalization, which scales term weights by the length of the document vector. Documents and queries are compared this way in the vector space model. TF-IDF weighting also incorporates normalization, weighing a word’s frequency in a document against how common it is across the corpus to capture its significance to that document.

- Layer normalization and batch normalization are techniques for improving training in neural networks. Layer normalization computes the mean and standard deviation over the elements of a layer’s vector and uses them to re-center and re-scale those elements, keeping hidden-layer values in a range that facilitates gradient-based training. It can reduce the exploding-gradient problem and is applied in the same way at training and test time. Batch normalization, by contrast, normalizes activations across the examples in a mini-batch so that they have a mean of 0 and a variance of 1.

- Normalizing pre-trained word embeddings to unit length can remove frequency information carried by vector length, which may help or hurt depending on the task.

- In relational databases, normalization can help guarantee data validity, but it requires defining the data structure (schema) in advance, which can be difficult for exploratory language data.
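The count normalization described for n-gram models can be checked on a tiny corpus. This sketch estimates bigram probabilities by dividing each bigram count by the count of its first word, and optionally applies add-one (Laplace) smoothing, P(w | prev) = (count(prev, w) + 1) / (count(prev) + V), where V is the vocabulary size. The three-sentence corpus, the `<s>`/`</s>` padding, and the choice to count `</s>` (but not `<s>`) in V are assumptions made for illustration.

```python
from collections import Counter

def bigram_counts(sentences):
    """Count bigram histories and pairs, padding each sentence with <s> ... </s>."""
    history, pairs = Counter(), Counter()
    for sent in sentences:
        toks = ["<s>"] + sent.split() + ["</s>"]
        history.update(toks[:-1])          # a token is a history only if something follows it
        pairs.update(zip(toks, toks[1:]))
    return history, pairs

def bigram_prob(prev, word, history, pairs, vocab_size=None):
    """Relative-frequency (MLE) estimate, or add-one (Laplace) if vocab_size is given."""
    if vocab_size is None:
        return pairs[(prev, word)] / history[prev]
    return (pairs[(prev, word)] + 1) / (history[prev] + vocab_size)

sentences = ["I am Sam", "Sam I am", "I do not like green eggs and ham"]
history, pairs = bigram_counts(sentences)
# Possible next words: every word type plus the end marker </s>.
vocab = {w for s in sentences for w in s.split()} | {"</s>"}

print(bigram_prob("<s>", "I", history, pairs))              # 2/3
print(bigram_prob("<s>", "I", history, pairs, len(vocab)))  # 3/14
# Normalization makes each conditional distribution sum to 1 (up to rounding).
print(sum(bigram_prob("<s>", w, history, pairs, len(vocab)) for w in vocab))
```

Smoothing moves probability mass from seen to unseen bigrams: P(I | `<s>`) drops from 2/3 to 3/14, while the denominator grows by V so that the smoothed row is still normalized.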
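The IR weighting described above can be sketched with sparse dictionaries. This version assumes one common TF-IDF variant (tf = log10(count + 1), idf = log10(N / df)), whitespace tokenization, and a made-up four-document corpus. `cosine_normalize` divides each weight by the vector’s Euclidean length; scaling word embeddings to unit length is the same operation, which is why it discards whatever information the vector length carried.

```python
import math
from collections import Counter

def tf_idf_vectors(docs):
    """One sparse {term: weight} vector per document
    (assumed variant: tf = log10(count + 1), idf = log10(N / df))."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    df = Counter(term for toks in tokenized for term in set(toks))
    return [
        {t: math.log10(c + 1) * math.log10(n_docs / df[t]) for t, c in Counter(toks).items()}
        for toks in tokenized
    ]

def cosine_normalize(vec):
    """Divide each weight by the vector's Euclidean (L2) length."""
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {t: w / norm for t, w in vec.items()} if norm else dict(vec)

def cosine(u, v):
    """Cosine similarity of two vectors that are already length-normalized."""
    return sum(w * v.get(t, 0.0) for t, w in u.items())

docs = ["sweet sweet nurse love", "sweet sorrow", "how sweet is love", "nurse"]
vecs = [cosine_normalize(v) for v in tf_idf_vectors(docs)]
print(round(cosine(vecs[0], vecs[2]), 3), cosine(vecs[1], vecs[3]))
```

Because every vector is normalized once up front, comparing a query with a document reduces to a dot product, and long documents are not favored merely for containing more terms.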
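A pure-Python sketch of the two neural normalizations, written for readability rather than speed. `layer_norm` uses statistics over the elements of a single vector and includes the learnable gain and offset as plain optional parameters (named `gamma` and `beta` here by assumption). `batch_norm` uses statistics over the examples of a mini-batch for each feature, and omits the learnable scale and shift as well as the running averages that practical implementations keep for test time. The small `eps` term, an assumed default, guards against division by zero.

```python
import math

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    """Re-center and re-scale one vector using the mean/std of its own elements."""
    n = len(x)
    mu = sum(x) / n
    var = sum((v - mu) ** 2 for v in x) / n
    gamma = [1.0] * n if gamma is None else gamma  # learnable gain (assumed initial value)
    beta = [0.0] * n if beta is None else beta     # learnable offset (assumed initial value)
    return [g * (v - mu) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]

def batch_norm(batch, eps=1e-5):
    """Normalize each feature (column) across the examples (rows) of a mini-batch.
    Omits the learnable scale/shift and the running statistics used at test time."""
    n = len(batch)
    out_cols = []
    for col in zip(*batch):
        mu = sum(col) / n
        var = sum((v - mu) ** 2 for v in col) / n
        out_cols.append([(v - mu) / math.sqrt(var + eps) for v in col])
    return [list(row) for row in zip(*out_cols)]

def mean_var(values):
    m = sum(values) / len(values)
    return m, sum((v - m) ** 2 for v in values) / len(values)

hidden = [1.0, 2.0, 3.0, 4.0]
print(mean_var(layer_norm(hidden)))             # mean close to 0, variance close to 1

batch = [[1.0, 10.0], [3.0, 20.0], [5.0, 30.0]]
normed = batch_norm(batch)
print([mean_var(col) for col in zip(*normed)])  # per feature: mean close to 0, variance close to 1
```

The only structural difference is the axis: layer normalization computes statistics within one example, so it behaves identically at training and test time, while batch normalization computes them across examples.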

The value of normalization in NLP depends on the particular data and task. Although it can aid generalization and shrink the feature space, it risks discarding linguistically meaningful distinctions. In some supervised learning settings, regularization and smoothing can prevent overfitting to rare features much as normalization does, without the language-specific engineering that careful normalization may require. Normalization is typically more important in unsupervised settings such as topic modelling and content-based IR.