In Natural Language Processing, finite-state approaches like Finite State Automata (FSAs) and Finite State Transducers FST in NLP are key methods, especially for applications that require sequential text processing. Regular expressions and formal language theory, which define regular languages, are intimately related to them.

Finite-state models

Finite State Automata (FSAs) and Finite State Transducers(FST) are two related ideas at the core of them.

Finite State Automata (FSAs)

Theoretical models called finite state automata (FSAs), sometimes referred to as finite-state machines (FSMs) or finite automata, are used to ascertain whether a string is part of a certain language, also referred to as a regular language. They are made up of a vocabulary, a starting state, a set of final states, a finite number of states, and state transitions that are triggered by input symbols. The ‘wordchain’ devices that incorporate sequence, selection, and iteration can be thought of as FSAs. They are able to integrate a processing approach with a formal language specification. Although a basic FSA can implement a dictionary or serve as a pattern recognizer, such as a particular date format, it does not automatically offer input analysis.

Finite State Transducers (FSTs)

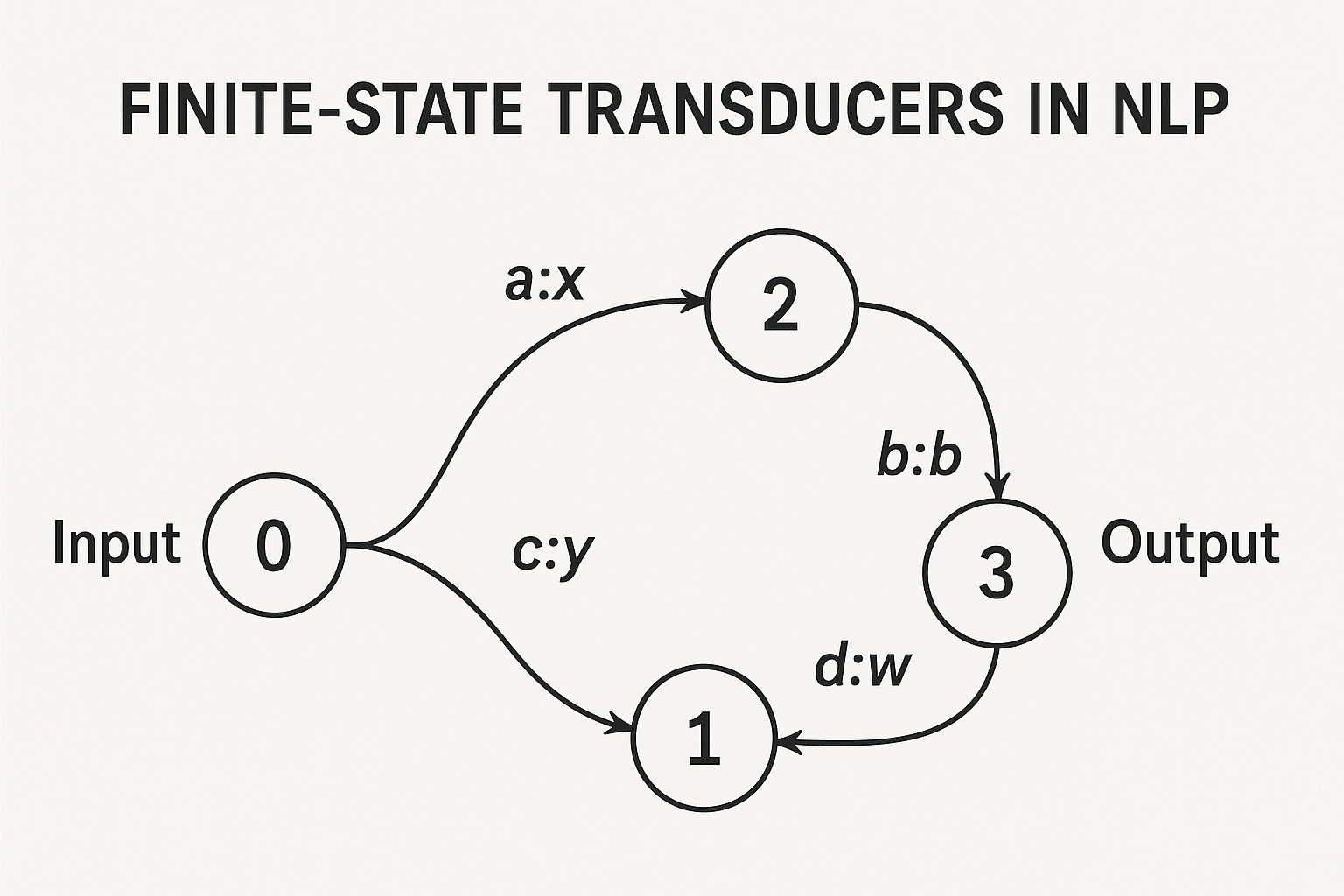

FSTs are an extension of FSAs in which input and output symbol pairs are used to identify transitions. Input symbols are consumed and output symbols are produced when traversing an FST. Because to this, they are effective tools for jobs that need mapping one string to another. A set of states, input and output vocabularies, beginning and final states, and a transition function mapping from states and input/output symbol pairs to states are all part of the formal definition of an FST.

Also Read About An Introduction To Text To Speech Synthesis And Challenges

How it Works:

An FST consists of a beginning state, a collection of states, an input and output alphabet, and a set of transitions that specify how to switch between states depending on the input. An FST outputs a matching sequence of symbols after processing an input, moving between states according to the input symbols.

FST in NLP

Applications in Morphology and Lexical Analysis

In many textbooks, finite-state methods especially those that use FSTs are essential to computational morphology and have dominated the field of lexical analysis. Lexical analysis is the process of dissecting words into their component elements in order to extract information that can be used in subsequent processing steps. Additionally, it can handle words that are absent from a basic dictionary. Linking a word’s morphological variations (such as “delivers,” “deliver,” “delivering,” and “delivered”) to its lemma (such as DELIVER) and related morphosyntactic information is a fundamental undertaking.

This mapping makes excellent use of FSTs

- Morphological Analysis: FSTs have the ability to translate a surface string the word as it appears in text, like “children” to a lexical string or description that incorporates morphological aspects and the lemma, like “child [+plural]”.

- Morphological Generation: FSTs are capable of doing the opposite, producing the appropriate surface form (“women”) from a lexical description (“woman [+plural]”). One of the main benefits of FSTs is their ability to operate in both directions.

- It is assumed that a feature will map to an affix when using FSTs for morphology, particularly in an Item and Arrangement (I&A) model. Using FSAs, where the vocabulary is made up of morphemes (stems and affixes), to allow derived words is a more comprehensive method than specifying every word form.

Handling Spelling Rules (Morphonology)

Since affixation entails adding phonological segments to a stem, phonology more especially, morphonology, or the relationship of morphology and phonology is essential to morphological analysis. Spelling differences (orthographic variance) may result from this. The preferred model for managing this is FSTs.

The “basic” form (for example, underlying glass∧s, where ∧ denotes a morpheme boundary) can be mapped to its orthographic variant (glasses) using an FST. Symbol pairs from two “tapes” usually the lower tape for surface forms and the upper tape for underlying representations are used to license transitions in the FST. You can mimic insertions or deletions on tape by using the empty string symbol ε. The English plural spelling rule, which states that -s becomes -es after specific stems, or modifications such as fly becoming flie before -s are examples.

Early research showed that FSTs might be used to model phonological and morphological laws. Originally intended to function in parallel, Koskenniemi’s Two-Level Morphology model, a key foundation for finite state-based analysis, uses FSTs to transfer underlying lexical representations to surface representations.

Effectiveness and Realistic Achievement

FSTs are incredibly effective and reasonably simple to implement. Their prevalence in lexical analysis can be explained by their efficiency and bidirectionality. Large-scale multilingual projects, like the Multext Project, which covers languages like Finnish, Irish, Zulu, and Hebrew, are something they have a lot of experience with.

“Difficult” Morphology: A Handling

Particularly when Natural Language Processing(NLP) systems become multilingual, finite-state models require methods to handle more complex phenomena that do not suit the basic I&A approach, even while they are perfect for morphology that conforms to simple affixation. These consist of:

- Affix Rivalry/Inflectional Classes: When a single feature may have more than one affix (as in Russian case marking). By adding stem classes and continuation affix classes to the FST network, this can be resolved.

- Non-contiguous Morphology: Vowel changes (apophony), such as sing/sang, are examples of features whose exponent is not a straightforward affix at the stem’s edge. In order to account for the characteristic not mapping to an affix, FSTs can map the vowel alternation utilising strategies such as focusing on particular vowels or employing empty transitions (Ø).

- Infixation: When an affix is placed inside the stem (as in Tagalog, for example). Multi-tape FSTs or cascade FSTs with an intermediate level of representation can be used to model this.

- Nonlinear Root-and-Template Morphology: seen in languages where vowel patterns and root consonants alternate, such as Arabic. This calls for simulating distinct information layers (root, exponent, and interleaving), and n-tape FSTs where n is higher than one are usually used to accomplish this.

- FSTs can intersect (as in Koskenniemi’s Two-Level Morphology) or be constructed (cascaded), in which the output of one FST becomes the input of the next. Composition enables the modular construction of complicated systems, such as the separation of orthographic spelling rules from morphological processes.

- XFST (Xerox Finite-State Tool) and LexTools are well-liked tools for creating finite-state morphological models. Another language that is mentioned as being appropriate for economically specifying FSTs is DATR.

Additional Useful Applications

Other NLP activities also make use of or are connected to finite state techniques:

- Segmenting strings into word forms is known as tokenization, and it is especially helpful for languages with ambiguous word borders.

- Information retrieval frequently uses stemming, a less complex morphological processing method that removes affixes to reduce words to their most basic form.

- Part-of-Speech (POS) Tagging: Linguistic rules or tag transitions can be described as FSTs, and finite state machines are effective for the required sequential processing.

- Discourse Systems: Basic discourse structures can be modelled using FSAs.

Differences

- There are Weighted Finite State Transducers (WFSTs) and Weighted Finite State Acceptors (WFSAs).

- Information concerning transition probabilities, where the probability on outgoing arcs from each state add up to 1, is added to the FSA via probabilistic FSAs (PFSAs). This helps SDS model the probability of user inputs.

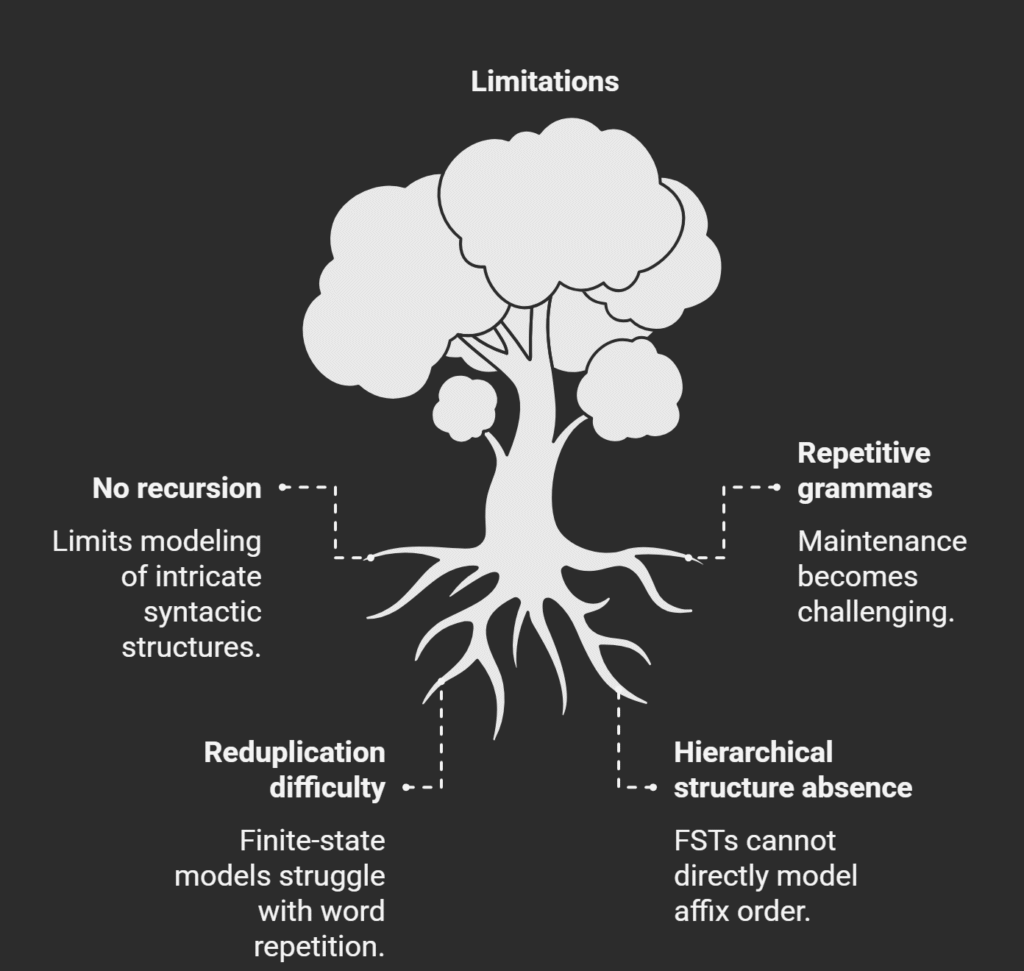

Limitations

The complete complexity of natural languages cannot be adequately modelled by finite state approaches alone, notwithstanding their advantages. The innate recursion power required for intricate syntactic structures in natural language is absent from them. Full recursion is not a natural operation for FSAs, although iteration is feasible. Additionally, grammars created solely with finite-state approaches may become highly repetitive, making them challenging to maintain.

The difficulty of reproducing phenomena like reduplication where a word portion is repeated, frequently for plurality using simple finite-state models is a theoretical restriction in morphology. Furthermore, the underlying hierarchical structure of morphologically complicated words where affixes may need to be applied in a certain order is not directly modelled by the fundamental FST technique for morphology, such as Two-Level Morphology.

In conclusion

In conclusion, finite-state methods, which include FSAs and FSTs, offer a productive and well-understood formal framework with computer science roots. They are especially effective for sequential tasks like lexical analysis and morphology, pattern matching, and string processing. They continue to be a mainstay for many fundamental NLP tasks, despite their limited capacity to fully represent the recursive structure of human language syntax.