A time series is a sequence of observations recorded over time. Time series classification (TSC), which is widely used in industries such as manufacturing, healthcare, finance, and meteorology, aims to assign sequences to categories based on their temporal patterns. Unlike conventional classification problems, TSC works with ordered data points that often depend on one another. This property calls for specialized algorithms that can handle noise, varying sequence lengths, and temporal dependencies.

Understanding Time Series Classification

Time series classification is a supervised learning task in which the input is a univariate or multivariate time series and the output is a discrete class label. For example, the accelerometer time series produced by a wearable sensor might be classified by physical activity (e.g., walking, running, sitting).

Among the main difficulties in classifying time series are:

- Temporal dependencies: The order and timing of data points carry information.

- Variable length: Series may differ in length.

- High dimensionality: Series can contain many variables or time points.

- Noise: Real-world time series are frequently noisy.

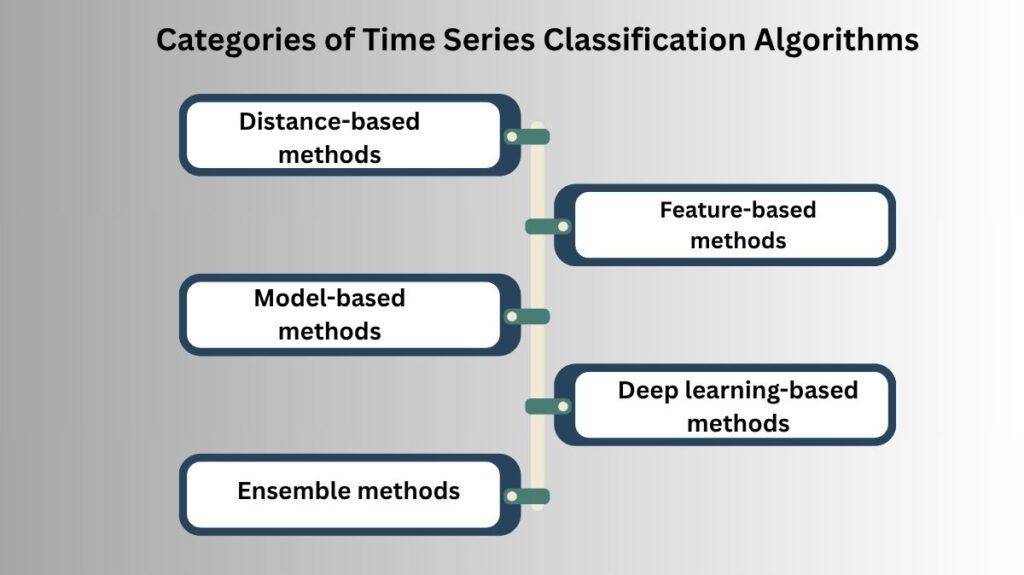

Categories of Time Series Classification Algorithms

In general, time series classification algorithms fall into one of the following categories:

Distance-Based Methods

Nearest Neighbor with Dynamic Time Warping (1-NN DTW)

Dynamic Time Warping (DTW) is a widely used distance measure that compares sequences of different lengths or speeds by nonlinearly warping the time axis to find the best alignment between two time series.

A 1-Nearest Neighbor (1-NN) classifier with DTW computes the DTW distance between a test instance and every training instance, then assigns the test instance the label of its closest neighbor.
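The 1-NN DTW procedure can be sketched in Python with NumPy. This is a minimal, unoptimized O(n*m) implementation that assumes univariate series, a squared-difference local cost, and no warping-window constraint; the names `dtw_distance` and `predict_1nn_dtw` are illustrative and do not come from any library.

```python
import numpy as np


def dtw_distance(a, b):
    """Classic dynamic-programming DTW between two univariate series."""
    n, m = len(a), len(b)
    # cost[i, j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = (a[i - 1] - b[j - 1]) ** 2
            # Extend the cheapest of the three allowed predecessor alignments
            cost[i, j] = d + min(cost[i - 1, j],      # a advances, b repeats
                                 cost[i, j - 1],      # b advances, a repeats
                                 cost[i - 1, j - 1])  # both advance
    return float(np.sqrt(cost[n, m]))


def predict_1nn_dtw(train_series, train_labels, query):
    """Give the query the label of its DTW-nearest training series."""
    distances = [dtw_distance(query, s) for s in train_series]
    return train_labels[int(np.argmin(distances))]


# Hypothetical toy training set with series of different lengths
train = [np.sin(np.linspace(0, 2 * np.pi, 50)), np.linspace(-1, 1, 40)]
labels = ["sine", "ramp"]
query = np.sin(np.linspace(0, 2 * np.pi, 60))  # a sine sampled at a different rate
print(predict_1nn_dtw(train, labels, query))
```

Libraries such as tslearn and sktime ship optimized DTW implementations; practical code usually also restricts the warping path to a window (for example a Sakoe-Chiba band) to reduce the quadratic cost.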

Euclidean Distance

Euclidean distance, the simplest similarity measure, is only meaningful when time series are aligned and of identical length. It does not account for temporal distortions such as shifts or stretching.
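The sensitivity to temporal shifts can be seen in a small sketch. The toy signals below are assumptions chosen for illustration: a sine wave is compared with a time-shifted copy and with an amplitude-scaled copy, and the shifted copy, although it has exactly the same shape, ends up farther away.

```python
import numpy as np


def euclidean_distance(a, b):
    """Point-by-point Euclidean distance; requires equal-length, aligned series."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("Euclidean distance requires series of identical length")
    return float(np.linalg.norm(a - b))


t = np.linspace(0, 2 * np.pi, 100)
base = np.sin(t)
shifted = np.sin(t - 0.5)  # same shape, shifted in time
scaled = 0.9 * np.sin(t)   # same timing, slightly smaller amplitude

print(euclidean_distance(base, shifted))
print(euclidean_distance(base, scaled))
```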

Other Distance Measures

- Edit Distance with Real Penalty (ERP)

- Time Warp Edit Distance (TWED)

- Longest Common Subsequence (LCSS)

These measures offer greater flexibility than Euclidean distance, including robustness to noise and to differences in length.
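As one example, LCSS can be sketched as a dynamic program that counts points matching within a tolerance `epsilon`, optionally only when their indices differ by at most `delta`. Normalizing the match count by the shorter length and returning `1 - similarity` is one common convention and is an assumption here, as are the parameter defaults. A single large outlier barely changes the LCSS distance, while it dominates the Euclidean distance.

```python
import numpy as np


def lcss_distance(a, b, epsilon=0.5, delta=None):
    """1 - (LCSS length / shorter length), matching points within epsilon."""
    n, m = len(a), len(b)
    L = np.zeros((n + 1, m + 1), dtype=int)
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            in_window = delta is None or abs(i - j) <= delta
            if in_window and abs(a[i - 1] - b[j - 1]) <= epsilon:
                L[i, j] = L[i - 1, j - 1] + 1  # points match: extend subsequence
            else:
                L[i, j] = max(L[i - 1, j], L[i, j - 1])  # skip a point in a or b
    return 1.0 - L[n, m] / min(n, m)


t = np.linspace(0, 2 * np.pi, 50)
clean = np.sin(t)
spiky = clean.copy()
spiky[25] += 10.0  # a single large outlier

print(lcss_distance(clean, spiky, epsilon=0.1))  # only one unmatched point
print(np.linalg.norm(clean - spiky))             # Euclidean is dominated by the spike
```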

Feature-Based Methods

These techniques transform each time series into a fixed-length feature vector, so the classifier no longer has to handle the temporal structure directly.

Statistical Features

Compute summary statistics for each series, for example:

- Mean, variance, skewness, kurtosis.

- Autocorrelation, entropy, zero-crossing rate.

The resulting features are fed into conventional classifiers like SVM, Random Forests, or Logistic Regression.
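A minimal sketch of this pipeline, assuming NumPy, SciPy, and scikit-learn are available. The feature set (mean, variance, skewness, kurtosis, lag-1 autocorrelation, histogram entropy, zero-crossing rate) follows the list above, but the exact definitions, such as the 10-bin histogram and counting zero crossings of the mean-centered series, are illustrative choices, and the toy data is hypothetical.

```python
import numpy as np
from scipy.stats import kurtosis, skew
from sklearn.ensemble import RandomForestClassifier


def extract_features(x):
    """Map a univariate series to a fixed-length vector of summary statistics."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    denom = np.sum(centered ** 2)
    # Lag-1 autocorrelation (defined as 0 for a constant series)
    acf1 = np.sum(centered[:-1] * centered[1:]) / denom if denom > 0 else 0.0
    # Shannon entropy (bits) of a 10-bin histogram of the values
    counts, _ = np.histogram(x, bins=10)
    p = counts[counts > 0] / counts.sum()
    entropy = -np.sum(p * np.log2(p))
    # Fraction of consecutive pairs where the mean-centered series changes sign
    zcr = np.mean(np.signbit(centered[:-1]) != np.signbit(centered[1:]))
    return np.array([x.mean(), x.var(), skew(x), kurtosis(x), acf1, entropy, zcr])


# Hypothetical toy data: noisy sine waves (class 0) vs. white noise (class 1)
rng = np.random.default_rng(0)
t = np.linspace(0, 4 * np.pi, 200)
series = [np.sin(t) + 0.1 * rng.standard_normal(t.size) for _ in range(30)]
series += [rng.standard_normal(t.size) for _ in range(30)]
y = np.array([0] * 30 + [1] * 30)

X = np.vstack([extract_features(s) for s in series])  # shape (60, 7)
clf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)
```

Because the features are a plain matrix, any scikit-learn classifier (an SVM or logistic regression, for instance) can be swapped in for the random forest.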

Shapelets

- Shapelets are discriminative subsequences whose presence, or close match, within a time series strongly indicates a particular class.

- Learning shapelets means searching for the subsequences that best separate the classes, for example as measured by information gain.

- Shapelet-based approaches perform well and yield interpretable models, particularly in domains such as activity recognition and health monitoring.
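The two core ingredients of shapelet methods can be sketched as follows: the distance from a series to a shapelet (the best match over all sliding windows) and the information gain obtained by thresholding those distances, which is how a candidate subsequence is scored. This is a simplified illustration: the bump-shaped shapelet is hypothetical, candidate enumeration is omitted, and subsequence z-normalization, which many shapelet methods apply, is skipped.

```python
import numpy as np


def shapelet_distance(series, shapelet):
    """Minimum Euclidean distance between the shapelet and any same-length window."""
    series, shapelet = np.asarray(series, float), np.asarray(shapelet, float)
    windows = np.lib.stride_tricks.sliding_window_view(series, len(shapelet))
    return float(np.min(np.linalg.norm(windows - shapelet, axis=1)))


def entropy(labels):
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def information_gain(distances, labels):
    """Best gain achievable by a single threshold on the shapelet distances."""
    order = np.argsort(distances)
    d, y = np.asarray(distances)[order], np.asarray(labels)[order]
    base, best = entropy(y), 0.0
    for k in range(1, len(y)):
        if d[k - 1] == d[k]:
            continue  # no threshold can separate tied distances
        split = (k * entropy(y[:k]) + (len(y) - k) * entropy(y[k:])) / len(y)
        best = max(best, base - split)
    return best


shapelet = np.array([0.0, 1.0, 2.0, 1.0, 0.0])  # hypothetical "bump" pattern
with_bump = np.zeros(30)
with_bump[10:15] = shapelet
flat = np.zeros(30)

dists = [shapelet_distance(s, shapelet) for s in (with_bump, with_bump, flat, flat)]
print(dists, information_gain(dists, [1, 1, 0, 0]))
```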

Bag-of-Patterns (BoP)

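Bag-of-Patterns methods convert a time series into a symbolic representation, for example with Symbolic Aggregate approXimation (SAX), and treat it as a “document” made up of symbolic words. The histogram of word counts is then used for classification.

One effective dictionary-based classifier of this kind is Bag-of-SFA-Symbols (BOSS), which uses Symbolic Fourier Approximation (SFA) in place of SAX.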
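A minimal Bag-of-Patterns sketch using SAX (not SFA, so this is not BOSS itself): each sliding window is z-normalized, reduced with Piecewise Aggregate Approximation (PAA), and mapped to letters using the breakpoints that split a standard normal distribution into four equally likely regions. The window length, number of segments, and four-letter alphabet are assumed parameters; consecutive duplicate words are dropped (numerosity reduction).

```python
import numpy as np
from collections import Counter

# Breakpoints splitting N(0, 1) into 4 equiprobable regions -> letters a, b, c, d
BREAKPOINTS = np.array([-0.6745, 0.0, 0.6745])
ALPHABET = "abcd"


def sax_word(window, n_segments):
    """z-normalize a window, apply PAA, and map each segment mean to a letter."""
    w = np.asarray(window, dtype=float)
    std = w.std()
    w = (w - w.mean()) / std if std > 1e-8 else np.zeros_like(w)
    paa = np.array([seg.mean() for seg in np.array_split(w, n_segments)])
    return "".join(ALPHABET[i] for i in np.searchsorted(BREAKPOINTS, paa))


def bag_of_patterns(series, window=16, n_segments=4):
    """Histogram of SAX words over all sliding windows of the series."""
    words = [sax_word(series[i:i + window], n_segments)
             for i in range(len(series) - window + 1)]
    # Numerosity reduction: keep a word only if it differs from its predecessor
    reduced = [w for k, w in enumerate(words) if k == 0 or w != words[k - 1]]
    return Counter(reduced)


print(sax_word(np.arange(16), 4))           # a rising ramp
print(bag_of_patterns(np.sin(np.linspace(0, 6 * np.pi, 120))))
```

The resulting word histograms can be compared with a distance between count vectors or fed to any standard classifier.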

Model-Based Methods

These methods represent time series data using parametric or probabilistic models.

Hidden Markov Models (HMMs)

HMMs model a time series as generated by a sequence of hidden states, each of which emits observations according to its own probability distribution.

- They are commonly used for speech recognition and gesture classification.

- A separate HMM is trained for each class.

- A new time series is assigned to the class whose model gives it the highest likelihood.
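The per-class likelihood rule can be sketched with the scaled forward algorithm for HMMs with discrete emissions. The two class models below ("steady" and "alternating") are hypothetical and hand-set; in practice each would be trained on its class's series (for example with the Baum-Welch algorithm), and continuous observations would use, for instance, Gaussian emissions instead.

```python
import numpy as np


def log_likelihood(obs, start, trans, emit):
    """log P(obs | model) for a discrete-emission HMM via the scaled forward algorithm.

    start: (S,) initial state probabilities
    trans: (S, S) with trans[i, j] = P(next state j | current state i)
    emit:  (S, K) with emit[i, k] = P(symbol k | state i)
    """
    alpha = start * emit[:, obs[0]]
    scale = alpha.sum()
    log_p, alpha = np.log(scale), alpha / scale
    for symbol in obs[1:]:
        # Propagate state beliefs one step, then weight by the emission probability
        alpha = (alpha @ trans) * emit[:, symbol]
        scale = alpha.sum()
        log_p, alpha = log_p + np.log(scale), alpha / scale
    return float(log_p)


# Hypothetical hand-set class models over symbols {0, 1}
emit = np.array([[0.9, 0.1],
                 [0.1, 0.9]])  # state 0 mostly emits 0, state 1 mostly emits 1
models = {
    "steady": (np.array([0.5, 0.5]), np.array([[0.95, 0.05], [0.05, 0.95]]), emit),
    "alternating": (np.array([0.5, 0.5]), np.array([[0.05, 0.95], [0.95, 0.05]]), emit),
}


def classify(obs):
    """Pick the class whose HMM assigns the sequence the highest likelihood."""
    return max(models, key=lambda c: log_likelihood(obs, *models[c]))


print(classify([0, 0, 0, 0, 1, 1, 1, 1]))
print(classify([0, 1, 0, 1, 0, 1, 0, 1]))
```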

Autoregressive Models

- AR, ARIMA, and SARIMA models are primarily forecasting tools, but they can be extended to classification by fitting a separate model to each class.

- To classify a new series, compute its likelihood under each class model and choose the class whose model is most likely.
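A minimal sketch of this idea with the simplest member of the family, a pure autoregressive AR(p) model fitted per class by ordinary least squares (standing in for full ARIMA/SARIMA estimation). Classification uses the Gaussian conditional log-likelihood of the residuals. The model order `p = 2` and the simulated AR(1) training data are assumptions for illustration.

```python
import numpy as np


def lagged(x, p):
    """Design matrix [1, x[t-1], ..., x[t-p]] and targets x[t] for t >= p."""
    X = np.column_stack([x[p - k - 1:len(x) - k - 1] for k in range(p)])
    return np.column_stack([np.ones(len(X)), X]), x[p:]


def fit_ar(series_list, p=2):
    """Least-squares AR(p) fit pooled over all training series of one class."""
    Xs, ys = zip(*(lagged(np.asarray(s, float), p) for s in series_list))
    X, y = np.vstack(Xs), np.concatenate(ys)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    sigma2 = np.mean((y - X @ coef) ** 2)  # residual (noise) variance
    return coef, sigma2


def ar_log_likelihood(x, coef, sigma2, p=2):
    """Gaussian conditional log-likelihood of x under a fitted AR(p) model."""
    X, y = lagged(np.asarray(x, float), p)
    resid = y - X @ coef
    return float(-0.5 * np.sum(np.log(2 * np.pi * sigma2) + resid ** 2 / sigma2))


def simulate_ar1(phi, n, rng):
    x = np.zeros(n)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + rng.standard_normal()
    return x


rng = np.random.default_rng(1)
models = {
    "smooth": fit_ar([simulate_ar1(0.9, 200, rng) for _ in range(10)]),
    "rough": fit_ar([simulate_ar1(-0.5, 200, rng) for _ in range(10)]),
}
query = simulate_ar1(0.9, 200, rng)
print(max(models, key=lambda c: ar_log_likelihood(query, *models[c])))
```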

Deep Learning-Based Methods

Deep learning has transformed time series classification by learning hierarchical representations directly from raw data.

Convolutional Neural Networks (CNNs)

- CNNs slide learned filters along the time axis to detect local patterns; stacking layers and pooling over time allows them to capture more global structure.

- Examples include Fully Convolutional Networks (FCN), ResNet, and InceptionTime.

- CNNs are relatively robust to noise and handle multivariate time series naturally by treating each variable as an input channel.
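The basic building block can be sketched as a NumPy forward pass: a multichannel 1-D convolution over time, a ReLU, and global pooling over time. This is only an illustration, not a trainable network; the single filter is hand-set to match a hypothetical bump pattern, whereas FCN, ResNet, and InceptionTime learn many filters by backpropagation and add normalization layers. Global max pooling is used here to make position invariance easy to see (FCN, for instance, uses global average pooling).

```python
import numpy as np


def conv1d(x, kernels, bias):
    """Valid 1-D cross-correlation, as in deep-learning libraries.

    x:       (C_in, T) multivariate series, one channel per variable
    kernels: (C_out, C_in, K)
    bias:    (C_out,)
    returns: (C_out, T - K + 1)
    """
    k = kernels.shape[2]
    windows = np.lib.stride_tricks.sliding_window_view(x, k, axis=1)  # (C_in, T-K+1, K)
    return np.einsum("itk,oik->ot", windows, kernels) + bias[:, None]


def conv_block(x, kernels, bias):
    """Convolution -> ReLU -> global max pooling over time."""
    return np.maximum(conv1d(x, kernels, bias), 0.0).max(axis=1)


bump = np.array([0.0, 1.0, 2.0, 1.0, 0.0])
kernels = np.zeros((1, 2, 5))
kernels[0, 0] = bump  # hypothetical hand-set filter looking at channel 0 only
bias = np.zeros(1)

x_early = np.zeros((2, 50))
x_early[0, 5:10] = bump
x_late = np.zeros((2, 50))
x_late[0, 30:35] = bump

# The pooled response is the same wherever the bump occurs
print(conv_block(x_early, kernels, bias), conv_block(x_late, kernels, bias))
```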

Recurrent Neural Networks (RNNs)

- RNNs are designed for sequential data; gated variants such as LSTMs and GRUs are better at capturing long-term dependencies.

- They are useful for classifying sequences in which long-range patterns are crucial.

- Bidirectional LSTMs (BiLSTMs) process the sequence in both directions, capturing both forward and backward dependencies.
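The recurrent forward computation can be sketched in NumPy. For brevity this sketch swaps in a GRU cell for the LSTM and builds a bidirectional encoder by running a second GRU over the time-reversed series; the final hidden states are concatenated and passed to a linear softmax head. All weights are random and untrained (training by backpropagation through time is omitted), and the sizes are arbitrary assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gru_last_state(x, params):
    """Run a single-layer GRU over x of shape (T, D); return the final state (H,)."""
    Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh = params
    h = np.zeros(Uz.shape[0])
    for x_t in x:
        z = sigmoid(Wz @ x_t + Uz @ h + bz)              # update gate
        r = sigmoid(Wr @ x_t + Ur @ h + br)              # reset gate
        h_tilde = np.tanh(Wh @ x_t + Uh @ (r * h) + bh)  # candidate state
        h = (1 - z) * h + z * h_tilde                    # gated interpolation
    return h


def init_params(d, h, rng):
    """Random (untrained) weights for the update, reset and candidate blocks."""
    return [0.1 * rng.standard_normal(s) for s in [(h, d), (h, h), (h,)] * 3]


def bidirectional_features(x, fwd, bwd):
    """Concatenate final states of a forward and a time-reversed GRU."""
    return np.concatenate([gru_last_state(x, fwd), gru_last_state(x[::-1], bwd)])


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


rng = np.random.default_rng(0)
T, D, H, n_classes = 100, 3, 8, 4
x = rng.standard_normal((T, D))  # hypothetical multivariate series
fwd, bwd = init_params(D, H, rng), init_params(D, H, rng)
features = bidirectional_features(x, fwd, bwd)
W_out = 0.1 * rng.standard_normal((n_classes, 2 * H))
probs = softmax(W_out @ features)  # class probabilities from the untrained model
```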

Transformer Models

- Transformers, originally developed for natural language processing, have shown promise for time series because their self-attention mechanism relates every time step to every other.

- They can model complex relationships without relying on recurrence.

- They are parallelizable and scalable, but typically require large amounts of training data.
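Single-head scaled dot-product self-attention can be sketched in NumPy to show why transformers need no recurrence: every time step attends to every other in one matrix product. Because attention by itself ignores order, standard sinusoidal positional encodings are added first. The random projection matrices, the toy input, and the mean-pooled linear head are assumptions; real models add multiple heads, feed-forward layers, residual connections, normalization, and training.

```python
import numpy as np


def sinusoidal_positions(T, D):
    """Standard sine/cosine positional encodings, shape (T, D)."""
    pos = np.arange(T)[:, None]
    i = np.arange(D)[None, :]
    angles = pos / np.power(10000.0, (2 * (i // 2)) / D)
    return np.where(i % 2 == 0, np.sin(angles), np.cos(angles))


def self_attention(x, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over the time steps of x (T, D)."""
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    scores = q @ k.T / np.sqrt(k.shape[1])                 # (T, T) pairwise scores
    scores = scores - scores.max(axis=1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
T, D, n_classes = 50, 8, 3
series = rng.standard_normal((T, D))       # hypothetical embedded input, one row per step
x = series + sinusoidal_positions(T, D)    # inject order information
Wq, Wk, Wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
out, weights = self_attention(x, Wq, Wk, Wv)
pooled = out.mean(axis=0)                  # average over time for a sequence summary
logits = rng.standard_normal((n_classes, D)) @ pooled  # untrained linear head
```

The (T, T) weight matrix is also why cost grows quadratically with sequence length, which motivates the research on cheaper transformer variants.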

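Hybrid Models

Hybrid architectures combine CNNs with LSTMs or transformers; LSTM-FCN, for example, runs an LSTM branch alongside a fully convolutional network. Other models often cited alongside them include InceptionTime (which is in fact purely convolutional), TS-Transformer, and TST (the Time Series Transformer, not to be confused with the Temporal Fusion Transformer, or TFT, which combines LSTM layers with attention and was designed mainly for forecasting).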

Ensemble Methods

Combining several models frequently improves accuracy and generalization.

HIVE-COTE

HIVE-COTE (Hierarchical Vote Collective of Transformation-based Ensembles) is one of the strongest ensembles for time series classification.

- It combines classifiers built on different representations: shapelet-based, dictionary-based, interval-based, and spectral.

- It merges their predictions using weighted voting.

- It has achieved state-of-the-art results on the UCR benchmark datasets.
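The weighted-voting step can be sketched as accuracy-weighted averaging of class probabilities, loosely in the spirit of the scheme HIVE-COTE uses. The module outputs, their estimated accuracies, and the exponent `alpha = 4` (which sharpens weights toward the more accurate modules) are assumptions for illustration. In this example one accurate module outvotes two weaker ones that would win an unweighted vote.

```python
import numpy as np


def weighted_vote(probas, accuracies, alpha=4.0):
    """Combine per-module class probabilities, weighting each module by accuracy ** alpha.

    probas: list of (n_samples, n_classes) arrays, one per module
    accuracies: estimated accuracy of each module (e.g. from cross-validation)
    """
    weights = np.asarray(accuracies, dtype=float) ** alpha
    combined = sum(w * np.asarray(p, dtype=float) for w, p in zip(weights, probas))
    return combined / combined.sum(axis=1, keepdims=True)


# Hypothetical outputs of three modules for one test series (columns: class 0, class 1)
probas = [
    np.array([[0.8, 0.2]]),  # shapelet-based module, estimated accuracy 0.9
    np.array([[0.2, 0.8]]),  # dictionary-based module, estimated accuracy 0.6
    np.array([[0.4, 0.6]]),  # interval-based module, estimated accuracy 0.6
]
accuracies = [0.9, 0.6, 0.6]

weighted = weighted_vote(probas, accuracies)             # accuracy-weighted
unweighted = weighted_vote(probas, accuracies, alpha=0)  # every module counts equally
print(weighted.argmax(axis=1), unweighted.argmax(axis=1))
```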

Random Forests and Gradient Boosting

Tree-based ensembles such as random forests and gradient-boosted trees (for example XGBoost and LightGBM) can achieve strong performance when trained on handcrafted features or symbolic representations.

Evaluation Metrics

The following common classification metrics are applied:

- Accuracy

- Precision, recall, and F1-score

- ROC-AUC (for binary problems)

- Confusion matrix (for multiclass evaluation)

For imbalanced datasets, accuracy can be misleading, and F1-score and ROC-AUC are more informative.
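A small scikit-learn sketch of why accuracy misleads on imbalanced data. The labels and scores are hypothetical: a model that always predicts "normal" reaches 90% accuracy on a 9-to-1 test set yet has zero recall and F1 for the anomaly class, while ROC-AUC, computed from the continuous scores, shows that the scores still rank the anomaly above 8 of the 9 normal series.

```python
from sklearn.metrics import (accuracy_score, confusion_matrix, f1_score,
                             precision_score, recall_score, roc_auc_score)

# Hypothetical imbalanced test set: 9 normal series (0) and 1 anomalous series (1)
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 0, 1]
y_score = [0.1, 0.2, 0.1, 0.3, 0.2, 0.1, 0.4, 0.2, 0.1, 0.35]  # predicted P(class 1)
y_pred = [int(s >= 0.5) for s in y_score]  # thresholding at 0.5 predicts all zeros

print(accuracy_score(y_true, y_pred))                     # 0.9
print(precision_score(y_true, y_pred, zero_division=0))   # no positive predictions
print(recall_score(y_true, y_pred))                       # the anomaly is missed
print(f1_score(y_true, y_pred, zero_division=0))          # 0.0
print(confusion_matrix(y_true, y_pred))
print(roc_auc_score(y_true, y_score))
```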

Popular Datasets and Benchmarks

The following are typical benchmark datasets for TSC:

- The UCR Time Series Archive contains more than 100 univariate datasets from various fields.

- The UEA Multivariate Time Series Classification Archive covers multivariate tasks.

These archives enable consistent, comparable evaluation of algorithms.

Tools and Libraries

Well-known TSC libraries include:

- tslearn (Python): Implements DTW, k-NN, and shapelet-based methods.

- sktime (Python): Provides a unified interface to numerous TSC models and benchmarking tools.

- pyts (Python): Implements Bag-of-Patterns and other symbolic methods.

- tsai (Python): A PyTorch-based deep learning library for TSC.

- ROCKET and MiniROCKET: Extremely fast and scalable classifiers based on random convolutional kernel transforms.
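The ROCKET idea can be sketched in NumPy: generate random dilated convolutional kernels, summarize each kernel's output by its proportion of positive values (PPV) and its maximum, and train a linear ridge classifier on those features. This is a simplified illustration, not the reference implementation: it uses 200 kernels instead of the default 10,000, skips input normalization, and the toy task and ridge `alphas` grid are assumptions. MiniROCKET differs further, using a largely deterministic kernel set and PPV features only.

```python
import numpy as np
from sklearn.linear_model import RidgeClassifierCV


def generate_kernels(series_length, n_kernels, rng):
    """Random kernels in the style of ROCKET: (weights, bias, dilation, padding)."""
    kernels = []
    for _ in range(n_kernels):
        length = int(rng.choice([7, 9, 11]))
        weights = rng.standard_normal(length)
        weights -= weights.mean()  # mean-centred weights
        bias = rng.uniform(-1.0, 1.0)
        # Exponential dilation scale, capped so the dilated kernel fits the series
        max_exponent = np.log2((series_length - 1) / (length - 1))
        dilation = int(2 ** rng.uniform(0, max_exponent))
        padding = ((length - 1) * dilation) // 2 if rng.integers(2) else 0
        kernels.append((weights, bias, dilation, padding))
    return kernels


def apply_kernel(x, weights, bias, dilation, padding):
    """Dilated convolution of one series; returns (PPV, max) of the output."""
    x = np.pad(np.asarray(x, dtype=float), padding)
    n_out = len(x) - (len(weights) - 1) * dilation
    out = np.full(n_out, bias)
    for j, w in enumerate(weights):
        out += w * x[j * dilation: j * dilation + n_out]
    return np.mean(out > 0), out.max()


def rocket_transform(X, kernels):
    """Two features per kernel: shape (n_series, 2 * n_kernels)."""
    return np.array([[f for k in kernels for f in apply_kernel(x, *k)] for x in X])


# Hypothetical toy task: 3 vs. 8 cycles per series, random phase, additive noise
rng = np.random.default_rng(0)
T = 150
t = np.linspace(0, 1, T)


def make_series(cycles, n):
    return [np.sin(2 * np.pi * cycles * t + rng.uniform(0, 2 * np.pi))
            + 0.3 * rng.standard_normal(T) for _ in range(n)]


X_train, y_train = make_series(3, 20) + make_series(8, 20), [0] * 20 + [1] * 20
X_test, y_test = make_series(3, 10) + make_series(8, 10), [0] * 10 + [1] * 10

kernels = generate_kernels(T, n_kernels=200, rng=rng)
clf = RidgeClassifierCV(alphas=np.logspace(-3, 3, 10))
clf.fit(rocket_transform(X_train, kernels), y_train)
print(clf.score(rocket_transform(X_test, kernels), y_test))
```

The transform is embarrassingly parallel and needs no training beyond the linear classifier, which is the source of ROCKET's speed.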

Applications of Time Series Classification

Healthcare: Classifying ECG and EEG signals to detect abnormalities.

Finance: Identifying transaction types or predicting the direction of market trends.

Manufacturing: Predictive maintenance based on sensor time series.

Environmental Monitoring: Classifying weather patterns.

Human Activity Recognition (HAR): Classifying physical movements from wearable sensor data.

Future Directions and Research Trends

Self-supervised learning: Leveraging unlabeled data to improve model performance.

Interpretable TSC: Shapelets and other interpretable models are essential in sensitive fields such as medicine.

Few-shot learning: Adapting models to work with only a small amount of labeled data.

Scalable deep learning models: Research aims to reduce the training cost of transformer-based models.

Domain adaptation and transfer learning: Transferring models trained on one domain to another with little retraining.

Conclusion

Time series classification is an important field of machine learning with applications in numerous sectors. The discipline has changed substantially, from traditional distance-based methods to state-of-the-art deep learning models. The choice of algorithm depends on the type of data, the need for interpretability, the available computing power, and the required level of accuracy.

With continued advances in deep learning and ensemble techniques, future time series classifiers should become more reliable, interpretable, and efficient, opening the door to wider application across domains.