Contextual Embeddings in NLP

Contextual embeddings are advanced vector representations of words, designed specifically to capture a word’s meaning in the context in which it appears.

This sets them apart from earlier static word embeddings (such as Word2Vec or GloVe), which assign a single fixed vector to each word type in the vocabulary, regardless of how the word is used in a sentence.

How Do Contextual Embeddings Work?

Contextual embeddings improve word representation by taking context into account. Unlike static word embeddings, which give each word a fixed vector, contextual embeddings generate vectors dynamically from the surrounding words. This flexibility improves semantic understanding and lets NLP models capture nuanced meanings.

Key features include:

- Dynamic Representation: Each word’s vector varies with its context, giving a more faithful representation of its meaning.

- Improved Semantic Understanding: By analysing context, models can better distinguish the meanings of homonyms and polysemous words.

- Context-Aware Models: Models such as BERT and ELMo generate these embeddings and use them for tasks like question answering and sentiment analysis.

- Better Results: Contextual embeddings generally outperform static embeddings on a wide range of language tasks, leading to higher accuracy.

- Techniques for Generation: Most contextual embeddings are produced with Transformer architectures and attention mechanisms, although earlier models such as ELMo use bidirectional LSTMs.

What Are the Benefits of Contextual Embeddings?

By improving semantic understanding, contextual embeddings are transforming natural language processing (NLP). Context-aware embeddings offer the following main advantages:

- Better Semantic Interpretation: Because they capture a word’s meaning in its context, they yield more accurate interpretations.

- Dynamic Representations: Unlike static embeddings, contextual embeddings adapt to each context and so capture more nuanced meanings.

- Improved Task Performance: They substantially improve performance across a variety of NLP tasks, such as question answering, sentiment analysis and text classification.

- Reduced Ambiguity: They reduce ambiguity in word meanings by taking surrounding words into account.

- State-of-the-Art Techniques: They are produced with sophisticated methods such as Transformers and attention mechanisms.

- Better Generalization: They help models generalize across different tasks and datasets.

Motivation and Need

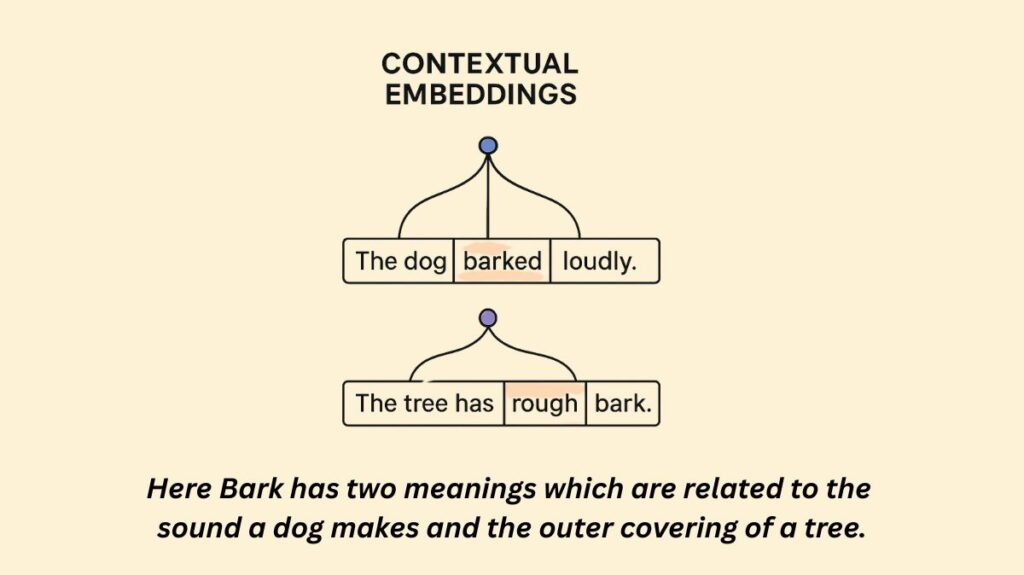

- Conventional static embeddings collapse a word’s multiple meanings into a single vector. For instance, the word “palm” would get the same vector whether it refers to part of the hand or to a tree.

- However, a word’s meaning often depends heavily on its context. A single context-independent vector cannot account for polysemy (a word having more than one meaning) or for the subtle shifts in meaning that arise from nearby words.

- Contextual embeddings overcome this limitation by giving the same word a different vector each time it occurs in a different context. This lets the model infer the word’s meaning from its surrounding context.
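To make the contrast concrete, here is a minimal toy sketch in Python with NumPy. The vocabulary, the random 4-dimensional vectors and the `toy_contextual_embed` function are hypothetical; blending a word’s vector with the average of its neighbours is only a stand-in for context mixing, not how BERT or ELMo actually compute embeddings.

```python
import numpy as np

# Hypothetical toy vocabulary with random 4-dimensional "static" vectors.
rng = np.random.default_rng(0)
vocab = ["he", "hurt", "his", "palm", "climbed", "a", "tall", "tree"]
static = {w: rng.normal(size=4) for w in vocab}

def static_embed(tokens):
    # Static lookup: one fixed vector per word type; context is ignored.
    return [static[t] for t in tokens]

def toy_contextual_embed(tokens, window=2, mix=0.5):
    # Toy contextualization (NOT how BERT or ELMo work): blend each word's
    # static vector with the mean of its neighbours within `window` positions.
    out = []
    for i, t in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        neighbours = [static[tokens[j]] for j in range(lo, hi) if j != i]
        ctx = np.mean(neighbours, axis=0) if neighbours else np.zeros(len(static[t]))
        out.append((1 - mix) * static[t] + mix * ctx)
    return out

s1 = "he hurt his palm".split()          # "palm" at index 3 (body part)
s2 = "he climbed a tall palm tree".split()  # "palm" at index 4 (tree)

# Static: "palm" gets the identical vector in both sentences.
print(np.allclose(static_embed(s1)[3], static_embed(s2)[4]))  # True
# Toy contextual: the vectors differ because the neighbouring words differ.
print(np.allclose(toy_contextual_embed(s1)[3], toy_contextual_embed(s2)[4]))  # False
```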

Underlying Mechanism (Transformer Networks)

- Contextual embeddings are commonly learned with Transformer networks and other modern neural network architectures.

- Self-attention is the core mechanism of the Transformer. With self-attention, the network computes the representation of a word as a weighted average of the representations (embeddings) of the words in its input sequence. The weights, known as attention weights, are computed from learned parameters and specify how much “attention” the word pays to each of the other words when building its own representation.

- This procedure combines the original (often context-independent) token embeddings according to the context, producing updated, contextualized representations. For example, in “He swung the bat at the ball,” the words “swung” and “ball” influence the contextualized embedding of “bat,” pulling its meaning toward the sports-equipment sense rather than the flying-mammal sense.
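The weighted-average computation described above can be sketched as single-head scaled dot-product self-attention, the formulation used in the original Transformer. This is a minimal NumPy sketch under simplifying assumptions: the projection matrices `W_q`, `W_k` and `W_v` are random stand-ins for learned parameters, the average is taken over value vectors (linear projections of the input embeddings), and a real Transformer layer adds multiple heads, residual connections, layer normalization and a feed-forward sublayer.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (n_tokens, d_model) input embeddings, one row per token.
    W_q, W_k, W_v: (d_model, d_k) projections (learned in a real model).
    Returns contextualized outputs (n_tokens, d_k) and attention weights (n_tokens, n_tokens).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # similarity of each query to every key
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights         # each output row is a weighted average of value vectors

# Random stand-ins for input embeddings and learned projection matrices.
rng = np.random.default_rng(42)
n_tokens, d_model, d_k = 5, 8, 4
X = rng.normal(size=(n_tokens, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)

# Perturb only the last token: the first token's output changes as well,
# because every output row mixes information from the whole sequence.
X2 = X.copy()
X2[-1] += 1.0
out2, _ = self_attention(X2, W_q, W_k, W_v)
print(np.allclose(out[0], out2[0]))  # False
```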

Handling Position

- On its own, a weighted average is order-independent, like a bag-of-words model. Transformers therefore add position information so they can capture word order and proximity.

- This is usually done by adding positional embedding vectors to the input word embedding vectors. Positional embeddings can be fixed functions (such as the sinusoidal encodings of the original Transformer) or learned parameters. Because relative position is thought to be particularly important for language processing, recent research has also focused on encoding relative position information directly inside the self-attention mechanism.
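Fixed positional embeddings can be sketched with the sinusoidal scheme. This sketch assumes the formulation from the original Transformer paper, PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)), and uses random vectors as hypothetical word embeddings; a learned or relative-position scheme would replace `sinusoidal_positions` entirely.

```python
import numpy as np

def sinusoidal_positions(n_positions, d_model):
    # Fixed (non-learned) encodings from the original Transformer:
    # PE[pos, 2i] = sin(pos / 10000^(2i/d_model)), PE[pos, 2i+1] = cos(same angle).
    assert d_model % 2 == 0, "d_model is assumed to be even"
    pos = np.arange(n_positions)[:, None]  # (n_positions, 1)
    i = np.arange(d_model // 2)[None, :]   # (1, d_model / 2)
    angles = pos / np.power(10000.0, 2 * i / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)           # even dimensions
    pe[:, 1::2] = np.cos(angles)           # odd dimensions
    return pe

# Hypothetical word embeddings for a 6-token sentence, dimension 8.
rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(6, 8))
# Adding the encodings makes the same word at different positions look different.
inputs = token_embeddings + sinusoidal_positions(6, 8)
```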

Output and Training

- After processing an input sequence, the Transformer encoder produces a sequence of contextualized embeddings, one for each input token. Each output vector represents that token’s meaning in the context of the entire input sequence.

- These embeddings are frequently generated by pre-trained Transformer models such as BERT. Such models are trained on enormous amounts of unlabelled text using tasks like Masked Language Modelling (predicting masked words from their context) and Next Sentence Prediction (determining whether one sentence follows another). Training them to learn contextualized representations is computationally expensive.
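Masked Language Modelling requires corrupting the input before training. The following is a simplified sketch in plain Python, assuming the scheme described in the original BERT paper: about 15% of tokens are chosen as prediction targets, and of those, 80% become `[MASK]`, 10% become a random token and 10% stay unchanged. Here each token is selected independently, which is one common approximation; real implementations work on subword token IDs and differ in exactly how positions are sampled. `mask_tokens` is a hypothetical helper name.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Corrupt a token sequence for Masked Language Modelling (simplified).

    Returns the corrupted sequence and the targets: the original token at
    each selected position, or None where no prediction is required.
    """
    rnd = random.Random(seed)
    corrupted, targets = [], []
    for tok in tokens:
        if rnd.random() < mask_prob:
            targets.append(tok)  # the model must recover this token
            r = rnd.random()
            if r < 0.8:
                corrupted.append(MASK)               # 80%: mask token
            elif r < 0.9:
                corrupted.append(rnd.choice(vocab))  # 10%: random token
            else:
                corrupted.append(tok)                # 10%: unchanged
        else:
            targets.append(None)  # excluded from the MLM loss
            corrupted.append(tok)
    return corrupted, targets

sentence = "the cat sat on the mat".split()
corrupted, targets = mask_tokens(sentence, vocab=["the", "cat", "sat", "on", "mat", "dog"], seed=1)
print(corrupted)
print(targets)
```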

Applications

- Contextual embeddings benefit many downstream Natural Language Processing tasks.

- Pre-trained Transformer models that produce contextual embeddings can be fine-tuned for specific applications by adding lightweight classifier layers on top of their contextualized outputs.

- Contextual embeddings have performed very well on the following tasks:

- Word Sense Disambiguation (WSD): They are central to modern WSD algorithms because they let models distinguish between a word’s different context-specific meanings. Tasks such as Word-in-Context (WiC) explicitly evaluate these context-sensitive meaning representations.

- Information Extraction: In tasks such as relation extraction, models identify the relationship between entities by focusing on their surrounding context.

- Question Answering (QA): Extractive QA systems use Transformer-based contextual embeddings to score candidate answer spans in relevant passages.

- Dependency Parsing: Parsing accuracy can be improved by combining contextual embeddings from Transformers with other architectures (such as Recurrent Neural Networks) and features (such as POS tags).

- Coreference Resolution: Modern neural approaches use contextual embeddings to link mentions that refer to the same entity.

- Text Classification and Sentiment Analysis: Compared with static embeddings, contextual embeddings as inputs let models for these tasks capture more nuanced meanings.

- Improving Other Embeddings: Contextual embeddings can also be used to represent word senses themselves (contextual sense embeddings).
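Placing a lightweight classifier on top of contextualized outputs can be sketched as a linear softmax head. This minimal NumPy sketch rests on stated assumptions: the encoder is treated as frozen (feature extraction rather than full fine-tuning, which would also update the Transformer’s weights), each sentence is represented by one pooled vector such as BERT’s [CLS] output, and synthetic Gaussian clusters stand in for real embeddings and sentiment labels. `train_linear_head` and `predict` are hypothetical helper names.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_linear_head(features, labels, n_classes, lr=0.1, epochs=200, seed=0):
    """Train a softmax classifier on frozen, pooled contextual embeddings.

    features: (n_examples, d) sentence vectors; labels: (n_examples,) class ids.
    """
    rng = np.random.default_rng(seed)
    n, d = features.shape
    W = rng.normal(scale=0.01, size=(d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        probs = softmax(features @ W + b)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(features, W, b):
    return np.argmax(features @ W + b, axis=1)

# Synthetic stand-ins for pooled embeddings of positive and negative sentences.
rng = np.random.default_rng(1)
pos = rng.normal(loc=1.0, size=(50, 16))
neg = rng.normal(loc=-1.0, size=(50, 16))
X = np.vstack([pos, neg])
y = np.array([1] * 50 + [0] * 50)

W, b = train_linear_head(X, y, n_classes=2)
accuracy = (predict(X, W, b) == y).mean()
print(f"training accuracy: {accuracy:.2f}")
```

Keeping the head linear mirrors the “lightweight” layer described above: nearly all of the representational work is done by the contextual embeddings, and the head only learns a decision boundary over them.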

In conclusion, contextual embeddings, which are learned through mechanisms such as self-attention in Transformer networks, represent the meaning of a word occurrence (token) in its particular textual context. In doing so, they overcome the limitations of static embeddings, which represent word types, and reflect the fluid, context-dependent nature of word meaning.