Indexes in MongoDB

Efficient data retrieval is critical to MongoDB application performance, especially as data volumes grow. Basic query optimisation focuses on using indexes strategically and on analysing how MongoDB processes queries with the explain() method.

The examples below use a small sample users collection:

db.users.insertMany([
  { "name": "Alice", "email": "alice@example.com", "age": 30, "city": "New York" },
  { "name": "Bob", "email": "bob@example.com", "age": 25, "city": "London" },
  { "name": "Charlie", "email": "charlie@example.com", "age": 35, "city": "New York" },
  { "name": "David", "email": "david@example.com", "age": 28, "city": "Paris" },
  { "name": "Eve", "email": "eve@example.com", "age": 30, "city": "London" }
]);

MongoDB’s createIndexes() Method

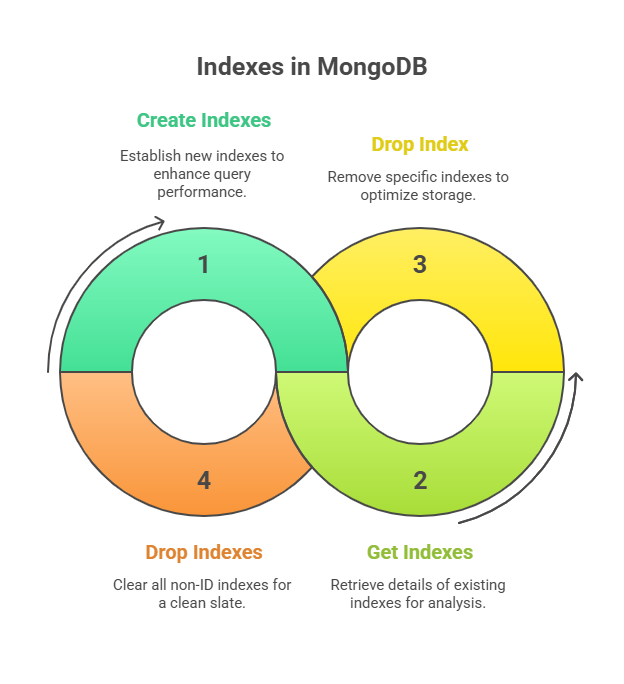

The createIndexes() method creates one or more indexes on a collection. Indexes make queries and sort operations more efficient by reducing the amount of data MongoDB must scan.

Syntax:

db.collection.createIndexes( [ <keyPattern1>, <keyPattern2>, ... ], <options> )

Code Example:

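Create a single-field ascending index on the email field (for faster lookups by email):

db.users.createIndexes([
  { "email": 1 }
]);

Create a unique index on the email field (ensuring no two users can have the same email). If the non-unique email_1 index from the previous example already exists, drop it first, because MongoDB rejects a new index that has the same name as an existing index but different options: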

db.users.createIndexes([
  { "email": 1 }
], { unique: true });

Create a compound index on city (ascending) and age (descending) for queries filtering by city and sorting by age:

db.users.createIndexes([
  { "city": 1, "age": -1 }
]);

Create an index with a custom name:

db.users.createIndexes([
  { "age": 1 }
], { name: "age_idx" });

MongoDB’s getIndexes() Method

The getIndexes() method returns an array of documents describing the indexes on a collection. Each document in the array gives the details of one index, including its key pattern, name, and options.

Syntax:

db.collection.getIndexes()

Code Example:

Get all indexes on the users collection:

db.users.getIndexes();

MongoDB’s dropIndex() Method

The dropIndex() method removes a single index from a collection. You can identify the index to drop by either its name or its key pattern.
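When an index is created without a name option, MongoDB derives its name from the key pattern by joining each field and its direction (or index type) with underscores, which is where names such as email_1 come from. The helper below is a small sketch of that convention in plain JavaScript (it can also be pasted into mongosh); the function name defaultIndexName is hypothetical, and it assumes simple field names and no custom name option.

```javascript
// Hypothetical helper: reproduces MongoDB's default index naming convention,
// "<field>_<direction or type>" for each key, joined with "_".
function defaultIndexName(keyPattern) {
  return Object.entries(keyPattern)
    .map(([field, direction]) => `${field}_${direction}`)
    .join("_");
}

// Key patterns used elsewhere in this article
console.log(defaultIndexName({ email: 1 }));          // email_1
console.log(defaultIndexName({ city: 1, age: -1 }));  // city_1_age_-1
```

Under that assumption, db.users.dropIndex("city_1_age_-1") and db.users.dropIndex({ "city": 1, "age": -1 }) refer to the same index. An index created with a custom name, such as age_idx, must be dropped by that name or by its key pattern.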

Syntax:

db.collection.dropIndex(index)

Code Example:

Drop an index by its name (recommended; get the name from getIndexes()):

db.users.dropIndex("email_1");

Drop an index by its key pattern:

db.users.dropIndex({ "city": 1, "age": -1 });

MongoDB’s dropIndexes() Method

The dropIndexes() method removes all indexes from a collection except the default _id index.

Syntax:

db.collection.dropIndexes()

Code Example:

Drop all non-_id indexes from the users collection:

db.users.dropIndexes();

The Importance of Indexes for Improving Read Performance

Fundamentally, a MongoDB index is a special data structure that stores a small, ordered portion of a collection’s data. Think of it like the index of a book: rather than reading every page (document) to find information, you consult the index to locate the relevant pages quickly.

Without a suitable index, MongoDB must perform a collection scan, commonly known as a “table scan” in relational databases: it examines every document in the collection to find those that match the query. For large collections this is very inefficient, consuming significant processing time and resources. It is like searching for a needle in an ever-growing haystack.

Indexes improve read performance by limiting the number of documents MongoDB must examine. They support efficient equality matches and range queries, and they can return sorted results directly from the index order.

Indexes improve read speed, but their effect on write operations must be considered. Whenever an insert, update, or delete affects an indexed field, MongoDB must update both the document and every associated index. Because each index adds this overhead, indexes pay off most on collections with a high read-to-write ratio. For optimal performance, frequently used indexes should fit entirely in RAM so that MongoDB avoids slower disk reads.
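To make this read/write trade-off concrete, here is a toy model in plain JavaScript. It is not how MongoDB stores indexes (MongoDB uses B-tree structures managed by its storage engine). It simply keeps a sorted array of [key, position] pairs, counts how many documents a linear scan examines compared with how many comparisons a binary search over the index needs, and shows that every insert must also update the index. ToyCollection and its methods are hypothetical names.

```javascript
// Toy model only: a sorted array stands in for MongoDB's B-tree index.
class ToyCollection {
  constructor(field) {
    this.field = field; // the single indexed field
    this.docs = [];     // documents in insertion order
    this.index = [];    // [key, docPosition] pairs, kept sorted by key
  }

  // Every insert also has to update the index: this is the write overhead.
  insert(doc) {
    this.docs.push(doc);
    const key = doc[this.field];
    this.index.splice(this.lowerBound(key), 0, [key, this.docs.length - 1]);
  }

  // Binary search: first index position whose key is >= value.
  lowerBound(value, counter = { comparisons: 0 }) {
    let lo = 0;
    let hi = this.index.length;
    while (lo < hi) {
      const mid = (lo + hi) >> 1;
      counter.comparisons++;
      if (this.index[mid][0] < value) lo = mid + 1;
      else hi = mid;
    }
    return lo;
  }

  // Like a COLLSCAN: every document is examined.
  findByScan(value) {
    const results = this.docs.filter((d) => d[this.field] === value);
    return { results, docsExamined: this.docs.length };
  }

  // Like IXSCAN + FETCH: search the index, then fetch only the matching documents.
  findByIndex(value) {
    const counter = { comparisons: 0 };
    const results = [];
    for (let i = this.lowerBound(value, counter); i < this.index.length && this.index[i][0] === value; i++) {
      results.push(this.docs[this.index[i][1]]);
    }
    return { results, keyComparisons: counter.comparisons, docsExamined: results.length };
  }
}

const demo = new ToyCollection("email");
for (let i = 0; i < 10000; i++) demo.insert({ email: `user${i}@example.com` });
const viaScan = demo.findByScan("user4242@example.com");
const viaIndex = demo.findByIndex("user4242@example.com");
console.log(viaScan.docsExamined); // 10000
console.log(viaIndex.keyComparisons, viaIndex.docsExamined);
```

The scan always touches all 10,000 documents, while the index lookup needs only a handful of comparisons (roughly log2 of the collection size) and fetches just the matching document. The price is paid on insert, where every new document also has to be placed into the sorted index.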

Creating Single-Field Indexes

In MongoDB, you create an index with the db.collection.createIndex() method. Since MongoDB 3.0, createIndex() has replaced the older ensureIndex() method, which was kept as a deprecated alias. createIndex() creates an index only if no index with the same specification already exists. If the specified collection does not exist, MongoDB creates it when building the index.

An index needs to be created only once. After that, MongoDB keeps it up to date automatically as the data changes.

Basic Syntax: The basic syntax for building a single-field index is:

db.COLLECTION_NAME.createIndex({KEY: 1})

Here KEY is the name of the field to index, and 1 requests ascending order (use -1 for descending).

Here’s an illustration: suppose a collection called mycol contains documents with a title field. To index this field for efficient sorting and searching:

// Create an ascending index on the 'title' field
db.mycol.createIndex({"title": 1})

This command creates an ascending index on the title values of the documents in mycol.

The _id Index: By default, every MongoDB collection has a unique index on the _id field. This index acts as the primary key because it prevents you from inserting two documents with the same _id value, and it cannot be dropped. If an application does not supply an _id, the driver or the mongod server generates one with an ObjectId value.

Ascending (1) and Descending (-1) Order for Indexes

When creating an index, you specify ascending (1) or descending (-1) order for each indexed field.

For single-field indexes, the sort order does not matter, because MongoDB can traverse the index in either direction. An index built with { field: 1 } can serve both ascending (.sort({ field: 1 })) and descending (.sort({ field: -1 })) sorts on that field.

Here’s an example of creating a single-field index and how it supports sorting:

// Create an ascending index on the 'timestamp' field
db.posts.createIndex({ timestamp: 1 })

// This index can optimize both ascending and descending sorts:
db.posts.find().sort({ timestamp: -1 })
db.posts.find().sort({ timestamp: 1 })

For compound indexes (indexes on several fields), however, the sort order chosen when the index is built does matter. Whether a compound index can support a sort depends on the order and direction of its fields: the sort's direction must match the index for every key, or be the exact opposite for every key. For example, an index on { username: 1, date: -1 } can support sorts on { username: 1, date: -1 } and { username: -1, date: 1 }, but not on { username: 1, date: 1 }.
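The direction rule can be written down as a small check. The sketch below is a simplified model that assumes the sort keys must be a prefix of the index keys, in the same order; it ignores cases where equality conditions on leading index fields let MongoDB sort on a non-prefix subset. The function name canIndexSupportSort is hypothetical.

```javascript
// Simplified model of when an index can provide a sort order:
// the sort keys must be a prefix of the index keys, and the directions
// must either all match the index or all be reversed.
function canIndexSupportSort(indexKeys, sortKeys) {
  const idx = Object.entries(indexKeys);
  const sort = Object.entries(sortKeys);
  if (sort.length === 0 || sort.length > idx.length) return false;

  let allSame = true;
  let allReversed = true;
  for (let i = 0; i < sort.length; i++) {
    const [sortField, sortDir] = sort[i];
    const [indexField, indexDir] = idx[i];
    if (sortField !== indexField) return false; // not a prefix of the index
    if (sortDir !== indexDir) allSame = false;
    if (sortDir !== -indexDir) allReversed = false;
  }
  return allSame || allReversed;
}

const single = { timestamp: 1 };
console.log(canIndexSupportSort(single, { timestamp: 1 }));  // true
console.log(canIndexSupportSort(single, { timestamp: -1 })); // true

const compound = { username: 1, date: -1 };
console.log(canIndexSupportSort(compound, { username: 1, date: -1 })); // true
console.log(canIndexSupportSort(compound, { username: -1, date: 1 })); // true
console.log(canIndexSupportSort(compound, { username: 1, date: 1 }));  // false
```

A single-field index always passes, because a one-key sort is trivially either "all the same" or "all reversed", which is why direction only matters once a second key is involved.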

If a query does not call sort(), MongoDB does not guarantee the order of the returned documents, so specify a sort whenever order matters.

Optimising MongoDB applications requires understanding the basics of index creation and how indexes affect query and sort performance.

Compound Indexes

A compound index is an index built on multiple fields of a collection. It is especially useful when you need to filter on several fields at once or sort on multiple criteria. Because the index stores the values of these fields in sorted order, MongoDB can retrieve matching data much faster.

Field order matters in a compound index. An index on {name: 1, age: -1} sorts entries by name (ascending) and then, within each name, by age (descending), and this order determines which sort operations the index can support. MongoDB can also use a compound index for queries on a prefix of its fields: the {name: 1, age: -1} index supports queries on name alone or on name and age together, but not efficient queries on age alone.
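The prefix rule can be sketched the same way. This hypothetical helper returns the leading index fields that also appear in a query's filter. In this simplified model, a non-empty prefix means the index can narrow the search; MongoDB's real query planner weighs more factors than this.

```javascript
// Simplified model of index prefixes: returns the leading fields of the
// index that also appear in the query filter.
function usablePrefix(indexKeys, queryFields) {
  const fields = new Set(queryFields);
  const prefix = [];
  for (const field of Object.keys(indexKeys)) {
    if (!fields.has(field)) break; // the prefix ends at the first missing field
    prefix.push(field);
  }
  return prefix;
}

const nameAge = { name: 1, age: -1 };
console.log(usablePrefix(nameAge, ["name"]));        // [ 'name' ]
console.log(usablePrefix(nameAge, ["name", "age"])); // [ 'name', 'age' ]
console.log(usablePrefix(nameAge, ["age"]));         // []
```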

Here’s an example of creating a compound index:

db.people.createIndex({name: 1, age: -1})

This example creates an index that is ascending on name and descending on age.
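To picture how entries in a { name: 1, age: -1 } index are ordered, the sketch below sorts sample documents with a comparator built from a key pattern: ascending by name, then descending by age within each name. It models only simple string and number values, not MongoDB's full BSON comparison order, and compareByKeyPattern is a hypothetical name.

```javascript
// Builds a comparator that orders documents the way a compound index with
// the given key pattern would (simple strings and numbers only).
function compareByKeyPattern(keyPattern) {
  const keys = Object.entries(keyPattern);
  return (a, b) => {
    for (const [field, direction] of keys) {
      if (a[field] < b[field]) return -direction;
      if (a[field] > b[field]) return direction;
    }
    return 0; // equal on every indexed field
  };
}

const people = [
  { name: "Bob", age: 25 },
  { name: "Alice", age: 30 },
  { name: "Bob", age: 41 },
  { name: "Alice", age: 22 },
];

people.sort(compareByKeyPattern({ name: 1, age: -1 }));
console.log(people.map((p) => `${p.name}:${p.age}`).join(", "));
// Alice:30, Alice:22, Bob:41, Bob:25
```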

Unique Indexes

A unique index ensures that each value of the indexed field (or, for a compound unique index, each combination of values) appears in at most one document in the collection. MongoDB automatically creates a unique index on _id for every collection.

Importantly, a unique index stores null for any document that lacks the indexed field. As a result, only one document can omit the field: inserting a second such document fails with a duplicate key error, because the null value would be duplicated.

To create a unique index:

db.collection.createIndex({user_id: 1}, {unique: true})

This command makes the user_id field unique.

Sparse Indexes

Sparse indexes contain entries only for documents that have the indexed field; documents without the field are left out. For optional fields, or fields present in only a small subset of your documents, this can substantially reduce index size and save space.

A non-sparse (dense) index, by contrast, has an entry for every document in the collection and stores a null value for documents that lack the field, so sparse indexes are more compact.

Sparse indexes are often combined with unique indexes to solve the null-value problem described above: a unique, sparse index enforces uniqueness only among documents that actually contain the field.

Here’s how you’d create a sparse index:

db.scores.createIndex({nickname: 1}, {unique: true, sparse: true})

This example builds a unique index on nickname that indexes only documents containing the nickname field, so any number of documents without a nickname can be inserted. Text and geospatial indexes are always sparse.
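The interaction between unique, sparse, and missing fields can be modelled in a few lines. This is a toy in plain JavaScript, not MongoDB code: a non-sparse unique index records a missing field as null, so a second document without the field collides, while a sparse unique index skips such documents entirely. A field explicitly set to null is still indexed in both cases. ToyUniqueIndex is a hypothetical name, and the Set-based check only handles simple scalar values.

```javascript
// Toy model of a single-field unique index, optionally sparse.
class ToyUniqueIndex {
  constructor(field, { sparse = false } = {}) {
    this.field = field;
    this.sparse = sparse;
    this.keys = new Set();
  }

  // Returns true if the document is accepted, false on a duplicate key.
  insert(doc) {
    const hasField = Object.prototype.hasOwnProperty.call(doc, this.field);
    if (!hasField && this.sparse) return true; // sparse: document is not indexed
    const key = hasField ? doc[this.field] : null; // missing field indexed as null
    if (this.keys.has(key)) return false; // duplicate key error
    this.keys.add(key);
    return true;
  }
}

const dense = new ToyUniqueIndex("nickname");
console.log(dense.insert({ name: "Ann" })); // true  (indexed as null)
console.log(dense.insert({ name: "Ben" })); // false (second null)

const sparse = new ToyUniqueIndex("nickname", { sparse: true });
console.log(sparse.insert({ name: "Ann" }));                 // true
console.log(sparse.insert({ name: "Ben" }));                 // true
console.log(sparse.insert({ name: "Cy", nickname: "cy" }));  // true
console.log(sparse.insert({ name: "Dee", nickname: "cy" })); // false
```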

Indexes let MongoDB find matching documents without scanning the entire collection, which improves read performance. However, keeping indexes up to date adds overhead to write operations (inserts, updates, and deletes).

Analysing Queries

The explain() method is an essential MongoDB diagnostic tool for understanding how the database processes a query. It reports the query execution plan in detail, including whether indexes were used and, at higher verbosity levels, performance metrics.

To use explain(), append it to a find() call, for example db.COLLECTION_NAME.find({query_criteria}).explain(). An optional verbosity argument controls how much information is returned: "queryPlanner" (the default), "executionStats", or "allPlansExecution". For example, explain("executionStats") includes detailed execution statistics.

To assess query performance, look for the following important fields in the explain() output:

- cursor / stage: Indicates how MongoDB accessed the data (cursor in older versions, stage in newer ones).

  - BasicCursor (older versions) or COLLSCAN (newer versions) indicates a full collection scan.

  - BtreeCursor or IXSCAN indicates that an index was used. The name of the index is usually shown as well.

- nscannedObjects / totalDocsExamined: The number of documents MongoDB examined.

- nscanned / totalKeysExamined: The number of index entries examined. In older versions, nscanned counted index entries when an index was used and documents otherwise; totalKeysExamined counts only index keys, so it is 0 for a collection scan.

- n / nReturned: The number of documents the query returned.

- millis / executionTimeMillis: The time, in milliseconds, the database took to execute the query. Lower numbers are better.

- scanAndOrder (older versions): If true, MongoDB could not use an index to return results in the requested order and had to sort them in memory. In-memory sorts are slow and memory-intensive and often indicate an inefficient query plan; newer versions show a SORT stage in the winning plan instead.

- indexOnly (older versions): If true, the query was “covered” by the index: all fields used in the conditions and returned in the results were in the index, so MongoDB did not have to fetch the actual documents. In newer versions, a covered query has no FETCH stage and totalDocsExamined is 0.
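When reading explain() output, it helps to walk the winning plan's whole stage tree rather than only its top stage, since an IXSCAN is usually nested under a FETCH. The function below is a sketch that works on the plain object returned by explain("executionStats") for a find() query, so it can be pasted into mongosh and called as summarizeExplain(db.myusers.find({email: "example@email.com"}).explain("executionStats")). It follows only inputStage/inputStages, treats a SORT stage as the modern counterpart of scanAndOrder, and assumes that some newer versions nest the stage tree under a queryPlan field. The function name is hypothetical, and explain output varies across versions and for sharded clusters, so some fields may be missing.

```javascript
// Sketch: summarise explain("executionStats") output for a find() query.
function summarizeExplain(explain) {
  let plan = explain.queryPlanner?.winningPlan ?? {};
  // Assumption: some newer versions nest the stage tree under "queryPlan".
  if (plan.queryPlan) plan = plan.queryPlan;

  // Depth-first walk over the stage tree, collecting stage and index names.
  const stages = [];
  const indexNames = [];
  const walk = (node) => {
    if (!node) return;
    stages.push(node.stage);
    if (node.indexName) indexNames.push(node.indexName);
    walk(node.inputStage);
    (node.inputStages ?? []).forEach(walk);
  };
  walk(plan);

  const stats = explain.executionStats ?? {};
  return {
    stages,
    indexNames,
    collectionScan: stages.includes("COLLSCAN"),
    inMemorySort: stages.includes("SORT"),
    // A covered query reads only index keys and never fetches documents.
    covered: stages.includes("IXSCAN") && !stages.includes("FETCH") && stats.totalDocsExamined === 0,
    nReturned: stats.nReturned,
    totalKeysExamined: stats.totalKeysExamined,
    totalDocsExamined: stats.totalDocsExamined,
    executionTimeMillis: stats.executionTimeMillis,
  };
}

// Shaped like the simplified IXSCAN example later in this article.
const sample = {
  queryPlanner: {
    winningPlan: {
      stage: "FETCH",
      inputStage: { stage: "IXSCAN", indexName: "email_1" },
    },
  },
  executionStats: { nReturned: 1, executionTimeMillis: 0, totalKeysExamined: 1, totalDocsExamined: 1 },
};
console.log(summarizeExplain(sample));
```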

Identifying “COLLSCAN” and Addressing Performance Issues

As previously stated, if the explain() output contains COLLSCAN (or BasicCursor in older MongoDB versions), MongoDB performed a full collection scan. The database is inefficiently examining every document, which is a serious performance red flag, particularly for large collections.

A telltale sign in explain("executionStats") output is a totalDocsExamined (or nscannedObjects) value close to the collection’s total number of documents while nReturned (or n) is much smaller; this pattern usually accompanies a COLLSCAN stage.
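That rule of thumb can be turned into a quick check. The sketch below compares totalDocsExamined with the collection's document count (which you could obtain in mongosh with db.collection.countDocuments({}) or estimatedDocumentCount()) and with nReturned. The 0.9 threshold and the function name looksLikeFullScan are arbitrary assumptions for illustration, not MongoDB defaults.

```javascript
// Hypothetical heuristic (not a MongoDB feature): flag a query that examined
// most of the collection but returned only a small fraction of what it examined.
function looksLikeFullScan(executionStats, collectionCount, threshold = 0.9) {
  const { totalDocsExamined = 0, nReturned = 0 } = executionStats;
  const fractionScanned = collectionCount > 0 ? totalDocsExamined / collectionCount : 0;
  return {
    fractionScanned,
    suspicious: fractionScanned >= threshold && nReturned < totalDocsExamined * (1 - threshold),
  };
}

// Figures from the COLLSCAN and IXSCAN examples in this article (1 million documents).
console.log(looksLikeFullScan({ totalDocsExamined: 1000000, nReturned: 1 }, 1000000).suspicious); // true
console.log(looksLikeFullScan({ totalDocsExamined: 1, nReturned: 1 }, 1000000).suspicious);       // false
```

A small collection that is scanned in full but returns most of its documents is not flagged, since an index would save little work in that case.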

Let’s illustrate with an example:

Scenario: Querying a collection without an index

Consider a myusers collection containing many documents. For a query such as db.myusers.find({email: "example@email.com"}), MongoDB scans the entire collection if there is no index on the email field.

// Querying without an index
db.myusers.find({email: "example@email.com"}).explain("executionStats")

The output for this query might look something like this (simplified):

{
  "queryPlanner": {
    "winningPlan": {
      "stage": "COLLSCAN" // Indicates a full collection scan
    }
  },
  "executionStats": {
    "nReturned": 1, // Number of matching documents found
    "executionTimeMillis": 400, // Time taken (e.g., 400ms, could be seconds for larger collections)
    "totalKeysExamined": 0, // No index keys examined
    "totalDocsExamined": 1000000 // All documents in the collection were scanned
  }
}

In this example, stage: "COLLSCAN" together with totalDocsExamined: 1000000 (assuming the collection holds 1 million documents) clearly shows a full scan. Although 400 ms may look small, it can cause serious problems in high-traffic applications. If the query also sorted its results without a supporting index, the inefficiency would be even more visible: newer versions show a SORT stage in the winning plan, and older versions report scanAndOrder: true.

Addressing the COLLSCAN

The primary solution to a COLLSCAN is to create an index on the field(s) used in your query criteria.

// Create an ascending index on the 'email' field
db.myusers.createIndex({email: 1})

After the index is created, run the same explain() query again:

// Re-running the query after index creation
db.myusers.find({email: "example@email.com"}).explain("executionStats")

The output would now show significant improvement:

{
  "queryPlanner": {
    "winningPlan": {
      "stage": "FETCH", // Indicates fetching documents
      "inputStage": {
        "stage": "IXSCAN", // Indicates an index scan
        "indexName": "email_1" // Name of the index used
      }
    }
  },
  "executionStats": {
    "nReturned": 1,
    "executionTimeMillis": 0, // Much faster! (e.g., 0-5ms)
    "totalKeysExamined": 1, // Only 1 index key examined
    "totalDocsExamined": 1 // Only 1 document examined
  }
}

Here, the IXSCAN stage on email_1 and the drastically reduced totalKeysExamined and totalDocsExamined show that MongoDB used the index efficiently. executionTimeMillis drops accordingly, demonstrating the index’s direct impact on performance.

In conclusion, the key to optimising your MongoDB queries is recognising when a COLLSCAN occurs and knowing how to interpret explain() output. By proactively building indexes that match your application’s query patterns, you help your database perform well and scale.