Scale a Node.js Application

In Node.js, scaling an application means making sure it continues to function well as its workload increases. This is different from performance, which measures how quickly a single request is handled. Node.js is well suited to scalable network applications and handles large amounts of data and many simultaneous client connections efficiently.

By design, Node.js runs an application's JavaScript on a single thread, driven by an event loop. This makes programming easier by eliminating multi-threading problems such as deadlocks. However, it also means that specific techniques are needed to handle heavy loads and to take advantage of modern multi-core CPUs.
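A minimal sketch of what the single thread means in practice: synchronous, CPU-bound JavaScript blocks everything else on that thread, including timers that are already due. The helper names busyWait and measureTimerDelay are hypothetical, and the busy loop stands in for any heavy computation.

```javascript
// Spins the CPU for `ms` milliseconds without yielding to the event loop.
// (Hypothetical stand-in for any heavy, synchronous computation.)
function busyWait(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) {
    // Nothing else on this thread can run until the loop exits.
  }
}

// Schedules a 0 ms timer, blocks the thread, and reports how long the
// timer actually had to wait before its callback could run.
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduled = Date.now();
    setTimeout(() => resolve(Date.now() - scheduled), 0);
    busyWait(blockMs); // The timer cannot fire until this returns.
  });
}

measureTimerDelay(200).then((delay) => {
  console.log(`A 0 ms timer fired after ${delay} ms`);
});
```

The timer fires only after busyWait returns, so the measured delay is at least the blocking time. In a server, the same effect delays every pending request.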

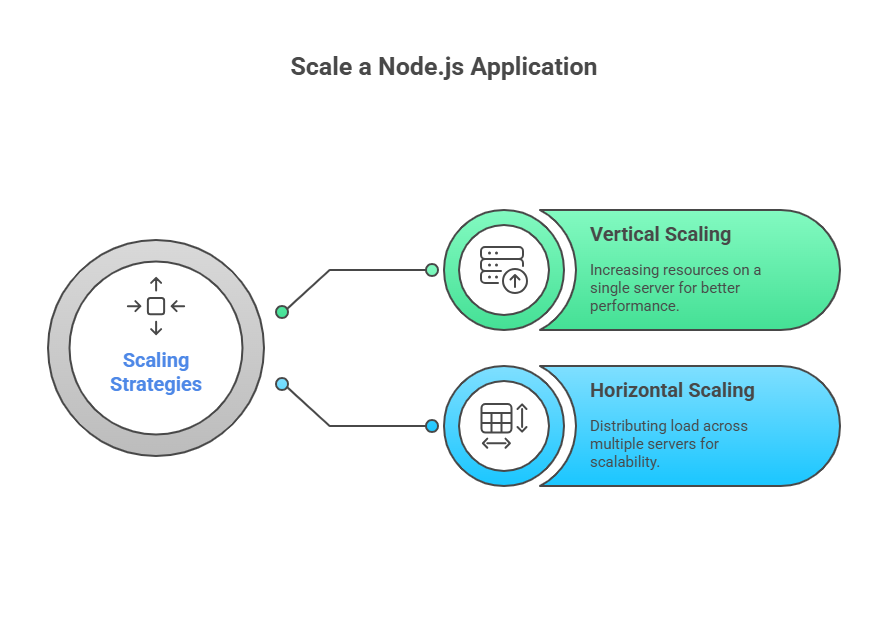

In Node.js, scaling can be broadly divided into two categories: horizontal scaling and vertical scaling.

Vertical Scaling with the Cluster Module

You can take advantage of multi-core systems in Node.js by starting a cluster of Node.js processes that share the load. Node's built-in cluster module is used to accomplish this.

The cluster module lets a primary (master) process fork worker processes (child processes). The number of workers is usually set to the number of available CPU cores. The workers can share the same network port, and incoming connections are distributed among them automatically; by default this uses a round-robin approach on all platforms except Windows. As a result, a single Node.js application can use multiple CPU cores even though each instance runs on only one thread.
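The round-robin idea can be illustrated with a small helper that hands each new connection to the next worker in turn. This is a hypothetical sketch of the scheduling policy only, not the cluster module's internal implementation; createRoundRobin and the worker names are made up for the example.

```javascript
// Hypothetical helper: returns a function that picks workers in a fixed rotation.
function createRoundRobin(workers) {
  let next = 0;
  return function pick() {
    const worker = workers[next];
    next = (next + 1) % workers.length; // Wrap around after the last worker.
    return worker;
  };
}

// Five incoming connections spread across three hypothetical workers.
const pick = createRoundRobin(['worker-1', 'worker-2', 'worker-3']);
const assignments = [];
for (let conn = 0; conn < 5; conn++) {
  assignments.push(pick());
}
console.log(assignments.join(', '));
// prints "worker-1, worker-2, worker-3, worker-1, worker-2"
```

Round-robin ignores how busy each worker is, which keeps the policy simple and cheap; it works best when requests cost roughly the same.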

Here’s a basic example of how the cluster module works:

```javascript
// cluster.js (primary file)
const cluster = require('cluster');
const numCPUs = require('os').cpus().length;

// cluster.isPrimary replaced the deprecated cluster.isMaster in Node.js 16.
if (cluster.isPrimary) {
  console.log(`Your server is running on ${numCPUs} cores`);

  // Fork one worker per CPU core.
  for (let i = 0; i < numCPUs; i++) {
    cluster.fork();
  }

  cluster.on('exit', (worker, code, signal) => {
    console.log(`Worker ${worker.process.pid} died`);
    // Optionally, fork a new worker if one dies to maintain capacity.
    cluster.fork();
  });
} else {
  // Workers can share any TCP connection.
  // In this case, it is an HTTP server.
  require('./server.js')(); // Your application code.
}
```

```javascript
// server.js (worker file)
const http = require('http');

function startServer() {
  const server = http.createServer((req, res) => {
    res.writeHead(200);
    res.end('Hello Http');
  });
  server.listen(3000);
}

// Always export the function so cluster.js can require and call it.
module.exports = startServer;

// Start the server directly when this file is run on its own (node server.js).
// require.main === module replaces the deprecated module.parent check.
if (require.main === module) {
  startServer();
}
```

Because workers are separate processes, not threads, they cannot share variables directly. Shared state, such as session data, is therefore commonly kept in an external data store such as Redis.

Horizontal Scaling Strategies

In horizontal scaling, the application is spread across several separate servers or systems.

- Process Managers: Tools such as PM2 and Forever manage multiple Node.js processes and keep an application running continuously by automatically restarting it after a crash. PM2 can also reload an application running in cluster mode without downtime.

- Proxies and Load Balancers (e.g., Nginx): Reverse proxies such as Nginx are frequently placed in front of several Node.js servers to distribute client requests among them. Nginx provides this load balancing out of the box, routing traffic round-robin by default or with other methods, such as sending each request to the server with the fewest active connections.

- Message Queues (e.g., RabbitMQ): Message queues such as RabbitMQ enable asynchronous communication between Node.js processes or services, which is useful in complex, distributed systems. By decoupling components and buffering requests, a queue lets services interact without direct knowledge of one another, which improves scalability and resilience.

- Child Processes (spawn, fork): Although Node.js runs JavaScript on a single thread, it can offload CPU-intensive tasks to separate child processes using the child_process module (spawn or fork). This keeps the main event loop free to handle client requests and I/O operations, which avoids bottlenecks.

- Cloud Services: Scaling is much easier when non-core functions are moved to cloud providers. For example, Amazon Web Services (AWS) offers a NoSQL database (DynamoDB) and file storage (S3), which let applications scale their data stores. To reduce the load on your application's servers, third-party authentication services such as Facebook Connect (used with Passport.js) can handle user authentication and account management.
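The child-process approach can be sketched as follows. To keep the example in a single file, it uses spawn to run node -e with the child's code inline; fork() would instead take the path to a separate module and set up an IPC channel for process.send(). The naive Fibonacci function is only a stand-in for real CPU-heavy work, and fibInChildProcess, CHILD_SOURCE, and FIB_N are hypothetical names.

```javascript
const { spawn } = require('child_process');

// Code run by the child process: a deliberately slow, CPU-bound task
// (naive recursive Fibonacci, used only as a stand-in workload).
const CHILD_SOURCE = `
  function fib(n) {
    return n < 2 ? n : fib(n - 1) + fib(n - 2);
  }
  process.stdout.write(String(fib(Number(process.env.FIB_N))));
`;

// Runs the CPU-bound work in a separate Node.js process so the parent's
// event loop stays free to handle requests and I/O in the meantime.
function fibInChildProcess(n) {
  return new Promise((resolve, reject) => {
    const child = spawn(process.execPath, ['-e', CHILD_SOURCE], {
      env: { ...process.env, FIB_N: String(n) }, // Pass the input via an environment variable.
    });

    let output = '';
    child.stdout.setEncoding('utf8');
    child.stdout.on('data', (chunk) => {
      output += chunk;
    });

    child.on('error', reject); // For example, the process could not be spawned.
    child.on('close', (code) => {
      if (code === 0) {
        resolve(Number(output));
      } else {
        reject(new Error(`Child process exited with code ${code}`));
      }
    });
  });
}

fibInChildProcess(30).then((result) => {
  console.log(`fib(30) = ${result}`); // prints "fib(30) = 832040"
});
```

Starting a process has a cost, so this pattern pays off for work that takes noticeably longer than the spawn itself; for frequent small tasks, a long-lived pool of child processes is a common alternative.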

When to Scale

The key to a resilient approach is knowing when to scale. The following are important indicators:

- Network Latency: Response times exceed acceptable limits.

- CPU Load: The processors are becoming a bottleneck. You can detect this by tracking CPU usage with tools such as ps or htop.

- Socket Usage: A large number of open file descriptors (sockets) may indicate that additional servers are needed.

- Data Creep: A database's size or row count grows unnecessarily large.
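Alongside external tools such as ps and htop, a Node.js process can sample the same signals from the inside. This minimal sketch uses process.cpuUsage() for CPU time and perf_hooks.monitorEventLoopDelay() for event-loop lag, which directly reflects an overloaded single thread. sampleCpuPercent is a hypothetical helper, and any alerting thresholds would be your own choice.

```javascript
const { monitorEventLoopDelay } = require('perf_hooks');

// Hypothetical helper: this process's CPU time as a percentage of wall-clock
// time over `intervalMs`. About 100% means one fully busy core; the value can
// exceed 100% when several threads (for example, garbage collection) are busy.
function sampleCpuPercent(intervalMs) {
  return new Promise((resolve) => {
    const startUsage = process.cpuUsage();
    const startTime = process.hrtime.bigint();
    setTimeout(() => {
      const { user, system } = process.cpuUsage(startUsage); // Microseconds since startUsage.
      const elapsedMicros = Number(process.hrtime.bigint() - startTime) / 1000;
      resolve(((user + system) / elapsedMicros) * 100);
    }, intervalMs);
  });
}

// Records how late the event loop runs; a steadily growing delay suggests
// the single JavaScript thread is saturated.
const loopDelay = monitorEventLoopDelay({ resolution: 20 });
loopDelay.enable();

sampleCpuPercent(1000).then((cpuPercent) => {
  loopDelay.disable();
  const p99Ms = loopDelay.percentile(99) / 1e6; // Histogram values are in nanoseconds.
  console.log(`CPU: ${cpuPercent.toFixed(1)}% | event-loop delay p99: ${p99Ms.toFixed(1)} ms`);
});
```

In a real server this would run periodically alongside request handling, so that rising CPU usage or event-loop delay shows up before response times degrade.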

These scaling strategies enable Node.js applications to handle high concurrency and large data volumes efficiently, making Node.js well suited to real-time, data-intensive web applications.