What are Linear classification models?

Linear classification models are a kind of classifier used in machine learning, especially in Natural Language Processing (NLP) applications. The main objective of classification is to choose the appropriate class label for a given input. Binary classification predicts one of two possible labels, whereas multi-class classification assigns an example to one of k classes, where k is greater than two.

Linear classifiers learn a real-valued scoring function, usually of the form $f(x) = w^\top x + b$, where $x \in \mathbb{R}^d$ is the observed input vector, $w$ is the weight vector, and $b$ is a bias term. This function maps the input vector to a real number. For binary classification, its output is often passed through a sign function to assign the input to one of two classes, usually denoted $-1$ and $+1$. For multi-class problems, a popular approach is to compute a score for each class and predict the class with the highest score. The decision boundary of a linear classifier is the hyperplane $w^\top x + b = 0$ in the d-dimensional feature space, defined by a linear equation in the weight vector $w$.
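The scoring and decision rules above can be sketched in a few lines of plain Python. This is a minimal illustration, not a library API: the function names (`score`, `predict_binary`, `predict_multiclass`) are hypothetical, inputs are plain lists of floats, and a score of exactly zero is mapped to +1 by assumption, since the sign function itself would return 0 there.

```python
from typing import List, Sequence


def score(w: Sequence[float], x: Sequence[float], b: float) -> float:
    """Linear scoring function f(x) = w^T x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict_binary(w: Sequence[float], x: Sequence[float], b: float) -> int:
    """Binary decision: the sign of the score as -1 or +1 (a score of 0 maps to +1 here)."""
    return 1 if score(w, x, b) >= 0 else -1


def predict_multiclass(
    W: List[Sequence[float]], biases: Sequence[float], x: Sequence[float]
) -> int:
    """Multi-class decision: one weight vector and bias per class; return the index of the highest score."""
    scores = [score(w_k, x, b_k) for w_k, b_k in zip(W, biases)]
    return max(range(len(scores)), key=lambda k: scores[k])


# Usage
w, b = [2.0, -1.0], -0.5
print(predict_binary(w, [1.0, 1.0], b))  # score = 2 - 1 - 0.5 = 0.5, prints 1
print(predict_binary(w, [0.0, 1.0], b))  # score = -1 - 0.5 = -1.5, prints -1
# Three classes: scores are 0.2, 0.9 and -1.1, so class index 1 wins.
print(predict_multiclass([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 0.0], [0.2, 0.9]))
```

Keeping one weight vector per class in the multi-class case is what makes "compute a score for each class and take the highest" a direct generalization of the binary sign rule.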

Linear classification models are built using supervised learning. They are trained on a set of labelled examples $\{(x_1, y_1), \ldots, (x_n, y_n)\}$, where each $x_i$ is an input and $y_i$ is its correct class label. The trained classifier's performance is then assessed on a separate test dataset. Input data for linear classifiers are commonly represented as a vector of measurements or as a d-dimensional feature vector $\phi(x, y)$. For text categorization, the vector space model represents each document as a vector of weighted word counts. Linear classifiers are also used with other text representations, such as Bag of Words, Bag of N-Grams, and TF-IDF.
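As an illustration of the Bag of Words and TF-IDF representations mentioned above, the sketch below turns tokenized documents into feature vectors. It assumes documents are already tokenized into lists of strings and uses the plain weighting idf(t) = log(N / df(t)); real toolkits offer several TF-IDF variants (smoothing, normalization), and the helper names here are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def build_vocab(docs: List[List[str]]) -> Dict[str, int]:
    """Assign a feature index to every distinct token in the training documents."""
    vocab: Dict[str, int] = {}
    for doc in docs:
        for tok in doc:
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab


def bag_of_words(doc: List[str], vocab: Dict[str, int]) -> List[float]:
    """Raw term counts; tokens outside the vocabulary are ignored."""
    vec = [0.0] * len(vocab)
    for tok, count in Counter(doc).items():
        if tok in vocab:
            vec[vocab[tok]] = float(count)
    return vec


def tf_idf(doc: List[str], vocab: Dict[str, int], docs: List[List[str]]) -> List[float]:
    """Counts weighted by idf(t) = log(N / df(t)), with df computed over `docs`.
    Assumes every vocabulary token occurs in at least one of `docs`."""
    n_docs = len(docs)
    df = Counter(tok for d in docs for tok in set(d))  # document frequency per token
    vec = bag_of_words(doc, vocab)
    for tok, idx in vocab.items():
        vec[idx] *= math.log(n_docs / df[tok])
    return vec


# Usage
docs = [["good", "movie"], ["bad", "movie"], ["good", "good", "plot"]]
vocab = build_vocab(docs)            # {'good': 0, 'movie': 1, 'bad': 2, 'plot': 3}
print(bag_of_words(docs[2], vocab))  # [2.0, 0.0, 0.0, 1.0]
print(tf_idf(docs[0], vocab, docs))  # 'good' and 'movie' each appear in 2 of 3 docs
```

Either vector can be fed directly to any of the linear classifiers below as the input $x$.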

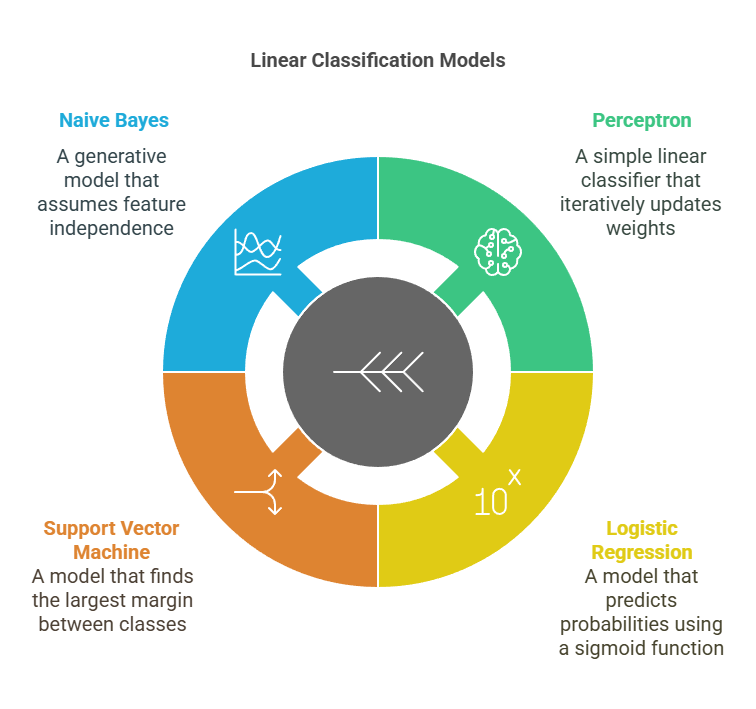

Types of Linear classification models

Perceptron: The perceptron is a linear classifier. If the training data are linearly separable, the perceptron algorithm is guaranteed to find a linear separator after a finite number of updates. However, it cannot learn functions that are not linearly separable, such as the exclusive-or (XOR) function. The perceptron learning algorithm updates the weights iteratively, using the training instances it misclassifies. Its update rule is comparable to that of an online support vector machine. The perceptron is one of the three main families of discriminative classifiers covered here, alongside logistic regression and SVMs, and feed-forward neural networks can be seen as a generalization of it.
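The mistake-driven update described above can be written as the following sketch. It assumes labels in {-1, +1}, features as plain Python sequences, and a fixed cap on the number of passes (`epochs`, a hypothetical parameter). On linearly separable data such as logical AND it stops once a full pass makes no mistakes; on XOR it can never reach zero mistakes, so it simply runs out of epochs.

```python
from typing import List, Sequence, Tuple


def perceptron_train(
    data: List[Tuple[Sequence[float], int]], epochs: int = 100
) -> Tuple[List[float], float]:
    """Classic perceptron: labels are -1/+1; weights change only on misclassified examples."""
    d = len(data[0][0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            s = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * s <= 0:  # misclassified, or exactly on the boundary
                w = [wi + y * xi for wi, xi in zip(w, x)]  # move w toward y * x
                b += y
                mistakes += 1
        if mistakes == 0:  # every training example is now on the correct side
            break
    return w, b


def perceptron_predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Usage: AND is linearly separable, XOR is not.
and_data = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
xor_data = [((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1)]
w, b = perceptron_train(and_data)
print([perceptron_predict(w, b, x) for x, _ in and_data])  # matches the AND labels
```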

Logistic Regression: This is a supervised machine learning classifier and a popular linear classification technique. It is a discriminative classifier: it learns which features are most useful for distinguishing between the classes. Binary logistic regression passes the weighted sum of the input features, plus a bias, through the sigmoid function, producing a probability between 0 and 1. A class is then chosen by comparing this probability with a threshold (commonly 0.5); because the sigmoid is monotonic, thresholding the probability is equivalent to thresholding the linear score, so the decision boundary is linear. For problems with more than two classes, multinomial logistic regression is used; it is also called softmax regression and, in earlier NLP research, maximum entropy classification. The probability of each class is obtained by applying the softmax function to the per-class scores. Because the log-probability is a linear function of the features (up to a normalization term), logistic regression is often called a log-linear model. It is also one of the three main families of discriminative classifiers covered here, and feed-forward neural networks can be viewed as a generalization of it.
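The components of logistic regression described above (the sigmoid, thresholding, and softmax for the multinomial case) are sketched below. Training uses plain batch gradient descent on the average log loss with 0/1 labels; the learning rate, epoch count, and function names are illustrative assumptions, and practical implementations usually add regularization and better optimizers.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn per-class scores into probabilities; subtracting the max avoids overflow."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_binary_lr(
    data: List[Tuple[Sequence[float], int]], lr: float = 0.5, epochs: int = 500
) -> Tuple[List[float], float]:
    """Batch gradient descent on the average log loss; labels are 0/1."""
    d, n = len(data[0][0]), len(data)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the log loss with respect to the score
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_lr(w: Sequence[float], b: float, x: Sequence[float], threshold: float = 0.5) -> int:
    """Threshold the predicted probability of class 1."""
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= threshold else 0


# Usage: one feature, negatives on the left, positives on the right.
data = [((-2.0,), 0), ((-1.0,), 0), ((1.0,), 1), ((2.0,), 1)]
w, b = train_binary_lr(data)
print([predict_lr(w, b, x) for x, _ in data])  # matches the labels
print(softmax([1.0, 2.0, 3.0]))                # multinomial case: probabilities summing to 1
```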

Support Vector Machine (SVM): This is another popular linear classification technique. The core idea of the linear SVM is to find the separating hyperplane that maximizes the margin between the classes in the training data. SVMs have shown strong performance on some classification tasks, such as identifying comparison phrases. The linear SVM should be distinguished from kernel-based SVMs, which allow non-linear decision boundaries. SVMs are the third of the main families of discriminative classifiers covered here. Multi-class classification can be handled by training several binary SVMs, using schemes such as one-versus-all or one-versus-one.
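A linear SVM can be sketched by minimizing the soft-margin objective (an L2 penalty on $w$ plus the average hinge loss) with plain subgradient descent. This is a simple stand-in chosen for readability, not how dedicated SVM solvers work; the hyperparameters (`lam`, `lr`, `epochs`) and all function names are assumptions. A one-versus-all wrapper shows how binary SVMs can be combined for multi-class problems.

```python
from typing import Dict, Hashable, Iterable, List, Sequence, Tuple

Example = Tuple[Sequence[float], int]


def _score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_linear_svm(
    data: List[Example], lam: float = 0.01, lr: float = 0.1, epochs: int = 200
) -> Tuple[List[float], float]:
    """Subgradient descent on  lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * (w.x_i + b)).
    Labels must be -1/+1; the bias b is not regularized."""
    d, n = len(data[0][0]), len(data)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for x, y in data:
            if y * _score(w, b, x) < 1:  # hinge term active: inside the margin or misclassified
                grad_w = [g - y * xi / n for g, xi in zip(grad_w, x)]
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def svm_predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if _score(w, b, x) >= 0 else -1


def one_vs_rest_train(
    data: List[Tuple[Sequence[float], Hashable]], classes: Iterable[Hashable]
) -> Dict[Hashable, Tuple[List[float], float]]:
    """One binary SVM per class: that class (+1) versus all the others (-1)."""
    return {c: train_linear_svm([(x, 1 if y == c else -1) for x, y in data]) for c in classes}


def one_vs_rest_predict(models: Dict[Hashable, Tuple[List[float], float]], x: Sequence[float]) -> Hashable:
    """Predict the class whose binary SVM assigns the highest score."""
    return max(models, key=lambda c: _score(models[c][0], models[c][1], x))


# Usage (binary)
data = [((2.0, 1.0), 1), ((1.0, 2.0), 1), ((-2.0, -1.0), -1), ((-1.0, -2.0), -1)]
w, b = train_linear_svm(data)
print([svm_predict(w, b, x) for x, _ in data])  # matches the labels
```

One-versus-one would instead train a binary SVM for every pair of classes and predict by majority vote.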

Naive Bayes: This is a generative classifier. Whereas discriminative models concentrate on separating the classes, generative models try to capture the characteristics of each class. Naive Bayes is popular because it is efficient and can combine evidence from many features. It is applicable whenever an instance is described by a set of features. Because it computes the score of each class as a linear function of the input features in log space, Naive Bayes is implicitly a linear classifier. It is also inherently a multi-class algorithm. Combined with a Hidden Markov Model (HMM), it also forms the most commonly used generative approach to sequence classification, for tasks such as topic and sentence segmentation.
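The sketch below is a multinomial naive Bayes text classifier with add-one (Laplace) smoothing, one common variant among several. Scoring happens entirely in log space, which makes the linear form visible: each class score is a log prior plus a sum of per-word log likelihoods, i.e. a weighted sum of word counts. The function names and the smoothing parameter `alpha` are illustrative assumptions.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def train_nb(docs: List[Tuple[List[str], str]], alpha: float = 1.0):
    """Multinomial naive Bayes with add-alpha smoothing.
    Returns log P(c) per class and log P(w | c) for every vocabulary word."""
    class_counts = Counter(label for _, label in docs)
    word_counts: Dict[str, Counter] = defaultdict(Counter)
    vocab = set()
    for tokens, label in docs:
        word_counts[label].update(tokens)
        vocab.update(tokens)
    log_prior = {c: math.log(k / len(docs)) for c, k in class_counts.items()}
    log_lik = {}
    for c in class_counts:
        total = sum(word_counts[c].values()) + alpha * len(vocab)
        log_lik[c] = {w: math.log((word_counts[c][w] + alpha) / total) for w in vocab}
    return log_prior, log_lik


def predict_nb(log_prior, log_lik, tokens: List[str]) -> str:
    """Class score = log P(c) + sum of log P(w | c) over tokens: linear in the word counts.
    Words never seen in training are skipped."""
    def class_score(c: str) -> float:
        return log_prior[c] + sum(log_lik[c][w] for w in tokens if w in log_lik[c])
    return max(log_prior, key=class_score)


# Usage
train = [
    ("good great fun".split(), "pos"),
    ("great plot good".split(), "pos"),
    ("bad boring plot".split(), "neg"),
    ("bad awful".split(), "neg"),
]
log_prior, log_lik = train_nb(train)
print(predict_nb(log_prior, log_lik, "good fun".split()))   # pos
print(predict_nb(log_prior, log_lik, "bad awful".split()))  # neg
```

Nothing in the code is specific to two classes: adding more labels to the training data simply adds more entries to `log_prior` and `log_lik`.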

One significant class of classification models is the log-linear model. In these models, the log-probability of a label is a linear combination of the features, weighted by the learned feature weights (up to a normalization term). Logistic regression is a typical example. Another popular log-linear model in statistical natural language processing is maximum entropy modelling.
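Written out, a conditional log-linear model with weights $w$ and a feature vector $\phi(x, y)$ (the features of input $x$ paired with candidate label $y$) takes the standard form below. The second line shows the log-probability as a linear score minus a normalization term that does not depend on $y$.

```latex
p(y \mid x) = \frac{\exp\big(w \cdot \phi(x, y)\big)}{\sum_{y'} \exp\big(w \cdot \phi(x, y')\big)}

\log p(y \mid x) = w \cdot \phi(x, y) - \log \sum_{y'} \exp\big(w \cdot \phi(x, y')\big)
```

With only two labels, $p(y = 1 \mid x) = \sigma\big(w \cdot \phi(x, 1) - w \cdot \phi(x, 0)\big)$, which is the sigmoid form of binary logistic regression.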

Linear models often yield convex optimization objectives, and they are practical, simple and efficient to train, and somewhat interpretable. However, because their hypothesis class is restricted to linear relationships, they cannot perfectly classify data that are not linearly separable and cannot represent many complex functions. Around 2014, non-linear neural network models over dense inputs became more popular; given enough hidden units, these can approximate any continuous function and offer a richer hypothesis class than linear models over sparse inputs. Even so, linear and log-linear models dominated statistical natural language processing for more than a decade before that, and they still provide the foundation for more complex models.

During training, supervised learning minimizes an objective function that measures the error on the training examples, such as log loss, hinge loss, or cross-entropy loss (log loss is the two-class case of cross-entropy). Linear classification, which includes techniques such as logistic regression and support vector machines, is a key building block for many NLP tasks beyond simple text categorization, such as sentence segmentation.
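The three losses named above can be written directly as functions of a classifier's score or predicted probabilities. This sketch assumes 0/1 labels for log loss, -1/+1 labels for hinge loss, and a probability list plus a target index for cross-entropy; the function names are hypothetical.

```python
import math
from typing import Sequence


def log_loss(y: int, score: float) -> float:
    """Binary log loss for label y in {0, 1} and a raw score s = w.x + b:
    -log(sigmoid(s)) if y == 1, else -log(1 - sigmoid(s)), computed stably."""
    z = score if y == 1 else -score
    # log(1 + exp(-z)) rewritten so exp never overflows for large |z|
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))


def hinge_loss(y: int, score: float) -> float:
    """Hinge loss for label y in {-1, +1}: zero once the margin y * s reaches 1."""
    return max(0.0, 1.0 - y * score)


def cross_entropy(probs: Sequence[float], target: int) -> float:
    """Multi-class cross-entropy: negative log-probability assigned to the correct class."""
    return -math.log(probs[target])


# Usage
print(hinge_loss(1, 2.0))    # 0.0: correct side, margin beyond 1
print(hinge_loss(-1, 0.5))   # 1.5: wrong side of the boundary
print(log_loss(1, 0.0))      # log 2: a score of 0 means probability 0.5
print(cross_entropy([0.25, 0.25, 0.25, 0.25], 2))  # log 4 for a uniform guess over 4 classes
```

Hinge loss is exactly zero for confidently correct examples, while log loss and cross-entropy keep rewarding higher confidence; this difference is one reason SVMs and logistic regression learn different boundaries from the same data.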