What is Unicode normalization?

Unicode is a standard intended to remove character-set ambiguity and represent almost all current writing systems. It does this by defining a Universal Character Set that comprises more than 100,000 unique coded characters drawn from more than 75 approved scripts. In the past, limited character sets such as 7-bit ASCII, which supported only the basic Latin alphabet and the characters needed for English, made digital text in other languages difficult to represent and exchange. Other languages had to be “asciified” or “Romanized.”

The range of language-specific encoding schemes made data exchange challenging, especially for multilingual documents. To overcome this, Unicode gives each character a code point, which is a unique identifier. Encodings such as UTF-8 then represent each code point as a variable-length sequence of 1 to 4 bytes, so every character can be encoded without overlap or confusion even though most code points do not fit into a single byte.
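As a small illustration (the sample characters are chosen arbitrarily), the following sketch uses only Python's built-in `str.encode` to print each character's code point and the UTF-8 bytes that represent it:

```python
# Show each character's code point and how many bytes UTF-8 uses for it.
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]  # A, é, €, 😀
utf8_lengths = {}
for ch in samples:
    encoded = ch.encode("utf-8")
    utf8_lengths[ch] = len(encoded)
    # bytes.hex(" ") inserts a space between bytes (Python 3.8+).
    print(f"U+{ord(ch):04X} {ch!r}: {len(encoded)} byte(s) -> {encoded.hex(' ')}")
```

ASCII characters such as "A" still take a single byte, while "é", "€" and the emoji need 2, 3 and 4 bytes respectively.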

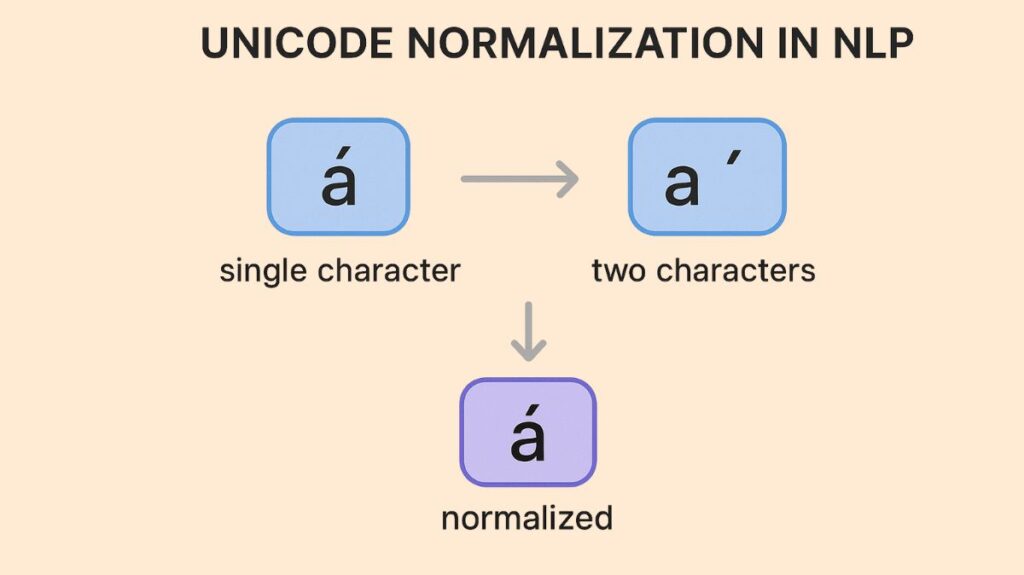

Text normalization is an essential step when working with Unicode, because Unicode permits several code point sequences to represent the same abstract character or sequence of characters. Such sequences may look identical when displayed but have distinct underlying binary representations. Many Natural Language Processing (NLP) applications depend on reliable string comparison: if the same character can be encoded in several ways, the byte or code point sequences differ even though the text means the same thing. For example, ‘á’ can be written as a single code point (U+00E1 LATIN SMALL LETTER A WITH ACUTE) or as ‘a’ followed by a combining acute accent (U+0301). A straightforward Python comparison shows that these two representations do not compare as equal.
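A minimal version of that comparison, using only Python's standard `unicodedata` module to name the code points involved, might look like this:

```python
import unicodedata

precomposed = "\u00e1"   # U+00E1 LATIN SMALL LETTER A WITH ACUTE
decomposed = "a\u0301"   # 'a' followed by U+0301 COMBINING ACUTE ACCENT

print(precomposed, decomposed)            # both typically render as á
print(precomposed == decomposed)          # False
print(len(precomposed), len(decomposed))  # 1 2
print([unicodedata.name(c) for c in decomposed])
# ['LATIN SMALL LETTER A', 'COMBINING ACUTE ACCENT']
```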

Unicode Normalization Features

To solve this problem, the Unicode standard offers guidelines for text normalization. It distinguishes between two kinds of equivalence between characters or character sequences:

Canonical equivalence is the fundamental kind of equivalence, for sequences that represent the same abstract character. When displayed correctly, canonically equivalent sequences should always have the same visual appearance and behavior.

Compatibility equivalence is a weaker kind of equivalence, for sequences that represent the same abstract character or characters but may differ in visual appearance or behavior. It is useful when exact visual distinctions are irrelevant to an application. For example, graphical variants of the Greek letter π, such as the pi symbol ϖ (U+03D6), are not canonically equivalent to π but are recognized as equivalent under compatibility equivalence.
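The difference can be sketched with Python's `unicodedata.normalize`, using the NFC (canonical) and NFKC (compatibility) forms described below and the pi symbol U+03D6 as the sample variant:

```python
import unicodedata

pi = "\u03c0"          # U+03C0 GREEK SMALL LETTER PI
pi_symbol = "\u03d6"   # U+03D6 GREEK PI SYMBOL, a graphical variant of pi

# Canonical normalization keeps the variant distinct from the letter...
nfc_match = unicodedata.normalize("NFC", pi_symbol) == pi
# ...while compatibility normalization maps it to the ordinary letter.
nfkc_match = unicodedata.normalize("NFKC", pi_symbol) == pi
print(nfc_match, nfkc_match)  # False True
```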

Unicode Normalization Forms

To apply these equivalences, Unicode defines normalization forms built from two processes:


- Decomposition: This process separates composite characters into the individual characters that make them up.

- Composition: This process replaces a sequence of a base character and combining characters with a single precomposed character, where one exists.
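The two processes can be observed with Python's `unicodedata` module. In this sketch, `unicodedata.decomposition` returns the decomposition mapping recorded in the Unicode Character Database (mappings tagged `<compat>` are compatibility-only), NFD performs decomposition, and NFC performs decomposition followed by composition:

```python
import unicodedata

a_acute = "\u00e1"      # á
fi_ligature = "\ufb01"  # ﬁ

# Decomposition mappings from the Unicode Character Database.
print(unicodedata.decomposition(a_acute))      # 0061 0301  (canonical)
print(unicodedata.decomposition(fi_ligature))  # <compat> 0066 0069  (compatibility only)

decomposed = unicodedata.normalize("NFD", a_acute)     # decomposition
recomposed = unicodedata.normalize("NFC", decomposed)  # decomposition, then composition
print([f"U+{ord(c):04X}" for c in decomposed])  # ['U+0061', 'U+0301']
print(recomposed == a_acute)                    # True
```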

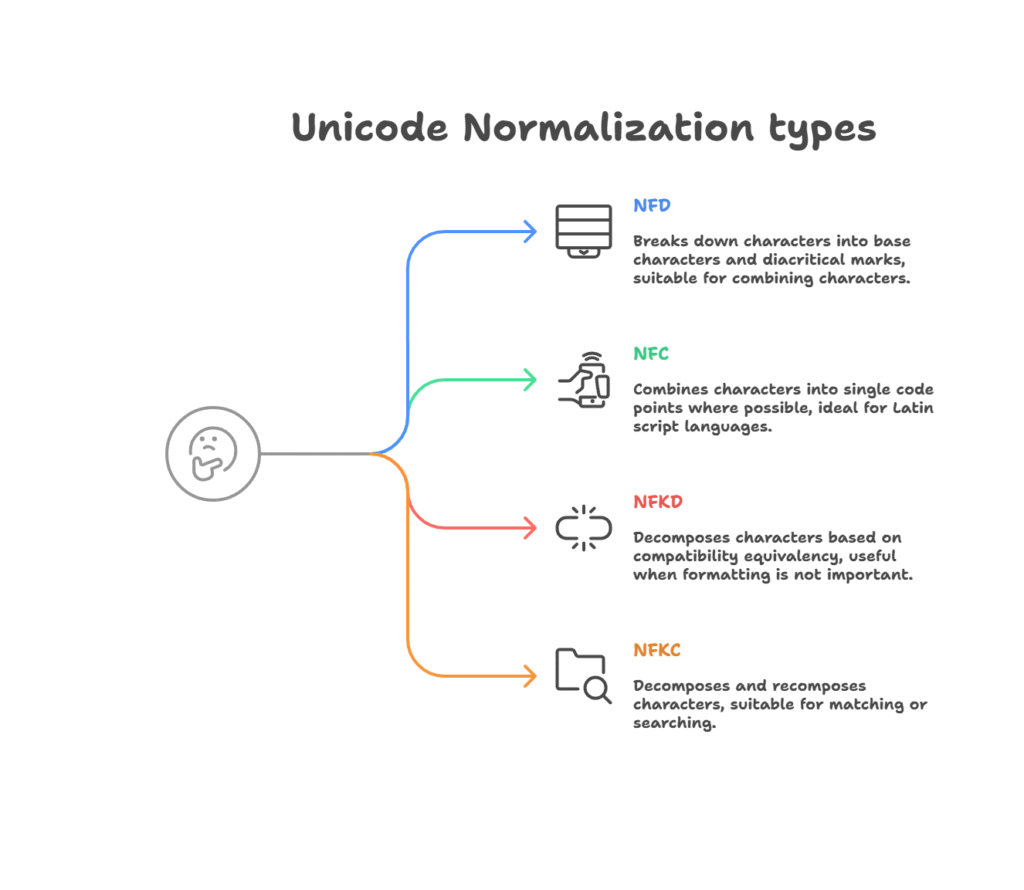

Unicode Normalization Types

Unicode defines four normalization forms:

- Normalization Form D (NFD) applies canonical decomposition. Characters are broken down into their base characters followed by any combining diacritical marks. In this form, the ‘á’ example becomes ‘a’ followed by the combining acute accent.

- Normalization Form C (NFC) applies canonical decomposition followed by canonical composition. It is often the most widely used form, particularly for languages written in the Latin script. Characters that have a precomposed single code point are generally represented by it; characters that do not are expressed as a base character plus combining marks. NFC normalizes the ‘á’ example to the single code point form.

- Normalization Form KD (NFKD) applies compatibility decomposition. Characters are broken down according to compatibility equivalence, which often loses formatting or structural information (for example, the ligature ‘ﬁ’ is broken into ‘f’ followed by ‘i’).

- Normalization Form KC (NFKC) applies compatibility decomposition followed by canonical composition. Because it normalizes according to compatibility equivalence and then recomposes where possible, this form is often used when differences in formatting or appearance are unimportant. It is helpful for tasks such as matching or searching, where formatting or symbol variations should be disregarded.
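To compare the four forms side by side, the sketch below applies each one to a sample string (chosen for illustration) containing both the ‘ﬁ’ ligature and a precomposed ‘é’, and prints the resulting code points:

```python
import unicodedata

# "ﬁancé" written with the ﬁ ligature (U+FB01) and a precomposed é (U+00E9).
sample = "\ufb01anc\u00e9"

results = {}
for form in ("NFD", "NFC", "NFKD", "NFKC"):
    result = unicodedata.normalize(form, sample)
    results[form] = result
    code_points = " ".join(f"U+{ord(c):04X}" for c in result)
    print(f"{form:5} len={len(result)}  {code_points}")
```

The canonical forms (NFD, NFC) leave the ligature untouched and only change how ‘é’ is encoded; the compatibility forms (NFKD, NFKC) additionally replace ‘ﬁ’ with ‘f’ and ‘i’.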

Using one of these normalization forms makes correct string comparison possible, because it transforms different but equivalent code point sequences into a single, standard representation. Libraries such as Python's `unicodedata` module provide the tools to apply these normalizations.
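For example, a comparison helper could normalize both strings before comparing them. The name `nfc_equal` is hypothetical; only `unicodedata.normalize` comes from the standard library:

```python
import unicodedata

def nfc_equal(a: str, b: str) -> bool:
    """Hypothetical helper: compare two strings after normalizing both to NFC."""
    return unicodedata.normalize("NFC", a) == unicodedata.normalize("NFC", b)

print("caf\u00e9" == "cafe\u0301")           # False: different code point sequences
print(nfc_equal("caf\u00e9", "cafe\u0301"))  # True: same text after normalization
```

NFC is used here because it preserves distinctions such as ligatures; swapping in NFKC would give the looser, search-oriented matching described above.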

Case folding, another technique for standardizing text for case-insensitive comparison, is related to Unicode normalization. Basic lowercasing works for ASCII, but Unicode case folding applies extra transformations so that case-insensitive comparison works across different writing systems. For instance, lowercasing leaves the German character “ß” unchanged, whereas full case folding maps it to “ss.”
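Python exposes full case folding as `str.casefold()`. The sketch below combines it with normalization following the Unicode Standard's definition of canonical caseless matching, NFD(casefold(NFD(x))); the helper names are hypothetical:

```python
import unicodedata

def nfd(s: str) -> str:
    """Hypothetical helper: canonical decomposition (NFD)."""
    return unicodedata.normalize("NFD", s)

def canonical_caseless_equal(a: str, b: str) -> bool:
    """Hypothetical helper: compare NFD(casefold(NFD(x))) for both strings."""
    return nfd(nfd(a).casefold()) == nfd(nfd(b).casefold())

print("Stra\u00dfe".lower() == "STRASSE".lower())        # False: lower() keeps ß
print("Stra\u00dfe".casefold())                          # strasse
print(canonical_caseless_equal("Stra\u00dfe", "STRASSE"))  # True
print(canonical_caseless_equal("\u00c1", "a\u0301"))       # True: Á vs 'a' + combining acute
```

Applying NFD on both sides of the case fold ensures that differences in encoding, not just in case, are removed before the strings are compared.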