P-values are used in hypothesis testing to assess the strength of evidence against a null hypothesis. Although the P-value is not the first statistical idea that comes to mind in machine learning, it can help determine whether a model's features and results are statistically significant. As a concept from inferential statistics, it supports model evaluation and interpretation by quantifying uncertainty.

What Is a P-Value?

A P-value helps us judge whether our results are statistically significant. It measures the probability of observing data at least as extreme as what we actually observed, assuming the null hypothesis is true. The null hypothesis states that there is no effect, difference, or relationship in the data, so any pattern in the results is due to chance.

Suppose you build a machine learning model to predict whether a customer will buy a product based on the customer's characteristics. Your null hypothesis might be that age, income, and similar features do not affect purchase likelihood. If the P-value is very small, you may reject the null hypothesis in favour of the alternative hypothesis, because the observed relationship between features and purchasing behaviour would be unlikely to arise from random chance alone.
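To make the definition concrete, here is a minimal sketch using a simpler, hypothetical case than the purchase model: a coin flipped 100 times that lands heads 60 times. The null hypothesis is that the coin is fair, and the P-value is the probability of a result at least as far from 50 heads as the one observed. The counts and the function name are illustrative assumptions.

```python
from math import comb


def binomial_two_sided_p(successes: int, trials: int) -> float:
    """Exact two-sided P-value for H0: success probability = 0.5."""
    expected = trials / 2
    observed_gap = abs(successes - expected)
    # Count every outcome at least as far from the expected count as the
    # observed one. This is valid because the p = 0.5 case is symmetric.
    extreme = sum(
        comb(trials, k)
        for k in range(trials + 1)
        if abs(k - expected) >= observed_gap
    )
    return extreme / 2 ** trials


p_value = binomial_two_sided_p(60, 100)
print(f"P-value for 60 heads in 100 flips: {p_value:.4f}")
```

A small value means such a lopsided result would be rare if the coin were fair; a value near 1 means the result is entirely typical under the null hypothesis.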

Significance Level of P-Value

To decide whether to reject the null hypothesis, we compare the P-value to a significance level, alpha, which is usually set to 0.05. If the P-value is less than alpha, the result is considered statistically significant and we reject the null hypothesis. If the P-value is greater than alpha, we fail to reject the null hypothesis: the data does not provide sufficient evidence of an effect. This is not the same as proving that no effect exists.
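One way to see what the 0.05 threshold means is to simulate many experiments in which the null hypothesis is actually true and count how often the P-value still falls below alpha. The sketch below assumes normally distributed data with a known standard deviation of 1 and uses a two-sided z-test; the sample size, trial count, and seed are arbitrary choices for illustration.

```python
import math
import random

ALPHA = 0.05


def z_test_p_value(sample, sigma=1.0):
    """Two-sided P-value for H0: population mean = 0, with known sigma."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / math.sqrt(n))
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


rng = random.Random(0)
n_trials = 10_000
rejections = 0
for _ in range(n_trials):
    # The null hypothesis is true here: the real mean is 0.
    sample = [rng.gauss(0.0, 1.0) for _ in range(30)]
    if z_test_p_value(sample) < ALPHA:
        rejections += 1

false_positive_rate = rejections / n_trials
print(f"Rejected a true null in {false_positive_rate:.1%} of trials")
```

The rejection rate should land close to alpha, which is the point: the significance level is the false-positive rate you agree to accept when the null hypothesis is true.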

Role of P-Value in Machine Learning

The P-value isn’t directly related to accuracy, precision, recall, or F1 score in machine learning. However, it is useful in model evaluation when assessing relationships between variables or the contribution of individual features.

Consider linear models such as logistic regression and linear regression, which estimate how each feature relates to the output variable. The P-value for a feature tells you whether its estimated association with the outcome is statistically distinguishable from zero.

Example: a logistic regression model can predict whether a person will buy a product based on their age, income, and marital status. Each feature's coefficient has a P-value. Features with low P-values (typically less than 0.05) have a statistically significant association with the outcome. A feature with a high P-value may not be adding relevant information to the model and may be a candidate for removal.
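As a rough illustration of where per-feature P-values come from, the sketch below fits a logistic regression by Newton's method on synthetic data and computes Wald P-values from the coefficient estimates and their standard errors. Everything here is an assumption made for the example: the data is simulated, `age` and `income` are standardized made-up features, `noise` is unrelated to the outcome by construction, and NumPy is used only to keep the steps visible. Statistical packages such as statsmodels report this kind of P-value directly.

```python
import math

import numpy as np

rng = np.random.default_rng(0)
n = 2000

# Synthetic, standardized features (hypothetical stand-ins for real data).
age = rng.normal(size=n)
income = rng.normal(size=n)
noise = rng.normal(size=n)  # has no effect on the outcome by construction

# Assumed true model: purchase depends on age and income only.
logits = -0.5 + 1.0 * age + 0.8 * income
y = (rng.random(n) < 1 / (1 + np.exp(-logits))).astype(float)

X = np.column_stack([np.ones(n), age, income, noise])
names = ["intercept", "age", "income", "noise"]

# Fit by Newton's method (iteratively reweighted least squares).
beta = np.zeros(X.shape[1])
for _ in range(25):
    p = 1 / (1 + np.exp(-X @ beta))
    gradient = X.T @ (y - p)
    information = X.T @ (X * (p * (1 - p))[:, None])
    beta = beta + np.linalg.solve(information, gradient)

# Wald test: z = estimate / standard error, two-sided normal P-value.
p = 1 / (1 + np.exp(-X @ beta))
covariance = np.linalg.inv(X.T @ (X * (p * (1 - p))[:, None]))
std_err = np.sqrt(np.diag(covariance))
z_scores = beta / std_err
p_values = {
    name: math.erfc(abs(z) / math.sqrt(2))
    for name, z in zip(names, z_scores)
}

for name in names:
    print(f"{name:>9}: P-value = {p_values[name]:.4g}")
```

With a strong simulated effect and 2,000 rows, `age` and `income` should receive very small P-values. The P-value for `noise` depends on the random seed, since any association it shows with the outcome is chance.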

Feature Selection and Model Interpretability

Feature selection, which means identifying a model's most predictive features, is a crucial step in building a machine learning model. Feature P-values are one way to judge importance: features with high P-values (typically above 0.05) may be removed because they probably contribute little to the model's predictions. This makes the model simpler and easier to interpret.

However, not all machine learning algorithms generate P-values. Support vector machines, decision trees, and random forests do not normally produce P-values for their features. For these models, feature contributions can be evaluated with built-in feature importance metrics or permutation importance.

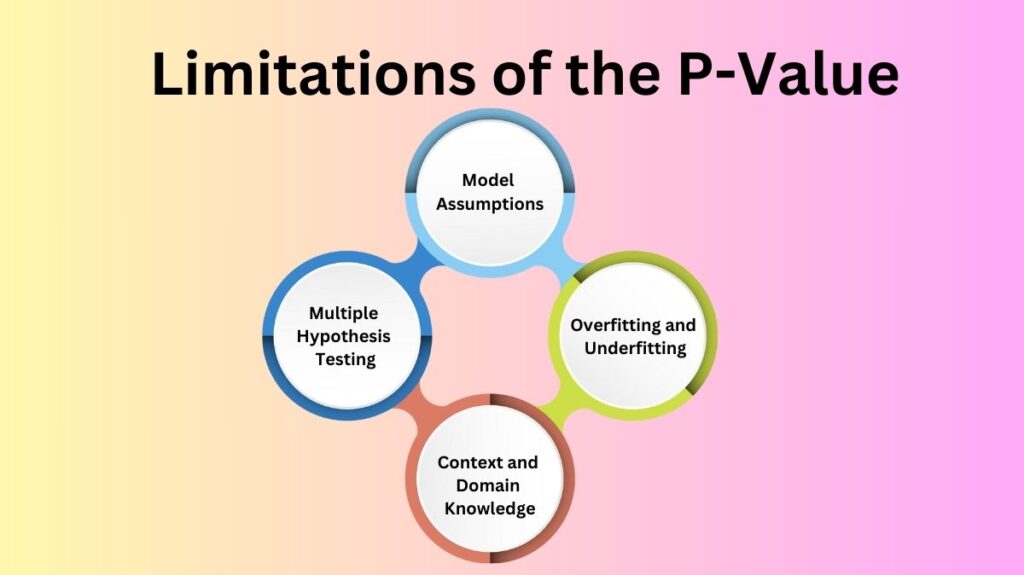

Limitations of the P-Value in Machine Learning

Although the P-value is a valuable tool for assessing feature significance, it has limitations, particularly in machine learning.

- Model Assumptions: Linear regression and logistic regression make assumptions about the data, such as the form of the relationship and the distribution of errors. P-values can be unreliable when the data is heavily skewed or the relationships are non-linear. Models that make no distributional assumptions, such as decision trees, do not generate P-values at all.

- Multiple Hypothesis Testing: Testing more features or hypotheses increases the chance of at least one false positive (a result that appears significant but is actually due to random chance). Without a correction for the number of tests, multiple hypothesis testing can lead to erroneous conclusions.

- Overfitting and Underfitting: Overfitted machine learning models do well on training data but poorly on unseen data, while underfitted models do badly on both training and test data. P-values, which measure statistical significance, do not protect against either problem. In an overfitted model, a P-value may suggest that a feature is important when it is essentially capturing noise.

- Context and Domain Knowledge: The P-value doesn’t indicate the practical value of a feature or relationship. Even with a tiny P-value, a feature may have a negligible effect on model performance or real-world outcomes, especially in large datasets where very small effects become statistically significant. Interpreting a P-value properly requires domain knowledge.
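The multiple-testing risk in the list above can be sketched with a simulation. The example assumes 200 candidate features that are all pure noise, tests each against a noise target with an approximate correlation test (Fisher z-transformation), and compares the number of "significant" features before and after a Bonferroni correction. The feature count, sample size, and choice of Bonferroni are illustrative assumptions; other corrections exist.

```python
import math
import random

ALPHA = 0.05
N_FEATURES = 200
N_SAMPLES = 100


def correlation_p_value(x, y):
    """Approximate two-sided P-value for H0: no correlation (Fisher z-test)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    r = cov / math.sqrt(var_x * var_y)
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))


rng = random.Random(42)
target = [rng.gauss(0, 1) for _ in range(N_SAMPLES)]

# Every feature is independent noise, so any "significant" result is false.
p_values = [
    correlation_p_value([rng.gauss(0, 1) for _ in range(N_SAMPLES)], target)
    for _ in range(N_FEATURES)
]

uncorrected_hits = sum(p < ALPHA for p in p_values)
# Bonferroni: divide alpha by the number of tests performed.
bonferroni_hits = sum(p < ALPHA / N_FEATURES for p in p_values)

print(f"Significant without correction: {uncorrected_hits} of {N_FEATURES}")
print(f"Significant with Bonferroni:    {bonferroni_hits} of {N_FEATURES}")
```

With 200 noise features, roughly 5% of them (about ten) would be expected to pass the uncorrected threshold by chance alone. Bonferroni trades those false positives for a much stricter, more conservative cutoff.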

Alternatives and Complementary Metrics to the P-Value

P-values are not always the right tool in machine learning. Model performance is usually assessed using accuracy, precision, recall, F1 score, and AUC-ROC. These measures indicate how well the model performs in real-world settings, but they do not assess statistical significance.

Several feature importance techniques address interpretability and feature selection for models that do not produce P-values. Permutation importance measures a feature's importance by randomly shuffling its values and observing the change in the model's performance. Performance drops noticeably when the feature is important and barely changes when it is not.
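Below is a minimal sketch of permutation importance, assuming a linear model fitted by least squares on synthetic data and mean squared error as the performance measure. The data, the feature names, and the helper `permutation_importance_mse` are hypothetical; libraries such as scikit-learn provide a ready-made `permutation_importance` function in `sklearn.inspection`.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1000

# Synthetic data: the target depends on the first two features only.
X = rng.normal(size=(n, 3))
y = 3.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(scale=0.5, size=n)
feature_names = ["strong", "weak", "irrelevant"]

# Hold out a test set so importance reflects performance on unseen data.
X_train, X_test = X[:700], X[700:]
y_train, y_test = y[:700], y[700:]

# Fit a linear model with an intercept by ordinary least squares.
design = np.column_stack([np.ones(len(X_train)), X_train])
coef, *_ = np.linalg.lstsq(design, y_train, rcond=None)


def predict(features):
    return coef[0] + features @ coef[1:]


def mse(features, target):
    return float(np.mean((predict(features) - target) ** 2))


def permutation_importance_mse(features, target, n_repeats=20):
    """Average increase in MSE after shuffling each column in turn."""
    baseline = mse(features, target)
    importances = []
    for col in range(features.shape[1]):
        increases = []
        for _ in range(n_repeats):
            shuffled = features.copy()
            shuffled[:, col] = rng.permutation(shuffled[:, col])
            increases.append(mse(shuffled, target) - baseline)
        importances.append(float(np.mean(increases)))
    return importances


importances = permutation_importance_mse(X_test, y_test)
for name, value in zip(feature_names, importances):
    print(f"{name:>10}: MSE increase = {value:.3f}")
```

Shuffling breaks the link between one feature and the target while leaving the rest of the data intact, so the error increase is attributable to that feature. Because the approach only needs predictions, it works for any fitted model, including those that produce no P-values.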

LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can be used to evaluate each feature’s contribution to individual predictions. These methods often provide more actionable insight than a P-value because they show how features drive the model's output.

Applications of P-Values in Machine Learning

Though limited, the P-value can be useful in machine learning, especially in statistical modelling and with smaller datasets.

- Model Diagnostics: The P-value can assess predictor importance in linear regression and logistic regression models. For example, in a regression model that predicts house prices, the P-value for the “square footage” variable indicates whether its association with price is statistically significant.

- A/B Testing: Machine learning is increasingly used in experimentation and A/B testing. The P-value tells us whether the difference between the treatment group and the control group is statistically significant.

- Biology and Medicine: Genomics, drug development, and epidemiology all use machine learning models to find biomarkers and therapeutic effects. P-values help gauge the strength of the evidence, for example whether an association between a genetic factor and a disease outcome is likely to be real or the result of random variation.
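The A/B testing case in the list above can be sketched as a two-proportion z-test. The conversion counts below are made up for illustration, and the pooled z-test is one common choice that assumes reasonably large groups; it is not the only valid test.

```python
import math


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided P-value for H0: both groups share one conversion rate."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Under H0 the best estimate of the shared rate pools both groups.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical results: control converts 200 of 5000, treatment 260 of 5000.
p_value = two_proportion_p_value(200, 5000, 260, 5000)
print(f"P-value for the difference in conversion rates: {p_value:.4f}")
```

A P-value below the chosen alpha suggests the difference in conversion rates is unlikely to be chance alone, but it says nothing about whether the size of the lift matters commercially.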

Conclusion

In statistics and machine learning, the P-value is important for assessing the significance of model features. However, its reliance on model assumptions and its vulnerability to multiple testing mean it is not a panacea. Combining statistical tools like the P-value with performance metrics and domain expertise helps practitioners make well-informed decisions.

P-values are valuable for statistical inference, especially when understanding the significance of specific features or relationships is critical. Machine learning practitioners, especially those working with complex models, may gain more meaningful insight from feature importance measures or model-agnostic explainability tools.