Data preparation has a large effect on machine learning model performance, especially where categorical variables are involved. Most machine learning algorithms require numerical representations of categorical features, and different kinds of categorical data call for different encoding methods. The encoding strategy affects how well a model learns and generalises. This article discusses the most common encoding methods in machine learning, their pros and cons, and the situations each suits best.

What are Encoding Techniques in Machine Learning?

Encoding techniques are methods for converting categorical input into a numerical format that algorithms can process. Most machine learning algorithms operate on numerical input and cannot directly handle categorical variables. Encoding therefore converts the categories into numbers, or numerical representations, that retain the information contained in the categorical features.

Simply put, encoding is the process of transforming non-numerical labels into numbers so that machine learning models can use the data to make predictions. The way categorical data is represented can significantly affect model performance, so choosing an appropriate encoding strategy is critical for accuracy and generalisation.

Why Is Encoding Important?

Compatibility with algorithms: Most machine learning algorithms, including linear regression and neural networks, as well as many decision tree implementations, require numerical input. Categorical data (strings, labels, etc.) must be converted into numbers before it can be used in these models.

Efficient representation: A suitable encoding represents large volumes of categorical data compactly while retaining the information the model needs.

Improved model performance: A good encoding technique helps the model pick up the underlying relationships in the data, allowing it to generate more accurate predictions.

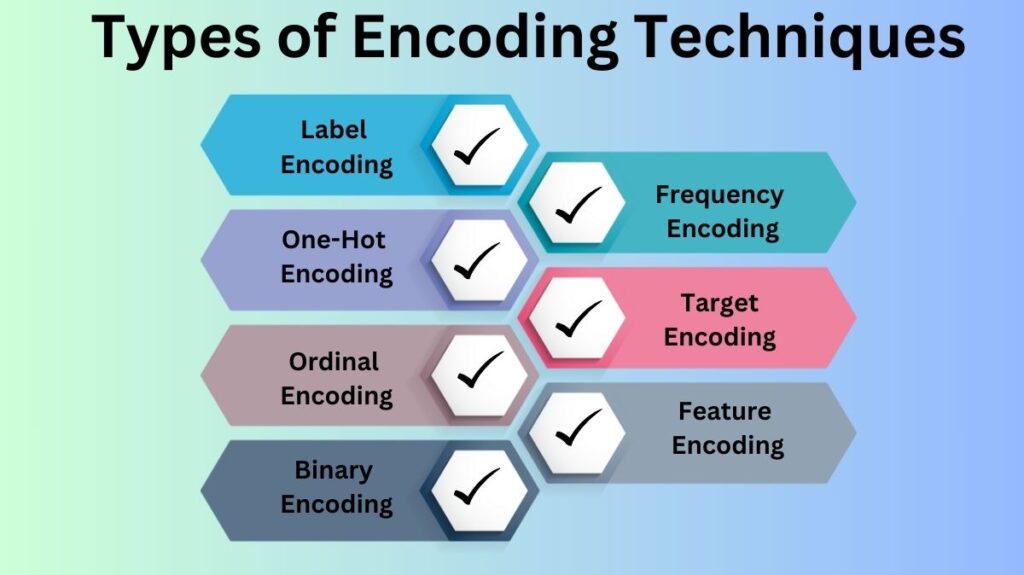

Types of Encoding Techniques

There are several different encoding techniques available, and the best one to use depends on the nature of the categorical data and the type of machine learning model being applied. Below are some of the most widely used encoding techniques.

Label Encoding Techniques

Label encoding is a straightforward way to convert categorical data to numbers. It assigns each category of a feature a unique integer and replaces the categories with those integers, producing a single numerical column.
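
As a minimal sketch of that mapping, the snippet below uses only the Python standard library. The helper name `label_encode` and the choice to number categories in sorted order are assumptions made for illustration; libraries may assign the integers differently.

```python
def label_encode(values):
    """Map each distinct category to an integer, numbering them in sorted order."""
    mapping = {cat: idx for idx, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


colours = ["Red", "Green", "Blue", "Green"]
encoded, mapping = label_encode(colours)
print(mapping)  # {'Blue': 0, 'Green': 1, 'Red': 2}
print(encoded)  # [2, 1, 0, 1]
```

Note that the integers here follow alphabetical order, which has nothing to do with any real ordering of the colours.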

Advantages:

- Simplicity: Label encoding is simple and computationally efficient; all it needs is a category-to-integer mapping.

- Efficient for ordinal data: Label encoding suits ordinal data, which has a natural ordering, provided the integers are assigned in that order. For example, customer satisfaction (Low, Medium, High) can be encoded as 0, 1, 2.

Disadvantages:

- Loss of meaning for nominal data: Label encoding imposes an artificial order on categories, which is a major problem. If we map Red, Green, and Blue to 0, 1, and 2, a model may mistakenly conclude that Blue is “numerically” greater than Green, and Green greater than Red. Categories in nominal data have no inherent order, so this ranking is spurious.

- Model bias: Models that treat inputs as magnitudes, such as linear and distance-based models, may misinterpret integer labels as quantitative relationships (such as Green being halfway between Red and Blue). Tree-based models are generally less sensitive to this, because they only split on thresholds.

Use Case:

- Label encoding is most effective for categorical variables with a natural order. Examples: education level (High School, Bachelors, Masters) or age group (Child, Teen, Adult).

One-Hot Encoding Techniques

One-hot encoding is a commonly used technique that converts each category of a feature into its own binary (0 or 1) column. For each row, the column belonging to that row's category is 1 and all the other columns are 0.
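
The following sketch shows the idea with plain Python lists. The helper `one_hot_encode` is hypothetical, and ordering the columns alphabetically is an assumption made here so that the output is reproducible.

```python
def one_hot_encode(values):
    """Return one 0/1 row per value, plus the category behind each column."""
    categories = sorted(set(values))
    rows = [[1 if v == cat else 0 for cat in categories] for v in values]
    return rows, categories


colours = ["Red", "Green", "Blue", "Green"]
rows, columns = one_hot_encode(colours)
print(columns)  # ['Blue', 'Green', 'Red']
for row in rows:
    print(row)
# [0, 0, 1]
# [0, 1, 0]
# [1, 0, 0]
# [0, 1, 0]
```

Every row contains exactly one 1, so no category is numerically "larger" than another.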

Advantages:

- No false ordering: One-hot encoding is suitable for nominal data since it does not impose any intrinsic order between the categories. Each category is treated individually, with no assumptions regarding relationships between them.

- Widely supported: Most machine learning models, including linear models, neural networks, and distance-based methods, can handle one-hot encoded data without issue.

Disadvantages:

- Increased dimensionality: When the categorical feature has many unique categories, one-hot encoding can create a high-dimensional dataset. For example, a feature with 100 categories produces 100 binary columns. This raises computational cost and can lead to the “curse of dimensionality”, where the feature space grows so large relative to the data that model performance suffers.

- Sparsity: Most values in the encoded dataset are zero. Stored densely, this wastes memory and slows processing, although sparse matrix formats reduce the cost.

Use Case:

- One-hot encoding is most effective for nominal features with few categories. Examples include Colour and Product Type, or Country and City when only a handful of distinct values occur.

Ordinal Encoding Techniques

Ordinal encoding is like label encoding, but intended specifically for ordinal data. Ordinal variables have a meaningful order, although the distances between categories may not be quantifiable. Ordinal encoding replaces each category with an integer indicating its rank.
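
A minimal sketch, assuming the caller supplies the ranking explicitly (the function name `ordinal_encode` and its `order` parameter are illustrative, not taken from any library):

```python
def ordinal_encode(values, order):
    """Replace each category with its position in the caller-supplied ranking."""
    rank = {cat: position for position, cat in enumerate(order)}
    # A value missing from `order` raises KeyError instead of being guessed.
    return [rank[v] for v in values]


satisfaction = ["Medium", "Low", "High", "Medium"]
encoded = ordinal_encode(satisfaction, order=["Low", "Medium", "High"])
print(encoded)  # [1, 0, 2, 1]
```

Passing the order in by hand is the point of the design: sorting the strings alphabetically would rank High below Low.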

Advantages:

- Maintains order: Ordinal encoding preserves the natural ranking of categories, which is important for ordinal data. If a feature represents Satisfaction with values Low, Medium, and High, the encoding lets the model see that satisfaction increases from one level to the next.

- Efficient: Like label encoding, ordinal encoding is computationally efficient and does not add dimensionality.

Disadvantages:

- Not suitable for nominal data: Use ordinal encoding only for ordinal data. Applying it to nominal data (data without an order) imposes a hierarchy or ranking that does not exist.

- Inflexibility: Ordinal encoding captures the order of the categories but not the size of the gaps between them. Consecutive integers imply equal spacing, so the encoding cannot reflect that the gap between Low and Medium may differ from the gap between Medium and High.

Use Case:

- Ordinal encoding is ideal for features with a clear order among the categories, such as Satisfaction Level, Education Level, and Income Range.

Binary Encoding Techniques

Binary encoding is a more compact alternative to one-hot encoding for high-cardinality categorical features. It assigns each category an integer and then writes that integer in binary, with a separate column for each binary digit. A feature with n categories therefore needs only about log2(n) columns rather than n.
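
The sketch below illustrates the two steps (integer assignment, then binary digits). The helper `binary_encode` is hypothetical, and numbering the categories from 0 in sorted order is an assumption; some implementations start from 1 so that no category is encoded as all zeros.

```python
def binary_encode(values):
    """Return one list of bits per value, plus the number of bit columns used."""
    categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    # Bits needed to represent the largest index (at least one column).
    width = max(1, (len(categories) - 1).bit_length())
    rows = [
        [(index[v] >> bit) & 1 for bit in reversed(range(width))]
        for v in values
    ]
    return rows, width


cities = ["Paris", "Tokyo", "Lima", "Oslo", "Cairo"]
rows, width = binary_encode(cities)
print(width)  # 3 columns, where one-hot encoding would need 5
for city, bits in zip(cities, rows):
    print(city, bits)
# Paris [0, 1, 1]
# Tokyo [1, 0, 0]
# Lima [0, 0, 1]
# Oslo [0, 1, 0]
# Cairo [0, 0, 0]
```

With the same scheme, a feature with 100 categories would need 7 columns.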

Advantages:

- Reduced dimensionality: Binary encoding uses far fewer columns than one-hot encoding, which reduces computational cost and mitigates the curse of dimensionality.

- Efficient for high-cardinality features: Binary encoding works well for features with many unique categories, where one-hot encoding would yield an unmanageable number of binary columns.

Disadvantages:

- Possible loss of interpretability: Binary encoding decreases dimensionality but makes features harder to read. A single column no longer corresponds to a single category, so the link between columns and categories is less direct than with one-hot encoding.

- Shared bits: Each category receives a unique binary code, so no two categories collide, but categories do share bit columns. Two unrelated categories may differ in only one bit, and a model may treat them as similar even though the shared bits carry no meaning.

Use Case:

- Binary encoding is ideal for high-cardinality categorical features like User IDs, Product Codes, and Zip Codes, where one-hot encoding is not feasible due to the vast number of unique categories.

Frequency Encoding Techniques

Frequency encoding replaces each category with how often it occurs in the dataset, either as a raw count or as a proportion of the rows.
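
A minimal sketch using `collections.Counter` from the standard library. The helper name `frequency_encode` and the choice of proportions rather than raw counts are assumptions made for illustration.

```python
from collections import Counter


def frequency_encode(values):
    """Replace each category with the share of rows in which it appears."""
    counts = Counter(values)
    total = len(values)
    return [counts[v] / total for v in values]


cities = ["London", "Paris", "London", "Rome", "London", "Paris"]
encoded = frequency_encode(cities)
# London appears in 3 of 6 rows, Paris in 2 of 6, Rome in 1 of 6.
print(encoded)
```

In practice the counts would normally be computed on the training data and then reused for new data, so that the encoding does not shift between training and prediction.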

Advantages:

- Efficient: Frequency encoding only needs the category counts, so it is computationally cheap.

- Works well for high-cardinality data: It produces a single column instead of many binary columns, which makes it attractive for high-cardinality features.

Disadvantages:

- Can introduce bias: The model may over-rely on how common a category is, which can lead to overfitting when frequency is not genuinely related to the target.

- Loss of categorical information: The identity of each category is lost in frequency encoding. Two categories with the same frequency receive the same encoded value, even if their relationships to the target variable differ.

Use Case:

- Frequency encoding is particularly beneficial for categorical features with a skewed distribution, such as Product ID, City, or Country, where one-hot encoding is impractical.

Target Encoding Techniques

Target encoding, also called mean encoding, replaces each category with the mean of the target variable over the rows in that category. This approach works well when the categorical feature and target variable are strongly correlated.
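
The sketch below computes per-category target means on training rows only and reuses them for new rows. The function names, the fallback to the global mean for unseen categories, and the optional `smoothing` parameter (which pulls small categories towards the global mean) are all assumptions added for illustration; none of them come from a specific library.

```python
from collections import defaultdict


def fit_target_encoding(categories, targets, smoothing=0.0):
    """Learn a category -> mean-target table from training rows only."""
    global_mean = sum(targets) / len(targets)
    sums = defaultdict(float)
    counts = defaultdict(int)
    for cat, y in zip(categories, targets):
        sums[cat] += y
        counts[cat] += 1
    # smoothing > 0 shrinks categories with few rows towards the global mean.
    table = {
        cat: (sums[cat] + smoothing * global_mean) / (counts[cat] + smoothing)
        for cat in counts
    }
    return table, global_mean


def apply_target_encoding(categories, table, global_mean):
    """Encode rows with the learned table; unseen categories get the global mean."""
    return [table.get(cat, global_mean) for cat in categories]


train_city = ["A", "A", "B", "B", "B", "C"]
train_price = [10.0, 20.0, 30.0, 30.0, 60.0, 50.0]
table, global_mean = fit_target_encoding(train_city, train_price)
print(table)  # {'A': 15.0, 'B': 40.0, 'C': 50.0}

# City "D" never appeared in training, so it falls back to the global mean.
test_encoded = apply_target_encoding(["A", "C", "D"], table, global_mean)
```

Keeping fitting and applying as separate steps makes it harder to compute the means on data that includes the test set by accident.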

Advantages:

- Captures relationships with the target: Target encoding gives the model direct information about how each category relates to the target variable.

- Reduced dimensionality: Target encoding avoids one-hot encoding’s high-dimensional feature space, which is useful for high-cardinality features.

Disadvantages:

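- Risk of overfitting: Because the encoding is computed from the target, it can leak target information into the features. Encoding the full dataset before splitting it into training and testing sets introduces test set information into the training process and inflates evaluation scores, so the encoding should be computed from the training data only.

- Bias: For rare categories the mean is estimated from only a few rows, so it is noisy and can be misleading, particularly when the target variable is heavily imbalanced.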

Use Case:

- Target encoding is ideal for features where the category has a predictive link to the target variable. For instance, City when predicting Price, or Product ID when predicting Sales.

Hashing Encoding Techniques

The hashing trick, or feature hashing, applies a hash function to each category and maps the hash value to an index in a fixed-length vector. For high-cardinality features, this caps dimensionality at a chosen size, at the cost of occasional collisions between categories.
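
As a rough sketch of the idea, the snippet below hashes each category into one of `n_buckets` positions. The helper `hash_encode`, the bucket count of 8, and the use of MD5 are illustrative assumptions; library implementations typically use other hash functions and may add refinements. A `hashlib` digest is used rather than the built-in `hash()` because Python randomises string hashes between runs.

```python
import hashlib


def hash_encode(value, n_buckets=8):
    """Map a category to a fixed-length 0/1 vector via a stable hash."""
    digest = hashlib.md5(value.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % n_buckets
    vector = [0] * n_buckets
    vector[bucket] = 1
    return vector


print(hash_encode("user_42"))  # 8 values, exactly one of them 1

# 20 distinct IDs cannot all get distinct vectors when there are only 8 buckets.
distinct = {tuple(hash_encode(f"user_{i}")) for i in range(20)}
print(len(distinct))  # at most 8, so some IDs share a bucket
```

The vector length stays at `n_buckets` however many categories appear, which is where both the memory saving and the collisions come from.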

Advantages:

- Handles high cardinality well: Features with very many categories benefit from feature hashing. The number of new columns is fixed in advance, which improves scalability.

- Memory-efficient: The representation has a fixed length regardless of the number of unique categories, and no category-to-index mapping has to be stored.

Disadvantages:

- Hash collisions: Different categories can hash to the same bucket, in which case the model cannot tell them apart and information is lost.

- Interpretability: The encoded features are harder to interpret than one-hot or label encoded ones, because a bucket cannot easily be traced back to the categories it contains.

Use Case:

- Feature hashing is ideal for high-cardinality features like IP Address, User ID, or Product Code, where one-hot or binary encoding would result in too many columns.

Conclusion

The right encoding technique depends on the machine learning model and the type of categorical data. In summary:

Label Encoding: Suited to ordinal data with a natural order.

One-Hot Encoding: For nominal data with few categories.

Ordinal Encoding: For ordinal data where the ranking is meaningful.

Binary Encoding: For high-cardinality features with many unique categories.

Frequency Encoding: Useful when how often a category occurs is itself informative.

Target Encoding: Best for features with a strong relationship to the target variable.

Hashing Encoding: For features with extremely high cardinality.

Understanding these encoding techniques and choosing the right one for the situation helps improve machine learning model performance and efficiency.