Principal Component Analysis (PCA) is a powerful technique in machine learning and data analysis. It reduces the number of variables in a dataset while retaining as much of the important information as possible. Dimensionality reduction is often necessary for visualizing, processing, and analyzing high-dimensional data. By uncovering patterns and structure in the data, PCA simplifies the dataset, which can improve computational efficiency, model performance, and interpretability. In this article, we explain what PCA is, what it aims to do, how it works, where it is applied, and why it matters in data science.

What is Principal Component Analysis?

Principal Component Analysis (PCA) transforms high-dimensional data into a lower-dimensional representation while preserving as much of its variability as possible. Rather than keeping some original features and discarding others, PCA constructs new variables, called principal components, each of which is a linear combination of the original features. The principal components are the directions (axes) along which the data varies the most: the first component captures the largest share of the variance, the second captures the largest share of the remaining variance while being orthogonal to the first, and so on. By projecting the data onto the first few components, PCA reduces dimensionality while retaining most of the information in the data.
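As a compact way to state this idea, assume a data matrix X with n samples (rows) and d features (columns) that has already been centered so that every column has mean zero. The first principal component can then be written as the unit vector w₁ that maximizes the variance of the projected data, and a short Lagrange-multiplier argument shows that it must be an eigenvector of the covariance matrix C:

```latex
C = \frac{1}{n-1} X^\top X,
\qquad
\mathbf{w}_1 = \arg\max_{\lVert \mathbf{w} \rVert = 1} \operatorname{Var}(X\mathbf{w})
             = \arg\max_{\lVert \mathbf{w} \rVert = 1} \mathbf{w}^\top C \mathbf{w}

% Setting the gradient of the Lagrangian to zero:
\nabla_{\mathbf{w}} \left[ \mathbf{w}^\top C \mathbf{w} - \lambda \left( \mathbf{w}^\top \mathbf{w} - 1 \right) \right]
  = 2 C \mathbf{w} - 2 \lambda \mathbf{w} = 0
\;\Longrightarrow\; C \mathbf{w} = \lambda \mathbf{w},
\qquad \mathbf{w}^\top C \mathbf{w} = \lambda

% Share of total variance explained by component k (eigenvalues sorted \lambda_1 \ge \dots \ge \lambda_d):
\text{explained variance ratio}_k = \frac{\lambda_k}{\sum_{j=1}^{d} \lambda_j}
```

So the variance along any unit eigenvector equals its eigenvalue, and the maximum is reached by the eigenvector with the largest eigenvalue λ₁. Each later component solves the same problem with the extra constraint of being orthogonal to the earlier ones, which yields the eigenvectors with the next-largest eigenvalues.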

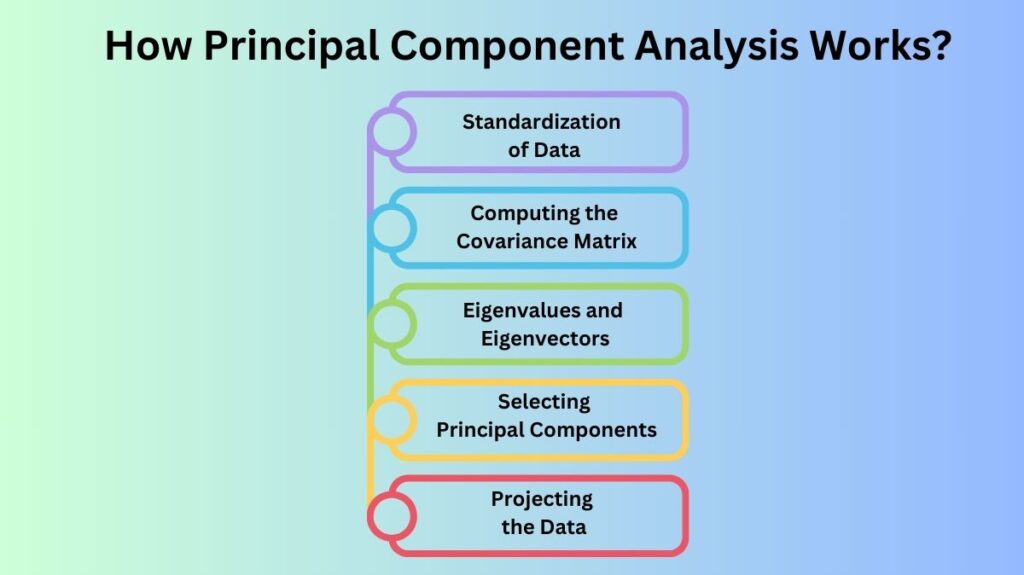

How Does Principal Component Analysis Work?

- Standardization of Data: PCA requires the data to be centered (zero mean), and it is usually also standardized, especially when features have different units or scales. Standardization rescales each feature to a mean of zero and a standard deviation of one. Without this step, features with large numeric ranges would dominate the variance, and therefore the principal components; after it, all features contribute on a comparable scale regardless of their original units.

- Computing the Covariance Matrix: After standardization, PCA computes the covariance matrix of the features. Each entry measures how strongly two features vary together: a large positive or negative covariance means the features tend to move together (or in opposite directions), while a covariance near zero means they are roughly linearly unrelated. For standardized data, the covariance matrix is the same as the correlation matrix. This matrix summarizes the linear relationships that PCA uses to find the directions of greatest variance.

- Eigenvalues and Eigenvectors: Next, PCA computes the eigenvalues and eigenvectors of the covariance matrix. The eigenvectors are the new axes (principal components) onto which the data will be projected, and each eigenvalue equals the variance of the data along its eigenvector. Directions with larger eigenvalues capture more of the data's structure. The eigenvectors are therefore sorted by eigenvalue in descending order: the eigenvector with the largest eigenvalue is the first principal component, the direction of maximum variance, followed by the eigenvector with the second-largest eigenvalue, and so on.

- Selecting Principal Components: PCA reduces dimensionality by keeping only a subset of the principal components. Instead of using all of them, it keeps the top k components, those that capture the most variance, which also lowers the computational cost of later steps. A common way to choose k is the cumulative explained variance: keep the smallest number of components whose combined variance reaches a target such as 90% or 95% of the total.

- Projecting the Data: Finally, the original (standardized) data is projected onto the selected principal components, which maps each sample from the original feature space into the lower-dimensional space spanned by those components. The resulting dataset has fewer dimensions, keeps most of the important information, and is easier to interpret and visualize.
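The five steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `pca`, its `variance_threshold` parameter (defaulting to 0.95), and the synthetic demo data are all hypothetical choices made for this example, and it assumes no feature is constant (a zero standard deviation would cause a division by zero). In practice, a library implementation such as scikit-learn's `sklearn.decomposition.PCA` is usually preferable.

```python
import numpy as np


def pca(X, variance_threshold=0.95):
    """Minimal PCA sketch via eigendecomposition of the covariance matrix.

    X: array of shape (n_samples, n_features); assumes no constant features.
    variance_threshold: target cumulative explained-variance ratio (hypothetical default).
    Returns (scores, components, explained_variance_ratios).
    """
    X = np.asarray(X, dtype=float)

    # Step 1: standardize each feature to zero mean and unit standard deviation.
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

    # Step 2: covariance matrix of the standardized features (n_features x n_features).
    C = np.cov(Z, rowvar=False)

    # Step 3: eigendecomposition. eigh handles symmetric matrices and returns
    # eigenvalues in ascending order, so re-sort them in descending order.
    eigvals, eigvecs = np.linalg.eigh(C)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # Step 4: keep the smallest k whose cumulative explained variance reaches the threshold.
    ratios = eigvals / eigvals.sum()
    k = int(np.searchsorted(np.cumsum(ratios), variance_threshold)) + 1
    k = min(k, len(ratios))  # guard against floating-point round-off near 1.0
    components = eigvecs[:, :k]  # each column is one principal direction

    # Step 5: project the standardized data onto the selected components.
    scores = Z @ components
    return scores, components, ratios


# Usage on hypothetical synthetic data: 5 features driven by 2 hidden factors plus noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
X_demo = latent @ rng.normal(size=(2, 5)) + 0.1 * rng.normal(size=(200, 5))

scores, components, ratios = pca(X_demo, variance_threshold=0.95)
k = components.shape[1]
print(f"kept {k} of 5 components, explaining {ratios[:k].sum():.1%} of the variance")
```

Using `np.linalg.eigh` rather than `np.linalg.eig` is a deliberate choice here: the covariance matrix is symmetric, and `eigh` exploits that to return real eigenvalues (in ascending order, hence the re-sort). The sign of each eigenvector is arbitrary, so the projected scores may come out negated relative to another implementation.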

Applications of PCA in Machine Learning

- Dimensionality Reduction: Dimensionality reduction is the most common use of PCA. Real-world datasets such as images, text, and sensor readings often have hundreds or thousands of attributes, which makes analysis and modeling computationally expensive and complex. PCA lets machine learning algorithms work with fewer features that still carry most of the important information. In image processing, for example, individual pixels are often treated as features; for large image datasets, such as those used for face recognition or object detection, PCA can represent each image with far fewer values while losing little information, which speeds up processing and can improve accuracy.

- Noise Reduction: Beyond reducing dimensionality, PCA can also reduce noise. Real-world datasets often contain irrelevant or redundant elements that weaken a model's predictive power. Such features add noise and can cause overfitting, where the model becomes too specialized to the training data and performs poorly on new data. By keeping only the principal components that explain the most variance, PCA filters out much of the noise, which is often spread across the low-variance components. This can improve model generalization and performance.

- Feature Extraction: PCA can also derive new features from existing ones. Each principal component is a linear combination of the original features, and the components are mutually uncorrelated, so PCA can turn a set of highly correlated features into uncorrelated ones that still capture the main patterns in the data. This is especially useful under multicollinearity, when two or more features are highly correlated. Transforming the data into principal components removes these linear correlations, so each new feature is uncorrelated with the others (though not necessarily statistically independent).

- Data Visualization: PCA is also useful for visualizing high-dimensional data. In exploratory data analysis (EDA), visualization helps reveal the structure of the data and the relationships within it. Data with hundreds or thousands of features cannot be plotted directly, since we can only plot two or three dimensions. By projecting the data onto its first two or three principal components, PCA makes 2D or 3D plots possible, and these plots can reveal clusters, outliers, and other patterns that are hard to see in the original high-dimensional space. In biology and finance, for example, PCA is used to visualize gene expression data and stock market patterns.

- Preprocessing for Other Algorithms: PCA is often used as a preprocessing step for other machine learning methods. Algorithms such as support vector machines (SVM), k-nearest neighbors (KNN), and linear regression can benefit from working with fewer features, so applying PCA before training can speed up and simplify model training. PCA can also act somewhat like regularization: discarding low-variance components removes some irrelevant variation and can reduce overfitting, which may improve performance on unseen data.
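The noise-reduction and feature-extraction ideas above can be illustrated with a small sketch. It assumes hypothetical synthetic data that truly lies near a 2-dimensional subspace of a 10-dimensional space, plus Gaussian noise; the sizes, the noise level, and the choice of k = 2 are illustrative assumptions. It computes the principal directions with a singular value decomposition (SVD) of the centered data, which gives the same directions as the covariance eigendecomposition described earlier, then (1) keeps the top-k component scores as extracted features and (2) reconstructs the data from them to filter out noise.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical data: 300 samples of 10 features that truly lie near a 2-D subspace,
# observed with additive Gaussian noise.
n, d, true_rank = 300, 10, 2
clean = rng.normal(size=(n, true_rank)) @ rng.normal(size=(true_rank, d))
noisy = clean + 0.3 * rng.normal(size=(n, d))

# Center the data (scaling is skipped because all synthetic features share the same units).
mean = noisy.mean(axis=0)
centered = noisy - mean

# SVD of the centered data: the rows of Vt are the principal directions, sorted by variance.
U, S, Vt = np.linalg.svd(centered, full_matrices=False)

k = 2
W = Vt[:k].T                      # d x k matrix of the top-k principal directions
scores = centered @ W             # extracted features (n x k)
denoised = scores @ W.T + mean    # reconstruction using only the top-k components

# Compare each version against the noise-free signal.
err_noisy = np.mean((noisy - clean) ** 2)
err_denoised = np.mean((denoised - clean) ** 2)
print(f"MSE vs. clean signal before: {err_noisy:.4f}, after: {err_denoised:.4f}")

# The extracted features are uncorrelated: their covariance matrix is diagonal.
cov_scores = np.cov(scores, rowvar=False)
print(np.round(cov_scores, 4))
```

On data like this, the reconstruction error should drop because most of the noise falls in the eight discarded directions. On real data the true dimensionality is not known in advance, so k would typically be chosen by cumulative explained variance instead.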

Advantages of Principal Component Analysis (PCA)

- Efficient Dimensionality Reduction: PCA efficiently reduces the number of features in a dataset while retaining most of the key information. By focusing on the most informative components, it lowers computational cost, especially for high-dimensional datasets.

- Improved Model Performance: By removing noise and redundant features, PCA can improve machine learning models. It can help models generalize better and avoid overfitting, improving prediction accuracy on new data.

- Data Visualization: PCA makes it possible to display complex, high-dimensional data in two or three dimensions. This helps analysts and data scientists understand the structure of the data and the relationships within it, revealing patterns, trends, and outliers.

Limitations of Principal Component Analysis (PCA)

- Linear Assumptions: PCA assumes that the important structure in the data lies along linear directions, which is not always true. If the relationships between features are non-linear, PCA may miss essential structure in the data. Non-linear methods such as t-Distributed Stochastic Neighbor Embedding (t-SNE) or autoencoders may be better suited for dimensionality reduction in such cases.

- Loss of Interpretability: Although PCA reduces dimensionality and reveals essential patterns, the new features (principal components) are linear combinations of the original features. Because they may not correspond to any meaningful concept in the data, they are harder to interpret. PCA may therefore not be the best choice when interpretability is critical.

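- Sensitivity to Outliers: PCA is sensitive to outliers. Because it seeks the directions of maximum variance, and variance is strongly influenced by extreme values, a few outliers can pull the principal components toward themselves and produce misleading results. Outliers should therefore be detected and handled appropriately before applying PCA.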
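The outlier sensitivity described above is easy to demonstrate. In this hypothetical example, 100 two-dimensional points are spread mostly along the x-axis, so the first principal component points roughly along x; adding just three extreme points far out along the y-axis is enough to swing it toward y. The helper `first_component` is defined here only for illustration and computes the direction via SVD of the centered data; the data and outlier positions are made-up assumptions.

```python
import numpy as np


def first_component(X):
    """Return the first principal direction (unit vector) of X via SVD of the centered data."""
    centered = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(centered, full_matrices=False)
    return Vt[0]


rng = np.random.default_rng(1)

# Hypothetical 2-D data stretched along the x-axis: std 3 along x, 0.5 along y.
X = rng.normal(size=(100, 2)) * np.array([3.0, 0.5])

# Add three extreme points far out along the y-axis.
outliers = np.array([[0.0, 40.0], [0.0, -40.0], [0.5, 45.0]])
X_out = np.vstack([X, outliers])

pc_clean = first_component(X)
pc_out = first_component(X_out)

# An eigenvector's sign is arbitrary, so compare directions with the absolute cosine.
cos = abs(pc_clean @ pc_out)
print("first PC without outliers:", np.round(pc_clean, 3))
print("first PC with outliers:   ", np.round(pc_out, 3))
print("angle between them (degrees):", round(float(np.degrees(np.arccos(min(cos, 1.0)))), 1))
```

Three points out of 103 are enough to rotate the first component by nearly 90 degrees, which is why outlier detection, or a robust variant of PCA, is worth considering before relying on the components.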

Conclusion

Principal Component Analysis (PCA) is used in machine learning for dimensionality reduction, noise reduction, feature extraction, and data visualization. By identifying the directions of greatest variance in the data, PCA simplifies complex datasets, can improve model performance, and makes data easier to interpret. Despite its assumption of linearity and its sensitivity to outliers, PCA is widely used for data analysis in fields such as image processing, finance, and biology.

To improve model efficiency and make data easier to manage, machine learning workflows commonly use PCA as a preprocessing step. Data scientists and machine learning practitioners who work with high-dimensional data should understand how PCA works and when to use it.