The Deep Convolutional Inverse Graphics Network (DC-IGN) is a neural network architecture designed to recover the underlying three-dimensional structure of scenes and objects from two-dimensional images. It aims to perform “inverse graphics”: inferring the parameters that govern how an image is generated from a particular scene or object.

What is Deep Convolutional Inverse Graphics Network (DC-IGN)?

DC-IGN is designed to understand the 3D world from 2D images. Its core idea is to learn a mapping from images to a set of latent variables that represent different geometric and appearance properties of the scene or object.

Examples of these latent variables include object shape, pose, lighting, and material properties. Having learnt this mapping, the network can generate new images from the learnt latent variables, showing the scene from other viewpoints, under other lighting conditions, or with other modifications.

History

The DC-IGN model was introduced in the paper “Deep Convolutional Inverse Graphics Network” by Tejas D. Kulkarni, William F. Whitney, Pushmeet Kohli, and Joshua B. Tenenbaum.

Kulkarni and Whitney, the first two authors, contributed equally to the work. The paper appeared in Advances in Neural Information Processing Systems 28 (NIPS 2015). It was first submitted to arXiv on March 11, 2015, and revised until June 22, 2015. The authors are affiliated with the Massachusetts Institute of Technology (MIT) and Microsoft Research (MSR Cambridge, UK).

DC-IGN builds on ideas from deep convolutional neural networks and variational autoencoders (Kingma and Welling). Earlier inverse graphics work often depended on hand-crafted rendering engines or on techniques that required pairs of images. DC-IGN instead aims to learn a semantically interpretable graphics code and an approximate 3D rendering engine automatically, and to reproduce images from single static frames.

Deep Convolutional Inverse Graphics Network Architecture

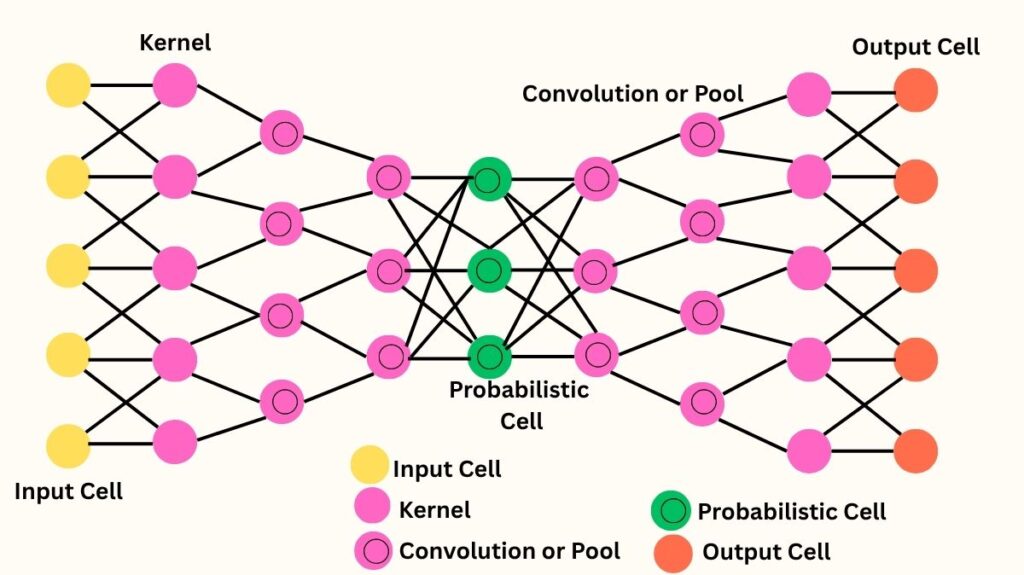

DC-IGN is built on deep convolutional neural networks (CNNs), which can capture hierarchical features in images. The model is made up of multiple layers of convolution and de-convolution operators.

It follows a variational autoencoder (VAE) architecture with some modifications. The basic structure has two main components:

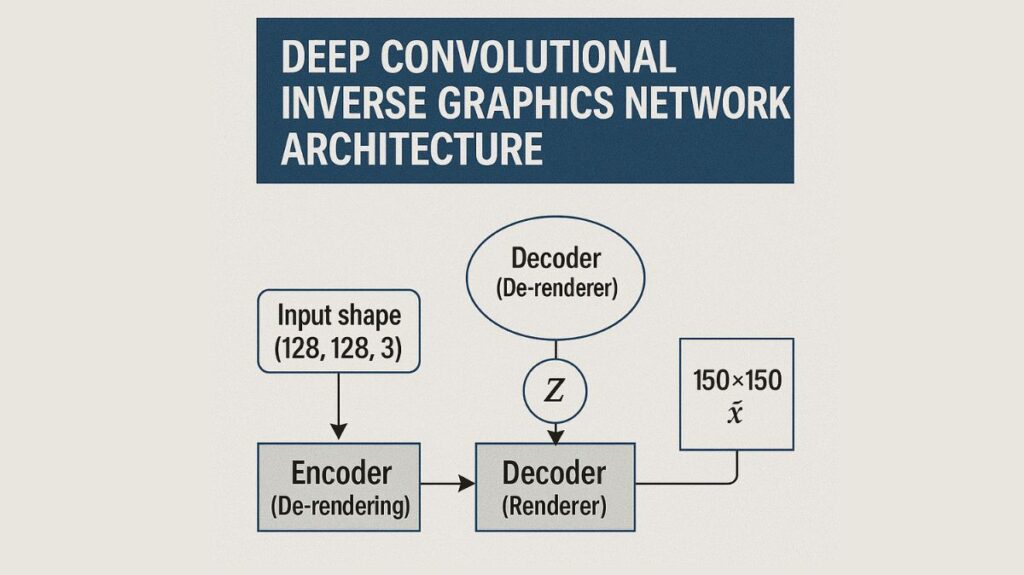

- Encoder (De-rendering): Given input image data (x), this network captures a distribution over graphics codes (Z). It consists of several convolutional layers, each followed by max-pooling.

- Decoder (Renderer): Given the graphics code (Z), this network learns a conditional distribution that approximates the image (x̂). It consists of several layers of unpooling (upsampling using nearest neighbours), each followed by convolution.

The decoder network converts a compact graphics code (for example, 200 dimensions) into a 150×150 image, using unpooling and convolution to manage the increase in dimensionality. A simplified Python implementation in TensorFlow/Keras might, for instance, use an input shape of (128, 128, 3) and a latent dimension of 16.
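
The growth from a small feature map to a 150×150 image can be traced without any deep learning library. The sketch below shows nearest-neighbour unpooling on a tiny grid and follows the spatial size through repeated unpooling and convolution stages. The starting size (24), the number of stages (3), the unpooling factor (2), and the kernel size (7, without padding) are assumptions chosen so that the arithmetic ends at 150; they are not taken from the paper.

```python
def unpool_nearest(grid, factor=2):
    """Nearest-neighbour unpooling: repeat each value factor x factor times."""
    out = []
    for row in grid:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def decoder_sizes(start=24, stages=3, factor=2, kernel=7):
    """Trace the spatial size through (unpool -> unpadded convolution) stages.

    All default values are illustrative assumptions.
    """
    sizes = [start]
    for _ in range(stages):
        size = sizes[-1] * factor   # unpooling enlarges the feature map
        size = size - (kernel - 1)  # an unpadded convolution trims the border
        sizes.append(size)
    return sizes


print(unpool_nearest([[1, 2], [3, 4]]))
print(decoder_sizes())  # [24, 42, 78, 150]
```

In a full model, a dense layer would first reshape the graphics code into the starting feature map; that step is omitted here.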

How Deep Convolutional Inverse Graphics Network works

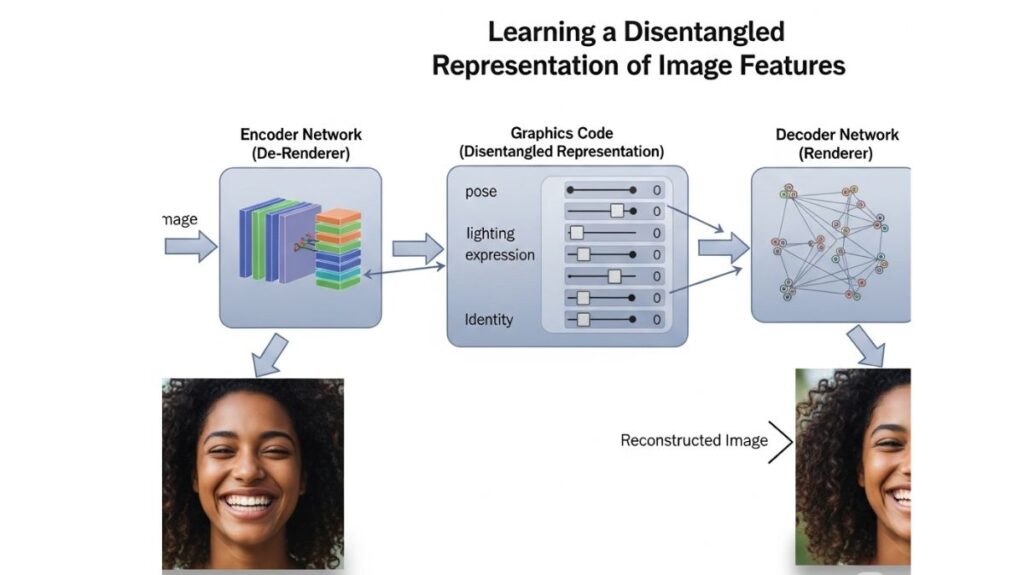

DC-IGN learns an image representation that is disentangled with respect to different transformations. This means that distinct factors of the image, such as pose, lighting, shape, and texture, are encoded in distinct, independent parts of the latent space.

Latent Variables

The network is trained to map images to a set of latent variables (zi ∈ Z) that encode scene attributes such as pose (e.g., elevation), light (e.g., azimuth of the light source), shape, and texture. These fall into two categories: extrinsic variables (such as pose and light) and intrinsic attributes (such as identity, shape, and expression).
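
One way to picture this split is as a fixed layout over the graphics code. The sketch below is a minimal illustration: the code size of 200, the choice of one unit per extrinsic variable, and the index positions are all assumptions made for the example, and the names `EXTRINSIC` and `split_code` are hypothetical.

```python
CODE_SIZE = 200  # assumed size of the graphics code

# Assumed layout: one unit per extrinsic variable, the rest intrinsic.
EXTRINSIC = {"azimuth": 0, "elevation": 1, "light_azimuth": 2}
INTRINSIC_START = len(EXTRINSIC)


def split_code(z):
    """Split a graphics code into named extrinsic values and intrinsic units."""
    if len(z) != CODE_SIZE:
        raise ValueError("expected a code of length %d" % CODE_SIZE)
    extrinsic = {name: z[index] for name, index in EXTRINSIC.items()}
    intrinsic = list(z[INTRINSIC_START:])
    return extrinsic, intrinsic


code = [0.0] * CODE_SIZE
code[EXTRINSIC["elevation"]] = 0.7
extrinsic, intrinsic = split_code(code)
print(extrinsic["elevation"], len(intrinsic))
```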

Training Process

- Objective: The goal is to learn a representation in which a real-world transformation changes only a small subset of the latent variables.

- Algorithm: The Stochastic Gradient Variational Bayes (SGVB) algorithm is used to train DC-IGN.

- Loss Function: During training, the network adjusts its parameters, and with them the latent variables it infers, to reduce the difference between the images it generates and the real images in the training set.
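
The training points above can be sketched as a standard variational autoencoder objective of the kind SGVB optimises. This is a minimal sketch, assuming a diagonal Gaussian posterior, a standard normal prior, and a squared-error reconstruction term; those choices follow the usual VAE formulation and are not confirmed details of DC-IGN. The function names are hypothetical.

```python
import math
import random


def sample_latent(mu, log_var, rng=random):
    """Reparameterisation trick: z = mu + sigma * eps with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL divergence from N(mu, sigma^2) to N(0, 1), summed over units."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))


def reconstruction_error(x, x_hat):
    """Sum of squared pixel differences between a real and a generated image."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def training_loss(x, x_hat, mu, log_var):
    """Quantity to minimise: reconstruction error plus the KL penalty."""
    return reconstruction_error(x, x_hat) + kl_to_standard_normal(mu, log_var)


z = sample_latent([0.0, 1.0], [0.0, -2.0])
print(len(z), training_loss([1.0, 0.0], [0.5, 0.0], [0.0, 1.0], [0.0, 0.0]))
```

Sampling through `mu + sigma * eps` keeps the sampled code differentiable with respect to the encoder outputs, which is what allows gradient-based training.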

Disentanglement Training approach

To make the network learn disentangled and interpretable representations, a novel training approach is used.

- Mini-Batch Selection: Training data is grouped into mini-batches in which only one scene variable changes at a time (only the intrinsic properties, only the elevation angle, only the azimuth angle, or only the azimuth of the light source). This mirrors how changes occur in the real world.

- Clamping: In the forward step, every latent component (zi) other than the one corresponding to the mini-batch’s changing variable (z_train) is forced to produce the same output for every sample in the batch. These outputs are called “clamped” because they signal that the other generating variables of the image are unchanged. As a result, the active neuron (z_train) is forced to explain all the variation within that batch.

- Backpropagation with Modified Gradients: In the backward step, the reconstruction-error gradient of the active latent variable (z_train) is passed through unchanged. The gradients of the clamped latent variables (zi ≠ z_train) are replaced with their difference from the batch mean of that variable, scaled down (e.g., by 1/100). This pulls the clamped outputs closer together and encourages invariance to transformations that are not present in the batch.

- Training Ratio: The type of batch is chosen at random, typically in a ratio of roughly 1:1:1:10 (azimuth : elevation : lighting : intrinsic), to account for the higher dimensionality of the intrinsic attributes.
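
The steps above can be sketched on plain lists of latent codes. This is an illustration under stated assumptions rather than the authors' implementation: the clamped value is taken to be the batch mean (consistent with the gradient rule, but an assumption here), the batch-type order is assumed so that the weight of 10 falls on the intrinsic batches, and all function names are hypothetical.

```python
import random

BATCH_TYPES = ["azimuth", "elevation", "light", "intrinsic"]
BATCH_WEIGHTS = [1, 1, 1, 10]  # roughly 1:1:1:10; the order is an assumption


def pick_batch_type(rng=random):
    """Choose which scene variable the next mini-batch will vary."""
    return rng.choices(BATCH_TYPES, weights=BATCH_WEIGHTS, k=1)[0]


def batch_mean(codes):
    """Per-unit mean of the latent codes over the mini-batch."""
    return [sum(column) / len(codes) for column in zip(*codes)]


def clamp_forward(codes, active):
    """Forward step: keep active units, set every other unit to its batch mean."""
    mean = batch_mean(codes)
    return [[z if i in active else mean[i] for i, z in enumerate(code)]
            for code in codes]


def modified_gradients(codes, recon_grads, active, scale=0.01):
    """Backward step: pass active gradients through unchanged and replace
    the rest with the scaled difference from the batch mean."""
    mean = batch_mean(codes)
    return [[g if i in active else scale * (z - mean[i])
             for i, (z, g) in enumerate(zip(code, grads))]
            for code, grads in zip(codes, recon_grads)]


codes = [[1.0, 10.0], [3.0, 20.0]]  # two samples, two latent units
active = {1}                        # unit 1 plays the role of z_train
print(pick_batch_type())
print(clamp_forward(codes, active))
print(modified_gradients(codes, [[5.0, 7.0], [5.0, 9.0]], active))
```

Under gradient descent, a gradient of `scale * (z - mean)` moves each clamped output toward the batch mean, which is how the rule pulls those outputs together.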

Operation (Test Phase)

At test time, the encoder network produces the latent variables (Z) from a single input image. These latent variables can then be modified independently and passed to the decoder to create new images, effectively re-rendering the original object or scene with a different pose, lighting, or other modifications, much like a conventional 3D graphics engine.
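
The test-time manipulation amounts to copying the encoded graphics code and changing one entry at a time. The sketch below builds such variants; the helper name `sweep_latent`, the index used for the pose unit, and the swept values are assumptions for illustration. Each variant would then be passed to the trained decoder, which is not defined here.

```python
def sweep_latent(code, index, values):
    """Return copies of `code` that differ from it only at position `index`."""
    variants = []
    for value in values:
        variant = list(code)  # copy, so the original code stays untouched
        variant[index] = value
        variants.append(variant)
    return variants


encoded = [0.5, 0.1, 0.9]  # stand-in for the encoder output of one image
POSE_UNIT = 0              # assumed position of the pose variable

for variant in sweep_latent(encoded, POSE_UNIT, [-1.0, 0.0, 1.0]):
    print(variant)         # each variant would be rendered by the decoder
```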

Benefits and Features

- Interpretable Representation: DC-IGN learns an interpretable representation of images. The representation is disentangled with respect to 3D scene structure and viewing transformations, such as out-of-plane object rotations, lighting changes, texture, and shape. A real-world transformation changes only a small part of the encoding.

- Novel View Generation: Using a single input image, the network may produce fresh images of the same object in various lighting conditions or from various angles.

- Approximate 3D Rendering Engine: Once trained, the decoder network serves as an approximate 3D rendering engine.

- Single Static Frame Input: Unlike some previous approaches that require pairs of photos as input, this method can handle single static images.

- Generative Model: DC-IGN is a generative model.

- High Equivariance: As a result of the training process, latent variables have a strong equivariance with relevant generating parameters, making it easy to recover the true generating parameters (such as a face’s true angle).

- Generalization: It can generalise to previously unseen objects, for example rendering chairs from previously unseen angles.
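
The equivariance point can be made concrete with a small fit. If a latent unit tracks a generating parameter closely, a simple model is enough to read the parameter back from the code. The sketch below fits a straight line by ordinary least squares; the linear form is an assumption, and the numbers are made-up toy values, not results from the paper.

```python
def fit_line(latents, angles):
    """Ordinary least squares fit of angle = slope * latent + intercept."""
    n = len(latents)
    mean_z = sum(latents) / n
    mean_a = sum(angles) / n
    cov = sum((z - mean_z) * (a - mean_a) for z, a in zip(latents, angles))
    var = sum((z - mean_z) ** 2 for z in latents)
    slope = cov / var
    return slope, mean_a - slope * mean_z


# Toy values: one latent unit and the angles (in degrees) it is assumed to encode.
toy_latents = [-1.0, 0.0, 1.0]
toy_angles = [-45.0, 0.0, 45.0]

slope, intercept = fit_line(toy_latents, toy_angles)
print(slope * 0.5 + intercept)  # estimated angle for a latent value of 0.5
```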

Disadvantages/Challenges

- Data Requirements: A significant amount of labelled training data is needed for DC-IGN and related methods.

- Network Design: To get significant outcomes, careful network design is essential.

- Complex 3D Structures: Accurately capturing complex 3D structures remains a difficulty for the networks.

- Occlusions: Managing occlusions is still difficult.

- Generalisation to Diverse Scenarios: It can be challenging to generalise to a wide range of scenes and objects.

- Speculation: When re-rendering an object seen from a single view, the model has to guess at parts it cannot see (for example, assuming a chair has arms when the arms are not visible).

- Keyframe vs. Smooth Transitions: For some unseen objects, it may learn only a “keyframes” representation instead of capturing smooth transitions between angles.

- Azimuth Discontinuity: At 0 degrees (looking directly forward), the encoder network’s representation of azimuth for faces exhibits a discontinuity.

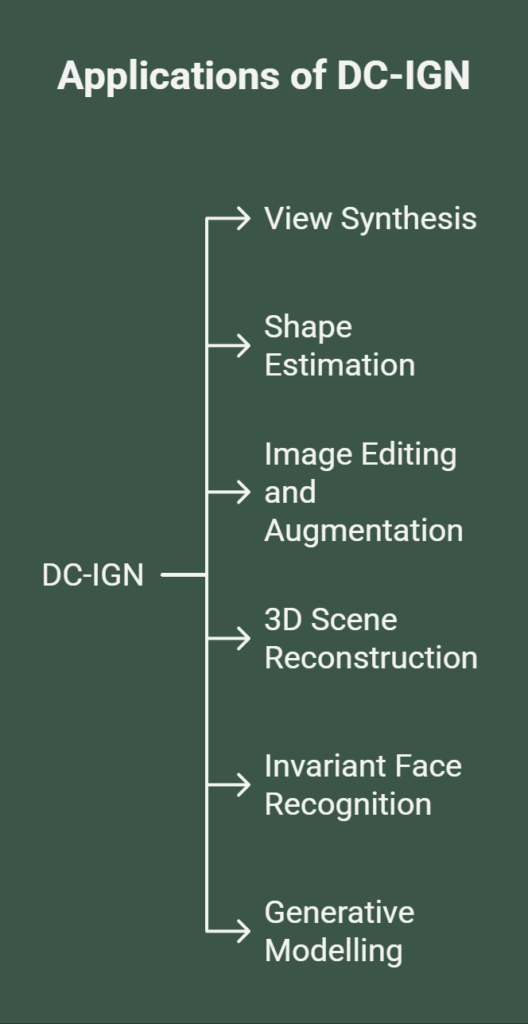

Applications of DC-IGN

DC-IGN and related inverse graphics networks are used in the following applications:

- View Synthesis: Using a single input image, this technique creates images of an object or scene from many perspectives.

- Shape Estimation: This technique, which is helpful for object recognition and scene comprehension, estimates the 3D shape of things from 2D images.

- Image Editing and Augmentation: By adjusting learnt latent variables, one may alter object positions, lighting, and other characteristics, allowing for image editing and training data augmentation.

- 3D Scene Reconstruction: When used in conjunction with other methods, this methodology helps to reconstruct 3D scenes from 2D photos.

- Invariant Face Recognition: The learnt representation can be used for face recognition that is unaffected by changes in illumination and pose.

- Generative Modelling: The learnt representation can serve as a summary statistic for generative modelling.
