Attention Mechanism

Neural network models use an attention mechanism to focus on the most relevant parts of the input sequence when producing an output sequence or making a prediction. The approach was originally developed to improve encoder-decoder (sequence-to-sequence) RNN models, which otherwise had to compress the entire input sequence into a single fixed-length vector before decoding.

Core Concept and Functionality

- The basic principle of attention is to assign each element of the input sequence a weight that indicates its relevance to the current processing step. These weights are typically computed with neural network layers, usually ending in a softmax, based on how relevant or similar each input element is to the model’s current state (for example, the decoder state in machine translation).

- To measure this relevance, attention mechanisms compute attention scores. Common scoring functions include dot-product, additive, and multiplicative attention. Dot-product attention, for example, scores each key by taking its dot product with the query vector.

- Once the scores are computed, a softmax function is typically applied to turn them into attention weights that are non-negative and sum to 1.

- A context vector is then computed as the weighted sum of the input elements, using the attention weights as coefficients. This vector represents the information from the input sequence that is most relevant to the current processing step.

This weighted-sum representation of a sequence of vectors is often called an attention mechanism, because the weights indicate how much attention each item in the input sequence receives relative to a particular item, such as the current query.
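The three steps above (score, normalize, weighted sum) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the vectors are made-up toy values, the helper names `softmax`, `dot`, and `attend` are hypothetical, and, as in classic RNN attention, the encoder states are used as both keys and values.

```python
import math


def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(query, keys, values):
    """Dot-product attention for one query; returns (context, weights)."""
    # 1. Score each input element by its similarity to the query.
    scores = [dot(query, k) for k in keys]
    # 2. Normalize the scores into attention weights.
    weights = softmax(scores)
    # 3. Context vector = attention-weighted sum of the value vectors.
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


# Toy example (hypothetical values): a decoder state attends over three
# encoder states, which serve as both keys and values.
decoder_state = [1.0, 0.0]
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
context, weights = attend(decoder_state, encoder_states, encoder_states)
print([round(w, 3) for w in weights])
print([round(c, 3) for c in context])
```

The first encoder state is most similar to the decoder state, so it receives the largest weight and dominates the context vector.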


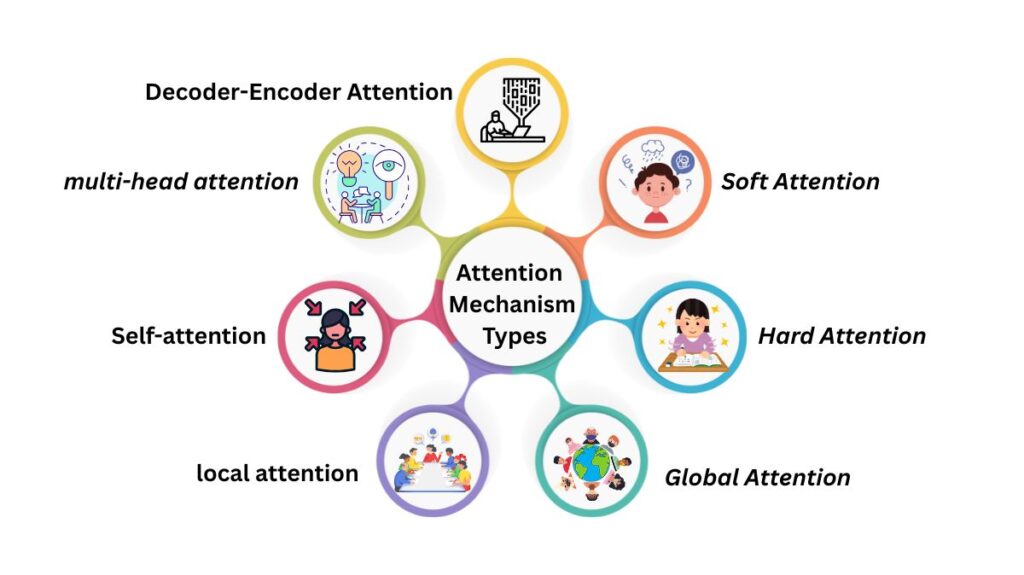

Attention Mechanism Types

Attention comes in several forms:

- Soft attention computes attention weights for every element in the input sequence, usually normalizing them with a softmax function. This lets the model consider the entire input sequence, with each element weighted differently. Soft attention is differentiable, so it can be trained with standard backpropagation. In general, soft attention models are global attention models.

- Hard attention selects a single element of the input sequence at each processing step, often by sampling from learned probabilities or by using heuristics. Because this discrete selection is not differentiable, hard attention is typically trained with reinforcement learning rather than plain backpropagation.

- Global attention considers the entire input sequence when computing attention weights. This allows more flexible alignment, but it requires a weight for every element, so the computational cost grows with sequence length; for self-attention over a sequence of length n, standard implementations need O(n^2) time and memory.

- Local attention restricts attention to a particular region, or window, of the input sequence. Focusing on a smaller subset of input elements reduces the computational load and improves efficiency on long sequences. Unlike global attention, it enforces a more constrained alignment.

- Self-attention, sometimes called intra-attention, is a form of attention in which each element of a sequence attends to the other elements of the same sequence. Unlike RNNs, which pass information step by step through recurrent connections, self-attention lets the network draw information directly from arbitrarily large contexts. This allows the model to learn the relationships between the words in a sentence.

- Self-attention works by projecting each input vector into three separate vectors: a query vector, a key vector, and a value vector. The query and key vectors determine the relative importance of the words (the attention weights), and the output embedding is formed by weighting and summing the value vectors.

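- A popular implementation, scaled dot-product attention, computes scores as the dot product of queries and keys divided by the square root of the key dimension d_k, that is, softmax(QK^T / sqrt(d_k)) V. The scaling keeps large dot products from pushing the softmax into regions with very small gradients.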

- Self-attention allows models to distinguish between different senses of the same word, such as homonyms, based on context.

- Multi-head attention, an extension of self-attention, splits the mechanism into several “heads.” Each head operates independently with its own learned parameters, letting the model attend to different aspects of the relationships between inputs at the same time. The outputs of all heads are concatenated and then passed through a final linear projection.

- Encoder-decoder attention, also known as cross-attention or source attention, computes attention between the decoder and the output of the encoder layers. The decoder supplies the queries, and the encoder output supplies the keys and values. This lets the decoder focus on the most relevant parts of the source sequence while generating the target sequence. It is one of the central ideas of neural machine translation.
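The difference between soft and hard attention in the list above can be sketched in a few lines of Python. This is an illustrative sketch only: the scores and value vectors are made up, the function names are hypothetical, and hard attention is shown by sampling one index from the attention distribution (taking the argmax is another common choice). How hard attention is trained, for example with reinforcement learning, is not shown.

```python
import math
import random


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(weights, values):
    # Soft: a differentiable weighted average over *all* value vectors.
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


def hard_attention(weights, values, rng):
    # Hard: pick exactly one element, here by sampling an index from the
    # attention distribution. This discrete choice has no useful gradient.
    idx = rng.choices(range(len(values)), weights=weights, k=1)[0]
    return values[idx], idx


scores = [2.0, 0.5, 1.0]  # hypothetical relevance scores
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights = softmax(scores)

soft = soft_attention(weights, values)
hard, picked = hard_attention(weights, values, random.Random(0))
print("soft:", [round(x, 3) for x in soft])
print("hard: element", picked, "->", hard)
```

The soft result blends all three value vectors, while the hard result is exactly one of them.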

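Self-attention with query, key, and value projections and scaled dot-product scoring can be sketched in plain Python as follows. The input vectors and projection matrices are hypothetical toy values (a trained model learns the projections), and helper names such as `scaled_dot_product_attention` are illustrative, not a library API. The same function also covers encoder-decoder (cross-)attention; only the source of the queries changes.

```python
import math


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(x - mx) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for row-wise matrices Q, K, V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    scores = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


# Hypothetical 3-token input with 4-dimensional embeddings.
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]

# Hypothetical projection matrices (4 -> 2). In a real model these are learned.
w_q = [[1, 0], [0, 1], [1, 0], [0, 1]]
w_k = [[0, 1], [1, 0], [0, 1], [1, 0]]
w_v = [[1, 0], [0, 1], [0, 0], [0, 0]]

# Self-attention: queries, keys, and values all come from the same sequence.
q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
out, attn = scaled_dot_product_attention(q, k, v)
print(len(out), "output vectors of size", len(out[0]))

# Cross-attention: queries from (hypothetical) decoder states,
# keys and values from the encoder-side sequence x.
dec = [[0.5, 0.5, 0.5, 0.5], [1.0, 0.0, 0.0, 1.0]]
cross_out, cross_attn = scaled_dot_product_attention(matmul(dec, w_q), k, v)
```

Each row of `attn` holds one token's attention weights over all tokens; `cross_attn` has one row per decoder position and one column per encoder position.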
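Multi-head attention can be sketched by running several independent attention heads and combining their outputs. In this sketch, assumed for illustration, each head's projection matrices are drawn at random instead of learned, and `multi_head_attention`, `num_heads`, and `d_head` are hypothetical names. The concatenated head outputs are mapped back to the model dimension by a final output projection.

```python
import math
import random


def matmul(a, b):
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def attention(q, k, v):
    """Scaled dot-product attention on row-wise matrices."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        mx = max(scores)
        exps = [math.exp(s - mx) for s in scores]
        total = sum(exps)
        w = [e / total for e in exps]
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def multi_head_attention(x, num_heads, d_head, rng):
    d_model = len(x[0])
    head_outputs = []
    for _ in range(num_heads):
        # Each head has its own (here randomly initialized) projections.
        w_q, w_k, w_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        head_outputs.append(attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    # Concatenate the heads' outputs for each position ...
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    # ... then apply the final output projection back to d_model.
    w_o = random_matrix(num_heads * d_head, d_model, rng)
    return matmul(concat, w_o)


x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]
y = multi_head_attention(x, num_heads=2, d_head=2, rng=random.Random(0))
print(len(y), "x", len(y[0]))  # one d_model-sized vector per input position
```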

Relationship with Other Architectures

Attention mechanisms were originally developed to improve RNN-based encoder-decoder models. However, they are also the core mechanism of the Transformer, as the title of the paper that introduced the architecture, “Attention Is All You Need,” emphasizes. Unlike RNNs, Transformers are non-recurrent networks that use self-attention to capture long-range dependencies efficiently. Because attention operates over sequences of word embeddings, Transformer networks can learn contextualized embeddings that are sensitive to a word’s surroundings.

Attention mechanisms are also related to memory networks and Neural Turing Machines, which involve selective access to internal or external memory. Reinforcement learning is sometimes used to train attention mechanisms, especially hard attention in settings such as recurrent models of visual attention.

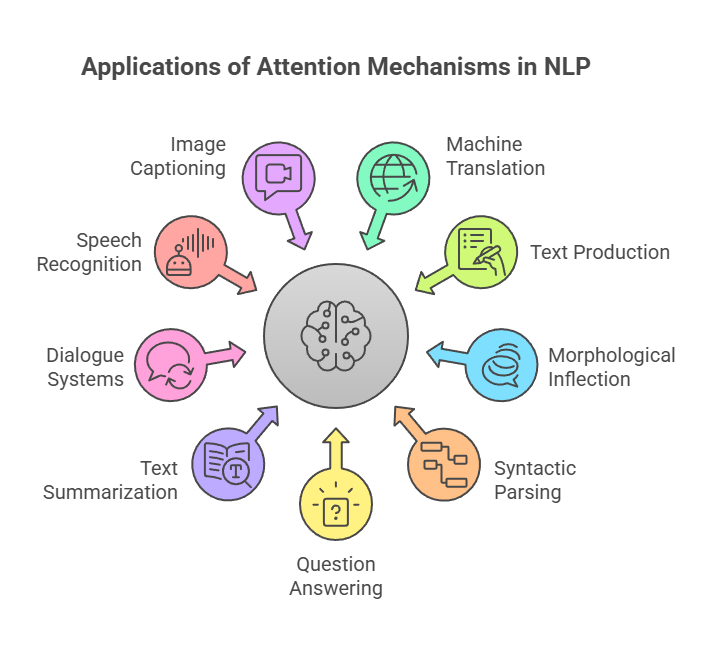

Attention Mechanism Applications in NLP

Attention mechanisms are used in many NLP tasks, including:

- Machine translation, particularly neural machine translation

- Text generation

- Morphological inflection

- Syntactic parsing

- Question answering

- Text summarization

- Dialogue systems and chatbots (for response generation)

- Speech recognition (in attention-based encoder-decoder (AED) models)

- Image captioning, which often combines visual and textual attention

Benefits and Challenges

Attention-based models are especially powerful for sequence-to-sequence tasks. Compared with conventional recurrent or convolutional architectures, they capture long-range dependencies and interactions between words more effectively. Interpretability is another important advantage: the attention weights can offer some insight into which parts of the input the model focused on when generating an output.

The main drawback of standard self-attention is its computational cost, which grows quadratically with sequence length and makes long documents expensive to process. Current research tries to make attention more efficient by changing the underlying operations, for example through linearized attention and sparse attention (e.g., global, band, dilated, random, and block-local attention patterns).
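Several of the sparse patterns named above can be expressed as boolean masks that say which query/key pairs are scored at all. The sketch below is illustrative only: the mask helpers and the window, dilation, and global-token choices are hypothetical, and it builds full n x n masks for clarity, whereas an efficient sparse implementation avoids materializing them. It simply counts how many pairs each pattern scores compared with full attention.

```python
def band_mask(n, w):
    # Band (sliding-window) pattern: token i attends to tokens within distance w.
    return [[abs(i - j) <= w for j in range(n)] for i in range(n)]


def add_global_tokens(mask, global_idx):
    # Global pattern: chosen tokens attend to, and are attended by, every token.
    n = len(mask)
    for g in global_idx:
        for j in range(n):
            mask[g][j] = True
            mask[j][g] = True
    return mask


def dilated_mask(n, w, d):
    # Dilated pattern: like a band, but only every d-th neighbor within w * d.
    return [[abs(i - j) <= w * d and (i - j) % d == 0 for j in range(n)]
            for i in range(n)]


def count_pairs(mask):
    return sum(sum(row) for row in mask)


n = 512
full = n * n  # full attention scores every pair: quadratic in n
band = count_pairs(band_mask(n, w=4))
mixed = count_pairs(add_global_tokens(band_mask(n, w=4), global_idx=[0]))
dilated = count_pairs(dilated_mask(n, w=4, d=2))
print("full:", full, "band:", band, "band+global:", mixed, "dilated:", dilated)
```

The band and dilated patterns score roughly a fixed number of pairs per token, so their cost grows linearly with n, while adding a global token costs about 2n extra pairs.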
