Let us discuss new developments, challenges, and ethical considerations in NLP.

New Developments in NLP

Dominance of Statistical and Machine Learning Approaches: Over the past few decades, statistical and empirical methods have largely replaced earlier rule-based or symbolic methods. Papers that use statistical methods now far outnumber those that do not. This paradigm, which relies mostly on supervised and unsupervised machine learning techniques, has become dominant.

Rise of Deep Learning: NLP has seen a “deep learning tsunami” since about 2014. Nonlinear neural network models over dense inputs have taken the lead in many applications because of their advantages over conventional linear models. These include architectures such as Convolutional Neural Networks (CNNs), which detect local patterns, and Recurrent Neural Networks (RNNs), especially LSTMs and GRUs, which are effective for sequence modelling and for capturing statistical regularities.

The Transformer Architecture and Large Language Models (LLMs): Transformer networks are a popular architecture, especially for language modelling. Large Language Models (LLMs), which are built on this architecture, show unprecedented capabilities in reasoning, generation, and complex problem-solving, and are regarded as revolutionary technologies that are transforming artificial intelligence. A significant trend is transfer learning from vast volumes of text data: pre-trained models such as BERT, T5, and BART are frequently fine-tuned for downstream applications.

Increased Use of Dense Representations and Embeddings: Dense vector representations (embeddings) of words, and even sentences, that are learnt from data are replacing sparse feature representations. Because these embeddings encode semantic similarity, they allow models to generalize to words that were not seen in training.
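To make the idea of embeddings encoding semantic similarity concrete, here is a minimal sketch that compares word vectors with cosine similarity. The three-dimensional vectors and the helper name `most_similar` are invented for illustration; real embeddings are learnt from data and typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical toy embeddings, NOT taken from any trained model.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

def most_similar(word, table):
    """Return the other word in `table` with the highest cosine similarity."""
    others = [w for w in table if w != word]
    return max(others, key=lambda w: cosine_similarity(table[word], table[w]))

nearest_to_cat = most_similar("cat", embeddings)
```

With these toy values, "cat" lands closer to "dog" than to "car", which is the kind of neighbourhood structure a model can exploit when it meets a word it has few labelled examples for.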

Growth in Text Generation Capabilities: Text generation with language models, particularly RNNs and Transformers, is an important area of impact. With commercially attractive uses such as report generation, it is viewed as part of the larger “generative AI” paradigm.

Focus on Higher-Level Semantic and Discourse Tasks: Lower-level tasks such as POS tagging are approaching human-level performance for several languages, but research continues on harder tasks that involve meaning and context. These include Semantic Role Labelling (SRL), coreference resolution, sentiment analysis, question answering (QA), Neural Machine Translation (NMT), discourse analysis, and information extraction (IE), in particular relation extraction. Ontology building and text-based learning are also gaining popularity.

Increased Multilinguality and Domain Adaptation: Languages other than English, many of which have distinct morphological and syntactic traits, are receiving more attention in the field. Research is ongoing on adapting systems to new domains and on building readily transferable technologies for low-resource languages.

Multimodal Natural Language Processing: Integrating language processing with other modalities, such as audio, images, and structured data, is a growing field. Speech recognition combined with NLP has advanced significantly.

Application-Driven Research and Evaluation: Research is increasingly driven by practical applications and their real-world impact. Shared tasks and evaluation campaigns (such as CoNLL, TREC, CLEF, and NTCIR), supported by government and industry, help advance specific tasks and encourage reproducible results.

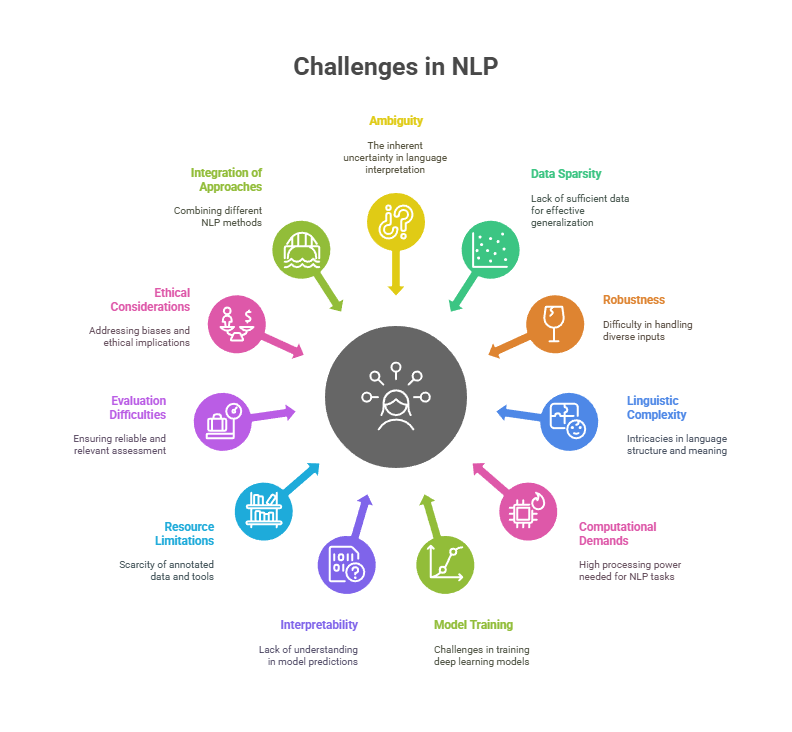

Challenges in NLP

Inherent Ambiguity of Language: Natural language is hard for machines to interpret because it is ambiguous at several levels (lexical, syntactic, semantic, and pragmatic). Resolving these ambiguities is one of the main challenges.
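As a small illustration of lexical ambiguity, the sketch below picks a sense for "bank" by counting the words a context shares with each sense's dictionary-style gloss. This is a heavily simplified variant of gloss-overlap (Lesk-style) disambiguation, swapped in here purely as an example; the glosses, the stopword list, and the function name `disambiguate` are all hypothetical.

```python
# Small, hand-picked stopword list (an assumption; real systems use larger ones).
STOPWORDS = {"the", "a", "an", "of", "or", "and", "to", "that", "on", "he", "she"}

def content_words(text):
    """Lower-case, whitespace-tokenize, and drop stopwords."""
    return {w for w in text.lower().split() if w not in STOPWORDS}

def disambiguate(context, sense_glosses):
    """Return the sense whose gloss shares the most content words with the context."""
    context_set = content_words(context)

    def overlap(sense):
        return len(context_set & content_words(sense_glosses[sense]))

    return max(sense_glosses, key=overlap)

# Hypothetical glosses for two senses of "bank".
bank_senses = {
    "financial": "an institution that accepts deposits and lends money",
    "river": "the sloping land beside a river or stream of water",
}

sense_1 = disambiguate("she sat on the bank of the river watching the water", bank_senses)
sense_2 = disambiguate("he went to the bank to borrow money", bank_senses)
```

The same surface word resolves to different senses depending on its neighbours. Simple overlap counting breaks down quickly on real text, which is part of why ambiguity remains a core challenge.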

Data Sparsity and Generalization: Handling rare or unseen words and structures (for example, out-of-vocabulary, or OOV, words) is a recurring issue, particularly for low-resource languages or when corpora differ. Despite their strength, neural networks usually need a lot of labelled data, whereas humans can generalize from a small number of examples. It is still difficult to generalize across domains and to make effective use of large volumes of unlabelled data together with small quantities of labelled data.
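The OOV problem can be sketched in a few lines. The example below builds a vocabulary from a tiny training text, measures how many test tokens fall outside it, and maps those tokens to a placeholder. The `<unk>` token name, the `min_count` cutoff, and the two sentences are assumptions chosen for illustration.

```python
from collections import Counter

UNK = "<unk>"  # placeholder for out-of-vocabulary tokens (a common convention)

def build_vocab(tokens, min_count=2):
    """Keep only tokens seen at least `min_count` times in training."""
    counts = Counter(tokens)
    return {tok for tok, count in counts.items() if count >= min_count}

def oov_rate(tokens, vocab):
    """Fraction of tokens that are not in the vocabulary."""
    if not tokens:
        return 0.0
    return sum(tok not in vocab for tok in tokens) / len(tokens)

def replace_oov(tokens, vocab):
    """Map every out-of-vocabulary token to the UNK placeholder."""
    return [tok if tok in vocab else UNK for tok in tokens]

train_tokens = "the cat sat on the mat the cat slept".split()
test_tokens = "the dog sat on the cat".split()

vocab = build_vocab(train_tokens, min_count=2)
rate = oov_rate(test_tokens, vocab)
mapped = replace_oov(test_tokens, vocab)
```

Even in this toy setting, half of the test tokens are unknown to the model. Collapsing them into one placeholder keeps the system running but discards exactly the information that distinguishes those words.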

Lack of Robustness and Portability: Systems frequently perform badly on input that differs from their training data (other genres, styles, or domains). Porting a system to a new language or domain takes a lot of work and specialist knowledge.

Complexity of Linguistic Phenomena: Despite advances, it is still difficult to capture the full nuance of linguistic phenomena. Examples include complex morphology, difficult syntactic structures and non-local dependencies, complex discourse structure and cohesion, multiword expressions, world knowledge, and subtle semantic relations.

Computational Demands: Large-scale NLP systems, particularly deep learning models and LLMs, need substantial processing power to train and deploy. Long sequences and large vocabularies are still difficult to handle efficiently. Further speedups will require a move to parallel computing architectures.

Training Deep Learning Models: Algorithmic developments such as batch normalization and improved optimizers have helped, but problems remain: overfitting, vanishing and exploding gradients in deep networks, difficulty converging, identifying optimal parameter settings, and training instability.
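The gradient problem can be illustrated with simple arithmetic. During backpropagation the gradient that reaches early layers is a product of per-layer factors, so it shrinks or grows roughly geometrically with depth. The sketch below assumes, as a deliberate simplification, that every layer scales the gradient by the same scalar factor; the function name and the chosen factors are hypothetical.

```python
def backprop_gradient_scale(per_layer_factor, depth):
    """Gradient magnitude after passing back through `depth` layers,
    assuming each layer multiplies it by the same scalar factor."""
    scale = 1.0
    for _ in range(depth):
        scale *= per_layer_factor
    return scale

# Factors below 1 shrink the gradient, factors above 1 blow it up.
for factor in (0.5, 1.0, 1.5):
    print(f"factor={factor}: scale after 50 layers = "
          f"{backprop_gradient_scale(factor, depth=50):.3e}")
```

A factor of 0.5 leaves almost no signal after 50 layers, while a factor of 1.5 produces a very large one. Real networks have matrix-valued factors that vary per layer, but the same compounding effect is what makes deep and recurrent models hard to train.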

Interpretability and Lack of Rigorous Theory: Deep learning models are sometimes called “black boxes” because their learnt representations are opaque, which makes it hard to understand why they produce particular predictions. Many of the effective architectures and learning algorithms are also known to lack sound theoretical foundations.

Resource Limitations and Standardization: Many languages and specialised domains lack the large annotated corpora and extensive lexical resources that are available for languages like English. It is still difficult to create appropriate tools and generally accepted standards for creating, annotating, and using corpora. Because language is productive, the lexical acquisition bottleneck (automatically keeping dictionaries and knowledge bases up to date) remains significant.

Evaluation Difficulties: It can be difficult to ensure reproducible results and to define rigorous, meaningful evaluation measures, particularly for complex generation or understanding tasks. Methods developed for a particular evaluation setup may not generalise well.

Ethical Considerations and Bias: NLP applications risk reproducing and amplifying biases found in their training data, especially when data-driven approaches are used. In applications such as search, translation, or coreference resolution, this may lead to unfair or harmful outcomes. Particularly in sensitive fields like BioNLP, it is essential to ensure software quality and to consider the possible harms of badly designed systems. Research on biases and the ethical use of NLP is ongoing and crucial.

Integration of Approaches: Hybrid approaches are becoming more popular, but work remains to bridge the gap between deep processing of restricted domains and shallow processing of unrestricted text, and to properly integrate different methodologies (e.g., statistical/deep learning methods with symbolic/linguistic knowledge).

In conclusion, the field is currently propelled by the rapid growth of deep learning and statistical techniques, especially the development and use of massive transformer models, which has produced advances in tasks such as translation and generation. However, research and development is still driven by the fundamental difficulties posed by the ambiguity and complexity of human language, as well as practical problems such as data availability, computational cost, model interpretability, robustness, and ethical considerations.

Ethical Considerations in NLP

As NLP systems become more advanced and more widely used in real-world applications, their ethical challenges are increasingly recognized. As machine learning and artificial intelligence grow more ubiquitous, it is crucial to understand how the risks, costs, and benefits are distributed across different groups.

The following are ethical issues:

- Replication and Amplification of Bias: NLP systems risk reproducing and amplifying biases in their training data, especially when they use data-driven techniques like machine learning. These biases may also come from biased human labellers, biased resources such as lexicons or pretrained embeddings, or even the model architecture itself. For instance, word embeddings have been shown to encode implicit associations, such as gender and racial stereotypes, that are present in human thinking. Studies using GloVe vectors and cosine similarity have reproduced known implicit associations (e.g., African-American names associated with negative terms, or female names associated more with family terms). This encoding of biases in embeddings highlights the risk that NLP will reproduce and amplify prejudices present in text and in the world. If models encode prejudices (such as the idea that women are unlikely to be programmers), software may fail to interpret correctly text that challenges stereotypes. These biases may cause applications to produce unfair or harmful results. For example, current systems may struggle with pronoun resolution in texts that defy gender conventions. Machine translation systems have also been shown to perform poorly when translating text that describes non-stereotypical gender roles. When large pretrained language models are adapted for tasks like text generation, potential harms may be realised, such as the generation of harmful language or the reinforcement of prejudices.

- Safety and Harm: Poorly constructed NLP applications can harm real people, particularly in sensitive areas like biomedical text mining (BioNLP), health-care applications, and dialogue systems. In dialogue systems, giving the wrong advice in safety-critical scenarios (such as medical advice, emergencies, or signs of self-harm) can be dangerous and even fatal. According to one study, commercial dialogue systems’ responses to medical questions could have caused injury or even death had they been followed. Negative outcomes are also possible for applications such as robot nurses. Assigning confidence levels to outputs and developing metrics that capture what systems “don’t know” are open problems that are essential for ensuring safety, especially in critical settings like legal or medical translation.

- Privacy and Monitoring: Large-scale text processing may affect the right to free and private communication. In home settings, dialogue agents may overhear confidential information. When interacting with human-like chatbots, users may disclose confidential information more readily and underestimate the possible consequences. Systems trained on human conversations require anonymisation of personally identifiable information.

- Access and Fairness: Another ethical question is who NLP technology is intended for. This includes which languages are translated to and from, and whether the benefits of the technology reach everyone whose labour (such as supplying text or annotating it) makes it possible. NLP may also be used in surveillance or censorship regimes.

- Inability to Interpret: Deep learning models are sometimes called “black boxes” because it can be hard to understand the reasoning behind their predictions. This opacity can make biases harder to recognise and address.

- Moral Conundrums in Design: Even the design of system objectives can give rise to ethical dilemmas. For example, in reinforcement learning, agents may obtain rewards through unanticipated or unwanted behaviour, mirroring human problems that arise from simple, greedy learning principles. Dialogue systems also raise questions of gender equality: many chatbots in use today have female names, which may reinforce stereotypes. Another major concern is how systems respond to toxic or abusive language.
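The embedding-bias findings in the first item above rest on comparing cosine similarities between word vectors. The sketch below shows the basic idea with a simplified association score: how much closer a target vector is, on average, to one attribute set than to another. This is not the exact statistic used in the published studies, and the two-dimensional vectors are invented for illustration rather than taken from GloVe.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def mean_similarity(vec, attribute_vecs):
    """Average cosine similarity between `vec` and a set of attribute vectors."""
    return sum(cosine(vec, a) for a in attribute_vecs) / len(attribute_vecs)

def association(vec, attrs_a, attrs_b):
    """Positive if `vec` is closer to set A, negative if closer to set B."""
    return mean_similarity(vec, attrs_a) - mean_similarity(vec, attrs_b)

# Hypothetical toy vectors; NOT real embedding values.
career_terms = [[1.0, 0.1], [0.9, 0.2]]
family_terms = [[0.1, 1.0], [0.2, 0.9]]
name_x = [0.8, 0.3]
name_y = [0.3, 0.8]

score_x = association(name_x, career_terms, family_terms)
score_y = association(name_y, career_terms, family_terms)
```

In this toy setup `score_x` is positive and `score_y` is negative, meaning the two names sit nearer to different attribute sets. A systematic gap of this kind across groups of names is the sort of signal that bias audits of embeddings look for.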

Research on addressing these problems is ongoing and important. It includes work on “debiasing” machine learning and NLP models, encouraging documentation such as model cards and datasheets that describe the data and potential problems, pursuing value-sensitive design by carefully weighing potential harms in advance, developing metrics to assess system uncertainty, and working with bodies such as Institutional Review Boards (IRBs) in studies involving human subjects.
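One simple way to act on system uncertainty is to let a classifier abstain when it is not confident. The sketch below turns raw scores into probabilities with softmax and returns no label when the top probability falls under a threshold. The function names, the labels, and the 0.8 threshold are assumptions for illustration, and softmax probability is only a crude proxy for confidence that is often poorly calibrated in practice.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_or_abstain(scores, labels, threshold=0.8):
    """Return (label, confidence), or (None, confidence) when below threshold."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None, probs[best]
    return labels[best], probs[best]

labels = ["safe_to_answer", "refer_to_human"]  # hypothetical label set

confident = predict_or_abstain([5.0, 0.0], labels)
uncertain = predict_or_abstain([0.1, 0.0], labels)
```

Here the first call returns a label because one score clearly dominates, while the second abstains. In a safety-critical dialogue system, an abstention could trigger a handoff to a human rather than a guessed answer.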