Entity Linking in NLP

What is Entity Linking?

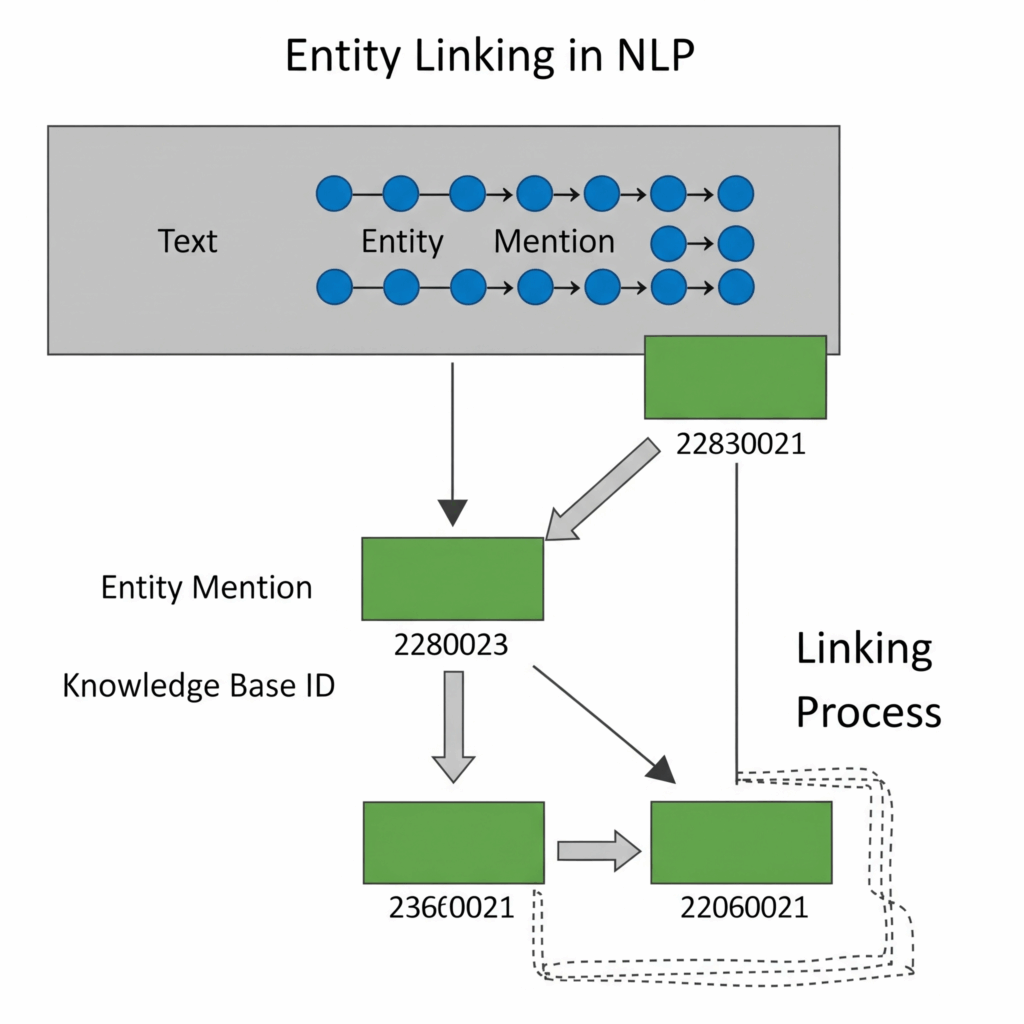

Entity linking is a natural language processing task that connects a textual mention to an ontology’s representation of a real-world entity. The challenge is to find the precise real-world entity that a mention refers to, that is, to map discourse entities onto entities in the world. Whereas coreference resolution groups text spans that refer to the same discourse entity, entity linking goes one step further and links that discourse entity to a canonical entry in a knowledge base. Entity linking can be used in conjunction with named entity tagging and coreference resolution.

The aim of entity linking is to link textual mentions to entities in a knowledge base. The linking target is usually an ontology, a catalogue of the entities in the world. In tasks such as factual question answering, Wikipedia is a popular ontology, with each page acting as a unique identifier for a specific entity. Entity linking can, however, also be done in more “closed” settings using smaller, predetermined lists of entities. Wikipedia also serves as the foundation for many knowledge bases, including DBpedia and Freebase. Finally, an entity linking system must be able to recognize when a mention refers to an entity that is not in the knowledge base; such mentions are often called NIL entities.
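
NIL detection can be illustrated with a minimal sketch, assuming a hypothetical alias table that maps lowercased surface forms to candidate entity IDs with made-up prior scores. A mention with no candidates, or whose best candidate scores below an arbitrary threshold (`min_score`, also an assumption), is labelled NIL. Real systems usually learn this decision rather than hard-coding a threshold.

```python
from typing import Dict

NIL = "NIL"

# Hypothetical alias table: lowercased surface form -> {entity id: prior score}.
# The scores are made-up numbers for illustration only.
ALIAS_TABLE: Dict[str, Dict[str, float]] = {
    "washington": {"Washington_(state)": 0.45, "Washington,_D.C.": 0.40, "George_Washington": 0.15},
    "atlanta": {"Atlanta": 0.90, "Atlanta,_Texas": 0.10},
}


def link_or_nil(mention: str, min_score: float = 0.3) -> str:
    """Return the best candidate entity for `mention`, or NIL if none is good enough."""
    candidates = ALIAS_TABLE.get(mention.lower(), {})
    if not candidates:
        return NIL  # no knowledge base entry matches this surface form
    entity, score = max(candidates.items(), key=lambda item: item[1])
    return entity if score >= min_score else NIL  # low confidence is also treated as NIL


print(link_or_nil("Atlanta"))                    # → Atlanta
print(link_or_nil("Zorblax"))                    # → NIL
print(link_or_nil("Washington", min_score=0.5))  # → NIL
```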

What is entity linking used for?

Improved Comprehension:

By connecting entity mentions to their knowledge base counterparts, NLP systems can understand text better.

Better Information Extraction:

Applications such as knowledge graph generation and question answering depend on the ability to extract structured information from unstructured text, which entity linking makes possible.

More Effective Search and Suggestion:

Identifying the specific entities in queries and documents makes search results more accurate and relevant and enables personalized recommendations.

Semantic Analysis:

Applications such as sentiment analysis and content suggestion require deeper semantic analysis, which entity linking makes possible.

What is the Process of Entity Linking?

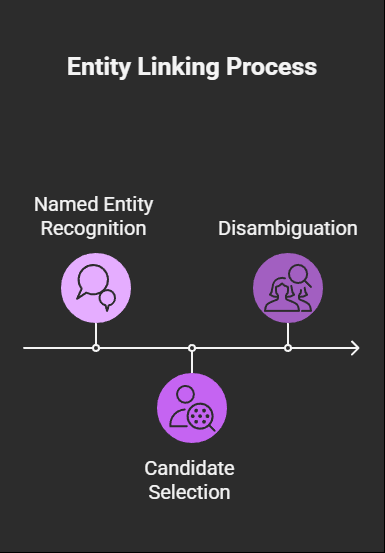

Named Entity Recognition:

The first step in the process is to find and extract any mentions of entities from the text.

Candidate Selection:

Next, the system selects a set of candidate knowledge base entries that might match each entity mention.

Disambiguation:

The system then selects the most likely candidate from the set, weighing text similarity, context, and other signals to identify the correct entity.
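
The three steps can be sketched as a toy pipeline. This is an illustration under strong simplifying assumptions, not a production design: the knowledge base (`KB`) and alias table (`ALIASES`) are hypothetical, mention detection is a plain dictionary lookup standing in for a real NER model, and disambiguation simply picks the candidate whose description shares the most words with the input text.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical toy knowledge base: entity id -> short description.
KB: Dict[str, str] = {
    "Washington_(state)": "state in the pacific northwest bordering oregon and idaho",
    "Washington,_D.C.": "capital city of the united states on the potomac river",
    "George_Washington": "first president of the united states and army general",
}

# Hypothetical alias table: surface form -> candidate entity ids.
ALIASES: Dict[str, List[str]] = {
    "washington": ["Washington_(state)", "Washington,_D.C.", "George_Washington"],
}


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z]+", text.lower())


def detect_mentions(text: str) -> List[str]:
    # Step 1 (stand-in for NER): keep tokens that appear in the alias table.
    return [tok for tok in tokenize(text) if tok in ALIASES]


def select_candidates(mention: str) -> List[str]:
    # Step 2: every knowledge base entry this surface form may refer to.
    return ALIASES.get(mention, [])


def disambiguate(candidates: List[str], context: List[str]) -> str:
    # Step 3: pick the candidate whose description overlaps most with the context.
    ctx = set(context)
    return max(candidates, key=lambda e: len(ctx & set(tokenize(KB[e]))))


def link(text: str) -> List[Tuple[str, str]]:
    context = tokenize(text)
    return [(m, disambiguate(select_candidates(m), context)) for m in detect_mentions(text)]


print(link("Washington borders Oregon and Idaho in the Pacific Northwest."))
# → [('washington', 'Washington_(state)')]
print(link("Washington was the first president of the United States."))
# → [('washington', 'George_Washington')]
```

Word overlap is only a placeholder for the disambiguation signals mentioned above; the later sections on feature-based and neural methods describe what real systems use instead.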

Entity Linking Applications

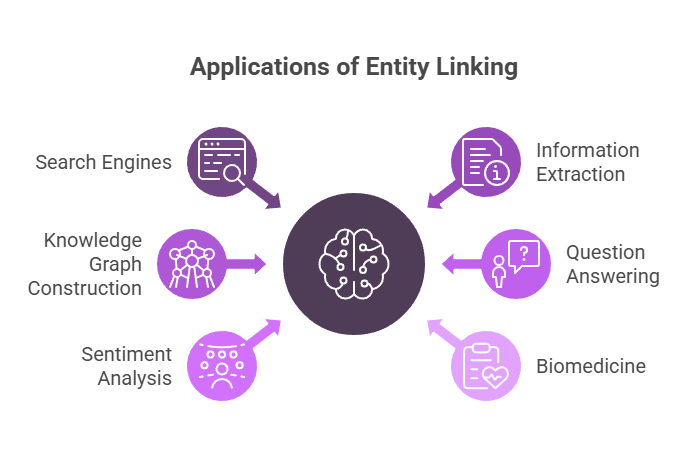

Search Engines:

Entity linking helps search engines understand user intent and filter out irrelevant content, so they can deliver more relevant results.

Information Extraction:

Entity linking makes it possible to extract structured information from text and to discover relationships between entities.

Knowledge Graph Construction:

Building knowledge graphs requires entity linking, which connects mentions in text to the corresponding knowledge base entries.

Question Answering:

It aids in context comprehension and helps question answering systems choose the right responses to user enquiries.

Sentiment Analysis:

Sentiment analysis systems can analyze the sentiment of a text more accurately when they have a deeper understanding of the entities being discussed.

Application in Biomedicine:

By linking different terms for the same concept to a common set of entities, entity linking helps standardize and normalize terminology in the biomedical domain.

Entity linking is often divided into two main phases: mention detection and mention disambiguation.

Mention Detection: This is the process of locating text spans that might refer to entities. Named Entity Recognition (NER) systems are frequently used as the first stage for finding candidate mentions, since they recognize many kinds of proper names (such as people, organizations, and places).

Mention Disambiguation: Once candidate mentions have been found, this step resolves ambiguous references by associating each one with the correct knowledge base entity. Highly ambiguous mentions include “Washington,” which can refer to a US state, the nation’s capital, or George Washington himself, and “Atlanta,” which might refer to several towns, cities, or other entities.

Entity Linking Methods

There are various methods for entity linking:

Classic Baseline Methods:

These early techniques frequently relied on anchor dictionaries derived from Wikipedia, which map the anchor text of Wikipedia hyperlinks to the entities those links point to. For example, the TAGME algorithm finds possible mentions by looking up token sequences from the input text in such a dictionary. To disambiguate, it combines the prior probability that an anchor refers to a particular entity (based on how often that anchor links to that entity in Wikipedia) with the candidate entity’s coherence, or relatedness, to the other entities mentioned in the text (measured by the in-links their Wikipedia pages share).
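
A simplified scorer in the spirit of TAGME might combine these two signals as sketched below. It is not TAGME itself (TAGME uses a more elaborate voting scheme among mentions); the anchor counts, in-link sets, `TOTAL_PAGES`, and the mixing weight `alpha` are all hypothetical. Relatedness is computed with the Milne-Witten measure over shared in-links.

```python
import math
from typing import Dict, List, Set

# Hypothetical anchor statistics: anchor text -> {entity: number of Wikipedia links using it}.
ANCHOR_COUNTS: Dict[str, Dict[str, int]] = {
    "washington": {"Washington_(state)": 40, "Washington,_D.C.": 50, "George_Washington": 10},
}

# Hypothetical in-link sets: entity -> ids of the pages that link to it.
IN_LINKS: Dict[str, Set[str]] = {
    "Washington_(state)": {"p1", "p2", "p3", "p4"},
    "Washington,_D.C.": {"p5", "p6", "p7"},
    "George_Washington": {"p7", "p8"},
    "Oregon": {"p1", "p2", "p3", "p9"},
}
TOTAL_PAGES = 1000  # assumed number of pages in the toy Wikipedia


def commonness(anchor: str, entity: str) -> float:
    """Prior probability that `anchor` links to `entity`."""
    counts = ANCHOR_COUNTS[anchor]
    return counts.get(entity, 0) / sum(counts.values())


def relatedness(a: str, b: str) -> float:
    """Milne-Witten relatedness from shared in-links (0 if none are shared)."""
    links_a, links_b = IN_LINKS[a], IN_LINKS[b]
    shared = len(links_a & links_b)
    if shared == 0:
        return 0.0
    big, small = max(len(links_a), len(links_b)), min(len(links_a), len(links_b))
    distance = (math.log(big) - math.log(shared)) / (math.log(TOTAL_PAGES) - math.log(small))
    return max(0.0, 1.0 - distance)


def score(anchor: str, entity: str, context_entities: List[str], alpha: float = 0.5) -> float:
    """Blend the prior with the average relatedness to the other mentions' entities."""
    coherence = (
        sum(relatedness(entity, other) for other in context_entities) / len(context_entities)
        if context_entities
        else 0.0
    )
    return alpha * commonness(anchor, entity) + (1 - alpha) * coherence


def best_entity(anchor: str, context_entities: List[str]) -> str:
    return max(ANCHOR_COUNTS[anchor], key=lambda e: score(anchor, e, context_entities))


print(best_entity("washington", []))          # → Washington,_D.C. (prior only)
print(best_entity("washington", ["Oregon"]))  # → Washington_(state)
```

With no context the prior alone favours the capital; once “Oregon” appears in the same text, the shared in-links pull the decision toward the state.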

Feature-Based Approaches:

Feature-based approaches frequently frame entity linking as a ranking problem: given a mention, a classifier assigns a score to each candidate entity from the knowledge base. Typical ranking features include:

- String similarity between the mention text and the candidate entity’s canonical name.

- The entity’s popularity, which can be estimated from sources such as Wikipedia page views or PageRank in the Wikipedia link graph.

- The entity type predicted for the mention by an NER system.

- Document context: the similarity between the text surrounding the mention and the candidate entity’s knowledge base description, computed with bag-of-words or word embeddings.
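
A linear scorer over these four features could look like the sketch below. The `Candidate` fields, the type labels, and the hand-set `WEIGHTS` are hypothetical; a real feature-based system would learn the weights from labelled data and use richer features. String similarity uses Python’s `difflib.SequenceMatcher`, and context similarity is a bag-of-words cosine.

```python
import math
from collections import Counter
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List


@dataclass
class Candidate:
    entity_id: str
    name: str          # canonical name in the knowledge base
    entity_type: str   # e.g. "PERSON" or "LOC" (hypothetical label set)
    page_views: int    # popularity signal
    description: str   # knowledge base description text


def cosine_bow(a: str, b: str) -> float:
    """Cosine similarity between bag-of-words count vectors."""
    ca, cb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0


def features(mention: str, ner_type: str, context: str, cand: Candidate, pop_norm: float) -> List[float]:
    return [
        SequenceMatcher(None, mention.lower(), cand.name.lower()).ratio(),  # string similarity
        math.log1p(cand.page_views) / pop_norm,                              # popularity in [0, 1]
        1.0 if cand.entity_type == ner_type else 0.0,                        # NER type agreement
        cosine_bow(context, cand.description),                               # document context
    ]


# Hypothetical hand-set weights; a real system would learn them.
WEIGHTS = [1.0, 0.5, 1.0, 2.0]


def rank(mention: str, ner_type: str, context: str, candidates: List[Candidate]) -> List[str]:
    """Return candidate entity ids sorted from best to worst linear score."""
    pop_norm = math.log1p(max(c.page_views for c in candidates)) or 1.0
    scored = []
    for cand in candidates:
        f = features(mention, ner_type, context, cand, pop_norm)
        scored.append((sum(w * x for w, x in zip(WEIGHTS, f)), cand.entity_id))
    return [entity_id for _, entity_id in sorted(scored, reverse=True)]


candidates = [
    Candidate("George_Washington", "George Washington", "PERSON", 1000,
              "first president of the united states and army general"),
    Candidate("Washington,_D.C.", "Washington, D.C.", "LOC", 5000,
              "capital city of the united states"),
]
print(rank("Washington", "PERSON", "the first president led the army", candidates))
# → ['George_Washington', 'Washington,_D.C.']
```

Here the capital wins on popularity and string similarity, but the type agreement and context features outweigh them for this sentence.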

Neural Approaches:

More recent techniques use embeddings, that is, distributed vector representations, for entities, mentions, and context. Algorithms such as ELQ produce entity embeddings by encoding the text of Wikipedia pages, including the title and description. Mention spans in the input text are also encoded (for example, by averaging the token embeddings). The likelihood of a link is then scored by the similarity between an entity embedding and a mention span embedding, frequently computed as a dot product. Neural models can be trained end-to-end, with suitable loss functions that optimize mention detection and entity linking jointly.
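
The dot-product scoring step can be sketched with toy vectors. The 3-dimensional token and entity embeddings below are invented for illustration (a system like ELQ would produce them with trained encoders). The mention embedding is the average of its token embeddings, scores are turned into probabilities with a softmax, and the hypothetical `linking_loss` shows a cross-entropy loss such a model could be trained with.

```python
import math
from typing import Dict, List

Vector = List[float]

# Hypothetical token embeddings; a trained encoder would produce these.
TOKEN_EMB: Dict[str, Vector] = {
    "george": [0.9, 0.1, 0.0],
    "washington": [0.5, 0.5, 0.2],
    "state": [0.0, 0.9, 0.3],
}

# Hypothetical entity embeddings, e.g. from encoding each page's title and description.
ENTITY_EMB: Dict[str, Vector] = {
    "George_Washington": [1.0, 0.0, 0.1],
    "Washington_(state)": [0.0, 1.0, 0.2],
}


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def encode_span(tokens: List[str], start: int, end: int) -> Vector:
    """Mention embedding: the average of the token embeddings in tokens[start:end]."""
    vectors = [TOKEN_EMB[t] for t in tokens[start:end]]
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def link_probs(mention_vec: Vector) -> Dict[str, float]:
    """Dot-product scores turned into a softmax distribution over entities."""
    scores = {e: dot(mention_vec, v) for e, v in ENTITY_EMB.items()}
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {e: math.exp(s - top) for e, s in scores.items()}
    total = sum(exps.values())
    return {e: x / total for e, x in exps.items()}


def linking_loss(mention_vec: Vector, gold_entity: str) -> float:
    """Cross-entropy loss for one mention: -log p(gold entity)."""
    return -math.log(link_probs(mention_vec)[gold_entity])


person = encode_span(["george", "washington"], 0, 2)  # roughly [0.7, 0.3, 0.1]
place = encode_span(["washington", "state"], 0, 2)
print(max(link_probs(person), key=link_probs(person).get))  # → George_Washington
print(max(link_probs(place), key=link_probs(place).get))    # → Washington_(state)
```

Because entity embeddings do not depend on the input, they can be precomputed once, which is the main practical appeal of this dot-product design.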

Collective Entity Linking:

This approach links all of the mentions in a document jointly instead of handling each one separately. It assumes that the other entities in the document offer strong contextual cues for disambiguation (for example, references to “California,” “Oregon,” and “Washington” together suggest that “Washington” refers to the state, while references to “Baltimore,” “Washington,” and “Philadelphia” suggest the city). To capture this, collective approaches introduce a compatibility score between the candidate entities in a document.

There are several ways to quantify this compatibility, including probabilistic topic models, the dot product of entity embeddings, the number of in-links shared by Wikipedia pages, and shared Wikipedia categories. Collective entity linking is computationally hard (NP-hard) in general, so it usually relies on approximate inference methods.
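
The Washington example can be reproduced with a brute-force sketch that maximizes the sum of each mention’s local score plus the pairwise compatibility of the chosen entities. The priors and categories are hypothetical, and compatibility is measured here as the Jaccard overlap of shared categories, one of the options listed above. Exhaustive search is exponential in the number of mentions, which is why practical systems fall back on approximate inference.

```python
from itertools import product
from typing import Dict, List, Set

# Hypothetical local (prior) scores: mention surface form -> {candidate entity: score}.
CANDIDATES: Dict[str, Dict[str, float]] = {
    "California": {"California": 1.0},
    "Oregon": {"Oregon": 1.0},
    "Baltimore": {"Baltimore": 1.0},
    "Philadelphia": {"Philadelphia": 1.0},
    "Washington": {"Washington,_D.C.": 0.6, "Washington_(state)": 0.4},
}

# Hypothetical Wikipedia-style categories for each entity.
CATEGORIES: Dict[str, Set[str]] = {
    "California": {"US state", "West Coast"},
    "Oregon": {"US state", "West Coast"},
    "Washington_(state)": {"US state", "West Coast"},
    "Baltimore": {"US city", "East Coast"},
    "Philadelphia": {"US city", "East Coast"},
    "Washington,_D.C.": {"US city", "East Coast", "US capital"},
}


def compatibility(a: str, b: str) -> float:
    """Jaccard overlap of the two entities' categories."""
    ca, cb = CATEGORIES[a], CATEGORIES[b]
    return len(ca & cb) / len(ca | cb)


def collective_link(mentions: List[str]) -> Dict[str, str]:
    """Pick the joint assignment maximizing local scores plus pairwise compatibility."""
    best: Dict[str, str] = {}
    best_score = float("-inf")
    options = [list(CANDIDATES[m].items()) for m in mentions]
    for combo in product(*options):  # exponential in len(mentions)
        entities = [entity for entity, _ in combo]
        local = sum(score for _, score in combo)
        pairwise = sum(
            compatibility(entities[i], entities[j])
            for i in range(len(entities))
            for j in range(i + 1, len(entities))
        )
        if local + pairwise > best_score:
            best_score = local + pairwise
            best = dict(zip(mentions, entities))
    return best


print(collective_link(["California", "Oregon", "Washington"])["Washington"])
# → Washington_(state)
print(collective_link(["Baltimore", "Washington", "Philadelphia"])["Washington"])
# → Washington,_D.C.
```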

Entity linking example

Training and evaluating supervised entity linking systems requires datasets in which mentions are manually annotated and linked to knowledge base entities. GraphQuestions/GraphQEL and WebQuestionsSP/WebQEL are two examples of such datasets.
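
Systems are commonly compared on such datasets with precision, recall, and F1 over predicted links. The sketch below assumes a strict matching convention, where a prediction counts only if its document, span offsets, and entity all match a gold annotation; benchmarks differ in their exact matching rules, so treat this as one possible setup. The annotations are hypothetical.

```python
from typing import Set, Tuple

# (document id, start offset, end offset, entity id)
Link = Tuple[str, int, int, str]


def precision_recall_f1(predicted: Set[Link], gold: Set[Link]) -> Tuple[float, float, float]:
    """Micro-averaged scores; a link is correct only if span and entity both match."""
    true_pos = len(predicted & gold)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical annotations for two questions.
gold = {("q1", 0, 10, "George_Washington"), ("q2", 8, 15, "Atlanta")}
predicted = {
    ("q1", 0, 10, "George_Washington"),      # correct
    ("q2", 8, 15, "Atlanta,_Texas"),         # right span, wrong entity
    ("q2", 20, 27, "Georgia_(U.S._state)"),  # spurious mention
}
p, r, f = precision_recall_f1(predicted, gold)
print(f"P={p:.2f} R={r:.2f} F1={f:.2f}")  # → P=0.33 R=0.50 F1=0.40
```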

Entity linking is essential for many NLP applications, especially knowledge-based question answering and information extraction, where it supports tasks such as knowledge base population and slot filling. It can also be used in dialogue systems, and it can aid coreference resolution by providing additional information for distinguishing mentions.