What is a Gated Recurrent Unit?

Gated Recurrent Units (GRUs) are a particular kind of Recurrent Neural Network (RNN) architecture, designed to overcome some of the drawbacks of more basic RNNs. They can be regarded as one concrete realization of the general RNN concept.

What are GRUs used for?

GRUs and Long Short-Term Memory (LSTM) networks were developed to address the vanishing and exploding gradient problems that make basic RNNs hard to train, especially on tasks that require the model to capture long-range dependencies in sequential data. With vanishing gradients in particular, signals from early in a sequence may fail to influence later steps. LSTMs and GRUs mitigate these problems but do not eliminate them: performance can still degrade on very long sequences. Gated architectures such as GRUs are widely considered the largest contribution of deep learning to the statistical natural language processing toolkit.

How Do GRUs Work?

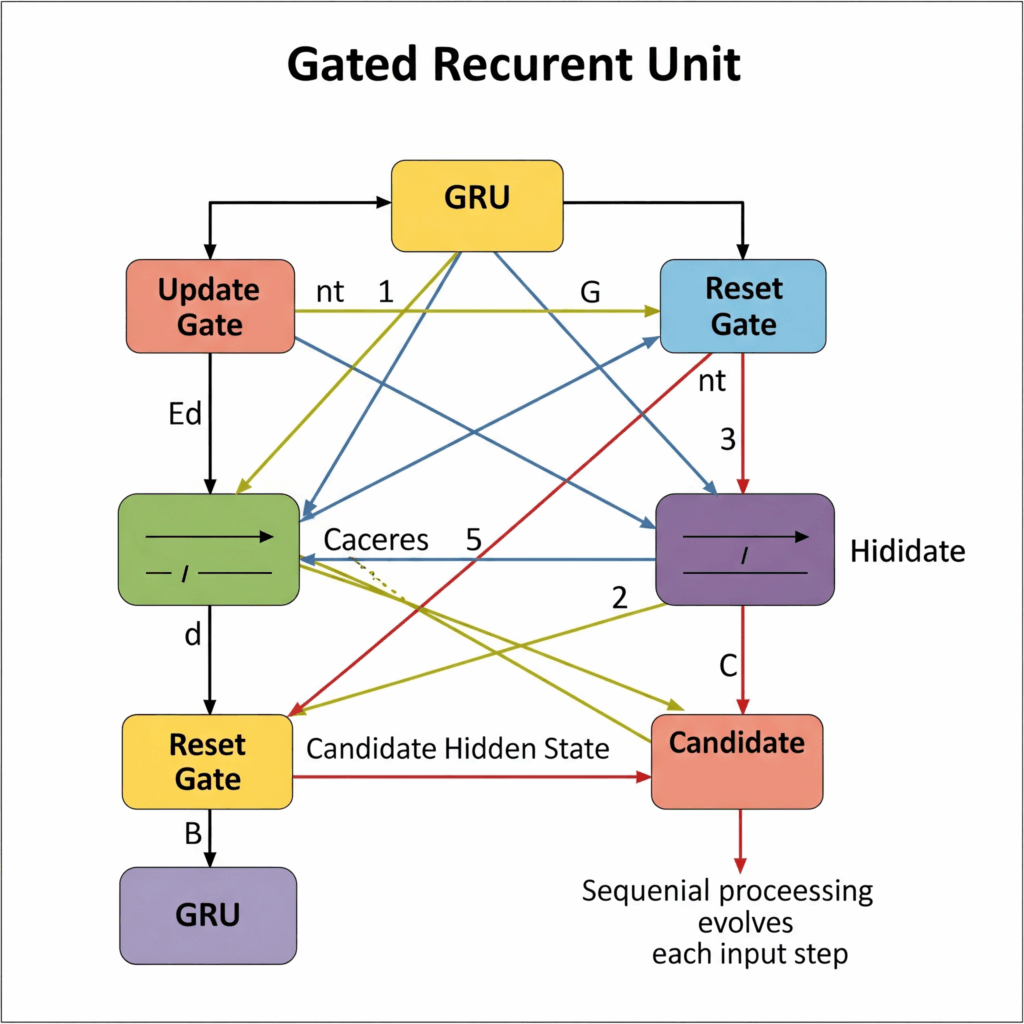

The Gating Mechanism

Unlike basic RNNs, which process a sequence by applying a function to the current input and the previous hidden state without any fine-grained control over information flow, GRUs use a gating mechanism.
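
For contrast, a basic RNN step can be sketched in plain Python using the common Elman-style formulation h_t = tanh(W_x x_t + W_h h_{t−1} + b). The function name rnn_step and the hand-picked 2-dimensional weights are hypothetical, chosen only for illustration. Every step applies the same transformation to the whole state, with no mechanism for deciding element by element what to keep.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One basic RNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(wx_row, x))        # input contribution
            + sum(u * hi for u, hi in zip(wh_row, h_prev))  # recurrent contribution
            + bi
        )
        for wx_row, wh_row, bi in zip(W_x, W_h, b)
    ]

# Hypothetical toy weights: 2-dimensional input and hidden state
W_x = [[0.5, -0.2], [0.1, 0.3]]
W_h = [[0.4, 0.0], [0.0, 0.4]]
b = [0.0, 0.0]

h = [0.0, 0.0]
for x in ([1.0, 0.0], [0.0, 1.0]):
    h = rnn_step(x, h, W_x, W_h, b)
print(h)
```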

Gates are mechanisms that regulate how information flows through multi-dimensional vectors. They are typically implemented as an element-wise product between a gate vector and the vector whose information flow is being controlled. This operation is known as the Hadamard product and is written with the symbol ⊙.
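
A minimal illustration of gating as a Hadamard product, using plain Python lists (the helper name hadamard is illustrative): a gate value of 1 passes an element through, 0 blocks it, and values in between let part of it through.

```python
def hadamard(a, b):
    """Element-wise (Hadamard) product a ⊙ b of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x * y for x, y in zip(a, b)]

gate = [1.0, 0.0, 0.5]    # pass, block, half-pass
value = [2.0, 4.0, 6.0]
print(hadamard(gate, value))  # [2.0, 0.0, 3.0]
```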

Ideally, gates would be binary (0 or 1), either passing an element through or blocking it completely. However, a hard binary gate is not differentiable in a useful way (its gradient is zero almost everywhere), which makes it unsuitable for gradient-based training techniques such as backpropagation.

GRUs therefore approximate hard binary gates with soft, differentiable ones: real-valued pre-activations are passed through a sigmoid function (σ), which squashes them into the interval (0, 1). The resulting continuous gate values, whose behavior is learned during training, indicate how much information is allowed to flow through each element.
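
The soft gate can be sketched in a few lines. The sigmoid below branches on the sign of its input so that math.exp never overflows; the derivative σ(v)(1 − σ(v)) is nonzero everywhere, which is exactly what makes the gate trainable by backpropagation. Function names are illustrative.

```python
import math

def sigmoid(v):
    """Logistic sigmoid; mathematically maps any real number into (0, 1).

    In floating point, extreme inputs round to 0.0 or 1.0.
    """
    # Branch on the sign so math.exp never overflows for large |v|
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def sigmoid_grad(v):
    """Derivative of the sigmoid: s(v) * (1 - s(v)), defined everywhere."""
    s = sigmoid(v)
    return s * (1.0 - s)

pre_activations = [-6.0, 0.0, 6.0]
gates = [sigmoid(a) for a in pre_activations]
print([round(g, 4) for g in gates])  # [0.0025, 0.5, 0.9975]
print(sigmoid_grad(0.0))             # 0.25
```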

The fundamental idea behind GRUs and LSTMs is to use these differentiable gates to decide, at each time step, which parts of the input are written to memory and which parts of memory are overwritten (forgotten). Whereas simple RNNs compute each new hidden state by transforming the previous one (a multiplicative transition at every step), gated units update it additively, interpolating between the old state and new content. This gives gradients a more direct path back through time and mitigates the vanishing gradient problem.
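
A rough way to see why the additive update matters is to multiply per-step derivative factors along one backpropagation path. The scalar numbers below are assumptions chosen for illustration: a simple RNN step h_t = tanh(w · h_{t−1} + …) contributes a factor w · tanh′(a), taken here as 0.9 × 0.5, while the direct path through a GRU-style update h_t = (1 − z_t) · h_{t−1} + z_t · h̃_t contributes (1 − z_t), taken here with z_t = 0.01. The sketch deliberately ignores the other terms of the GRU Jacobian (through the gates and the candidate), so it is an intuition aid, not an exact gradient.

```python
def path_gradient(factor_per_step, steps):
    """Product of identical per-step derivative factors along one backprop path."""
    return factor_per_step ** steps

T = 50  # number of time steps the gradient must travel back

# Simple RNN (scalar toy): factor = w * tanh'(a); assume w = 0.9 and tanh'(a) = 0.5
rnn_factor = 0.9 * 0.5
# GRU direct path only: factor = 1 - z_t; assume the update gate stays at z_t = 0.01
gru_factor = 1.0 - 0.01

print(f"simple RNN:      {path_gradient(rnn_factor, T):.2e}")
print(f"GRU direct path: {path_gradient(gru_factor, T):.3f}")
```

Under these assumptions the simple RNN factor falls below 1e-17 after 50 steps, while the GRU's direct path still retains about 0.6 of the signal, because a gate near 0 lets the old state pass through almost unchanged.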

Gated Recurrent Unit vs LSTM

The GRU can be viewed as a simplification of the LSTM architecture. Compared with LSTMs, GRUs have fewer gates (two instead of three) and no separate memory cell: the hidden state itself serves as the memory.

Important attributes and capabilities:

Gating System:

By selectively updating the hidden state at each time step through gates, GRUs can retain important information while discarding irrelevant details.

Update Gate:

The update gate controls how much new information (the candidate state) is written into the hidden state, and therefore how much of the previous state is kept.

Reset Gate:

The reset gate decides how much of the previous hidden state to forget, that is, how much of it is used when computing the new candidate state.
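
The two gates can be combined into a single cell. Below is a minimal pure-Python sketch of one common GRU formulation: z_t = σ(W_z x_t + U_z h_{t−1} + b_z), r_t = σ(W_r x_t + U_r h_{t−1} + b_r), h̃_t = tanh(W_h x_t + U_h (r_t ⊙ h_{t−1}) + b_h), and h_t = (1 − z_t) ⊙ h_{t−1} + z_t ⊙ h̃_t. The class name GRUCell, its step method, and the random initialization are hypothetical. Conventions differ across papers and libraries: PyTorch, for instance, swaps the roles of z_t and 1 − z_t in the final interpolation and applies the reset gate after the recurrent matrix product.

```python
import math
import random

def sigmoid(v):
    # Numerically stable logistic function
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)

def affine(W, x, U, h, b):
    """Compute W x + U h + b for list-based matrices and vectors."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bi
        for w_row, u_row, bi in zip(W, U, b)
    ]

class GRUCell:
    """Hypothetical minimal GRU cell with small random weights and zero biases."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        # One (W, U, b) block each for the update gate, reset gate and candidate
        self.blocks = {
            name: (rand(n_hidden, n_in), rand(n_hidden, n_hidden), [0.0] * n_hidden)
            for name in ("update", "reset", "candidate")
        }

    def step(self, x, h_prev):
        Wz, Uz, bz = self.blocks["update"]
        Wr, Ur, br = self.blocks["reset"]
        Wh, Uh, bh = self.blocks["candidate"]
        # Update gate z_t: how much of the candidate is written into the state
        z = [sigmoid(a) for a in affine(Wz, x, Uz, h_prev, bz)]
        # Reset gate r_t: how much of the previous state feeds the candidate
        r = [sigmoid(a) for a in affine(Wr, x, Ur, h_prev, br)]
        reset_h = [ri * hi for ri, hi in zip(r, h_prev)]  # r_t ⊙ h_{t-1}
        h_cand = [math.tanh(a) for a in affine(Wh, x, Uh, reset_h, bh)]
        # Additive update: interpolate between the old state and the candidate
        return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_cand)]

cell = GRUCell(n_in=3, n_hidden=4)
h = [0.0] * 4
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h = cell.step(x, h)
print(h)
```

Because each new state is a convex combination of the previous state and a tanh output, every element stays within (−1, 1) when the initial state does.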

Simpler Architecture:

With just two gates and a single hidden state, GRUs have fewer parameters than LSTMs of the same hidden size and often train faster.
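
The parameter saving can be checked with simple arithmetic. Assuming each gate or candidate block has an input matrix (n_hidden × n_in), a recurrent matrix (n_hidden × n_hidden) and one bias vector, a GRU has three such blocks (update gate, reset gate, candidate) and an LSTM has four (input, forget and output gates plus the candidate). The sizes below are arbitrary examples and the helper name is illustrative.

```python
def gated_rnn_params(n_in, n_hidden, n_blocks):
    """Parameter count for a gated cell with n_blocks (W, U, b) blocks.

    Each block: W is n_hidden x n_in, U is n_hidden x n_hidden, b has n_hidden entries.
    """
    per_block = n_hidden * n_in + n_hidden * n_hidden + n_hidden
    return n_blocks * per_block

n_in, n_hidden = 10, 20
gru = gated_rnn_params(n_in, n_hidden, n_blocks=3)   # update, reset, candidate
lstm = gated_rnn_params(n_in, n_hidden, n_blocks=4)  # input, forget, output, candidate
print(gru, lstm)  # 1860 2480
```

Some libraries (PyTorch's `torch.nn.GRU` and `torch.nn.LSTM`, for example) keep two bias vectors per block, which gives 1920 and 2560 for these sizes; the 3:4 ratio is the same either way.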

Mitigating Vanishing Gradients:

Like LSTMs, GRUs reduce the vanishing gradient problem that afflicts standard RNNs, which helps them learn long-term dependencies in sequences.

Excellent for a Range of Uses:

GRUs are widely used in many applications, including time series forecasting, speech recognition, and natural language processing.

Benefits of GRUs

Faster Training:

Compared with LSTMs, GRUs’ simpler architecture usually results in shorter training times.

Reduced Memory Demand:

Because they have fewer parameters, GRUs use less memory than comparably sized LSTMs, which makes them a good fit for applications with constrained memory resources.

Effective for Long Sequences:

GRUs can learn long-term dependencies in sequences efficiently, which makes them suitable for tasks that involve processing long sequences.

Uses and Applications

GRUs have proven successful in applications such as machine translation and language modelling. Modern systems that need recurrent processing frequently use gated architectures such as LSTMs and GRUs because they train more stably than simple RNNs. Within the various RNN configurations, they can serve as transducers (predicting an output at each step), as acceptors (predicting a single output from the whole sequence), or as components of encoder-decoder architectures. For tasks such as document categorization, for instance, they can serve as the forward and backward components of a bidirectional RNN.
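
The transducer, acceptor and bidirectional configurations differ only in how the per-step states are used. The sketch below assumes a generic step function cell(h, x) and uses a hypothetical scalar toy cell in place of a real GRU step so the arithmetic is easy to follow; in practice the cell would be a GRU and the states would be vectors. Concatenating the last forward state with the last backward state (which sits at position 0) is shown as one common way to build a document-level representation, not the only option.

```python
from typing import Callable, List, Tuple

Cell = Callable[[float, float], float]

def run(cell: Cell, xs: List[float], h0: float) -> List[float]:
    """Transducer view: return the hidden state after every step."""
    states, h = [], h0
    for x in xs:
        h = cell(h, x)
        states.append(h)
    return states

def acceptor(cell: Cell, xs: List[float], h0: float) -> float:
    """Acceptor view: only the final state feeds the prediction."""
    return run(cell, xs, h0)[-1]

def bidirectional(fwd_cell: Cell, bwd_cell: Cell,
                  xs: List[float], h0: float) -> List[Tuple[float, float]]:
    """Pair each position's forward state with its backward state."""
    fwd = run(fwd_cell, xs, h0)
    bwd = run(bwd_cell, xs[::-1], h0)[::-1]  # re-align to original positions
    return list(zip(fwd, bwd))

def toy_cell(h: float, x: float) -> float:
    # Hypothetical stand-in for a GRU step, with a scalar state
    return 0.5 * h + x

xs = [1.0, 2.0, 3.0]
print(run(toy_cell, xs, 0.0))       # [1.0, 2.5, 4.25]
print(acceptor(toy_cell, xs, 0.0))  # 4.25
pairs = bidirectional(toy_cell, toy_cell, xs, 0.0)
print(pairs)                        # [(1.0, 2.75), (2.5, 3.5), (4.25, 3.0)]
# Document-level vector: last forward state plus last backward state (position 0)
doc_vec = (pairs[-1][0], pairs[0][1])
print(doc_vec)                      # (4.25, 2.75)
```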