Parsing Algorithms

Syntactic parsing, which involves mapping a string of words to its parse tree or giving a phrase a syntactic structure based on a grammar, uses parsing algorithms. A parser may be viewed as a recognizer that, under the guidance of a language, generates structural analyses, such as feature terms or parse trees. Grammars, particularly context-free grammars (CFGs), give the parser the syntactic rules it needs to retrieve information that isn’t stated clearly in the input phrase. In order to identify a structure for the input sentence, a parser looks through the alternatives granted by a grammar.

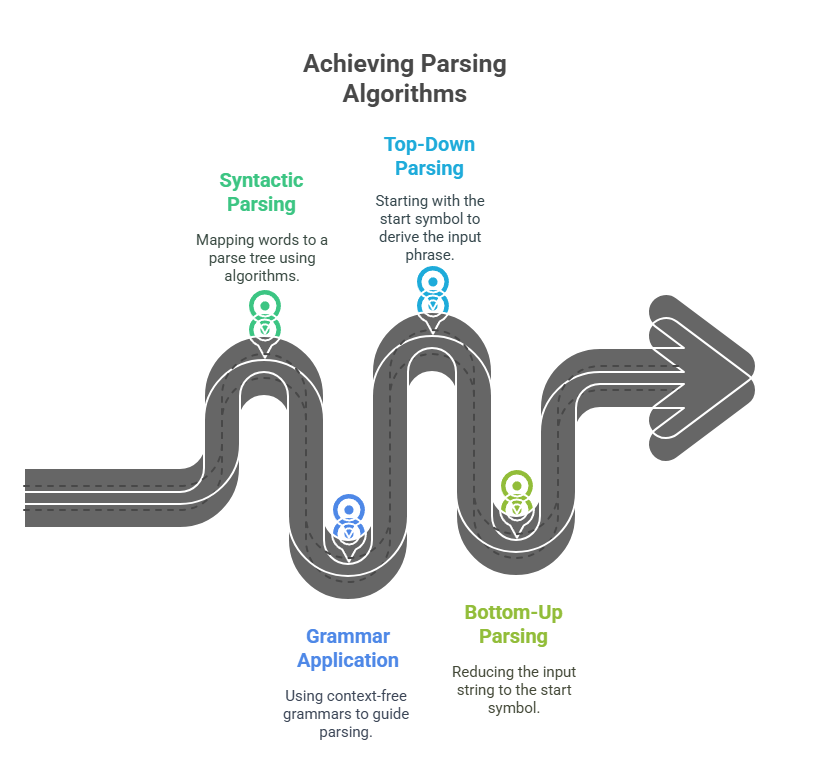

The way parsing algorithms do derivations series of rule expansions that change the grammar’s start symbol into the input string allows them to be categorized. There are two broad strategies mentioned:

Top-down parsing: Applies rules by substituting the sequence of symbols on the right-hand side of a production for a non-terminal symbol, starting with the grammar’s start symbol (such as S for sentence). The goal of the method is to use the start sign to determine the input phrase.

Bottom-up parsing: Replaces sequences of symbols (the right-hand sides of rules) with the matching non-terminal symbols (the left-hand sides) after applying rules in reverse, starting with the input words (terminal symbols). Reducing the input string to the initial symbol of the grammar is the aim.

Parsing Algorithms and frameworks

- Recursive Descent Parsing: A straightforward top-down parsing technique in which the parser deconstructs high-level objectives (such as locating a S) into smaller, more manageable subgoals by interpreting the language as instructions. Until terminal symbols (words) fit the input, it depends on producers to swap out aims.

- Shift-Reduce Parsing: A bottom-up parsing technique that stores input symbols in a buffer and CFG symbols on a stack. When the top of the stack corresponds to the right-hand side of a rule, input tokens are “shifted” onto it and “reduced” to the matching left-hand side non-terminal. The steps of a rightmost derivation can be carried out in reverse using this approach.

- Left-Corner Parsing: Described as a bottom-up filtering approach that is top-down. It determines if the subsequent input word is compatible with the pre-terminal categories in a grammar-related “left-corner” table.

- Chart Parsing: A method for parsing that uses the dynamic programming methodology. It avoids duplication of effort by storing incomplete answers (intermediate findings) in a data structure known as a “chart” for later use. An “agenda” is frequently used by chart parsing algorithms to control the sequence in which edges which stand for rule applications or incomplete results are processed. It is possible to create different parsing algorithms by changing the structure of the agenda (e.g., stack or queue).

- The Cocke Kasami Younger (CKY) algorithm: Originally enabling parsing in cubic time for grammars in Chomsky Normal Form (CNF), this simple tabular or chart parsing technique was created especially for CFGs. General CFGs need conversion, which can be problematic, but they are effective for constrained grammars. One example of dynamic programming is CKY.

- Earley’s algorithm: It was introduced as the first method that could parse generic CFGs in less time than cubic. The chart parsing algorithm is top-down.

- Deductive Parsing: Pereira and Warren (1983) provided a broad paradigm that views parsing as a deductive process and provides a high-level description of tabular parsing algorithms. Algorithms for parsing charts are examples of this paradigm. Managing an agenda and storing and retrieving parse results in the chart are two aspects of implementing deductive parsing.

- LR Parsing: This method, which is frequently applied to deterministic parsing of formal languages such as programming languages, precompiles the grammar to improve parsing speed. It effectively creates a push-down automata by compiling the language into a finite automaton enhanced with reductions. This is extended to nondeterministic languages by generalised LR (GLR) parsing.

- Dependency Parsing Algorithms: The goal of dependency parsing is to determine the relationships between the words in a phrase. Two primary categories of dependency parsing algorithms:

- Transition-based parsing: The shift-reduce parsing is used. Using a stack and a buffer of incoming words, it gradually constructs the syntactic structure through a sequence of transitions or actions directed by an oracle, which is a predictor. The sentence length of these methods can have linear time complexity. They are frequently algorithms driven by greed. The parser operation may be categorised using neural networks according to the stack and buffer states.

- Graph-based parsing: It generates a weighted, directed graph from a phrase, with words acting as vertices and potential head-dependent associations acting as edges. Using precomputed edge scores, the method chooses the greatest scoring structure, such a maximum spanning tree, to determine the best parse. The scores for the edges may be estimated using neural networks.

Parsing algorithms play a key role in statistical parsing, which employs statistical inference from samples of natural language text. In addition to classical algorithms, statistical parsing techniques are mostly employed for disambiguation, choosing the best analysis when a phrase contains several potential parse trees based on formal grammar. In statistical parsing, Probabilistic Context-Free Grammars (PCFGs) are a straightforward extension of CFGs.

PCFG uses dynamic programming methods. In O(n³) time, where ‘n’ is the phrase length, parsing can calculate the likelihood of substructures and select the top scoring analysis using methods like Viterbi search. Although these precise techniques identify the single best analysis, the k-best analyses can be extracted by generalisations. AI-developed search techniques are used when determining the optimal parse becomes computationally costly (e.g., exponential time for some models). By restricting the search space to only the most probable partial analyses, beam search improves efficiency with weighted or probabilistic grammars, bringing the parsing process closer to linear rather than exponential or cubic in practice.

Additionally, parsers can be modified for certain inputs, such as word lattices produced by voice recognisers, in which the input words are ambiguous. In this case, the parser can function as a language model to identify the most likely word order along a lattice path.

Additionally, parsers are not only theoretical tools; they are employed in reality for tasks including simulating psycholinguistic processing, evaluating grammar during grammar creation, and serving as a first step in NLP applications like question-answering systems. They can also be resilient, trying to provide meaningful results even when the grammar does not fully encompass the input.