This article gives an overview of regular expressions: their core concepts and syntax, their applications in NLP, and their implementation in Python.

Regular Expressions

Regular expressions, or regex for short, are a key tool in natural language processing and in computer science in general. They provide a language for specifying the character patterns and text search strings you want to find. In formal terms, a regular expression is an algebraic notation that describes a set of strings, and any language that can be defined by a regular expression is called a regular language. Regular expressions are especially helpful for searching texts or corpora for every string that fits a specified pattern.

Regular expressions are grounded in finite-state machines, theoretical models of computation that recognise regular languages. They can be used to define patterns ranging from basic character sequences to far more intricate forms.

The following describes regular expressions, their syntax, and applications:

Core Concepts & Syntax of Regular Expressions

Literal Characters and Concatenation: The simplest regular expression is a sequence of ordinary characters. Concatenation means placing characters one after another in a fixed order. /woodchuck/ matches exactly the substring “woodchuck”, and /!/ matches the character “!”. Regular expressions are case-sensitive by default, so /woodchucks/ does not match “Woodchucks”.
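As a minimal sketch of literal matching and case sensitivity, the snippet below uses Python's re module. The sample sentences are made up for illustration, and re.IGNORECASE is shown as one way to relax case sensitivity.

```python
import re

# A literal pattern matches that exact character sequence wherever it occurs.
literal_hit = re.search(r"woodchuck", "How much wood would a woodchuck chuck?")

# Matching is case-sensitive by default, so the capitalised word is missed.
case_miss = re.search(r"woodchucks", "Woodchucks are rodents")

# The re.IGNORECASE flag makes the same pattern match regardless of case.
case_hit = re.search(r"woodchucks", "Woodchucks are rodents", re.IGNORECASE)

print(literal_hit.group())  # woodchuck
print(case_miss)            # None
print(case_hit.group())     # Woodchucks
```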

Disjunction

- Square Brackets []: A string of characters enclosed in square brackets specifies a disjunction of characters, and the pattern matches any single character from that set. For example, /[wW]/ matches either ‘w’ or ‘W’. A dash (-) inside brackets specifies a range, as in /[A-Z]/ for any capital letter, /[a-z]/ for any lower case letter, or /[0-9]/ for any single digit.

- Pipe Character |: The pipe matches either the expression on its left or the expression on its right. /the|any/, for instance, matches either “the” or “any”. Parentheses () delimit the scope of the | operator.
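The two forms of disjunction can be sketched in Python as follows. The sample strings, including the guppy/guppies pair used to show parentheses limiting the scope of |, are illustrative assumptions.

```python
import re

# Square brackets: exactly one character from the set.
w_forms = re.findall(r"[wW]oodchuck", "Woodchuck or woodchuck?")

# Ranges inside brackets.
digits = re.findall(r"[0-9]", "Room 42B")
capitals = re.findall(r"[A-Z]", "Room 42B")

# Pipe: the whole expression on the left or the whole expression on the right.
either = re.findall(r"the|any", "the cat ate any fish")

# Parentheses restrict the pipe to the suffix only.
scoped = [m.group() for m in re.finditer(r"gupp(y|ies)", "one guppy, two guppies")]

print(w_forms)   # ['Woodchuck', 'woodchuck']
print(digits)    # ['4', '2']
print(capitals)  # ['R', 'B']
print(either)    # ['the', 'any']
print(scoped)    # ['guppy', 'guppies']
```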

Wildcard .: The period is a wildcard that matches any single character except a newline. For instance, /beg.n/ matches “begin,” “beg’n,” or “begun”. Combined with the Kleene star (.*), it frequently means “any string of characters”.
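A short sketch of the wildcard in Python follows. The candidate strings are invented for illustration, and re.DOTALL is included only to show that the newline exclusion is a default in Python's re module rather than a fixed rule.

```python
import re

candidates = ["begin", "beg'n", "begun", "begn", "beg\nn"]

# The dot needs exactly one character, and by default that character
# cannot be a newline.
matched = [w for w in candidates if re.fullmatch(r"beg.n", w)]

# With re.DOTALL the dot also accepts a newline.
newline_ok = re.fullmatch(r"beg.n", "beg\nn", re.DOTALL) is not None

# .* stands for "any string of characters" (possibly empty) on one line.
any_string = re.fullmatch(r"a.*z", "a to z") is not None

print(matched)     # ['begin', "beg'n", 'begun']
print(newline_ok)  # True
print(any_string)  # True
```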

Counters (Iteration): These symbols indicate how many times the preceding character or expression must appear.

- ?: Matches zero or one instance of the preceding item, which makes that item optional. For instance, /woodchucks?/ matches “woodchuck” or “woodchucks”.

- *: The Kleene star means “zero or more occurrences of the immediately preceding character or regular expression”. For instance, /a*/ matches zero or more “a”s, and /[a-z]*/ matches zero or more lowercase letters.

- +: “One or more occurrences of the immediately preceding character or regular expression” is what the Kleene plus signifies. For instance, /a+/ matches at least one “a.” [a-z]+ corresponds to at least one lowercase letter.

- {n}: Corresponds to precisely n instances of the preceding item.

- {n,}: Corresponds to at least n instances.

- {,m}: Matches a maximum of m instances.

- {n,m}: Matches between n and m instances (inclusive).
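The counters listed above can be checked against small test strings. This is a sketch using Python's re.fullmatch; the helper name full and the sample strings are assumptions made for the example. Python reads {,m} as {0,m}, which not every regex engine does.

```python
import re

def full(pattern, s):
    """Return True if the whole string s matches pattern (hypothetical helper)."""
    return re.fullmatch(pattern, s) is not None

words = ["woodchuck", "woodchucks", "woodchuckss"]
optional = [s for s in words if full(r"woodchucks?", s)]

letters = ["", "a", "aaa", "b"]
star = [s for s in letters if full(r"a*", s)]   # zero or more
plus = [s for s in letters if full(r"a+", s)]   # one or more

numbers = ["12", "123", "1234"]
exact = [s for s in numbers if full(r"[0-9]{3}", s)]      # exactly 3
at_least = [s for s in numbers if full(r"[0-9]{3,}", s)]  # 3 or more
at_most = [s for s in numbers if full(r"[0-9]{,3}", s)]   # 0 to 3 in Python
between = [s for s in numbers if full(r"[0-9]{2,3}", s)]  # 2 to 3

print(optional)  # ['woodchuck', 'woodchucks']
print(star)      # ['', 'a', 'aaa']
print(plus)      # ['a', 'aaa']
print(exact, at_least, at_most, between)
```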

Anchors: Anchors are special characters that tie a regular expression to a particular location in a string.

- ^: Matches the beginning of a line or string. /^The/ matches “The” only when it is at the start of the line. Keep in mind that ^ has a second meaning: as the first character inside [ ], it denotes negation.

- $: Matches the end of a line or string. /dog\.$/ matches “dog.” only when it occurs at the end of the line (the period is escaped so that it matches a literal period).

- \b: Matches a zero-width word boundary. /\bthe\b/ matches the word “the” on its own but not the same letters inside another word, such as “other.”

- \B: Corresponds to a non-word boundary.
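The anchors above can be sketched in Python as follows. The sample sentences are invented, and re.MULTILINE is shown because, in Python's re module, ^ and $ only match at line boundaries inside a string when that flag is set.

```python
import re

# ^ ties the match to the start of the string.
at_start = re.search(r"^The", "The dog barked") is not None
not_at_start = re.search(r"^The", "See The dog") is not None

# $ ties the match to the end; \. is a literal period.
at_end = re.search(r"dog\.$", "I saw the dog.") is not None
not_at_end = re.search(r"dog\.$", "The dog. It barked") is not None

sentence = "the other one bathes the cat"
whole_words = re.findall(r"\bthe\b", sentence)  # standalone "the" only
inside_words = re.findall(r"\Bthe\B", sentence)  # "the" inside other/bathes

# ^ as the first character inside brackets negates the set.
non_letters = re.findall(r"[^a-z]", "a1b2")

# With re.MULTILINE, ^ also matches at the start of every line.
line_starts = re.findall(r"^\w+", "first line\nsecond line", re.MULTILINE)

print(at_start, not_at_start, at_end, not_at_end)  # True False True False
print(whole_words, inside_words)  # ['the', 'the'] ['the', 'the']
print(non_letters)                # ['1', '2']
print(line_starts)                # ['first', 'second']
```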

Special Characters and Backslashes \: In regular expressions, certain characters (such as ., *, ?, +, [, ], (, ), {, }, |, ^, $) have special meanings. To match one of these characters literally, precede it with a backslash. For example, /\$/ matches a literal dollar sign, and /\./ matches a literal period. Backslashes also introduce aliases for common character sets, such as \d (digit), \s (whitespace), and \w (alphanumeric character or underscore).
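A brief sketch of escaping and of the shorthand character classes in Python follows. The sample strings are assumptions, and re.escape is shown as a convenience for escaping a whole literal string rather than as something the text above requires.

```python
import re

# An unescaped dot is a wildcard; an escaped dot is a literal period.
unescaped = re.findall(r"a.c", "abc a.c")
escaped = re.findall(r"a\.c", "abc a.c")

# \$ matches a literal dollar sign.
prices = re.findall(r"\$[0-9]+\.[0-9]{2}", "It costs $19.99, not $5 or 19.99.")

# Shorthand classes: \d digit, \w alphanumeric or underscore, \s whitespace.
numbers = re.findall(r"\d+", "Call 555 1234")
words = re.findall(r"\w+", "snake_case, two words")
pieces = re.split(r"\s+", "tabs\tand  spaces")

# re.escape backslash-escapes every special character in a literal string.
literal_found = re.search(re.escape("1+1=2"), "so 1+1=2 holds") is not None

print(unescaped, escaped)  # ['abc', 'a.c'] ['a.c']
print(prices)              # ['$19.99']
print(numbers)             # ['555', '1234']
print(words)               # ['snake_case', 'two', 'words']
print(pieces)              # ['tabs', 'and', 'spaces']
print(literal_found)       # True
```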

Grouping (): Parentheses group several characters or expressions together so that an operator applies to the whole group. They also store the text matched by the group in a register, where it can be used in substitutions and other subsequent processing. When grouping is required but capturing is not, a non-capturing group (?:...) can be used.
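The difference between capturing and non-capturing groups can be sketched with Python's re module. The example strings are invented for illustration; \1 and \2 are Python's way of referring to the stored groups in a replacement.

```python
import re

# Capturing groups store what they matched, numbered from 1.
m = re.search(r"(\d+)-(\d+)", "pages 12-34")
first_page, last_page = m.group(1), m.group(2)

# A quantifier after a group applies to the whole group.
repeated = re.fullmatch(r"(?:ha)+", "hahaha") is not None

# Stored groups can be reused in a substitution.
swapped = re.sub(r"(\w+) (\w+)", r"\2 \1", "hello world")

# re.findall returns only the group when the pattern has one capturing group,
# and the full match when the group is non-capturing.
text = "Mr. Smith met Ms. Jones"
capturing = re.findall(r"(Mr|Ms)\. \w+", text)
non_capturing = re.findall(r"(?:Mr|Ms)\. \w+", text)

print(first_page, last_page)  # 12 34
print(repeated)               # True
print(swapped)                # world hello
print(capturing)              # ['Mr', 'Ms']
print(non_capturing)          # ['Mr. Smith', 'Ms. Jones']
```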

Operator Precedence: The operator precedence hierarchy of regular expressions determines the order in which operators apply. Parentheses have the highest precedence, followed by counters, then sequences and anchors, and finally disjunction (|).
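Two consequences of this hierarchy can be checked directly. The sketch below uses Python's re.fullmatch with made-up test strings; the helper name full is an assumption for the example.

```python
import re

def full(pattern, s):
    """Return True if the whole string s matches pattern (hypothetical helper)."""
    return re.fullmatch(pattern, s) is not None

# Counters bind tighter than sequences: /the*/ is "th" followed by e*.
star_on_e = full(r"the*", "theee")
star_not_on_the = full(r"the*", "thethe")

# Parentheses override that, so the star applies to the whole word.
star_on_group = full(r"(the)*", "thethe")

# Sequences bind tighter than disjunction: /the|any/ is "the" or "any",
# not "th" + ("e" or "a") + "ny".
whole_alternatives = full(r"the|any", "the")
not_inner_choice = full(r"the|any", "thany")
inner_choice = full(r"th(e|a)ny", "thany")

print(star_on_e, star_not_on_the, star_on_group)           # True False True
print(whole_alternatives, not_inner_choice, inner_choice)  # True False True
```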

Greedy vs. Non-Greedy Matching: Regular expressions are “greedy” by default, which means they match the longest string they can. The quantifiers *? and +? enforce “non-greedy” matching, which matches the shortest string possible.
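The contrast is easiest to see on text containing several possible end points. The following is a minimal Python sketch; the markup string is an invented example.

```python
import re

markup = "<b>bold</b> and <i>italic</i>"

# Greedy: .* runs to the last ">" in the string, giving one long match.
greedy = re.findall(r"<.*>", markup)

# Non-greedy: .*? stops at the first ">" it can, giving one match per tag.
lazy = re.findall(r"<.*?>", markup)

# The same contrast with + and +?.
longest = re.search(r"a+", "aaa").group()
shortest = re.search(r"a+?", "aaa").group()

print(greedy)             # ['<b>bold</b> and <i>italic</i>']
print(lazy)               # ['<b>', '</b>', '<i>', '</i>']
print(longest, shortest)  # aaa a
```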

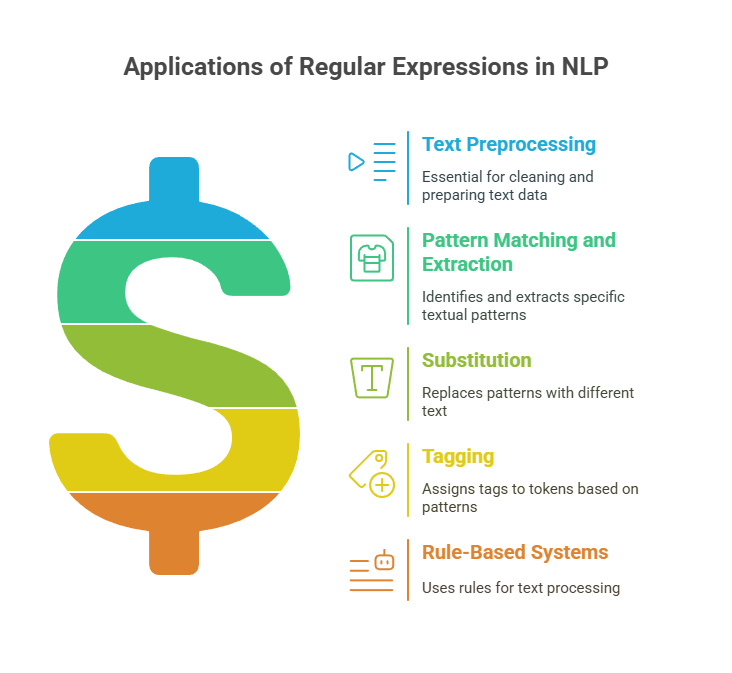

Regular Expression Applications in NLP

In NLP, regular expressions are frequently employed for a variety of tasks:

Text Preprocessing: They are an essential preprocessing tool.

- Tokenization: Used to separate a string into recognizable tokens or linguistic units, such as words and punctuation. Regular expressions can be used to implement rule-based tokenization. For instance, a sentence can be divided into words on whitespace using re.split(r'\s+', 'I enjoy this book.'). Regular expressions can also handle more complex cases, such as splitting contractions.

- Normalization: Used for word format normalization. Case folding (converting to lowercase) is one example of this. Regular expressions can be used to construct rule-based text normalization systems. Additionally, non-standard terms (such as dates or numbers) can be mapped to standard tokens using them.

- Sentence Segmentation: Used to divide text into sentences.
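The three preprocessing steps above can be sketched with a few lines of Python. The patterns, the sample sentences, and the <NUM> placeholder token are illustrative assumptions; they are deliberately naive and would need refinement for real text, for example to handle abbreviations such as “Dr.” during sentence segmentation.

```python
import re

# Tokenization on whitespace keeps punctuation attached to words ...
ws_tokens = re.split(r"\s+", "I enjoy this book.")

# ... while a word-or-punctuation pattern separates it.
tokens = re.findall(r"\w+|[^\w\s]", "I enjoy this book.")

# Splitting a contraction into two tokens.
split_contraction = re.sub(r"(\w+)(n't)\b", r"\1 \2", "I don't know")

# Normalization: case folding, then mapping numbers to a standard token.
normalized = re.sub(r"\d+(?:\.\d+)?", "<NUM>", "It cost 12.50 dollars in 1999".lower())

# Naive sentence segmentation: split on whitespace that follows ., ! or ?.
sentences = re.split(r"(?<=[.!?])\s+", "It rained. We stayed in! Did you?")

print(ws_tokens)          # ['I', 'enjoy', 'this', 'book.']
print(tokens)             # ['I', 'enjoy', 'this', 'book', '.']
print(split_contraction)  # I do n't know
print(normalized)         # it cost <NUM> dollars in <NUM>
print(sentences)          # ['It rained.', 'We stayed in!', 'Did you?']
```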

Pattern Matching and Extraction: Used to identify and extract particular textual patterns, such as specific words, phrases, or character sequences. For example, re.findall() can extract word fragments. Regular expressions can also extract structured data such as prices, dates, and even particular names. Some information extraction techniques use regular expressions to extract relationships based on patterns.
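As a rough sketch of extraction with re.findall() and re.search(), the snippet below pulls dates, prices, and word suffixes out of invented sample text. The date and price formats are assumptions chosen for the example, not general-purpose patterns.

```python
import re

text = "Invoice dated 2023-04-15: total $1,299.00, deposit $50 paid on 2023-05-01."

# Dates in an assumed YYYY-MM-DD format.
dates = re.findall(r"\b\d{4}-\d{2}-\d{2}\b", text)

# Dollar amounts with optional thousands separators and cents.
prices = re.findall(r"\$\d[\d,]*(?:\.\d{2})?", text)

# Named groups give the parts of the first date as a dictionary.
first_date = re.search(r"(?P<year>\d{4})-(?P<month>\d{2})-(?P<day>\d{2})", text)
date_parts = first_date.groupdict()

# Word fragments: with one capturing group, findall returns just the suffix.
suffixes = re.findall(r"\w+(ing|ed)\b", "walking walked jumps")

print(dates)       # ['2023-04-15', '2023-05-01']
print(prices)      # ['$1,299.00', '$50']
print(date_parts)  # {'year': '2023', 'month': '04', 'day': '15'}
print(suffixes)    # ['ing', 'ed']
```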

Substitution: Using substitution operators, regular expressions can find a pattern and replace it with different text. This is helpful for normalizing whitespace, cleaning text, and removing extraneous characters or markup. It also allows more playful transformations, such as turning text into “hAck3r” speech. ELIZA-like programs can be built from regular expression-based substitutions.
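The uses of substitution mentioned above can be sketched with re.sub(). The cleaning patterns, the character mapping, and the two ELIZA-style rules in the hypothetical eliza function are all invented for illustration and are far simpler than a real system.

```python
import re

# Whitespace normalization: collapse runs of whitespace to one space.
collapsed = re.sub(r"\s+", " ", "too   many\t spaces\n here").strip()

# Removing simple markup tags.
no_markup = re.sub(r"<[^>]+>", "", "<p>Hello <b>world</b></p>")

# A playful transformation: the replacement can be a function of the match.
leet_map = {"a": "4", "e": "3", "o": "0"}
leet = re.sub(r"[aeo]", lambda m: leet_map[m.group()], "hacker speak")

# ELIZA-like responses: each rule pairs a pattern with a response template.
RULES = [
    (r".*\bI am (sad|depressed)\b.*", r"I am sorry to hear you are \1."),
    (r".*\bI need (.+)", r"Why do you need \1?"),
]

def eliza(utterance):
    """Return the response of the first matching rule (hypothetical helper)."""
    for pattern, response in RULES:
        m = re.match(pattern, utterance, re.IGNORECASE)
        if m:
            return m.expand(response)
    return "Please go on."

print(collapsed)                  # too many spaces here
print(no_markup)                  # Hello world
print(leet)                       # h4ck3r sp34k
print(eliza("I am sad today"))    # I am sorry to hear you are sad.
print(eliza("I need a holiday"))  # Why do you need a holiday?
```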

Tagging: Rule-based taggers, such as Part-of-Speech taggers, can assign tags to tokens based on matching patterns by using regular expressions. Any word that ends in ‘-ed’, for example, might be classified as a past participle by a rule.
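A rule-based tagger of this kind can be sketched as an ordered list of suffix patterns. The rules, the Penn Treebank-style tag names, and the regex_tag function below are assumptions made for the example; NLTK ships its own regular-expression tagger, which this sketch does not use.

```python
import re

# Ordered rules: the first pattern that matches decides the tag.
# Tag names follow Penn Treebank conventions (an assumption of this sketch).
PATTERNS = [
    (r"ing$", "VBG"),   # gerund or present participle
    (r"ed$", "VBN"),    # treated here as a past participle
    (r"^\d+$", "CD"),   # cardinal number
    (r"s$", "NNS"),     # plural noun
    (r"", "NN"),        # default: the empty pattern matches every token
]

def regex_tag(tokens):
    """Assign each token the tag of the first matching rule (hypothetical helper)."""
    tagged = []
    for token in tokens:
        for pattern, label in PATTERNS:
            if re.search(pattern, token):
                tagged.append((token, label))
                break
    return tagged

tagged = regex_tag(["dogs", "walked", "running", "42", "tree"])
print(tagged)
# [('dogs', 'NNS'), ('walked', 'VBN'), ('running', 'VBG'), ('42', 'CD'), ('tree', 'NN')]
```

Such suffix rules are crude: “red” and “bus” would be mis-tagged, which is why rule order and a sensible default matter.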

Rule-Based Systems: From simple text processing to more complicated tasks like constructing simple grammars or recognizing pleonastic “it” based on patterns, regular expressions are an essential part of many rule-based natural language processing (NLP) systems.

Regular Expressions in Python (using re and NLTK)

In Python, regular expression operations are usually performed with the re module. Key functions include re.sub(), re.match(), re.search(), and re.findall().

When a pattern contains backslashes, write it as a raw string so that Python does not interpret the backslashes as string escape sequences before the pattern reaches the regex engine. A raw string is created by prefixing the string literal with r (e.g., r'\b').
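The snippet below sketches why raw strings matter and how the four functions named above differ. The sample strings are invented, and re.compile is added as an optional convenience for reusing a pattern.

```python
import re

# In a normal string "\b" is a single backspace character;
# in a raw string it is a backslash followed by "b".
plain_len, raw_len = len("\b"), len(r"\b")

# Without the r prefix the pattern looks for backspace characters.
without_raw = re.findall("\bthe\b", "the cat")
with_raw = re.findall(r"\bthe\b", "the cat")

# re.match only matches at the start of the string; re.search scans it.
match_result = re.match(r"cat", "the cat")
search_start = re.search(r"cat", "the cat").start()

# re.findall returns all non-overlapping matches; re.sub replaces them.
all_words = re.findall(r"\w+", "the cat")
replaced = re.sub(r"cat", "dog", "the cat")

# A compiled pattern can be reused without re-parsing it each time.
number = re.compile(r"\d+")
found_numbers = number.findall("a1b22")

print(plain_len, raw_len)          # 1 2
print(without_raw, with_raw)       # [] ['the']
print(match_result, search_start)  # None 4
print(all_words, replaced)         # ['the', 'cat'] the dog
print(found_numbers)               # ['1', '22']
```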

Regular expressions are used by NLTK routines like nltk.regexp_tokenize() for tokenization and nltk.re_show() for match visualization.

In conclusion, regular expressions are a powerful and flexible language for describing patterns in text. They are essential for tasks like information extraction and rule-based tagging, as well as for basic NLP preprocessing steps such as tokenization and normalization. Their close relationship to finite-state machines makes clear what they can do: they handle exactly the kinds of patterns that these simple computational models can recognise.