What Is Semantic Analysis in NLP?

Semantic analysis is the part of Natural Language Processing (NLP) that deals with linguistic meaning. The traditional language processing pipeline places it after lexical and syntactic analysis. A simple view divides processing into phases that mirror the linguistic distinctions between syntax, semantics, and pragmatics, although it is widely acknowledged that in practice the boundaries are not always this clean. Nonetheless, the division is a helpful tool and the foundation for architectural models that simplify NLP work.

The main objective of semantic analysis, for humans and NLP systems alike, is to understand an utterance. Understanding may show itself as an action taken in response or as new information added to a knowledge base. Determining the meaning of a statement is therefore a central problem.

Semantic Analysis in Linguistics

Within linguistics, meaning can be examined at several levels:

- Lexical semantics is the study of the meanings of individual words and fixed word combinations. It frequently begins with dictionary definitions.

- Supralexical (combinational or compositional) semantics is the study of the meanings of phrases and sentences that are created by combining words. To interpret words in context, it examines the relationships between individual words and how their meanings combine into sentence meaning. The meanings of words strongly constrain how they can combine, and many grammatical constructions carry meanings of their own.

Although lexical and supralexical semantics are traditionally treated separately, word-level and grammatical semantics are increasingly seen as interconnected. Semantic analysis considers a sentence’s meaning and intent, as well as the relationships among its words, phrases, and ideas.

Challenges in Semantic Analysis

Ambiguity is a significant challenge in semantic analysis. From a machine’s standpoint, human language can often be interpreted in more than one way. The main types are:

- Lexical ambiguity arises when a word has several meanings. For instance, “bill” can refer to money owed or to a bird’s beak, and “nail” can refer to a metal fastener or to a part of the body.

- Scopal ambiguity arises when expressions such as quantifiers, modals, or negation can apply to (take scope over) different parts of the text.

- Referential ambiguity arises when the intended referent of a pronoun or other referring expression cannot be clearly identified.
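Scopal ambiguity is easier to see against a small model. The following is a minimal sketch, not taken from the article: the sentence "Every student read a book" and the toy sets `students`, `books`, and `read` are invented for illustration. It evaluates the two readings that arise depending on which quantifier takes wide scope.

```python
# Toy model (hypothetical data) for the sentence "Every student read a book".
students = {"ana", "ben"}
books = {"emma", "ulysses"}
read = {("ana", "emma"), ("ben", "ulysses")}  # (reader, book) pairs

# Reading 1: "every" takes wide scope.
# For each student there is some book (possibly a different one) they read.
every_wide = all(any((s, b) in read for b in books) for s in students)

# Reading 2: "a" takes wide scope.
# There is one particular book that every student read.
a_wide = any(all((s, b) in read for s in students) for b in books)

print(every_wide)  # True
print(a_wide)      # False
```

The same sentence is true under one reading and false under the other in this model, which is why a system has to decide on a scope before it can decide on a meaning.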

One essential component of a semantic theory is the resolution of word ambiguity in context. Determining which sense of an ambiguous word is meant in a particular context is known as word sense disambiguation (WSD). In essence, WSD is a classification problem in which each occurrence of a word is assigned a semantic tag according to its context. Unlike part-of-speech tags, semantic tags distinguish between the different meanings of a word. Because labelled data is hard to obtain for every polysemous word, unsupervised and semi-supervised approaches are especially important for WSD.
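One way to picture WSD as classification is a gloss-overlap heuristic in the spirit of the simplified Lesk algorithm, which picks the sense whose definition shares the most words with the context. The sketch below rests on assumptions: the sense inventory `SENSES`, the tags `bill.money` and `bill.beak`, the stopword list, and the helper names are all hypothetical, and a practical system would draw on a full lexical resource and a stronger model.

```python
# Hypothetical mini sense inventory: word -> {sense tag: gloss (definition text)}.
SENSES = {
    "bill": {
        "bill.money": "a statement of money owed for goods or services",
        "bill.beak": "the beak or jaws of a bird",
    }
}

# Small illustrative stopword list (an assumption, not a standard resource).
STOPWORDS = {"a", "an", "the", "of", "or", "for", "to", "its", "with", "in"}

def tokenize(text):
    """Lowercase, split on whitespace, strip punctuation, drop stopwords."""
    words = (w.strip(".,;:!?\"'") for w in text.lower().split())
    return {w for w in words if w and w not in STOPWORDS}

def disambiguate(word, context):
    """Return the sense tag whose gloss shares the most words with the context."""
    context_words = tokenize(context) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context_words & tokenize(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense

print(disambiguate("bill", "The bird cracked the seed with its bill."))  # bill.beak
print(disambiguate("bill", "She paid the bill for the goods in cash."))  # bill.money
```

This knowledge-based heuristic needs no labelled training data, but it depends entirely on the wording of the glosses, so ties and zero-overlap cases are common on real text.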

Semantic analysis frequently requires translating the original utterances into a semantic metalanguage or a comparable representational system. These representations can be expressed abstractly as logical forms. Approaches to semantic representation can be broadly grouped along two lines: lexical versus compositional, and formal versus cognitive.

- Formal approaches, often influenced by philosophical logic, have tended to focus on truth-conditional meaning. Predicate logic representations are one example. Such analyses are often considered too narrow to fully explain everyday language use or to serve many real-world applications.

- Cognitive approaches have become increasingly prevalent under the influence of psychology and cognitive science. The Natural Semantic Metalanguage (NSM) approach, for example, uses semantic explication (reductive paraphrasing) to capture an insider’s perspective on what a speaker is saying. NSM uses notions such as semantic molecules to handle lexical complexity.

- Ontological semantics is an approach that uses ontologies to describe the meanings of lexical items and to build text-meaning representations.
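To make the idea of a logical form concrete, here is a minimal sketch of a predicate-logic style representation. The `Predicate` class and the `to_logical_form` helper are hypothetical names introduced for illustration, and the translation rule handles only three-word "Subject verb Object" sentences, so it should be read as a toy rather than a parser.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Predicate:
    """A predicate-logic atom such as loves(mary, john)."""
    name: str
    args: tuple

    def __str__(self):
        return f"{self.name}({', '.join(self.args)})"

def to_logical_form(sentence):
    """Map a three-word 'Subject verb Object' sentence to a Predicate.

    This toy rule handles only that one pattern and does no real parsing.
    """
    words = sentence.rstrip(".").lower().split()
    if len(words) != 3:
        raise ValueError("expected exactly three words: subject verb object")
    subject, verb, obj = words
    return Predicate(verb, (subject, obj))

form = to_logical_form("Mary loves John.")
print(form)  # loves(mary, john)
```

Once a sentence is in this form, it can be stored in a knowledge base or compared with other facts without going back to the surface wording.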


Elements of Semantic Analysis

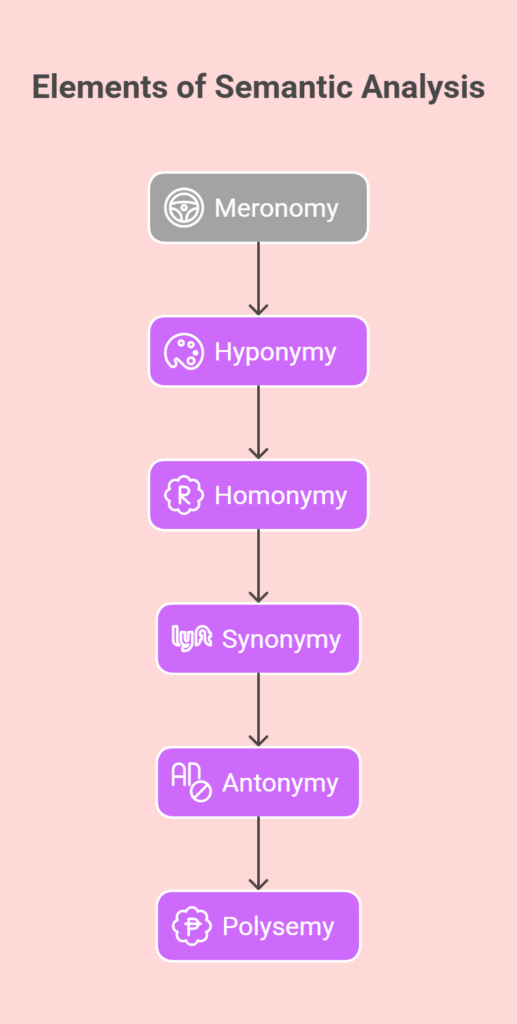

The following lexical relations are important elements of semantic analysis when processing natural language:

- Meronymy is a relationship in which one lexical term denotes a constituent part of a larger entity. For instance, “wheel” is a meronym of “automobile.”

- Hyponymy is the relationship between a specific term and the more generic term it is an instance of. It can be understood through the class-object analogy. Hyponyms of the hypernym “colour” include “grey,” “blue,” “red,” etc.

- Homonymy refers to two or more lexical terms that share the same spelling but have unrelated meanings. For example, “rose” can mean “a flower” or “the past tense of rise.”

- Synonymy is the relationship between two or more lexical terms that may be spelled differently but have the same or a similar meaning. Examples include (Job, Occupation), (Large, Big), and (Stop, Halt).

- Antonymy is the relationship between a pair of lexical terms that have opposing meanings and sit at opposite ends of a semantic axis. Examples include (Day, Night), (Hot, Cold), and (Large, Small).

- Polysemy refers to a lexical term that has several closely related meanings under the same spelling. Unlike homonymy, where the meanings are unrelated, the senses of a polysemous word are linked. For example, “man” can mean “the human species,” “a male human,” or “an adult male human.”
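These relations can be stored as simple lookup tables. The sketch below uses a small hand-built lexicon seeded with the examples above; the table names, the helper functions, and the extra entry "engine" are assumptions made for illustration, and a real system would rely on a lexical database such as WordNet instead.

```python
# Hypothetical hand-built lexicon; a real system would use a lexical database.
HYPERNYM = {"grey": "colour", "blue": "colour", "red": "colour"}  # hyponym -> hypernym
HOLONYM = {"wheel": "automobile", "engine": "automobile"}         # part (meronym) -> whole
SYNONYMS = [{"job", "occupation"}, {"large", "big"}, {"stop", "halt"}]
ANTONYMS = {"day": "night", "hot": "cold", "large": "small"}

def hyponyms_of(term):
    """All specific terms listed under the generic term."""
    return sorted(w for w, h in HYPERNYM.items() if h == term)

def meronyms_of(whole):
    """All parts listed for the given whole."""
    return sorted(p for p, w in HOLONYM.items() if w == whole)

def are_synonyms(a, b):
    """True if both terms appear in the same synonym group."""
    return any(a in group and b in group for group in SYNONYMS)

def are_antonyms(a, b):
    """True if the pair is listed as antonyms, in either order."""
    return ANTONYMS.get(a) == b or ANTONYMS.get(b) == a

print(hyponyms_of("colour"))         # ['blue', 'grey', 'red']
print(meronyms_of("automobile"))     # ['engine', 'wheel']
print(are_synonyms("big", "large"))  # True
print(are_antonyms("cold", "hot"))   # True
```

Homonymy and polysemy are not modelled here, because both require a sense inventory that records several meanings per spelling.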

Semantic Analysis Tasks and Concepts

Any system or application that relies on language understanding must perform some form of semantic analysis. Related tasks and concepts include:

- Semantic parsing is the process of translating natural language into a representation of meaning, such as a logical formula. It is closely associated with computational semantics. Meaning representations must be expressive and unambiguous, must connect language to outside knowledge, and must support inference.

- Semantic role labelling (SRL) identifies the arguments of predicates (typically main verbs) in a sentence and determines the semantic role each argument plays in relation to the predicate. It is also called shallow semantic parsing. SRL draws on semantic resources such as FrameNet and PropBank.

- Relation extraction is the process of determining how linguistic units are connected within a sentence or discourse, for example through grammatical structure. It is related to learning the selectional constraints that verbs place on their arguments.

- Semantic similarity is the measurement of how similar or dissimilar words or passages of text are in terms of context and meaning.

- Distributional semantics is the study of word meaning representations derived from unlabelled data, based on the idea that words with similar meanings are used in similar contexts.
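Distributional semantics and semantic similarity can be illustrated together. The following sketch builds co-occurrence count vectors from a tiny invented corpus and compares words with cosine similarity. The corpus, the window size of 2, and the function names are all assumptions; practical systems use far larger corpora and usually learned dense embeddings.

```python
import math
from collections import Counter, defaultdict

# Tiny invented corpus; real systems learn from large amounts of unlabelled text.
corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the dog",
    "the car needs fuel",
]

def cooccurrence_vectors(sentences, window=2):
    """Count, for each word, the words appearing within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

vectors = cooccurrence_vectors(corpus)
print(cosine(vectors["cat"], vectors["dog"]))   # high: shared contexts
print(cosine(vectors["cat"], vectors["fuel"]))  # 0.0: no shared contexts
```

In this corpus "cat" and "dog" occur next to the same words ("the", "drinks", "chases"), so their vectors point in similar directions, while "cat" and "fuel" share no context words at all.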

Applications of Semantic Analysis

Semantic analysis supports a range of tasks, including:

- Information Retrieval

- Information Extraction

- Text Summarization

- Machine Translation

- Question Answering

- Understanding User Queries and Matching User Requirements

- Dialogue Systems and Human-Computer Interaction

- Web Ontologies and Knowledge Representation Systems

- Sentiment Analysis