Multilayer Perceptron (MLP)

The Multilayer Perceptron (MLP) is a basic neural network architecture that is widely used in natural language processing (NLP) and in machine learning more generally.

What Is an MLP?

An MLP is a neural network with several layers of computation. This distinguishes it from a single-layer perceptron, which has only one computational layer: the output layer. MLPs can be viewed as a generalization of simpler models such as logistic regression and the perceptron.

Architecture

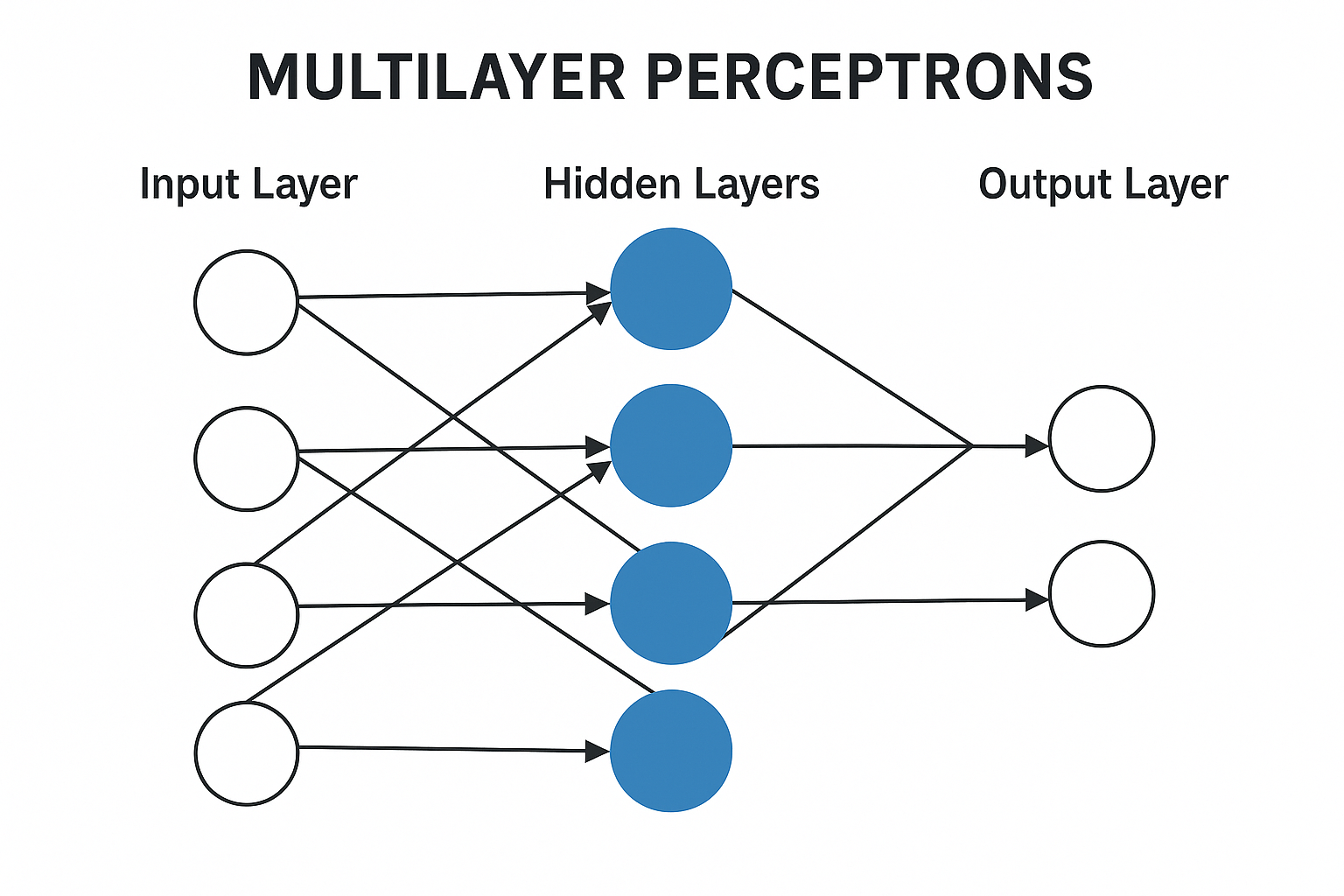

- Layers: An MLP is made up of multiple layers, usually organized into an input layer, one or more hidden layers, and an output layer.

- Input layer: Receives the data, which is usually represented as a vector of real numbers.

- Hidden layers: Lie between the input and output layers. They are called “hidden” because their outputs are intermediate values that do not appear in the network’s final result. An MLP can have any number of hidden layers. Networks with many hidden layers are called “deep networks,” which is where the term “deep learning” comes from.

- Output layer: Produces the network’s prediction. The number and type of output units depend on the task: for example, one unit for binary classification, or several units for multiclass classification (typically one per class) or reconstruction.

- Feed-Forward Structure: Data flows in a single direction, from the input layer through the hidden layers to the output layer. The connections contain no feedback loops or cycles, which is why MLPs are also called feedforward neural networks.

- Units (Neurons): The basic building block of the network is a single computational unit. Each unit takes a set of real-valued inputs and produces a single output. The computation consists of three steps:

- Weighted sum: Multiply each input by its corresponding weight and add up the results. This is the dot product of the weight vector and the input vector.

- Bias: Add a bias term. The bias can be thought of as the weight on an extra input unit whose value is always +1.

- Activation: Apply a non-linear activation function f to the weighted sum plus bias, so the unit outputs y = f(w · x + b). Common non-linearities include the Rectified Linear Unit (ReLU), the sigmoid, and tanh.

- Connections: In the standard MLP, every layer is fully connected: every unit in one layer is connected to every unit in the next layer. This dense connectivity is equivalent to multiplying the output vector of the previous layer by the current layer’s weight matrix (a vector-matrix multiplication).
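The unit computation and fully connected layers described above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the helper names (`unit`, `dense_layer`, `mlp_forward`) and the weight values are hypothetical, chosen only to show the data flow. Each row of a weight matrix holds the incoming weights of one unit, so applying every row to the same input vector is exactly the matrix-vector product mentioned above.

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]  # one row of incoming weights per unit


def relu(z: float) -> float:
    return max(0.0, z)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def unit(weights: Vector, bias: float, inputs: Vector,
         activation: Callable[[float], float]) -> float:
    """One unit: weighted sum (dot product) plus bias, passed through a non-linearity."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def dense_layer(W: Matrix, b: Vector, inputs: Vector,
                activation: Callable[[float], float]) -> Vector:
    """Fully connected layer: every unit sees every input (a matrix-vector product)."""
    return [unit(row, bias, inputs, activation) for row, bias in zip(W, b)]


def mlp_forward(layers, x: Vector) -> Vector:
    """Feedforward pass: input -> hidden layer(s) -> output, with no cycles."""
    h = x
    for W, b, activation in layers:
        h = dense_layer(W, b, h, activation)
    return h


# Hypothetical 2-3-1 network with hand-picked weights (illustration only).
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.2], relu),  # 2 inputs -> 3 hidden
    ([[1.0, -1.0, 0.5]], [0.0], sigmoid),                              # 3 hidden -> 1 output
]
print(mlp_forward(layers, [1.0, 2.0]))
```

The bias-as-weight view can be checked directly: `unit(w, b, x, f)` gives the same result as `unit(w + [b], 0.0, x + [1.0], f)`, because the bias simply becomes the weight on a constant +1 input.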

Functionality and Power

- Overcoming Linear Separability: A key limitation of single-layer perceptrons is that they can only solve linearly separable problems, in which a line (or, in higher dimensions, a hyperplane) can separate the data points of different classes. Many important problems are not linearly separable; the XOR function is the classic example. MLPs overcome this limitation by using hidden layers with non-linear activation functions, which lets them learn non-linear decision boundaries.

- Universal Approximation: An MLP with at least one hidden layer and a non-linear “squashing” activation function (such as sigmoid or tanh) is a universal approximator: given enough hidden units, it can approximate any continuous function on a bounded input domain to any desired precision. This is only an existence result, however. It does not guarantee that a training algorithm will find the right weights, nor does it say how large the hidden layer must be.

- Benefits of Depth: Although a single hidden layer is sufficient for universal approximation in theory, deeper networks (with more layers) can often learn more complex functions and may need fewer units per layer to do so.
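The XOR limitation, and how a single hidden layer removes it, can be checked concretely. The sketch below uses hand-picked (not learned) weights for a 2-2-1 network with ReLU hidden units: h1 = ReLU(x1 + x2), h2 = ReLU(x1 + x2 − 1), and a linear output y = h1 − 2·h2. For contrast, it brute-forces a grid of single-layer perceptrons with a step activation; the grid is an arbitrary choice for illustration, and since XOR is not linearly separable, no weights at all would succeed, on or off the grid.

```python
from itertools import product

# Truth table for XOR: (x1, x2) -> target
XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def relu(z: float) -> float:
    return max(0.0, z)


def xor_mlp(x1: float, x2: float) -> float:
    """2-2-1 MLP with hand-chosen weights (illustrative, not trained)."""
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)  # active for (0,1), (1,0), (1,1)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)  # active only for (1,1)
    return 1.0 * h1 - 2.0 * h2 + 0.0      # linear output unit


def perceptron(w1: float, w2: float, b: float, x1: float, x2: float) -> int:
    """Single-layer perceptron with a step activation."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


# The MLP reproduces XOR exactly.
for (x1, x2), target in XOR_TABLE.items():
    print((x1, x2), "->", xor_mlp(x1, x2), "target:", target)

# Search a grid of weights/biases in [-4, 4] (step 0.5) for a perceptron that fits XOR.
grid = [v / 2 for v in range(-8, 9)]
solutions = [
    (w1, w2, b)
    for w1, w2, b in product(grid, repeat=3)
    if all(perceptron(w1, w2, b, x1, x2) == t for (x1, x2), t in XOR_TABLE.items())
]
print("perceptrons that fit XOR:", len(solutions))  # prints 0
```

The hidden unit h2 carves out the (1, 1) corner so the output unit can subtract it; that second, bent boundary is exactly what a single linear threshold cannot express.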

Learning/Training

- MLPs are usually trained with supervised learning: the network is given example inputs paired with their correct outputs.

- The goal of training is to learn the network’s parameters (weights and biases) so that the network’s output (ŷ) is as close as possible to the true output (y) on the training data. This is formalized as minimizing a loss function that measures the discrepancy between the predicted and true outputs.

- Learning is carried out with gradient-based optimization algorithms, which iteratively adjust the parameters in the direction that reduces the loss.

- Backpropagation is the standard algorithm for computing these gradients in multilayer networks. Working backward from the output layer through the hidden layers, it applies the chain rule of calculus to determine how much each parameter contributes to the total loss. It can be viewed as a form of dynamic programming: the error signals computed for a later layer are reused to compute the gradients of earlier layers, rather than recomputed from scratch.

- Training commonly uses stochastic gradient descent (SGD) or one of its variants, which update the parameters using gradients computed on small subsets of the training data called mini-batches.

- Because non-linear MLPs typically have non-convex optimisation objectives, gradient descent may become trapped in local optima.
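The training loop above (forward pass, loss, backpropagation, mini-batch SGD) can be sketched end to end in plain Python. This is a minimal illustration under several assumptions not stated in the text: the toy dataset is XOR, the network has 4 tanh hidden units and a sigmoid output, the loss is binary cross-entropy, and the learning rate, batch size, and epoch count are arbitrary. Practical code would use a library with automatic differentiation instead of hand-written gradients.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

# Toy training set (assumption for illustration): the XOR function.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
N_IN, N_HID = 2, 4


def init_params():
    """Small random weights, zero biases."""
    return {
        "W1": [[random.uniform(-1.0, 1.0) for _ in range(N_IN)] for _ in range(N_HID)],
        "b1": [0.0] * N_HID,
        "w2": [random.uniform(-1.0, 1.0) for _ in range(N_HID)],
        "b2": 0.0,
    }


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(p, x):
    """Forward pass: tanh hidden layer, sigmoid output. Returns (hidden, y_hat)."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(p["W1"], p["b1"])]
    y_hat = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y_hat


def bce(y_hat, y, eps=1e-12):
    """Binary cross-entropy loss for a single example."""
    return -(y * math.log(y_hat + eps) + (1.0 - y) * math.log(1.0 - y_hat + eps))


def backward(p, x, y):
    """Backpropagation: gradient of the loss w.r.t. every parameter via the chain rule."""
    h, y_hat = forward(p, x)
    d_out = y_hat - y  # dL/dz at the output (sigmoid + cross-entropy simplifies to this)
    grads = {"w2": [d_out * hj for hj in h], "b2": d_out}
    # Reuse d_out to get each hidden unit's error signal instead of recomputing
    # the whole chain from scratch; tanh'(z) = 1 - tanh(z)^2.
    d_hid = [d_out * w * (1.0 - hj * hj) for w, hj in zip(p["w2"], h)]
    grads["W1"] = [[d * xi for xi in x] for d in d_hid]
    grads["b1"] = d_hid
    return grads


def mean_loss(p, data):
    return sum(bce(forward(p, x)[1], y) for x, y in data) / len(data)


def sgd_train(p, data, lr=0.5, epochs=2000, batch_size=2):
    """Mini-batch SGD: shuffle, split into mini-batches, step against the mean gradient."""
    data = list(data)
    for _ in range(epochs):
        random.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            gs = [backward(p, x, y) for x, y in batch]
            m = len(gs)
            for j in range(N_HID):
                for i in range(N_IN):
                    p["W1"][j][i] -= lr * sum(g["W1"][j][i] for g in gs) / m
                p["b1"][j] -= lr * sum(g["b1"][j] for g in gs) / m
                p["w2"][j] -= lr * sum(g["w2"][j] for g in gs) / m
            p["b2"] -= lr * sum(g["b2"] for g in gs) / m
    return p


params = init_params()
loss_before = mean_loss(params, DATA)
sgd_train(params, DATA)
loss_after = mean_loss(params, DATA)
print(f"mean loss before: {loss_before:.4f}, after: {loss_after:.4f}")
print("predictions:", [round(forward(params, x)[1], 3) for x, _ in DATA])
```

Because the objective is non-convex, a different seed or learning rate may converge to a different solution, or stall in a poor local optimum; a finite-difference comparison against `backward` is a cheap way to confirm the hand-written gradients.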

Role in NLP

- MLPs can be applied directly to many NLP tasks that can be framed as classification problems, such as sentiment analysis or text categorization.

- They are especially important as components of more complex neural architectures, such as Convolutional Neural Networks (CNNs), designed for grid-structured data, and Recurrent Neural Networks (RNNs), designed for sequential data.

- Inside each Transformer block, an MLP serves as the feed-forward layer, processing each token’s contextualized embedding independently of the other positions.

- These networks also learn word embeddings, dense vector representations of words, typically in an “embedding layer” at the input.
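The last two points can be illustrated together: an embedding layer maps each word to a dense vector, and a Transformer-style feed-forward layer applies the same two-layer MLP, W2 · ReLU(W1 · x + b1) + b2 in the original Transformer, to every position separately. The sketch below is a hedged illustration, not any library’s implementation: the toy vocabulary, the tiny sizes, and the random initialization are assumptions, and the attention, residual connections, and normalization of a real Transformer block are omitted.

```python
import random

random.seed(0)

D_MODEL, D_FF = 4, 8                    # illustrative sizes; real models are much larger
VOCAB = {"the": 0, "cat": 1, "sat": 2}  # hypothetical toy vocabulary


def rand_matrix(rows, cols):
    """Small random values standing in for learned parameters."""
    return [[random.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


# Embedding layer: one dense vector per word (a lookup table of parameters).
embedding = rand_matrix(len(VOCAB), D_MODEL)

# Feed-forward layer parameters: d_model -> d_ff -> d_model.
W1, b1 = rand_matrix(D_FF, D_MODEL), [0.0] * D_FF
W2, b2 = rand_matrix(D_MODEL, D_FF), [0.0] * D_MODEL


def matvec(W, x, b):
    """Fully connected layer without activation: W · x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def feed_forward(x):
    """Two-layer MLP applied to one token vector: W2 · ReLU(W1 · x + b1) + b2."""
    hidden = [max(0.0, z) for z in matvec(W1, x, b1)]
    return matvec(W2, hidden, b2)


def embed(tokens):
    """Embedding lookup: each word becomes its dense vector."""
    return [embedding[VOCAB[t]] for t in tokens]


tokens = ["the", "cat", "sat"]
vectors = embed(tokens)                       # attention would contextualize these first
outputs = [feed_forward(v) for v in vectors]  # same MLP, applied to each position independently
print(len(outputs), len(outputs[0]))          # prints 3 4
```

Because the feed-forward layer never mixes positions, reordering the input tokens simply reorders its outputs; any interaction between tokens has to come from the attention sublayer.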

In short, Multilayer Perceptrons are powerful, flexible architectures that stack layers of simple non-linear units and, with parameters trained efficiently via backpropagation, learn complex non-linear relationships in data. They are both the theoretical foundation and a basic building block of many modern deep learning models used in natural language processing.