Algorithms and Analysis in Python

One essential component of computer science and a key skill required of contemporary computer engineers is the understanding of algorithms and their analysis. This includes these computational tools’ design, analysis, and application.

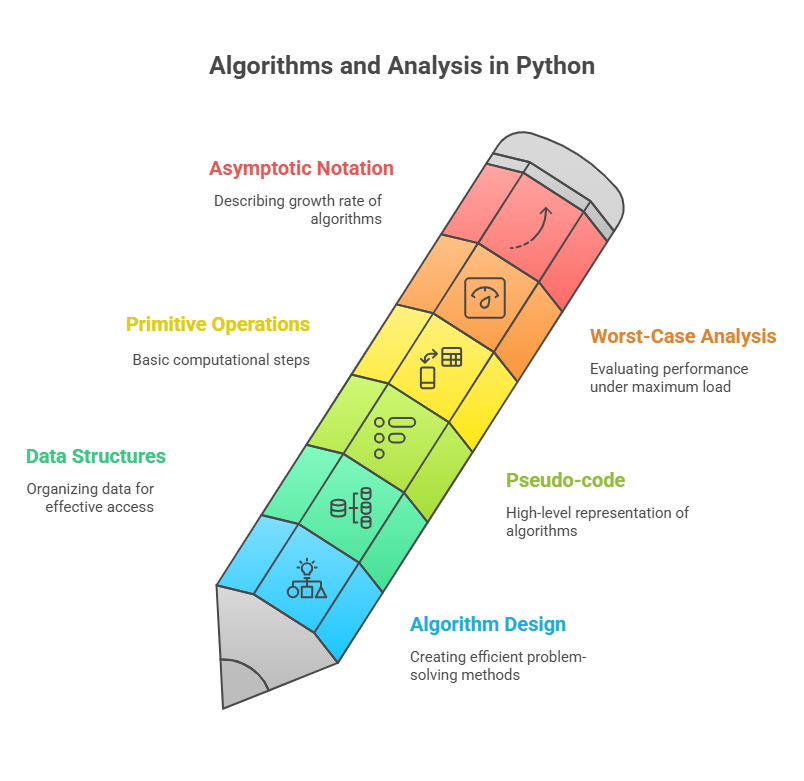

Introduction to Algorithms and Data Structures

Fundamentally, an algorithm is a methodical process for completing a task in a limited amount of time, whereas a data structure is a systematic approach to organise and access data. Effective information gathering, organisation, and retrieval depend on data structures. The instructions needed to solve a problem are provided by algorithms. Both ideas are fundamental to computers, and when applied correctly, they allow for the efficient writing of code to solve common issues.

Although the study of data structures and algorithms is typically taught in the second year of a university computer science program, it is equally pertinent to high school students, professional programmers, and everyone else who wants to learn more than simply a programming language. This area focusses on the substantial influence that proper algorithms and data organisation have on what can be calculated at hand.

Algorithm Design and Analysis

For a data structure or algorithm to be deemed “good,” it must be precisely analysed. Characterising the execution durations of algorithms and data structure operations is the main goal of the analysis tool; space use is also a crucial factor. This analysis should ideally take into consideration all potential inputs and be independent of particular hardware and software settings.

Pseudo-code is frequently used in algorithm design as a prerequisite for implementation. For human readability, pseudo-code is an organised blend of high-level programming structures and natural language that concentrates on the essential concepts while omitting low-level implementation details.

Counting Primitive Operations: Primitive operations are counted in order to analyse running time without experiments. A low-level instruction with a fixed execution duration is equivalent to a primitive operation. Among the examples are:

- Giving an object a unique identity

- Completing an arithmetic task

- Two numbers are compared.

- Using an index to retrieve a single element from a Python list

- Making a function call or coming back from one

The algorithm’s execution time is measured by the total number of primitive operations, or t.

Worst-Case Input Analysis: Some inputs of the same size may cause algorithms to operate more quickly than others. Worst-case analysis is typically chosen, although average-case analysis can be complicated because it requires defining a probability distribution for inputs. The construction of stronger algorithms that function well on all inputs is usually the result of this easier method, which simply requires identifying the worst-case input.

Analysis of Asymptotes and Big-Oh Notation

Using a “big-picture” approach that ignores constant factors, algorithm analysis focusses on the growth rate of running time as a function of input size n. This is where the use of Big-Oh notation is useful.

In algorithm analysis, the seven most frequently used functions are arranged from fastest to slowest growth rate:

Constant (1): If a tail pointer is kept, the operation time (such as adding a node to the tail of a linked list) is independent of the input size.

Logarithm (log n): A common algorithm that divides issues into smaller pieces with each iteration is the logarithm (log n) (e.g., searching a binary search tree).

Linear (n): When it comes to iterating through a list, the running time is directly proportional to the size of the input.

N-Log-N (n log n): Grows considerably more slowly than quadratic but marginally faster than linear. The fastest sorting algorithms usually take n log n time.

Quadratic (n^2): Frequently, nested loops with inner and outer loops carrying out a linear amount of operations give rise to quadratic (n^2).

Cubic (n^3): The cubic function, f(n) = n^3 or O(n^3), assigns ‘n’ the product of ‘n’ multiplied by itself three times in algorithm analysis. The running time of an algorithm with a number of operations proportional to the input size cube. It occurs occasionally, although less often than constant, linear, or quadratic functions.

Exponential (a^n, where a > 1): Almost never regarded as efficient, algorithms with this running time are impractical for all but the smallest inputs.

Ideally, algorithms should execute in linear or n-log-n time, and data structure operations in constant or logarithmic time.

Code Examples and Analysis

Quadratic-Time Algorithm: Computing Prefix Averages Calculating Prefix Averages (prefix average1) using a quadratic-time algorithm Let’s look at an example of calculating a sequence S’s prefix averages. Using an inner loop to add elements, the prefix average1 algorithm calculates each component of result A independently.

Prefix average1 represented in pseudo-code:

Example:

def prefix_average1(S):

"""

Calculates the prefix averages of a sequence using a nested loop.

Input:

S: A sequence (list or tuple) of numbers.

Output:

A: A list where A[j] is the average of S[0] to S[j].

"""

n = len(S)

A = [0] * n # Create a new list of n zeros to store the averages

# Outer loop iterates through each element of S to compute its prefix average

for j in range(n):

total = 0 # Initialize total for the current prefix sum

# Inner loop sums elements from S[0] to S[j]

for i in range(j + 1): # Loop from 0 up to and including j

total = total + S[i]

A[j] = total / (j + 1) # Calculate the average and store it

return A

# Example Usage:

if _name_== "_main_":

# Test with a list of integers

sequence1 = [1, 2, 3, 4, 5]

averages1 = prefix_average1(sequence1)

print(f"Original Sequence 1: {sequence1}")

print(f"Prefix Averages 1: {averages1}\n")

# Test with a list of floating-point numbers

sequence2 = [10.0, 20.0, 30.0, 40.0]

averages2 = prefix_average1(sequence2)

print(f"Original Sequence 2: {sequence2}")

print(f"Prefix Averages 2: {averages2}\n")

# Test with a single element sequence

sequence3 = [7]

averages3 = prefix_average1(sequence3)

print(f"Original Sequence 3: {sequence3}")

print(f"Prefix Averages 3: {averages3}\n")

# Test with an empty sequence

sequence4 = []

averages4 = prefix_average1(sequence4)

print(f"Original Sequence 4: {sequence4}")

print(f"Prefix Averages 4: {averages4}\n")Output:

Original Sequence 1: [1, 2, 3, 4, 5]

Prefix Averages 1: [1.0, 1.5, 2.0, 2.5, 3.0]

Original Sequence 2: [10.0, 20.0, 30.0, 40.0]

Prefix Averages 2: [10.0, 15.0, 20.0, 25.0]

Original Sequence 3: [7]

Prefix Averages 3: [7.0]

Original Sequence 4: []

Prefix Averages 4: []Analysis:

- It takes O(1) time when n = len(S).

- A = * n: This takes O(n) time to create and initialise a list of length n.

Nestled Loops

- From j=0 to n-1, the outer loop, which is controlled by j, runs n times.

- O(n) time is contributed by the n-time execution of total = 0 and A[j] = total / (j+1) inside the outer loop.

- For any given j, the inner loop (controlled by i) iterates j+1 times (from i=0 to j).

- The inner loop’s actions are time-constant. Thus, the inner loop takes O(j) time for every j.

Total Time Calculation: The sum of O(j) for j between 0 and n-1 is the total time for the nested loops. This adds up to 1 + 2 + 3 +… + n, or n(n+1)/2. It reduces to O(n^2).

Overall: Prefix average1 runs in O(n^2) time since the nested loops take up the majority of its operating time. The slicing and summing still take O(j+1) time for each j, even when using Python built-in sum(S[0:j+1]) (as in prefixaverage2), which results in the same O(n^2) asymptotic complexity.

Logarithmic-Time Algorithm: Binary Search Algorithm in Logarithmic Time A traditional recursive approach for finding a target value quickly in a sorted sequence of n elements is binary search. In comparison to sequential search, it is substantially more efficient.

Analysis:

- Binary search operates in O(log n) time as opposed to O(n) time for sequential search. The fact that log n is only roughly 30 for an input size of one billion is a significant gain.

- The divide-and-conquer approach is efficient because it quickly finds the element or establishes its absence by halving the search space in each phase.

Recursive Algorithm

The Factorial Function A well-known mathematical function with a naturally recursive definition is the factorial function (n!). Usually, a recursive algorithm consists of two primary components:

Base cases: A non-recursive condition or circumstances that eventually cause the recursive calls to stop. The basis instances for factorial are 0! = 1 and 1! = 1.

Recursive case: A base case is approached by one or more recursive calls. Since n! = n * (n-1)! for factorial is the step that is recursive.

The process of analysing recursive algorithms entails adding up all of the actions carried out during each function activation.

Conclusion

Different from simply learning a programming language, the foundation of computer science is an understanding of data structures and algorithms. The book “Data Structures & Algorithms in Python” offers a hands-on introduction to these ideas, explaining how to put basic data structures into practice and evaluating their suitability for different use cases and performance. In addition to teaching implementation, the objective is to educate the process of selecting the best data structures and algorithms and identifying potential failure points.