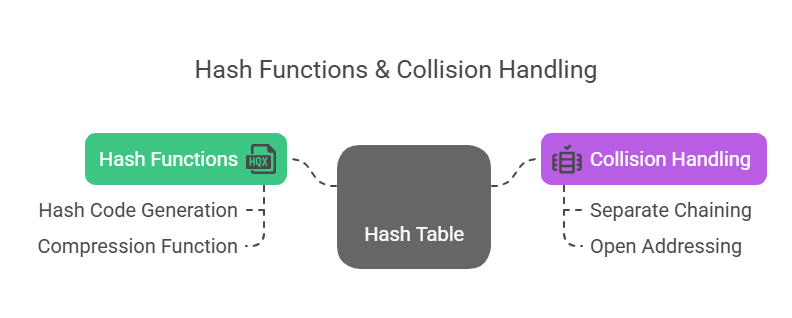

Hash Functions and Collision Handling

For some activities, hash tables a basic data structure made for effective data storage and retrieval often provide benefits over other structures like arrays. Fundamentally, hash tables use a procedure known as hashing to associate keys with certain positions, or “buckets,” in an underlying array.

Hash Functions

A hash function is a crucial part of a hash table because it transforms an arbitrary key into an integer index (also known as a “hash address”) that falls inside the array of the hash table. A good hash function should be quick and simple to calculate and should minimise collisions, which occur when distinct keys provide the same hash address.

A hash function evaluation usually consists of two primary steps:

Hash Code Generation: The initial step, Hash Code Generation, transforms a key k into an integer hash code, which need not be non-negative or even fall inside the final index range. Making ensuring that various keys ideally generate unique hash codes in order to prevent early collisions is the main objective here.

- Directly interpreting the bits as an integer is a straightforward method for data types whose bit representations are no longer than the hash code. If keys differ only in these ignored bits, this could result in more collisions. However, for longer representations (such as 64-bit floating points for 32-bit hash codes), this might entail abandoning information.

- When dealing with variable-length structures, such as character strings or tuples, where the order of members is important, polynomial hash codes are especially helpful. This approach chooses a non-zero constant a (not equal to 1) and uses the components of the object as coefficients to compute the hash code as a polynomial, as opposed to using simple summation or exclusive-OR.

- This polynomial can be computed effectively using Horner’s rule. Each component’s effect is dispersed throughout the hash code with the aid of this method. Constants such as 33, 37, 39, and 41 have been empirically demonstrated to produce relatively few collisions for English words.

- A variation known as a “cyclic-shift hash code” substitutes a cyclic bit shift of a partial sum for multiplication by a. The bits of the calculation are essentially varied by this operation, which is not arithmetic. For this, bitwise operators << and >> can be used in Python implementations, with careful truncation to the necessary bit length (e.g., 32-bit integers). The shift amount selection is important and has a big influence on accident rates.

Compression Function: A compression function converts the generated integer hash code into the bucket array’s valid index range [0, N-1], where N is the table size. Collisions for the specified set of hash codes are further reduced by a decent compression algorithm.

- A straightforward compression algorithm called the Division Method converts an integer i to i mod N. To help disperse the hashed values and lower the possibility of recurring patterns causing collisions, N is suggested as a prime number.

- An integer i can be mapped to [(ai + b) mod p] mod N using the more complex Multiply-Add-and-Divide (MAD) Method. The bucket array size is N, a and b are randomly selected integers, and p is a prime number greater than N.

- By removing recurring patterns from integer keys, this technique seeks to get closer to a “good” hash function where collisions happen with a probability of 1/N.

Example:

These concepts could be combined in a hash function that is part of a basic class for hash table implementations:

class HashTableChaining:

def __init__(self, size):

self.size = size

self.table = [[] for _ in range(size)]

def hash_function(self, key):

return hash(key) % self.size

def insert(self, key, value):

index = self.hash_function(key)

for kv_pair in self.table[index]:

if kv_pair[0] == key:

kv_pair[1] = value

return

self.table[index].append([key, value])

def search(self, key):

index = self.hash_function(key)

for kv_pair in self.table[index]:

if kv_pair[0] == key:

return kv_pair[1]

return None

def display(self):

for i, bucket in enumerate(self.table):

print(f"Index {i}: {bucket}")

# Example Usage

ht = HashTableChaining(5)

ht.insert("apple", 10)

ht.insert("banana", 20)

ht.insert("grape", 30)

ht.insert("apple", 40)

ht.display()

print("Search 'apple':", ht.search("apple"))Output:

Index 0: [['apple', 40]]

Index 1: []

Index 2: []

Index 3: [['banana', 20], ['grape', 30]]

Index 4: []

Search 'apple': 40Hash Map Base’s hash function illustrates this two-step process. It compresses using len(self.table), self.scale, self.shift, and self.prime after producing the hash code with Python hash() method. The base class resizes to maintain load factor (entry count / table capacity).

Collision Handling

A collision happens when the hash value generated by two different keys is the same. Because a hash table maps keys to array indices directly, collisions make it impossible to just put an item into A[h(k)] if it’s already taken. Schemes for addressing collisions are used to address this.

Two main methods for resolving collisions are:

Separate Chaining: With separate chaining, every bucket A[j] stores a secondary container, either a linked list or a tiny map instance, to hold all of the items that hash to index j.

- Inserting an item at the determined hash index adds it to the list.

- Following the hash function’s bucket selection, the list inside that bucket is traversed to find an item.

- This strategy is simple and successful if bucket lists are kept low. The ChainHashMap class utilises an UnsortedTableMap for each bucket, likely a list.

- The average chain length in a chaining hash table affects insertion, search, and deletion efficiency. Short chains make average-case performance close to O(1) if the hash algorithm efficiently distributes keys. The worst case is O(N), where N is the number of items, if all keys hash to the same bucket. Performance is best achieved when the load factor is kept below 0.9.

Open Addressing: In the event of a collision, open addressing techniques locate a different unoccupied space inside the main hash table array itself rather than relying on additional containers. Space is saved because each bucket doesn’t need a framework.

- Simple open addressing method linear probing tries A[(j+1) mod N], A[(j+2) mod N], and so on if bucket A[j] is occupied to discover an empty bucket.

- Primary clustering, a major drawback of linear probing, is the formation of consecutive sequences of occupied cells, which significantly slows down searches, particularly when more than half the table is full.

- Linear probing for collision resolution is implemented by the ProbeHashMap class. Previously erased places are marked by a unique AVAIL sentinel, which prevents searches from ending too soon.

- Quadratic probing is an additional open addressing technique that successively tries buckets A[(h(k) + i^2) mod N] for i = 0, 1, 2,…. in an effort to avoid primary clustering. Keys that hash to the same initial location may follow the same probing sequence, a phenomenon known as secondary clustering, even while primary clustering is avoided. If N is prime and the table is less than half full, quadratic probing will always discover an empty slot.

- Using an additional hash function h'(k) to calculate the step size for probing, double hashing aims to further minimise clustering: A[(f(i)) + h(k)] mod N. As a result, a pseudo-random probe sequence that is dependent on the original hash code is produced.

- The load factor affects open addressing techniques’ effectiveness as well. The load factor is kept at or below 0.5 for ProbeHashMap, and when it is surpassed, the table is resized.

If open addressing is not properly managed, it may result in clustering problems even though it may save space. With a solid hash function, separate chaining typically provides better performance guarantees in practice since collisions only impact the short list inside a single bucket rather than spreading throughout the larger array.