Sorting Algorithms in DSA

In computer science and programming, comparing various sorting algorithms is essential since algorithm selection has a big influence on a program’s productivity and consumption. Programmers can choose the best data structures and algorithms for particular programming jobs and even anticipate when an algorithm might fail by being aware of their advantages and disadvantages. Stability, space complexity, and temporal complexity are important comparative points.

Overview and Comparison of Sorting Algorithms

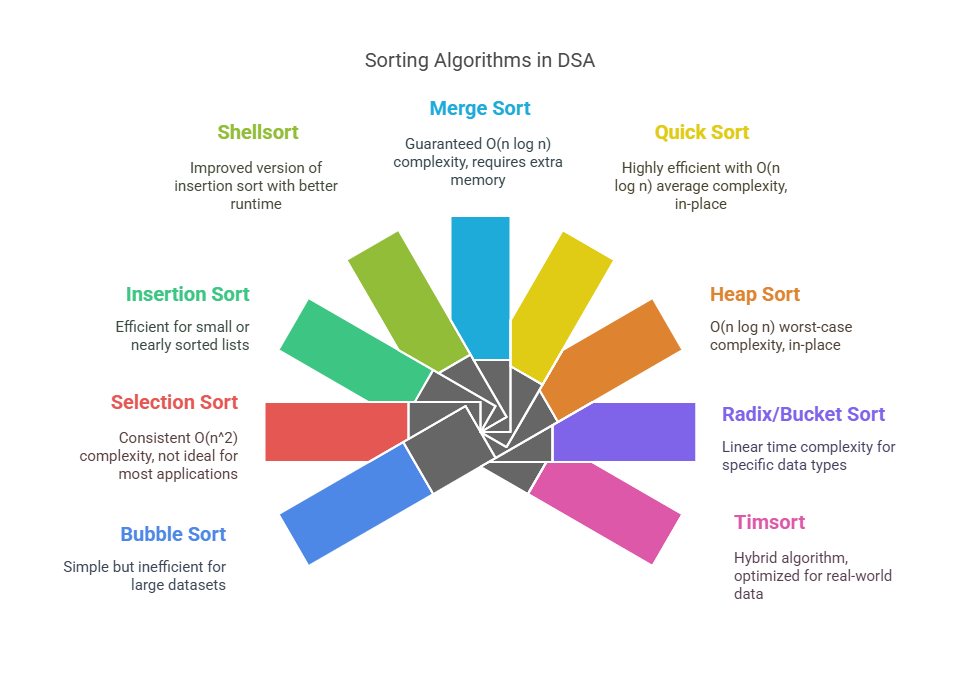

There are numerous sorting algorithms, and each has special qualities:

Bubble Sort: Bubble Sort, often known as sinking sort, is a straightforward sorting algorithm. Stepping over a list repeatedly, it compares each pair of adjacent items and switches them if they are out of order. This procedure keeps on until the list is sorted after a full pass through it is finished without any swaps. Two nested loops can be used to build the technique, which efficiently compares each item in the list with every other item. It goes over the list n-1 times, comparing the current and subsequent elements and switching out any that are out of order.

Selection Sort: The least (or largest) piece from the unsorted section is repeatedly found and placed at the beginning using selection sort. It is a bad option for the majority of applications because it always has an O(n^2) time complexity.

Insertion Sort: One item at a time, this algorithm creates the final sorted list. For tiny sequences (less than 50 elements, for example) or sequences that are “almost” sorted, with few inversions, it is easy to program and effective. However, it can involve many data copies and has an average and worst-case complexity of O(n^2).

Shellsort: A better variant of insertion sort, Shellsort compares entries separated by an interval and narrows the gap. This faster method moves things to their approximate locations than insertion sorting. O(n log^2 n) typically leads to better runtime complexity than O(n^2).

Merge Sort: Recursively dividing a list into two sublists, merge sort sorts them, and combines them. Comparison-based sorting benefits from its guaranteed worst-case running time of O(n log n). Nevertheless, it is challenging to implement in-place (without requiring a large amount of extra memory for temporary arrays).

Quick Sort: Quick Sort is another divide-and-conquer algorithm that divides the ‘pivot’ element into two sub-arrays: those that are greater than the pivot and those that are less than the pivot. These sub-arrays are then sorted recursively. With an average-case time complexity of O(n log n), Quick Sort is incredibly effective. However, if the pivot selection is constantly subpar (e.g., always selecting the lowest or largest element), its worst-case scenario may decline to O(n^2). Implemented “in-place,” it requires only the input array and little auxiliary memory.

Heap Sort: A binary heap data structure is used to sort elements in this technique. It repeatedly eliminates the maximum (or minimum) element from the input array after establishing a max- or min-heap. Heap Sort is best for comparison-based sorts since it has O(n log n) worst-case execution time. It can be applied in-place as well. It is typically not a stable kind, though.

Radix Sort and Bucket Sort: Under some circumstances, such as when the keys are integers falling within a specified range, the non-comparison-based sorting algorithms Radix Sort and Bucket Sort can reach linear time complexity O(n + N). Using their keys, bucket sort divides the items into “buckets” and then gathers them in order. Radix sort sorts by individual digits (or components) by applying bucket sort repeatedly. These kinds are frequently stable.

Timsort: Timsort, a hybrid sorting algorithm based on merge sort and insertion sort, is used by Python built-in sort() method and sorted() function. It combines the best features of both: the effectiveness of insertion sort for little runs within the data and the speed of merge sort for large datasets. It is extremely optimised and provides O(n log n) performance.

In general, there is no one “best” sorting algorithm; instead, the selection is based on the characteristics of the application, such as memory limitations, stability requirements, and efficiency requirements. Because of their O(n log n) performance, algorithms like heapsort, mergesort, and quicksort are recommended for huge datasets, whereas insertion sort may be quicker for extremely short or almost sorted lists.

Internal and External Sorting

Additionally, sorting algorithms are divided into groups according to the location of the data during the sorting process:

Internal Sorting: Sorting algorithms that fit all of the data to be sorted completely inside the computer’s main memory (RAM) are referred to as internal sorting algorithms. Since they work with material that has been loaded into RAM, the majority of the aforementioned algorithms Bubble Sort, Selection Sort, Insertion Sort, Quick Sort, Heap Sort, Shellsort, Radix Sort, and Timsort are usually regarded as internal sorting algorithms.

Example:

def quicksort(arr):

if len(arr) <= 1:

return arr

pivot = arr[len(arr) // 2]

left = [x for x in arr if x < pivot]

middle = [x for x in arr if x == pivot]

right = [x for x in arr if x > pivot]

return quicksort(left) + middle + quicksort(right)

# Example Usage

arr = [3, 6, 8, 10, 1, 2, 1]

print("Sorted Array:", quicksort(arr))Output:

Sorted Array: [1, 1, 2, 3, 6, 8, 10]External Sorting: When the volume of data that needs to be sorted is too big to fit in main memory and needs to be stored on external storage, like a hard drive, external sorting is required. Since disc transfers (I/O operations) are far slower than internal memory operations, external sorting algorithms are primarily concerned with minimising the number of these operations. A variant of Multiway Merge-Sort is a popular method for external sorting.

Example:

def merge_chunks(chunk1, chunk2):

result = []

i = j = 0

while i < len(chunk1) and j < len(chunk2):

if chunk1[i] < chunk2[j]:

result.append(chunk1[i])

i += 1

else:

result.append(chunk2[j])

j += 1

result.extend(chunk1[i:])

result.extend(chunk2[j:])

return result

# Example of merging sorted chunks from two files

chunk1 = [1, 4, 7]

chunk2 = [2, 5, 6]

print("Merged Array:", merge_chunks(chunk1, chunk2))Output:

Merged Array: [1, 2, 4, 5, 6, 7]In order to minimise the number of merge levels, this technique splits the data into smaller subsets that can fit in main memory, sorts each subset internally, and then merges these sorted subsets (also known as “runs”) in a multiway fashion, repeatedly joining numerous sorted lists at once.

This is a conceptual Python depiction of the Insertion Sort algorithm that shows the fundamental ideas behind it. This algorithm’s pseudocode and description.

The for loop iterates across the list in this insertion sort function, treating the elements that have been handled thus far as a sorted sublist. The inner while loop moves larger elements one place to the right for each current value in order to determine its proper location within this sorted sublist. Current value is placed when the proper location has been determined, where it is no longer less than the element to its left. This iterative procedure guarantees that a greater percentage of the list is sorted following each outer loop pass.