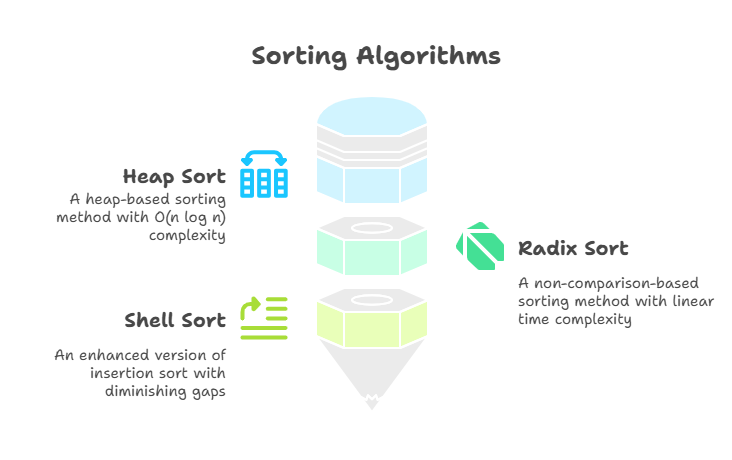

Sorting Algorithms

This section explains Heap Sort, Radix Sort, and Shell Sort, along with their running time analyses. The source material provides pseudo-code for Radix Sort and refers to Python implementations of Heap Sort and Shell Sort, but its excerpts do not include executable code for the latter two.

Heap Sort

Concept and Operation: Heap Sort is a sorting method built on the heap data structure. Under the “heap-order property,” each node’s key is less than or equal to its children’s keys in a min-heap, or greater than or equal to them in a max-heap. Heap Sort typically uses a priority queue, which a heap implements efficiently.
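To make the heap-order property concrete, here is a small sketch that checks it for a min-heap stored in a Python list. It assumes the usual array layout in which the children of index i sit at indices 2i + 1 and 2i + 2; the function name `is_min_heap` is illustrative, not from the source.

```python
def is_min_heap(a):
    """Return True if list `a` satisfies the min-heap order property.

    Assumes the standard array layout: the children of index i
    are at indices 2*i + 1 and 2*i + 2.
    """
    n = len(a)
    for i in range(n):
        for child in (2 * i + 1, 2 * i + 2):
            # A parent's key must not exceed either child's key.
            if child < n and a[i] > a[child]:
                return False
    return True


print(is_min_heap([1, 3, 2, 7, 4]))  # True
print(is_min_heap([5, 3, 8]))        # False, because 5 > 3
```

For a max-heap, the comparison is simply reversed.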

Heap Sort proceeds in two main phases:

Phase 1 (Building the Heap): A heap (priority queue) is built by inserting every element of the input collection. If the collection is an array, the heap can be built inside the array itself, so the sort can be done in place. This in-place approach typically uses a heapify() routine.

Phase 2 (Extracting Sorted Elements): The remove-min (or remove-max) operation is applied repeatedly to the min-heap (or max-heap); each call returns the smallest (or largest) remaining element. The extracted elements are placed back into the array in sorted order. After each removal, down-heap bubbling (sift-down) restores the heap-order property.
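Both phases can be sketched in place as follows, assuming a max-heap stored in a Python list so that repeatedly removing the maximum fills the array from the back. The names `sift_down` and `heap_sort` are illustrative; Phase 1 here uses bottom-up heapify rather than n separate insertions.

```python
def sift_down(a, start, end):
    """Move a[start] down until the max-heap property holds in a[start:end]."""
    root = start
    while True:
        child = 2 * root + 1          # left child
        if child >= end:
            break
        # Pick the larger of the two children.
        if child + 1 < end and a[child + 1] > a[child]:
            child += 1
        if a[root] >= a[child]:
            break                     # heap order restored
        a[root], a[child] = a[child], a[root]
        root = child


def heap_sort(a):
    """Sort list `a` in place in ascending order using a max-heap."""
    n = len(a)
    # Phase 1: build a max-heap inside the array (bottom-up heapify).
    for start in range(n // 2 - 1, -1, -1):
        sift_down(a, start, n)
    # Phase 2: swap the maximum to the end of the unsorted region,
    # then restore the heap on the shrunken prefix.
    for end in range(n - 1, 0, -1):
        a[0], a[end] = a[end], a[0]
        sift_down(a, 0, end)


data = [12, 3, 17, 8, 34, 25, 1]
heap_sort(data)
print(data)  # [1, 3, 8, 12, 17, 25, 34]
```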

Running Time Analysis

Heap Sort’s efficiency follows from the logarithmic time complexity of heap operations.

Phase 1 (Building the Heap): Each insertion into the heap takes O(log i) time, where i is the number of elements in the heap at that moment. Summed over all n elements, this phase takes O(n log n) time. The bottom-up heap construction approach reduces this step to O(n) time.
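Both Phase 1 bounds can be checked with a short calculation. Incremental insertion costs a sum of logarithms. In bottom-up construction, a node at height h is sifted down at cost O(h), and a heap of n nodes has at most ⌈n/2^(h+1)⌉ nodes of height h; the series ∑ h/2^h converges to 2.

```latex
\sum_{i=1}^{n} O(\log i) = O(\log n!) = O(n \log n)

\sum_{h=0}^{\lfloor \log_2 n \rfloor} \left\lceil \frac{n}{2^{h+1}} \right\rceil O(h)
  \le O\!\left( \frac{n}{2} \sum_{h=0}^{\infty} \frac{h}{2^{h}} + \log_2^2 n \right)
  = O\!\left( n + \log^2 n \right) = O(n)
```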

Phase 2 (Extracting Elements): The j-th remove-min (or remove-max) operation takes O(log(n - j + 1)) time, since n - j + 1 elements remain in the heap at that point. Summed over all n elements, this phase also takes O(n log n) time.
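Substituting k = n - j + 1 shows why Phase 2 is O(n log n): as j runs from 1 to n, k runs from n down to 1, so the phase costs the same sum of logarithms as incremental insertion, and each of its n terms is at most log n.

```latex
\sum_{j=1}^{n} O\bigl(\log(n - j + 1)\bigr)
  = \sum_{k=1}^{n} O(\log k)
  = O(\log n!)
  \le O(n \log n)
```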

Overall Efficiency: Assuming that comparing two elements takes O(1) time, Heap Sort sorts n elements in O(n log n) time in the worst case. This is asymptotically optimal for comparison-based sorting, which needs Ω(n log n) comparisons in the worst case. Heap Sort can also be implemented in place, which makes it space-efficient. However, a conventional implementation is not stable: its swaps can move elements with equal keys out of their original relative order.
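A small sketch of this instability: the key-based variant below (a hypothetical helper named `heap_sort_by_key`) sorts records by their first field only. Two records with equal keys come out in reversed order, whereas Python’s built-in `sorted()`, which is stable, keeps them in input order.

```python
def heap_sort_by_key(items, key):
    """In-place max-heap sort of `items`, comparing only key(item)."""
    def sift_down(start, end):
        root = start
        while 2 * root + 1 < end:
            child = 2 * root + 1
            # Choose the child with the larger key.
            if child + 1 < end and key(items[child + 1]) > key(items[child]):
                child += 1
            if key(items[root]) >= key(items[child]):
                return
            items[root], items[child] = items[child], items[root]
            root = child

    n = len(items)
    for start in range(n // 2 - 1, -1, -1):   # build the max-heap
        sift_down(start, n)
    for end in range(n - 1, 0, -1):           # move the max to the back
        items[0], items[end] = items[end], items[0]
        sift_down(0, end)


records = [(1, "a"), (1, "b")]                # equal keys; "a" comes first
by_heap = list(records)
heap_sort_by_key(by_heap, key=lambda r: r[0])
by_builtin = sorted(records, key=lambda r: r[0])  # sorted() is stable

print(by_heap)     # [(1, 'b'), (1, 'a')]: equal keys swapped
print(by_builtin)  # [(1, 'a'), (1, 'b')]: input order kept
```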

The source mentions heapify() and heapsort() subroutines in Python and the heapq module for heap-based functionality, but the excerpts do not include a runnable Python implementation of heapsort().
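For comparison, a short sketch built on the standard library’s heapq module. `heapq.heapify` and `heapq.heappop` are real heapq functions; the wrapper name `heapsort` is only illustrative. Unlike the in-place approach, it returns a new list and therefore uses O(n) extra space.

```python
import heapq


def heapsort(iterable):
    """Return a new ascending list using the standard-library heapq module.

    Phase 1: heapq.heapify turns the copied list into a min-heap in O(n).
    Phase 2: n calls to heapq.heappop each remove the current minimum in O(log n).
    """
    heap = list(iterable)      # copy so the input is left unchanged
    heapq.heapify(heap)
    return [heapq.heappop(heap) for _ in range(len(heap))]


print(heapsort([5, 1, 4, 1, 3]))  # [1, 1, 3, 4, 5]
```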

Radix Sort

Concept and Operation: Radix Sort is not a comparison-based sorting algorithm: it does not determine the sorted order by comparing elements with each other. Instead, it sorts items by processing the individual “digits” (components) of their keys. Items are grouped into “buckets,” one per possible digit value (for example, 0-9 for base-10 integers), and these buckets are usually implemented as queues.
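A minimal sketch of one bucket pass, assuming non-negative base-10 integer keys and using `collections.deque` as the queue for each bucket. The function name `bucket_pass` and its `exp` parameter (the place value of the current digit) are illustrative.

```python
from collections import deque


def bucket_pass(values, exp):
    """One radix pass: distribute non-negative ints into 10 queues by the
    digit at place value `exp` (1, 10, 100, ...), then collect them."""
    buckets = [deque() for _ in range(10)]   # one FIFO queue per digit 0-9
    for v in values:
        digit = (v // exp) % 10              # isolate the current digit
        buckets[digit].append(v)             # enqueue at the back
    result = []
    for q in buckets:                        # empty buckets 0..9 in order
        while q:
            result.append(q.popleft())       # dequeue from the front (FIFO)
    return result


print(bucket_pass([170, 45, 75, 90, 802, 24, 2, 66], 1))
# [170, 90, 802, 2, 24, 45, 75, 66]
```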

Typically, the process moves from the least significant digit to the most significant one in repeated passes:

Distribution: Each element is placed at the back of the bucket (queue) that corresponds to the value of its current digit. In the first pass, this is the least significant digit.

Collection: After distributing all elements, buckets are emptied sequentially (from bucket 0 to bucket 9) and concatenated to generate a partially sorted list.

Iteration: The distribution and collection steps are repeated for each subsequent digit until every digit of the largest number has been processed. For this multi-pass approach to work, each pass must be stable: elements with the same current digit must keep the relative order established by earlier passes, which is why stability is so important in Radix Sort.
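The three steps combine into a full least-significant-digit sort. This sketch assumes non-negative integers and records the list after every pass so the partially sorted intermediate orders are visible; the name `radix_sort_lsd` is hypothetical. FIFO queues are what make each pass stable.

```python
from collections import deque


def radix_sort_lsd(values):
    """LSD radix sort for non-negative ints with 10 FIFO queue buckets.

    Returns (sorted_list, passes), where passes[k] is the list after the
    (k+1)-th distribute-and-collect pass.
    """
    if not values:
        return [], []
    result = list(values)
    passes = []
    largest = max(values)
    exp = 1  # place value of the current digit: 1, 10, 100, ...
    while largest // exp > 0:
        buckets = [deque() for _ in range(10)]
        # Distribution: enqueue each element by its current digit,
        # visiting elements in the order left by the previous pass.
        for v in result:
            buckets[(v // exp) % 10].append(v)
        # Collection: empty buckets 0..9 in order (FIFO keeps it stable).
        result = [v for q in buckets for v in q]
        passes.append(result)
        exp *= 10
    return result, passes


sorted_vals, passes = radix_sort_lsd([170, 45, 75, 90, 802, 24, 2, 66])
for i, p in enumerate(passes, start=1):
    print(f"after pass {i}: {p}")
# after pass 1: [170, 90, 802, 2, 24, 45, 75, 66]
# after pass 2: [802, 2, 24, 45, 66, 170, 75, 90]
# after pass 3: [2, 24, 45, 66, 75, 90, 170, 802]
```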

Running Time Analysis

Under certain conditions, Radix Sort runs in linear time, which can make it faster than comparison-based sorts such as Heap Sort and Quick Sort.

- Radix Sort can sort n keys in O(n + N) time if they are integers in the range [0, N-1].

- If the keys are d-tuples whose components are integers in the range [0, N-1], the running time is O(d(n + N)). If d is a constant and N is O(n), this is O(n), so Radix Sort may outperform O(n log n) methods.
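The d-tuple case can be sketched as d stable bucket passes, from the last component to the first, each costing O(n + N). The function name and its `d` and `N` parameters are illustrative assumptions; plain lists serve as buckets because only appending and in-order traversal are needed.

```python
def radix_sort_tuples(keys, d, N):
    """Sort d-tuples whose components are ints in [0, N-1] lexicographically.

    Performs d stable bucket passes, from the last component to the first.
    Each pass costs O(n + N), so the total is O(d(n + N)).
    """
    result = list(keys)
    for position in range(d - 1, -1, -1):
        buckets = [[] for _ in range(N)]        # one bucket per component value
        for t in result:                        # stable: preserves current order
            buckets[t[position]].append(t)
        result = [t for b in buckets for t in b]
    return result


pairs = [(2, 1), (0, 3), (2, 0), (1, 3), (0, 1)]
print(radix_sort_tuples(pairs, d=2, N=4))
# [(0, 1), (0, 3), (1, 3), (2, 0), (2, 1)]
```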

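Example:

```python
def counting_sort(arr, exp):
    """Stable counting sort of arr by the decimal digit at place value exp."""
    n = len(arr)
    output = [0] * n
    count = [0] * 10

    # Count occurrences of each digit (0-9)
    for i in arr:
        index = (i // exp) % 10
        count[index] += 1

    # Turn counts into end positions (prefix sums)
    for i in range(1, 10):
        count[i] += count[i - 1]

    # Build the output array; iterating backwards keeps the sort stable
    for i in range(n - 1, -1, -1):
        index = (arr[i] // exp) % 10
        output[count[index] - 1] = arr[i]
        count[index] -= 1

    # Copy output back to arr
    for i in range(n):
        arr[i] = output[i]


def radix_sort(arr):
    """LSD radix sort for a list of non-negative integers, in place."""
    if not arr:
        return  # nothing to sort; max() would fail on an empty list
    max_num = max(arr)
    exp = 1
    # One pass per digit of the largest number
    while max_num // exp > 0:
        counting_sort(arr, exp)
        exp *= 10


# Example usage
array = [170, 45, 75, 90, 802, 24, 2, 66]
radix_sort(array)
print("Radix Sort Result:", array)
```

Output:

```
Radix Sort Result: [2, 24, 45, 66, 75, 90, 170, 802]
```

The number of passes equals the number of digits in the largest key (called maxKeySize in queue-based pseudo-code for Radix Sort). In each pass, (i // exp) % 10 isolates the current digit, the role played by indexOfKey in that pseudo-code. Where the pseudo-code divides items into 10 queues (digits 0-9) and then reunites the queues into the updated list, counting_sort achieves the same stable regrouping with a count array and an output array.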

Shell Sort

Concept and Operation: Shell Sort, named after Donald L. Shell, is an improved version of insertion sort. It allows comparisons and swaps between elements that are far apart, not only between adjacent ones. This moves elements quickly toward their approximate final positions, which reduces the number of shifts needed in later passes.
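One rough way to see the benefit is to count element shifts. The sketch below (hypothetical helper names) counts shifts for plain insertion sort and for Shell Sort on the same reversed list; the Shell Sort here assumes Shell’s original halving gaps (n//2, n//4, …, 1), which is only one possible gap sequence.

```python
def insertion_sort_shifts(a):
    """Plain insertion sort on a copy; returns (sorted_list, number_of_shifts)."""
    a = list(a)
    shifts = 0
    for i in range(1, len(a)):
        item, j = a[i], i
        while j > 0 and a[j - 1] > item:
            a[j] = a[j - 1]          # shift one position to the right
            shifts += 1
            j -= 1
        a[j] = item
    return a, shifts


def shell_sort_shifts(a):
    """Shell sort with halving gaps (n//2, n//4, ..., 1) on a copy;
    returns (sorted_list, number_of_shifts)."""
    a = list(a)
    shifts = 0
    gap = len(a) // 2
    while gap > 0:
        for i in range(gap, len(a)):
            item, j = a[i], i
            while j >= gap and a[j - gap] > item:
                a[j] = a[j - gap]    # shift one gap to the right
                shifts += 1
                j -= gap
            a[j] = item
        gap //= 2
    return a, shifts


data = list(range(100, 0, -1))          # reversed: worst case for insertion sort
print(insertion_sort_shifts(data)[1])   # 4950
print(shell_sort_shifts(data)[1])
```

On a reversed list of 100 elements insertion sort makes 100 · 99 / 2 = 4950 shifts, while Shell Sort makes far fewer because its early large-gap passes carry elements most of the way to their final positions.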

The algorithm goes through several passes to function:

N-Sorting: In each pass, Shell Sort sorts the “sub-lists” of elements that lie a fixed “interval” (or “gap”) apart. With an interval of 5, it sorts the entries at indices (0, 5, 10, …), (1, 6, 11, …), and so on. Sorting these widely spaced elements first reduces the number of elements that must be moved in later passes with smaller gaps.

Diminishing Gaps: The defining feature of Shell Sort is that the interval (gap) shrinks with each successive pass. When it finally reaches 1, the algorithm performs an ordinary insertion sort. By then the array is already “mostly” sorted, so this last pass is fast, and insertion sort’s main weakness (making many copies to move elements that are far from their final positions) is largely avoided.
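The two ideas map directly onto code: `h_sort` performs one N-sorting pass with gap h (sorting the sub-lists at indices r, r + h, r + 2h, …), and `shell_sort` applies it with shrinking gaps. Both names and the default gap sequence (5, 3, 1) are illustrative assumptions; any decreasing sequence ending in 1 gives a correct result.

```python
def h_sort(a, h):
    """Gapped insertion sort: sorts every sub-list a[r], a[r+h], a[r+2h], ...
    in place (one N-sorting pass with interval h)."""
    for i in range(h, len(a)):
        item, j = a[i], i
        while j >= h and a[j - h] > item:
            a[j] = a[j - h]          # move the larger element one gap right
            j -= h
        a[j] = item


def shell_sort(a, gaps=(5, 3, 1)):
    """Shell sort as a series of h-sorting passes with shrinking gaps.

    The gap sequence must end in 1 so the final pass is an ordinary
    insertion sort; (5, 3, 1) is just an example sequence.
    """
    for h in gaps:
        h_sort(a, h)


data = [35, 33, 42, 10, 14, 19, 27, 44, 26, 31]
h_sort(data, 5)
print(data)  # [19, 27, 42, 10, 14, 35, 33, 44, 26, 31]
shell_sort(data, gaps=(3, 1))
print(data)  # [10, 14, 19, 26, 27, 31, 33, 35, 42, 44]
```

After the gap-5 pass, each pair of entries five positions apart is in order, but the list as a whole is not yet sorted; the final gap-1 pass finishes the job.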

Running Time Analysis

The choice of “interval sequence” for the shrinking gaps has a significant impact on Shell Sort’s efficiency.

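- The running time depends on the interval sequence: Shell’s original halving sequence (n/2, n/4, …, 1) has an O(n²) worst case, Knuth’s sequence 1, 4, 13, 40, … gives O(n^(3/2)), and Pratt’s sequence of numbers of the form 2^p · 3^q gives O(n log² n).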

- Choosing a good interval sequence is key to performance, and the best choice is still an active research topic.
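The sketch below, with hypothetical function names, makes the gap sequence a parameter and generates two well-known sequences: Knuth’s 1, 4, 13, 40, … (each term is 3h + 1) and Pratt’s numbers of the form 2^p · 3^q. Any decreasing sequence ending in 1 yields a correct sort; only the running time changes.

```python
def knuth_gaps(n):
    """Knuth's sequence 1, 4, 13, 40, ... (h = 3h + 1) below n, largest first."""
    gaps, h = [], 1
    while h < n:
        gaps.append(h)
        h = 3 * h + 1
    return gaps[::-1] or [1]


def pratt_gaps(n):
    """Pratt's sequence: all numbers 2**p * 3**q below n, largest first."""
    gaps = []
    p2 = 1
    while p2 < n:
        g = p2
        while g < n:
            gaps.append(g)
            g *= 3
        p2 *= 2
    return sorted(gaps, reverse=True) or [1]


def shell_sort(a, gaps):
    """In-place Shell sort using a decreasing gap sequence that ends in 1."""
    for h in gaps:
        for i in range(h, len(a)):
            item, j = a[i], i
            while j >= h and a[j - h] > item:
                a[j] = a[j - h]
                j -= h
            a[j] = item


print(knuth_gaps(100))  # [40, 13, 4, 1]
print(pratt_gaps(20))   # [18, 16, 12, 9, 8, 6, 4, 3, 2, 1]
data = [9, 4, 7, 1, 8, 2, 6, 3, 5, 0]
shell_sort(data, knuth_gaps(len(data)))
print(data)             # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```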

The source refers to “Python Code for the Shellsort” and a “Shell Sort class,” but the excerpts do not provide executable Python code for them. In short, Shell Sort improves on insertion sort by comparing elements that lie at varying distances apart.