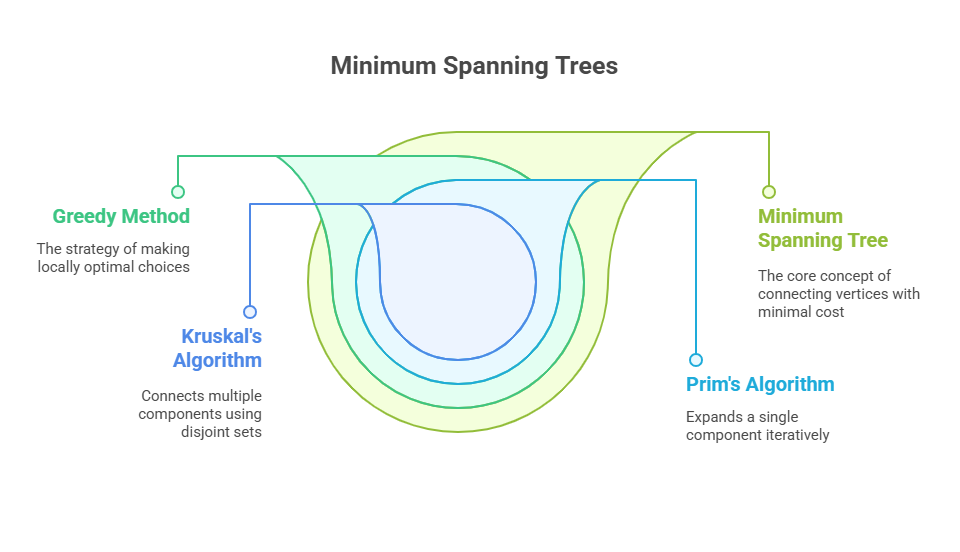

Minimum Spanning Trees

An essential idea in graph theory is the study of Minimum Spanning Trees (MSTs), which are useful for resolving issues where the objective is to connect a collection of points with the least amount of “weight” or “cost” possible. A tree that joins all of the vertices of a connected, undirected, and weighted graph in such a way as to minimise the sum of the weights of its edges is called an Minimum Spanning Trees. The goal of creating communication networks, for example, is to connect all nodes (such computers or switching centres) with the least length of cable possible. This challenge comes up in a variety of real-world situations.

The Greedy Method and MSTs

The greedy method is used in the classic solutions to the Minimum Spanning Trees issue, such as Prim’s and Kruskal’s algorithms. This design pattern selects an item to add to an expanding collection repeatedly by minimising a certain cost function. A “crucial fact” regarding MSTs underpins the accuracy of these algorithms: if a weighted connected graph is supplied, and its vertices are divided into two disjoint nonempty sets (V1 and V2), then any edge with the smallest weight that connects a vertex in V1 to one in V2 must be a minimal spanning tree.

Prim’s Algorithm

Prim’s Algorithm greedily finds a linked, weighted, undirected graph’s Minimum Spanning Tree (MST). From a single vertex, it creates the MST by continually selecting the smallest edge that connects a tree vertex to an outer vertex. The algorithm tracks edges with the fewest weights for the Minimum Spanning Trees in a priority queue (or min-heap). At each step, the queue’s shortest edge is removed and the related vertex added to the tree. Iterate until all vertices are in the Minimum Spanning Trees . Prim’s Algorithm is effective for dense networks, with a priority queue time complexity of O(ElogV), where E is the number of edges and V is the number of vertices. The MST reduces edge weight without cycles.

Concept and Mechanism

- It starts by choosing an arbitrary initial vertex (s) and initialising a “cloud” (C) that contains only s.

- Outside the cloud, a label D[v] is kept for each other vertex v. This label holds the weight of the smallest observed edge connecting any vertex already in cloud C to v.

- You can think of these D[v] labels as keys in a priority queue (Q). The algorithm takes the vertex u with the lowest D[u] value from Q in each iteration.

- After that, the vertex u is added to cloud C, and the edge that joined u and cloud C is added to the MST.

- In the algorithm, the edges incident to u are relaxed once u is added to C. D[v] is updated in the priority queue for each neighbour v of u that is still outside C if the edge (u,v) offers a shorter connection to the cloud than D[v] currently shows.

- The procedure is repeated until the spanning tree contains every vertex. Prim’s technique makes that a valid MST edge is always inserted by automatically selecting the smallest-weight edge that connects to the expanding tree.

Efficiency: The priority queue implementation affects how long Prim’s algorithm takes to run. For linked graphs (where n is the number of vertices and m is the number of edges), the algorithm’s time complexity is O((n + m) logn), which reduces to O(m logn) when an adaptive heap-based priority queue is employed. Alternatively, an O(n^2) running time is obtained by utilising an unsorted list for the priority queue.

Python Code Example (Conceptual):

MST Prim Jarnik(g) has a Python implementation. Usually, a list tree would be created to gather the MST edges, and a dictionary d would be initialised to hold the current minimum distances to the tree. For effective updates, a pqlocator dictionary is utilised to map vertices to their priority queue entries in conjunction with Adaptable Heap Priority Queue. In the core logic, vertices are added to the priority queue with starting distances of zero for the start vertex and infinite for the others. Then, in a loop, the minimum is extracted, added to the cloud, and the distances of the neighbours are updated in the priority queue.

Example:

import heapq

def prims_mst(graph, start):

mst = []

visited = set()

min_heap = [(0, start, None)]

while min_heap:

weight, vertex, parent = heapq.heappop(min_heap)

if vertex in visited:

continue

visited.add(vertex)

if parent is not None:

mst.append((parent, vertex, weight))

for neighbor, edge_weight in graph[vertex]:

if neighbor not in visited:

heapq.heappush(min_heap, (edge_weight, neighbor, vertex))

return mst

# Graph representation: adjacency list

graph = {

0: [(1, 4), (2, 3)],

1: [(0, 4), (2, 1), (3, 2)],

2: [(0, 3), (1, 1), (3, 4)],

3: [(1, 2), (2, 4)]

}

mst = prims_mst(graph, 0)

print("MST (Prim's):", mst)Output:

MST (Prim's): [(0, 2, 3), (2, 1, 1), (1, 3, 2)]Kruskal’s Algorithm

Kruskal’s Algorithm greedily finds a linked, weighted, undirected graph’s Minimum Spanning Tree (MST). It starts by sorting edges by weight in ascending order. To avoid cycles, the algorithm iteratively adds the smallest edge to the MST. It tracks graph connections using a disjoint-set (union-find) data structure. Each edge is inserted only if it merges two components into one. Continue this method until the MST has −1 edges, where V is the number of vertices. Kruskal’s Algorithm is particularly useful for sparse networks, with a temporal complexity of O(ElogE) (where 𝐸 is the number of edges). It is commonly used in network optimisation to construct cost-effective roads, pipelines, and communication networks.

Concept and Mechanism

- Every vertex in the graph is first handled as a distinct, simple cluster.

- Edges in the graph have non-decreasing weights.

- The algorithm iterates through the sorted edges, considering each one individually.

- It checks if u and v are in different clusters for the edge e = (u,v). This crucial check is normally done with a union-find data structure.

- The two clusters containing u and v are combined into a single cluster if they are in separate clusters, and the edge e is added to the collection of MST edges.

- Since adding e would start a cycle if u and v are already in the same cluster, the edge is thrown away.

- Since the addition of n-1 edges to the MST (where n is the number of vertices) indicates the formation of a spanning tree, the algorithm stops. Utilising the “crucial fact” about MSTs, Kruskal’s algorithm consistently chooses the minimum-weight edge that joins two previously disjointed components, guaranteeing optimality.

Efficiency: Since m <= n^2, logm <= log(n^2) = 2logn, the initial sorting of all m edges requires O(m logm) time, which is the main factor influencing the running time of Kruskal’s algorithm. A sequence of make group, union, and find operations takes about n)* time, where k is the number of operations and log* n is the iterated logarithm, which grows extremely slowly. This helps to explain why cluster management using an efficient union-find data structure adds to the overall time complexity. Kruskal’s algorithm’s overall running time is therefore commonly expressed as O(m logn).

Python Code Example (Conceptual):

It include a Python implementation of MST Kruskal(g). In order to manage the clusters, this function would initialise a Partition object (a union-find structure), a Heap Priority Queue pq to hold graph edges arranged by their weights, and a list tree for the MST edges. For every vertex, a group would first be created in the Partition. After that, it repeatedly goes over the edges that were taken out of the priority queue. It finds the endpoint clusters for each edge using forest.find(). If they vary, the clusters are combined using forest.union() and the edge is appended to the tree.

Example:

class UnionFind:

def __init__(self, n):

self.parent = list(range(n))

self.rank = [0] * n

def find(self, node):

if self.parent[node] != node:

self.parent[node] = self.find(self.parent[node])

return self.parent[node]

def union(self, node1, node2):

root1, root2 = self.find(node1), self.find(node2)

if root1 != root2:

if self.rank[root1] > self.rank[root2]:

self.parent[root2] = root1

elif self.rank[root1] < self.rank[root2]:

self.parent[root1] = root2

else:

self.parent[root2] = root1

self.rank[root1] += 1

def kruskals_mst(edges, num_vertices):

uf = UnionFind(num_vertices)

mst = []

edges.sort(key=lambda x: x[2])

for u, v, weight in edges:

if uf.find(u) != uf.find(v):

uf.union(u, v)

mst.append((u, v, weight))

return mst

# Graph representation: edge list

edges = [

(0, 1, 4),

(0, 2, 3),

(1, 2, 1),

(1, 3, 2),

(2, 3, 4)

]

num_vertices = 4

mst = kruskals_mst(edges, num_vertices)

print("MST (Kruskal's):", mst)Output:

MST (Kruskal's): [(1, 2, 1), (1, 3, 2), (0, 2, 3)]Conclusion

In conclusion, greedy concepts are used by both Prim’s and Kruskal’s algorithms to efficiently build Minimum Spanning Trees. However, their methods for expanding the tree vary; Prim’s expands a single component, whereas Kruskal’s connects several components.