A bidirectional long short-term memory (BiLSTM) network is an enhanced type of neural network designed to capture context in sequential input more effectively than its unidirectional predecessors.

What is BiLSTM?

A Bidirectional Long Short-Term Memory (BiLSTM) network is an extension of the conventional Long Short-Term Memory (LSTM) network. Whereas a traditional LSTM processes a sequence in only one direction, a BiLSTM lets information flow both forward and backward. This allows it to extract additional contextual information from the data.

Because they are built from LSTM units, BiLSTMs mitigate the vanishing-gradient problem and can capture long-term dependencies in text and other sequences. Processing the sequence in both the forward and backward directions additionally lets them draw on information from both past and future timesteps.

History

BiLSTMs are based on Long Short-Term Memory (LSTM) networks, which Hochreiter and Schmidhuber first presented in 1997. LSTMs were developed as an alternative to conventional Recurrent Neural Networks (RNNs) to address the difficulty of learning long-term dependencies caused by vanishing and exploding gradients. BiLSTMs are a form of Bidirectional Recurrent Neural Network, an idea introduced in the same year in Schuster and Paliwal’s 1997 paper “Bidirectional recurrent neural networks.”

The 2005 conference paper “Bidirectional LSTM Networks for Improved Phoneme Classification and Recognition” by Alex Graves, Santiago Fernández, and Jürgen Schmidhuber is a noteworthy publication that highlights the use and efficacy of BiLSTMs. The study showed that in framewise phoneme classification, bidirectional LSTM performed better than both unidirectional LSTM and traditional RNNs.

Architecture

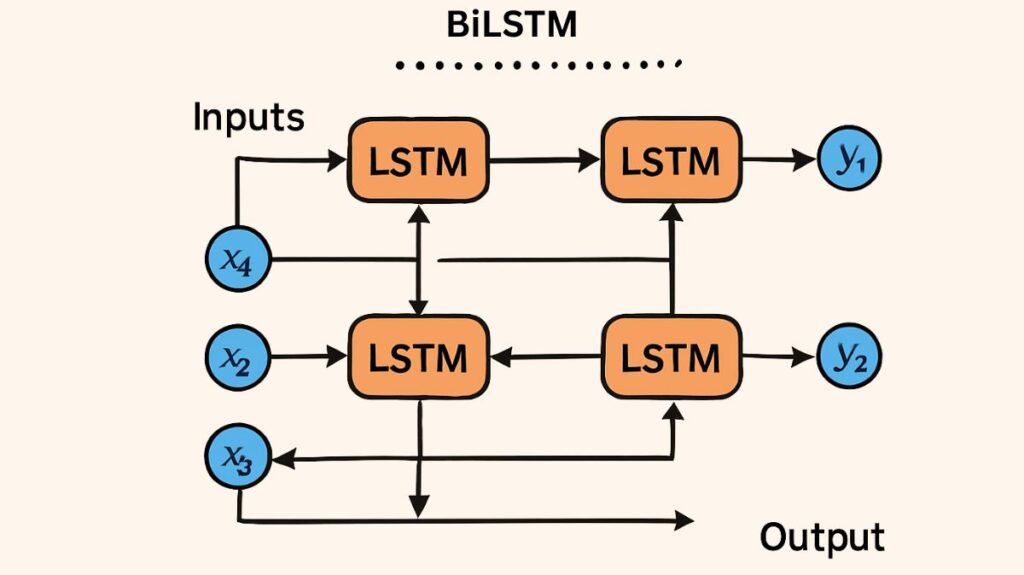

Two distinct LSTM layers usually make up a bidirectional LSTM (BiLSTM):

- Forward LSTM: This layer handles the input sequence in the standard chronological order, from beginning to end.

- Backward LSTM: In this layer, the input sequence is processed in reverse chronological order, from end to start.

The outputs of the forward and backward LSTM layers are then combined to produce the final output. Common combination methods include concatenation, summation, and element-wise multiplication. For example, the final probability vector at time step t can be obtained by summing the probability vectors produced by the forward and backward LSTMs. The figure above shows the forward and backward LSTM units; their combined outputs produce the output token for each input token.
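As a rough illustration of the combination step, the sketch below merges the forward and backward hidden states for a single time step. It is a minimal pure-Python sketch, not any library's implementation; the mode names `concat`, `sum`, `mul`, and `ave` are borrowed from the `merge_mode` options of the Keras `Bidirectional` wrapper, and the example vectors are made up.

```python
from typing import List

Vector = List[float]


def merge(forward: Vector, backward: Vector, mode: str = "concat") -> Vector:
    """Combine the forward and backward hidden states for one time step."""
    if len(forward) != len(backward):
        raise ValueError("forward and backward states must have the same size")
    if mode == "concat":
        # Output size doubles: hidden units from both directions side by side.
        return forward + backward
    if mode == "sum":
        return [f + b for f, b in zip(forward, backward)]
    if mode == "mul":
        # Element-wise (Hadamard) product.
        return [f * b for f, b in zip(forward, backward)]
    if mode == "ave":
        return [(f + b) / 2 for f, b in zip(forward, backward)]
    raise ValueError(f"unknown merge mode: {mode!r}")


if __name__ == "__main__":
    fwd = [1.0, 2.0]  # hypothetical forward hidden state
    bwd = [3.0, 4.0]  # hypothetical backward hidden state
    for mode in ("concat", "sum", "mul", "ave"):
        print(mode, merge(fwd, bwd, mode))
```

Only `concat` keeps the two directions' features separate, and it is the mode that doubles the output size; `sum`, `mul`, and `ave` return a vector the size of a single direction's state.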

How it Works

BiLSTM processes the input sequence in two directions at once. The forward LSTM reads the sequence from start to end, building a representation of each element’s past context. In parallel, the backward LSTM reads the same sequence from end to start, capturing each element’s future context.

By combining the information from both directions, a BiLSTM builds a thorough understanding of the context around every point in the sequence. This is especially helpful for tasks where the meaning of a word or data point depends on what comes both before and after it. For instance, by examining the words that occur before and after “Apple” in a sentence, a BiLSTM can distinguish between “Apple” the company and “apple” the fruit. The underlying LSTM units learn and remember long-term dependencies throughout the sequence by using gates (forget, input/update, and output) to selectively retain or discard information.
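The two-pass idea can be sketched in a few lines of pure Python. This is a toy illustration under simplifying assumptions: the hidden state is a single number rather than a vector, and the weights in the hypothetical `W_FWD` and `W_BWD` tables are arbitrary values rather than trained parameters. Each direction has its own weights, the backward outputs are reversed again so both lists line up with the original time steps, and each step pairs a forward (past) state with a backward (future) state.

```python
import math
from typing import Dict, List, Tuple

# Per-gate scalar parameters: (input weight, recurrent weight, bias).
GateParams = Tuple[float, float, float]
Weights = Dict[str, GateParams]

# Hypothetical, untrained weights for illustration only.
W_FWD: Weights = {"f": (0.5, 0.1, 0.0), "i": (0.6, -0.2, 0.0),
                  "o": (0.4, 0.3, 0.0), "g": (0.9, 0.2, 0.0)}
W_BWD: Weights = {"f": (0.3, 0.2, 0.1), "i": (0.7, 0.1, 0.0),
                  "o": (0.5, -0.1, 0.0), "g": (0.8, -0.3, 0.0)}


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x: float, h_prev: float, c_prev: float, w: Weights) -> Tuple[float, float]:
    """One scalar LSTM step: gates decide what to forget, write, and expose."""
    def pre(gate: str) -> float:
        wx, wh, b = w[gate]
        return wx * x + wh * h_prev + b

    f = sigmoid(pre("f"))    # forget gate: how much old cell state to keep
    i = sigmoid(pre("i"))    # input/update gate: how much new content to write
    o = sigmoid(pre("o"))    # output gate: how much of the cell to expose
    g = math.tanh(pre("g"))  # candidate cell content
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


def run_lstm(xs: List[float], w: Weights) -> List[float]:
    """Run one direction over the sequence, returning the hidden state per step."""
    h, c = 0.0, 0.0
    states = []
    for x in xs:
        h, c = lstm_step(x, h, c, w)
        states.append(h)
    return states


def bilstm(xs: List[float]) -> List[Tuple[float, float]]:
    forward = run_lstm(xs, W_FWD)               # reads start -> end (past context)
    backward = run_lstm(xs[::-1], W_BWD)[::-1]  # reads end -> start, then realigned
    return list(zip(forward, backward))


if __name__ == "__main__":
    for t, (h_f, h_b) in enumerate(bilstm([0.5, -1.0, 2.0])):
        print(f"t={t}: forward={h_f:+.3f} backward={h_b:+.3f}")
```

Note that the forward state at the first step depends only on the first input, while the backward state at the first step already reflects every later input; this is the "future context" a unidirectional LSTM lacks.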

Benefits

BiLSTMs have a number of noteworthy benefits.

Contextual Understanding: They give the model access to both past and future context, allowing a fuller interpretation of the input data and often more meaningful results.

Increased Accuracy: BiLSTMs often outperform unidirectional LSTMs on tasks where context from both directions is crucial. In phoneme classification, for instance, bidirectional LSTM scored better than both unidirectional LSTM and traditional Recurrent Neural Networks (RNNs), and for phoneme recognition a hybrid BLSTM-HMM system outperformed both a unidirectional LSTM-HMM and a conventional HMM.

Long-Term Dependency Handling: Like LSTMs, BiLSTMs mitigate the vanishing gradient problem that affects simpler RNNs, which lets them preserve long-range connections and capture relationships between values across long sequences.

Comprehensive Temporal Information: By combining data from the past and the future, they are able to extract complete temporal information at any given time point.

Disadvantages

BiLSTMs have disadvantages in addition to their benefits.

- Increased Complexity: The dual sequence processing makes the architecture more intricate.

- Higher Computational Cost: Because BiLSTMs process sequences both forward and backward, they need more memory and processing power, and they also train more slowly than unidirectional LSTMs. For example, in a study on the classification of arrhythmia data, a Bidirectional LSTM model required substantially more training time per epoch (1071 s) than Vanilla LSTM (245-247 s) and Stacked LSTM (504 s).

- Unsuitable for Real-Time Applications: The backward pass needs the whole sequence before it can start, and together with their slower processing and greater complexity, this can make BiLSTMs unsuitable for real-time applications. For example, BiLSTM-based language models may have to analyse entire sentences before producing output, which limits their use in real-time automatic speech recognition (ASR).

- Feature Dimension Increase: When the outputs of both directions are concatenated, a BiLSTM produces twice as many feature dimensions as its hidden layer size, but not all of these doubled features are necessarily useful. This can make it difficult to extract the most useful information with a BiLSTM network alone.
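A back-of-the-envelope parameter count makes the extra cost concrete. The sketch below assumes the standard LSTM cell with four gate blocks (input, forget, candidate, output), each with input weights, recurrent weights, and a single bias vector, which is the convention Keras uses; PyTorch's `nn.LSTM` keeps two bias vectors per gate, so its totals are slightly higher. The layer sizes in the example are arbitrary.

```python
def lstm_params(input_dim: int, hidden: int) -> int:
    """Trainable parameters of one unidirectional LSTM layer (single-bias convention)."""
    per_gate = hidden * input_dim + hidden * hidden + hidden  # W, U, b
    return 4 * per_gate  # input, forget, candidate, and output blocks


def bilstm_params(input_dim: int, hidden: int) -> int:
    """A BiLSTM layer holds two independent LSTMs, one per direction."""
    return 2 * lstm_params(input_dim, hidden)


if __name__ == "__main__":
    d, h = 100, 128  # hypothetical input size and hidden units
    print(lstm_params(d, h))    # 117248
    print(bilstm_params(d, h))  # 234496
```

Each direction also runs over every time step, so the per-sequence arithmetic roughly doubles too; actual wall-clock differences depend on the model configuration and implementation.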

Features

Bidirectional LSTMs’ salient characteristics include:

- Dual-Directional Processing: Sequential data is processed both forward and backward.

- Contextual Capture: The network can access and use contextual information from both the past and the future within a sequence.

- Long-Term Memory: Inherits from LSTMs the ability to manage and retain long-term dependencies, mitigating the vanishing gradient problem.

- Gating Mechanisms: Forget, input/update, and output gates regulate the flow of information through the network’s memory cells.

Types

Rather than coming in different “types,” BiLSTM is itself an extension of the conventional LSTM network. It is, however, frequently discussed in relation to, or in combination with, other neural network architectures:

- Compared to Unidirectional LSTM: BiLSTM’s dual-directional processing sets it apart from conventional LSTM.

- BiLSTMs are commonly used as components of hybrid systems, including:

  - BLSTM-HMM (Hidden Markov Model) systems: Used for phoneme recognition.

  - Convolutional Neural Network-BiLSTM (CNN-BiLSTM) models: Used for tasks such as malware detection and ECG signal generation. In this combination, CNNs learn representations of the input data and BiLSTMs capture its sequential dependencies.

Challenges

The main challenges of BiLSTM networks stem from their complexity and computational requirements:

- Computational Overhead: As noted under Disadvantages, dual processing increases training time and memory requirements, which is a problem for real-time applications and large datasets.

- Overfitting: Like other complex neural networks, BiLSTMs are prone to overfitting if not properly regularised. Dropout layers are often built into the architecture to counter this, and further measures such as L2 regularisation, early stopping, or model simplification may be needed if overfitting still occurs.

- Feature Redundancy: Because the feature dimension is doubled and not all extracted features are equally useful, it can be difficult to extract the most important information with a BiLSTM network alone.
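Early stopping, one of the overfitting remedies listed above, amounts to watching the validation loss and halting once it stops improving. The sketch below is a minimal, framework-free version of that patience logic, similar in spirit to callbacks such as Keras's `EarlyStopping` but not its implementation; the function name `early_stopping` and the loss values in the example are illustrative assumptions.

```python
from typing import List, Tuple


def early_stopping(val_losses: List[float], patience: int = 3,
                   min_delta: float = 0.0) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once `patience` consecutive epochs fail to improve on the
    best loss by more than `min_delta`; the weights from `best_epoch` are kept.
    """
    if not val_losses:
        raise ValueError("need at least one validation loss")
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1  # patience never ran out


if __name__ == "__main__":
    losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.61, 0.58]  # invented values
    print(early_stopping(losses, patience=3))  # (2, 5)
```

In this example training halts at epoch 5 and keeps the epoch-2 weights, even though epoch 6 would have improved slightly; a larger `patience` trades extra training time for a lower chance of stopping too early.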

Applications

There are several uses for BiLSTMs, especially in domains where comprehending context across a sequence is essential:

Natural Language Processing (NLP)

Because BiLSTMs can use both past and future context, they perform especially well on NLP tasks. Specific uses include:

- Sentiment Analysis: Determining the tone of written material, such as movie reviews.

- Named Entity Recognition: Recognizing and categorising textual named entities.

- Machine Translation: Translating text from one language into another.

- Sentence Classification: Assigning sentences to categories based on their content.

- Emotion Detection: Used in single-task BiLSTM-based systems.

- Chinese Clinical Named Entity Recognition: BiLSTMs extract sentence context features from clinical texts.

- Speech Recognition: BiLSTMs have been shown to improve phoneme classification and recognition.

- Bioinformatics: Applied to tasks such as predicting the secondary structure of proteins.

- Handwriting Recognition: BiLSTMs are also used to recognise handwritten text.

Medical Signal Analysis

- Arrhythmia Classification: Models for categorising arrhythmia signals from ECG data rely on heartbeat detection. BiLSTM models can perform this task with high accuracy (e.g., 99.00% overall accuracy), although sometimes at a higher computational cost than stacked LSTMs.

- ECG Signal Synthesis: A BiLSTM-based generator has been proposed for use in Generative Adversarial Networks (GANs); the ECG signals it produces show a high degree of authenticity and morphological similarity to real data.

- Health Status Prediction: Predicting a patient’s health status from medical records.

- Malware Detection: Used in CNN-BiLSTM and other models for reliable malware detection using control flow traces.

- Building Occupancy Detection: A CDBLSTM (convolutional deep bidirectional LSTM) approach has been proposed for estimating building occupancy from environmental sensor data.

For deep learning problems involving sequential data, BiLSTMs remain a potent tool, particularly when successful predictions require rich information from both directions.

Difference Between LSTM and BiLSTM

LSTM

Long Short-Term Memory (LSTM) networks are a special type of RNN designed to address the vanishing gradient problem, which prevents conventional RNNs from learning long-term dependencies. LSTMs do this with a memory cell and three gates: input, forget, and output. These gates selectively regulate the flow of information into and out of the cell state, allowing the network to keep or discard information across long sequences.
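For reference, the gate mechanism is commonly written as follows. This is the widely used variant with a forget gate, and the notation is a standard convention rather than something defined in this article: $x_t$ is the input at step $t$, $h_{t-1}$ and $c_{t-1}$ are the previous hidden and cell states, $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, and the $W$, $U$, and $b$ terms are learned weights and biases.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{candidate cell state} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The forget gate $f_t$ scales the old cell state, the input gate $i_t$ scales the new candidate content, and the output gate $o_t$ controls how much of the updated cell state becomes the hidden state.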

BiLSTM

Bidirectional Long Short-Term Memory (BiLSTM) networks extend traditional LSTMs. Whereas traditional LSTMs process sequences in only one direction, BiLSTMs let information flow both forward and backward. This dual processing gives BiLSTMs access to both past and future context, allowing them to capture more contextual information in tasks that require a thorough understanding of the whole sequence.
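Written out, a BiLSTM runs two LSTMs with separate parameters over the input $x_1, \dots, x_T$: one steps forward from $t-1$, the other steps backward from $t+1$. With concatenation as the combination step, the output at each position joins both hidden states, so if each direction has hidden size $H$, the output has size $2H$. This is one standard way of writing it (cell states omitted for brevity), not the only formulation.

```latex
\begin{aligned}
\overrightarrow{h}_t &= \mathrm{LSTM}_{\mathrm{fwd}}\big(x_t, \overrightarrow{h}_{t-1}\big), && t = 1, \dots, T \\
\overleftarrow{h}_t &= \mathrm{LSTM}_{\mathrm{bwd}}\big(x_t, \overleftarrow{h}_{t+1}\big), && t = T, \dots, 1 \\
y_t &= \big[\overrightarrow{h}_t \, ; \, \overleftarrow{h}_t\big] \in \mathbb{R}^{2H}
\end{aligned}
```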