This page gives an overview of sequence labelling and sequence classification, and explains how the two differ.

What is sequence labelling in NLP?

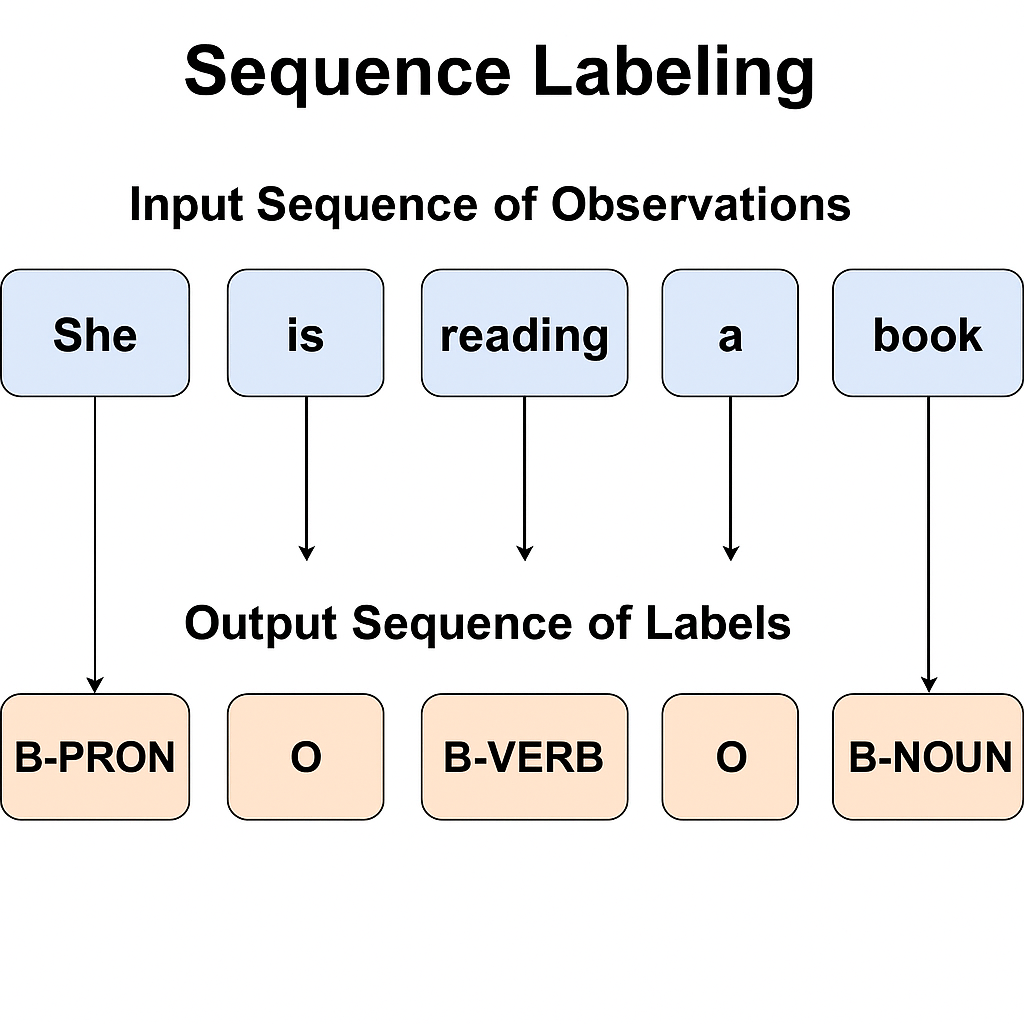

Sequence labelling is a fundamental task in natural language processing that assigns a label to each element of a sequence. The aim is to map an input sequence of observations (for example, the words of a sentence) to an output sequence of labels of the same length. Each element receives a label drawn from a small, fixed set.

Many NLP applications depend on sequence labelling, including:

Part-of-speech (POS) tagging: Assigning every word in a sentence a grammatical category (such as noun, verb, or adjective). This is a classic sequence labelling problem and is often the first stage of an NLP pipeline, providing features that are useful for downstream tasks such as relation extraction or parsing.

Named Entity Recognition (NER): Recognizing and categorizing named entities in text, such as people, places, or organizations.

Chunking: Segmenting and labelling multi-token sequences, such as noun phrases (NPs) or verb phrases (VPs). Non-recursive chunking can be framed as a word classification problem.

Tokenization: Identifying word boundaries by giving characters labels (such as START or NONSTART).

Morphological segmentation: Splitting words into their morphemes.

Code switching: Handling text that switches between languages, for example by labelling each token with its language.

Dialogue acts: Assigning labels to conversation utterances based on the speaker’s purpose.

Scope identification: Labelling word tokens to mark the start, middle, or end of a cue, focus, or scope span, as used in tasks such as negation detection.

Semantic Role Labelling (SRL): Recognizing and labelling spans (such as ARG0 and ARG1) that correspond to semantic roles.

Segmentation tasks: Identifying topic and sentence boundaries.
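To make the tokenization item above concrete, here is a minimal sketch of word boundaries expressed as per-character labels. It assumes the START and NONSTART label names mentioned above; the helper names `label_characters` and `words_from_labels` are hypothetical. The labels here are derived from whitespace only to produce example data. In practice a model would predict them for text that has no explicit boundaries.

```python
def label_characters(text):
    """Label each non-space character START if it begins a word, else NONSTART."""
    labelled = []
    prev_is_space = True  # the first character always starts a word
    for ch in text:
        if ch.isspace():
            prev_is_space = True
            continue
        labelled.append((ch, "START" if prev_is_space else "NONSTART"))
        prev_is_space = False
    return labelled


def words_from_labels(labelled):
    """Rebuild words from (character, label) pairs."""
    words = []
    for ch, tag in labelled:
        if tag == "START" or not words:
            words.append(ch)   # open a new word
        else:
            words[-1] += ch    # extend the current word
    return words


labelled = label_characters("the cat sat")
print(labelled[:4])
print(words_from_labels(labelled))  # ['the', 'cat', 'sat']
```

The output sequence has exactly one label per input character, which is what makes this a sequence labelling problem rather than a classification of the whole string.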

Notably, sequence labelling is often used for problems that are fundamentally segmentation problems, that is, problems of placing labelled brackets over non-overlapping segments of a sequence. Encoding schemes such as BIO tagging are typically used to turn these segmentation tasks into word-by-word sequence labelling problems. In BIO tagging:

- A token labelled “B” (Begin) starts a span of interest for a particular entity or chunk type.

- A token that is inside (continues) a span of interest is labelled “I” (Inside).

- A token that is outside any span of interest is labelled “O” (Outside).

For tasks like chunking or NER, each class type has its own ‘B’ and ‘I’ tags (e.g., B-PER, I-PER for a Person entity). With this scheme, span information can be represented deterministically as a tag sequence. There are several variants. IO tagging merges the B and I prefixes, so it is coarser and cannot separate adjacent spans of the same type. BIOES (Begin, Inside, Outside, End, Single) and BILOU (Begin, Inside, Last, Outside, Unit) record more specific span information.
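The BIO scheme described above can be sketched as a pair of conversion functions. This is an illustrative sketch, not a reference implementation: the function names `spans_to_bio` and `bio_to_spans`, the `(start, end, type)` span format with an exclusive end index, and the example sentence are all assumptions made here.

```python
def spans_to_bio(n_tokens, spans):
    """Encode non-overlapping (start, end_exclusive, type) spans as BIO tags."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        tags[start] = f"B-{label}"
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"
    return tags


def bio_to_spans(tags):
    """Decode BIO tags back into (start, end_exclusive, type) spans.

    An I- tag that does not continue an open span of the same type is ignored.
    """
    spans = []
    start, label = None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel closes a trailing span
        continues = tag.startswith("I-") and label == tag[2:]
        if start is not None and not continues:
            spans.append((start, i, label))
            start, label = None, None
        if tag.startswith("B-"):
            start, label = i, tag[2:]
    return spans


tokens = ["Ada", "Lovelace", "visited", "London"]
spans = [(0, 2, "PER"), (3, 4, "LOC")]
tags = spans_to_bio(len(tokens), spans)
print(tags)                # ['B-PER', 'I-PER', 'O', 'B-LOC']
print(bio_to_spans(tags))  # [(0, 2, 'PER'), (3, 4, 'LOC')]
```

Because the encoding round-trips, a model only has to predict one tag per token and the spans can be recovered afterwards.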

Approaches to solving sequence labelling problems

There are two broad approaches to solving sequence labelling problems:

Sequence labelling as classification: Each token’s label is treated as a separate classification decision. This is straightforward, but each decision is made locally and cannot be revised in light of later evidence.

Sequence labelling as structure prediction: The complete tag sequence is treated as the label to be predicted jointly, which takes the dependencies between tagging decisions into account. This lets a model give lower scores to implausible tag pairs, such as a noun followed by a determiner.

Models

Sequence labelling is done using a variety of models and algorithms:

Hidden Markov Models (HMMs): A traditional generative model for tagging sequences. An HMM is defined by observation likelihoods (the probability of a word given a tag) and transition probabilities between states (tags). It introduces many of the important ideas in sequence modelling.

Conditional Random Fields (CRFs): These are discriminative sequence models. CRFs explicitly score transitions between labels and allow a large number of features to be included. They have long been a standard method for NER.

Neural Networks: Neural approaches compute a vector representation for each tagging decision.

- Recurrent neural networks (RNNs), especially bidirectional RNNs (biRNNs), are a good fit for sequence tagging. Word embeddings are frequently used as inputs. A simple approach is to score each possible tag from the RNN’s hidden state at that position. RNNs can also be combined with CRFs, as in the LSTM-CRF.

- Convolutional neural networks (CNNs) are also used for sequence labelling, sometimes operating at the character level. Dilated convolution is one technique that has been applied.

- Transformers: These architectures can also perform sequence labelling. A Transformer encoder produces an output vector for every input token, and a classifier (such as a softmax layer) turns each vector into a probability distribution over the possible tags. Models such as BERT are applied to sequence labelling tasks in this way.

Transformation-based tagging: This method starts from an initial label assignment and improves it iteratively, as demonstrated by the Brill tagger.
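The per-token classification step used by the neural models above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the two-dimensional token vectors stand in for encoder or RNN outputs, and the weight matrix, tag set, and function names (`softmax`, `tag_tokens`) are hypothetical values chosen for the example, not taken from any real model.

```python
import math


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def tag_tokens(token_vectors, weights, tags):
    """Apply the same linear layer and softmax to every token vector.

    weights[k] is the weight vector for tags[k].
    Returns one (best_tag, distribution) pair per token.
    """
    results = []
    for vec in token_vectors:
        scores = [sum(w * x for w, x in zip(row, vec)) for row in weights]
        probs = softmax(scores)
        best = max(range(len(tags)), key=probs.__getitem__)
        results.append((tags[best], probs))
    return results


TAGS = ["DET", "NOUN", "VERB"]
# Made-up stand-ins for the encoder outputs of "the dog barks".
token_vectors = [[2.0, 0.0], [0.0, 2.0], [-2.0, -2.0]]
weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

print([tag for tag, _ in tag_tokens(token_vectors, weights, TAGS)])
# ['DET', 'NOUN', 'VERB']
```

Each token is tagged independently here. Combining such scores with a CRF layer, as in the LSTM-CRF mentioned above, adds dependencies between neighbouring tags.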

Sequence labelling algorithms

Sequence labelling algorithms include:

Viterbi algorithm: Finds the single most likely sequence of hidden states (tags) given a sequence of observations. It is used especially with HMMs or when combining local scores, and it operates over a lattice known as a trellis.

Greedy sequence classification: Labels tokens one at a time, typically left to right, committing to each decision before moving on to the next token.

Training for sequence labelling models is usually supervised and requires labelled datasets. Training minimizes a loss function, such as the negative log-likelihood, to learn parameters such as weights or transition scores. Features can include the current word, previous tags, suffixes, gazetteer matches, and even parse features. Unsupervised sequence labelling, which builds models from unannotated text, is also feasible.
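The difference between greedy decoding and the Viterbi algorithm can be seen on a toy HMM. The sketch below is illustrative only: the two tags `A` and `B`, the observations `w1` and `w2`, and every probability are made-up values chosen so that the two decoders disagree. It multiplies raw probabilities for readability; real implementations usually work with log probabilities.

```python
def greedy_decode(obs, tags, start, trans, emit):
    """Pick the locally best tag at each position, left to right."""
    path, prev = [], None
    for word in obs:
        scores = {}
        for tag in tags:
            prior = start[tag] if prev is None else trans[prev][tag]
            scores[tag] = prior * emit[tag][word]
        prev = max(tags, key=scores.get)
        path.append(prev)
    return path


def viterbi_decode(obs, tags, start, trans, emit):
    """Find the single most probable tag sequence with dynamic programming."""
    # best[t][tag]: probability of the best path that ends in `tag` at step t
    best = [{tag: start[tag] * emit[tag][obs[0]] for tag in tags}]
    back = []  # back[t][tag]: previous tag on that best path
    for word in obs[1:]:
        scores, pointers = {}, {}
        for tag in tags:
            prev = max(tags, key=lambda p: best[-1][p] * trans[p][tag])
            scores[tag] = best[-1][prev] * trans[prev][tag] * emit[tag][word]
            pointers[tag] = prev
        best.append(scores)
        back.append(pointers)
    # Follow the back-pointers from the best final tag.
    path = [max(tags, key=best[-1].get)]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


def path_probability(obs, path, start, trans, emit):
    """Joint probability of an observation sequence and a tag path."""
    prob = start[path[0]] * emit[path[0]][obs[0]]
    for i in range(1, len(obs)):
        prob *= trans[path[i - 1]][path[i]] * emit[path[i]][obs[i]]
    return prob


TAGS = ["A", "B"]
START = {"A": 0.6, "B": 0.4}
TRANS = {"A": {"A": 0.45, "B": 0.55}, "B": {"A": 0.1, "B": 0.9}}
EMIT = {"A": {"w1": 0.5, "w2": 0.5}, "B": {"w1": 0.5, "w2": 0.5}}
OBS = ["w1", "w2"]

print(greedy_decode(OBS, TAGS, START, TRANS, EMIT))   # ['A', 'B']
print(viterbi_decode(OBS, TAGS, START, TRANS, EMIT))  # ['B', 'B']
```

Greedy decoding commits to `A` at the first position because it looks best locally, while Viterbi keeps both options in the trellis and finds that starting with `B` leads to a more probable sequence overall.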

Sequence Classification

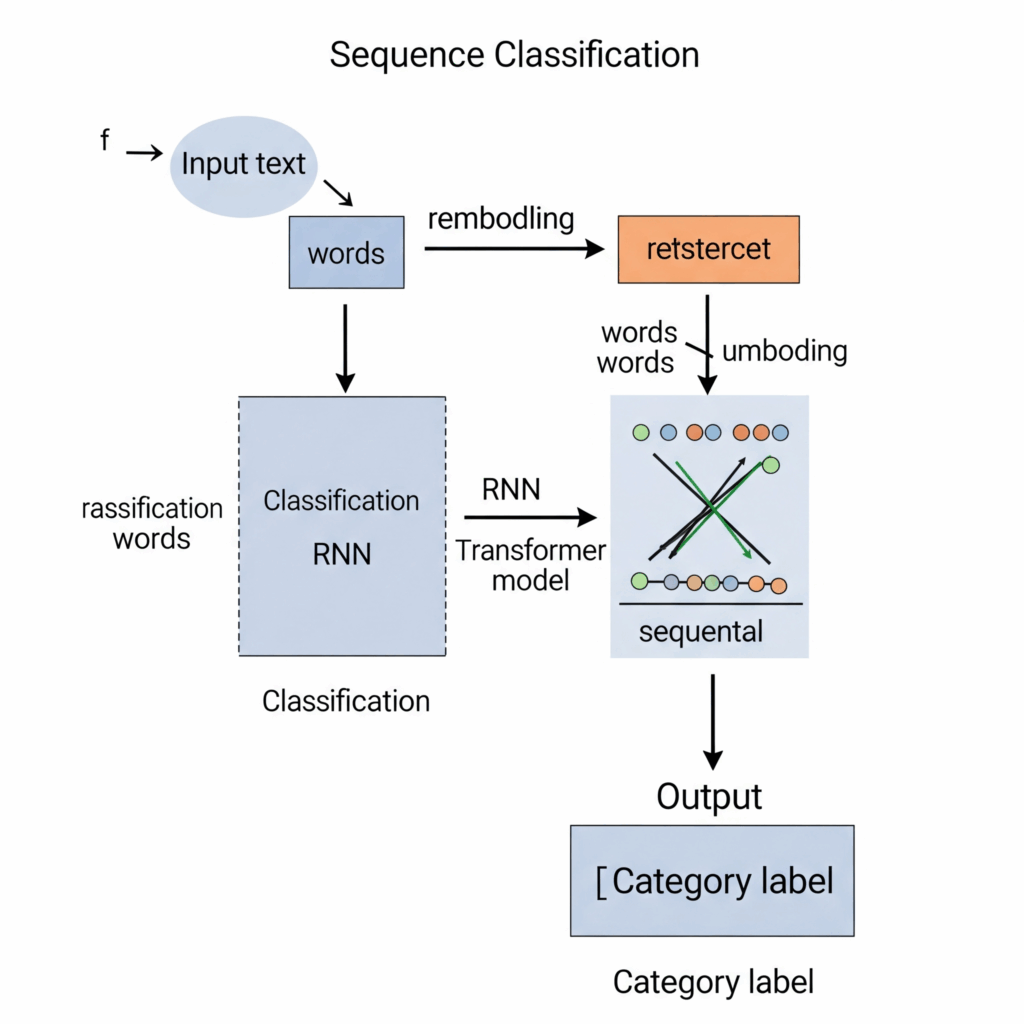

The aim of sequence classification is to assign a single class label to an entire input sequence. In contrast to sequence labelling, the output is one result for the whole sequence rather than a label for each input element. It also differs from simple classification problems, in which each input can be considered on its own, because the elements of the sequence must be taken together.

Sequence classification models, such as those based on RNNs or Transformers, usually ignore the per-token outputs except for one: the output for the final token (or for a special token such as [CLS]). That vector representation is passed to a classification layer, which produces the single output label for the entire sequence.

Sequence classification can be binary (assigning one of two labels) or multi-class (assigning one of more than two labels). The process commonly involves obtaining labelled data, extracting features from the sequence, and training a classifier.
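The pooling step described above can be sketched as follows. This is a minimal illustration, assuming made-up two-dimensional token vectors in place of real RNN or Transformer outputs; the function name `classify_sequence`, the `pool` parameter, the weights, and the label set are hypothetical.

```python
import math


def classify_sequence(token_vectors, weights, labels, pool="last"):
    """Classify a whole sequence from a single pooled token vector.

    pool="last" uses the final token's vector (typical for RNNs);
    pool="first" uses the first position (where a [CLS]-style token sits).
    """
    if pool == "last":
        pooled = token_vectors[-1]
    elif pool == "first":
        pooled = token_vectors[0]
    else:
        raise ValueError(f"unknown pooling strategy: {pool}")

    # Linear layer followed by softmax over the class labels.
    scores = [sum(w * x for w, x in zip(row, pooled)) for row in weights]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    probs = [e / sum(exps) for e in exps]
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs


LABELS = ["negative", "positive"]
# Made-up per-token vectors for a three-token input.
token_vectors = [[0.1, 0.9], [0.5, 0.5], [1.5, -0.5]]
weights = [[-1.0, 1.0], [1.0, -1.0]]

label, probs = classify_sequence(token_vectors, weights, LABELS, pool="last")
print(label)  # positive
```

However many tokens the input has, the function returns exactly one label, which is the defining property of sequence classification.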

Applications of sequence classification

Applications of sequence classification include:

- Sentiment Analysis: Assigning a sentiment label (such as positive, negative, or neutral) to a review or block of text.

- Text Classification (Text Categorization): A general term for assigning a label or category to a written document. Examples include language detection, identifying the topic of a news story, classifying job applications (resume screening), and filtering spam.

- Classifying Pairs of Input Sequences: Tasks that assign a single label based on the connection between two sequences, such as speech analysis, logical entailment, and paraphrase detection.

- Word Sense Disambiguation (WSD): Can be framed as a classification problem in which a sense label is assigned to each instance of an ambiguous word.

- Sentence and Topic Segmentation: Deciding whether a candidate position is a boundary can be framed as a classification problem (binary or multi-class).

Key Differences

The main difference between sequence labelling and sequence classification is their output:

- Sequence Labelling: Produces a sequence of labels, one for every element of the input sequence.

- Sequence Classification: Assigns a single label to the entire input sequence.

Sequence labelling is frequently regarded as a type of structured output problem, where the output structure is a sequence of tags, whereas sequence classification maps an entire sequence to a single discrete category. Despite this, both are classification problems in a broader sense and frequently use similar underlying machine learning techniques. POS tagging is one of many NLP tasks that need structured output going beyond a single label.