A beginner-friendly guide to ontology in NLP, covering an example ontology in NLP, ontology types, core functions, and the role of ontologies in AI and natural language systems.

Ontology in NLP

There are various ways to conceptualise an ontology in Natural Language Processing (NLP), but the most basic is as a structured representation of knowledge about a particular topic or area of reality.

Definitions and Characteristics of Ontologies

- From a philosophical standpoint, ontology is the study of the nature of being. In natural language processing (NLP) and artificial intelligence (AI), an ontology is frequently described as a formal, explicit specification of a conceptualisation. In other words, it is a way of modelling a particular domain that can be written down, processed by computers, and understood by humans.

- Ontologies are logical theories that formalise an abstraction of a portion of reality that is pertinent to a given area.

- Unlike linguistic objects such as lexica, lexical databases, or thesauri, ontologies are logical theories. In an ontology, concepts and attributes are defined logically, for instance by axioms that express necessary and sufficient conditions.

- Ontologies use unique symbols whose meaning is determined by their logical relationships with other symbols. Although natural language is inherently ambiguous, ontologies are typically not concerned with concept ambiguity, because each symbol is meant to denote exactly one concept.

- In principle, ontologies are language-independent, because they are meant to reflect the structure of the world rather than the distinctions drawn by a particular natural language. Since what exists is considered independent of language, an ontology built on linguistic distinctions would risk becoming language-specific, which is problematic.

- A crucial part of ontology creation is reaching consensus among stakeholders on the relevant concepts and relationships, with meanings defined precisely enough to eliminate ambiguity. By separating concepts from the terms used to refer to them, this step draws a clear distinction between the ontological and the linguistic levels.

Purpose and Role in NLP

- By creating a shared understanding of, and agreement on, the terminology of a field, ontologies can serve as artefacts that establish “common ground” and promote communication.

- They make it possible to analyse domain knowledge, make domain assumptions explicit, separate domain knowledge from operational knowledge, and reuse domain knowledge.

- In ontological semantics, the ontology is the central resource for extracting and representing the meaning of natural language texts, reasoning about knowledge derived from them, and generating texts from meaning representations.

- The study of ontologies, and of how they interface with linguistic structure, is emerging as an active and significant area of research in knowledge representation and NLP.

Ontology Types

- Ontologies can be categorised according to how general they are:

  - Top-level ontologies describe very general concepts such as space, time, and matter.

  - Domain ontologies describe the vocabulary of a particular domain.

  - Task ontologies describe the vocabulary of a generic task or activity.

  - Application ontologies specialise concepts from domain and task ontologies for particular applications.

- The Gene Ontology (GO) is a three-part ontology created by biologists to classify gene function. Its three parts cover biological processes, cellular components, and molecular functions, and its terms are connected by “is-a” and “part-of” relationships. In NLP, GO has been used more as a target for applications, such as automatically assigning genes to GO classes based on text, than as a lexical resource.

- The UMLS (Unified Medical Language System) Semantic Network defines broad subject categories, kinds of entities, and the relationships that can hold between them.
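To make the “is-a” and “part-of” structure more concrete, here is a minimal Python sketch. It stores a tiny, made-up fragment of a GO-like hierarchy as labelled edges and collects every ancestor of a term by following the chosen relation types upward. The term names and edges are illustrative assumptions, not actual Gene Ontology data. Real GO processing would load the official OBO or OWL release and respect its rules for chaining relations.

```python
from collections import deque

# Hypothetical, simplified fragment loosely modelled on cellular-component terms.
# NOT real GO data. Each edge is (child_term, relation, parent_term).
EDGES = [
    ("inner membrane", "part_of", "mitochondrion"),
    ("inner membrane", "is_a", "membrane"),
    ("mitochondrion", "is_a", "organelle"),
    ("organelle", "is_a", "cellular component"),
    ("membrane", "is_a", "cellular component"),
]

def ancestors(term, relations=("is_a", "part_of")):
    """Return every term reachable from `term` by following the given relations upward."""
    parents = {}
    for child, rel, parent in EDGES:
        if rel in relations:
            parents.setdefault(child, []).append(parent)
    seen = set()
    queue = deque([term])
    while queue:  # breadth-first traversal of the hierarchy
        current = queue.popleft()
        for parent in parents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen

print(sorted(ancestors("inner membrane")))
print(sorted(ancestors("inner membrane", relations=("is_a",))))
```

Restricting `relations` shows how the same term has a narrower set of ancestors when only the taxonomic “is-a” links are followed.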

Ontology Construction

Ontology construction is the process of creating a formal ontological framework that records knowledge about a particular area of reality for a given task or goal. Ontologies aim to facilitate the reuse of domain knowledge and to make domain assumptions explicit.

In computer science, ontologies are logical theories that describe some aspect of reality: they are explicit specifications of a conceptualisation. They formalise an abstraction of the part of reality that is relevant to a particular domain. Regardless of the specific application, the main goal is to capture knowledge declaratively.

A typical ontology consists of classes (concepts), individuals (instances), attributes, and relations. Axioms express constraints and relationships such as subclass relations, non-taxonomic relations, domain and range restrictions on relations, cardinality constraints, part-of relations, and disjointness between classes.
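The anatomy described above can be sketched as a small Python data structure. It holds classes with subclass axioms, relations with domain and range restrictions, individuals with their asserted classes, and attribute values. All class, relation, and individual names are hypothetical.

One caution applies. In OWL, domain and range axioms are used to *infer* the types of individuals rather than to reject data. The `check_relation` method below is a closed-world validation, closer in spirit to data-validation tooling than to OWL semantics.

```python
from dataclasses import dataclass, field

@dataclass
class Ontology:
    """Toy container for the parts of an ontology (illustrative, not a real library API)."""
    # Subclass axioms: class -> its direct superclasses.
    superclasses: dict[str, set[str]] = field(default_factory=dict)
    # Relations with (domain, range) restrictions.
    relations: dict[str, tuple[str, str]] = field(default_factory=dict)
    # Individuals (instances) -> classes they are asserted to belong to.
    types: dict[str, set[str]] = field(default_factory=dict)
    # Attribute values attached to individuals.
    attributes: dict[str, dict[str, object]] = field(default_factory=dict)

    def all_classes_of(self, individual: str) -> set[str]:
        """Asserted classes plus every superclass reachable through subclass axioms."""
        result: set[str] = set()
        stack = list(self.types.get(individual, set()))
        while stack:
            cls = stack.pop()
            if cls not in result:
                result.add(cls)
                stack.extend(self.superclasses.get(cls, set()))
        return result

    def check_relation(self, subject: str, relation: str, obj: str) -> bool:
        """Closed-world check that subject and object fall under the relation's domain and range."""
        domain, range_ = self.relations[relation]
        return domain in self.all_classes_of(subject) and range_ in self.all_classes_of(obj)


# Hypothetical example content.
onto = Ontology(
    superclasses={"Dog": {"Mammal"}, "Mammal": {"Animal"}},
    relations={"hasOwner": ("Animal", "Person")},
    types={"rex": {"Dog"}, "alice": {"Person"}},
    attributes={"rex": {"age": 3}},
)
print(sorted(onto.all_classes_of("rex")))               # ['Animal', 'Dog', 'Mammal']
print(onto.check_relation("rex", "hasOwner", "alice"))  # True
print(onto.check_relation("alice", "hasOwner", "rex"))  # False
```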

By level of generality, ontologies can be divided into top-level ontologies (broad notions such as time and space), domain ontologies (the vocabulary of a particular domain), task ontologies (the vocabulary of a generic activity), and application ontologies (specialisations of domain and task ontologies). They can also be categorised by subject matter, purpose, and level of formality.

These logical theories are written in formal languages such as KIF, DAML-ONT, OIL, DAML+OIL, and the widely used Web Ontology Language (OWL). OWL is based on Description Logics (DLs), fragments of first-order logic designed to keep reasoning decidable and, where possible, efficient. DLs focus on knowledge about categories (concepts), individuals, and the relationships between them. In a DL knowledge base, the TBox (terminology) contains axioms about concepts, while the ABox contains assertions about specific individuals. Hierarchical relationships are represented by subsumption: C ⊑ D (C is subsumed by D) means that every member of C is also a member of D.
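Subsumption can be written out as a short worked entailment. This uses the standard model-theoretic semantics of DLs. An interpretation $\mathcal{I} = (\Delta^{\mathcal{I}}, \cdot^{\mathcal{I}})$ maps each concept to a subset of the domain and each individual to an element of it. An ABox assertion $C(a)$ holds when $a^{\mathcal{I}} \in C^{\mathcal{I}}$. The concept and individual names (Dog, Mammal, Animal, rex) are illustrative assumptions.

```latex
\begin{align*}
&\mathcal{I} \models C \sqsubseteq D \iff C^{\mathcal{I}} \subseteq D^{\mathcal{I}} \\
&\mathcal{T} = \{\, \mathit{Dog} \sqsubseteq \mathit{Mammal},\ \mathit{Mammal} \sqsubseteq \mathit{Animal} \,\},
 \qquad \mathcal{A} = \{\, \mathit{Dog}(\mathit{rex}) \,\} \\
&\text{In every model } \mathcal{I} \text{ of } (\mathcal{T}, \mathcal{A}):\quad
 \mathit{rex}^{\mathcal{I}} \in \mathit{Dog}^{\mathcal{I}} \subseteq \mathit{Mammal}^{\mathcal{I}} \subseteq \mathit{Animal}^{\mathcal{I}} \\
&\text{Hence } \mathcal{T} \models \mathit{Dog} \sqsubseteq \mathit{Animal}
 \text{ and } (\mathcal{T}, \mathcal{A}) \models \mathit{Animal}(\mathit{rex}).
\end{align*}
```

The TBox conclusion follows from the transitivity of set inclusion. The ABox conclusion then follows because `rex` is interpreted inside the smallest of the nested sets.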

The relationship between ontologies and lexica, or natural language more generally, is a matter of debate. Unlike linguistic objects such as lexica, lexical databases, or thesauri, ontologies are essentially logical theories. Ontological relations may serve as a foundation for sense relations in lexica, but the two are not the same. Ideally, ontology concepts are unambiguous symbols in their own right; any ambiguity should come only from the natural-language labels or definitions attached to them for human communication. Although text can guide their development, ontologies are meant to reflect the structure of the world rather than the linguistic distinctions drawn by particular languages.
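One way to picture the separation between unambiguous concept symbols and ambiguous natural-language labels is a small lexicon. It maps each language's words onto opaque concept identifiers. The identifiers (`C001` and so on) and the label table are hypothetical and heavily simplified.

```python
# Hypothetical concept identifiers: each symbol denotes exactly one concept.
CONCEPTS = {
    "C001": "financial institution",
    "C002": "sloping land beside a river",
    "C003": "domestic dog",
}

# Natural-language labels per language (simplified); one label may map to several concepts.
LABELS = {
    "en": {"bank": ["C001", "C002"], "dog": ["C003"]},
    "de": {"Bank": ["C001"], "Ufer": ["C002"], "Hund": ["C003"]},
}

def concepts_for(word: str, lang: str) -> list[str]:
    """Return the concept IDs a word may refer to in the given language."""
    return LABELS.get(lang, {}).get(word, [])

def labels_for(concept_id: str, lang: str) -> list[str]:
    """Return the words that express a concept in the given language."""
    return [w for w, ids in LABELS.get(lang, {}).items() if concept_id in ids]

print(concepts_for("bank", "en"))  # ['C001', 'C002']: the ambiguity lives in the label
print(labels_for("C002", "de"))    # ['Ufer']
```

The ambiguity of English “bank” appears only in the label table. Each concept ID stays unambiguous, and adding another language touches only `LABELS`.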

Ontology engineering relies on methodologies and design principles to guide the building process. Important principles include clarity (the meaning of the vocabulary is clear, and definitions are formal and objective), coherence (the axioms are logically consistent), extensibility (new concepts can be added easily), minimal ontological commitment (making as few assumptions as possible to facilitate reuse), and minimal encoding bias.

Common methodological steps include identifying the purpose (e.g., by formulating competency questions), capturing the ontology (identifying concepts and defining them informally), coding it in a formal language, merging existing ontologies, evaluating it, and documenting it. The field also covers collaborative approaches and ontology reuse techniques (composition, alignment, discovery, and merging). There are various engineering paradigms, including domain-specific, cognitive, linguistic, computer science, and logico-philosophical approaches.
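Part of the coherence principle can be checked mechanically. The Python sketch below assumes an ontology reduced to named subclass axioms and pairwise disjointness axioms. It flags any class that falls under two classes declared disjoint, which a DL reasoner would report as unsatisfiable. The axioms are made up to include a deliberate modelling error, and a real reasoner handles far more constructs than this told hierarchy.

```python
def superclasses_of(cls, subclass_of):
    """Return cls together with every class reachable through subclass axioms."""
    seen = {cls}
    stack = [cls]
    while stack:
        for parent in subclass_of.get(stack.pop(), ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen

def incoherent_classes(subclass_of, disjoint_pairs):
    """Classes that fall under two classes declared disjoint (so they can have no instances)."""
    classes = set(subclass_of) | {p for parents in subclass_of.values() for p in parents}
    bad = set()
    for cls in classes:
        ups = superclasses_of(cls, subclass_of)
        if any(a in ups and b in ups for a, b in disjoint_pairs):
            bad.add(cls)
    return bad

# Hypothetical axioms: a modelling error makes RobotDog both an Animal and a Machine.
subclass_of = {
    "Dog": ["Mammal"],
    "Mammal": ["Animal"],
    "RobotDog": ["Dog", "Machine"],
}
disjoint_pairs = [("Animal", "Machine")]

print(sorted(incoherent_classes(subclass_of, disjoint_pairs)))  # ['RobotDog']
```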

Ontology learning focuses on automated techniques for creating or extending ontologies, especially from text. It includes tasks such as term extraction, taxonomy induction (learning concept hierarchies), and relation learning from text collections. These tasks can be thought of as layers in an “ontology learning layer cake.” Text-driven ontology building relies heavily on machine learning and NLP techniques.
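As a rough illustration of the term-extraction layer, the sketch below counts candidate n-grams of one to three words within each sentence. It keeps those that do not begin or end with a stopword and that recur. The tiny stopword list, the frequency threshold, and the sample text are assumptions made for the example. Practical term extractors add part-of-speech patterns and statistical measures such as C-value or TF-IDF.

```python
import re
from collections import Counter

# A deliberately tiny stopword list (assumption for the example).
STOPWORDS = {"a", "an", "the", "of", "in", "is", "are", "and", "to", "for", "by", "with"}

def candidate_terms(text, max_n=3, min_freq=2):
    """Count n-grams (n <= max_n) per sentence, skipping those that start or end with a stopword."""
    counts = Counter()
    for sentence in re.split(r"[.!?]", text):
        tokens = re.findall(r"[a-z]+", sentence.lower())
        for n in range(1, max_n + 1):
            for i in range(len(tokens) - n + 1):
                gram = tokens[i:i + n]
                if gram[0] in STOPWORDS or gram[-1] in STOPWORDS:
                    continue
                counts[" ".join(gram)] += 1
    # Keep only candidates that recur often enough to look like domain terms.
    return {term: c for term, c in counts.items() if c >= min_freq}

sample = (
    "Gene expression is regulated by transcription factors. "
    "Transcription factors bind DNA. Gene expression varies across tissues."
)
terms = candidate_terms(sample)
print(sorted(t for t in terms if " " in t))  # ['gene expression', 'transcription factors']
```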

The NLP methods employed include:

- Phrase and Lexical Analysis: Used to extract terms; relies on morphological and syntactic analysis.

- Multilingual Processing: Used to extract translations and multilingual terms.

- Similarity Analysis: Used to extract synonyms.

- Parsing: Used for axiom analysis and relation extraction. Syntactic analysis provides structures useful for relation extraction, such as parse trees and dependency arcs.

- Pattern-based Techniques: Lexico-syntactic patterns, such as Hearst patterns, are frequently used to extract concept hierarchies (hyponymy) and other relations (meronymy, or part-of).

- Supervised Learning: Relation extraction can be treated as a classification task trained on annotated corpora.
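The pattern-based approach can be sketched with regular expressions for two classic Hearst patterns: “X such as Y, Z and W” and “Y and other X”. The sketch assumes single-word noun phrases and plain lowercase text, which is a strong simplification. Real systems match these patterns over part-of-speech-tagged or parsed noun phrases.

```python
import re

# A word that is not the coordinator "and"/"or" (single-word noun phrases only).
WORD = r"(?!(?:and|or)\b)\w+"
# "X such as Y, Z(,) and W" -> Y, Z, W are hyponyms of X.
SUCH_AS = re.compile(
    r"(" + WORD + r") such as ((?:" + WORD + r", )*" + WORD + r"(?:,? (?:and|or) " + WORD + r")?)"
)
# "Y and/or other X" -> Y is a hyponym of X.
AND_OTHER = re.compile(r"(" + WORD + r") (?:and|or) other (" + WORD + r")")
# Splits an enumeration like "dogs, cats, and rabbits" into its items.
SEPARATOR = re.compile(r",?\s+(?:and|or)\s+|,\s*")

def hearst_pairs(text):
    """Return (hyponym, hypernym) pairs found by two simplified Hearst patterns."""
    text = text.lower()
    pairs = []
    for m in SUCH_AS.finditer(text):
        hypernym = m.group(1)
        for hyponym in SEPARATOR.split(m.group(2)):
            pairs.append((hyponym, hypernym))
    for m in AND_OTHER.finditer(text):
        pairs.append((m.group(1), m.group(2)))
    return pairs

sample = "Shelters house animals such as dogs, cats, and rabbits. Owners of hamsters and other pets are welcome."
print(hearst_pairs(sample))
```

Each extracted pair is a candidate “is-a” edge for taxonomy induction. In practice, candidates are filtered by frequency or confidence before being added to an ontology.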

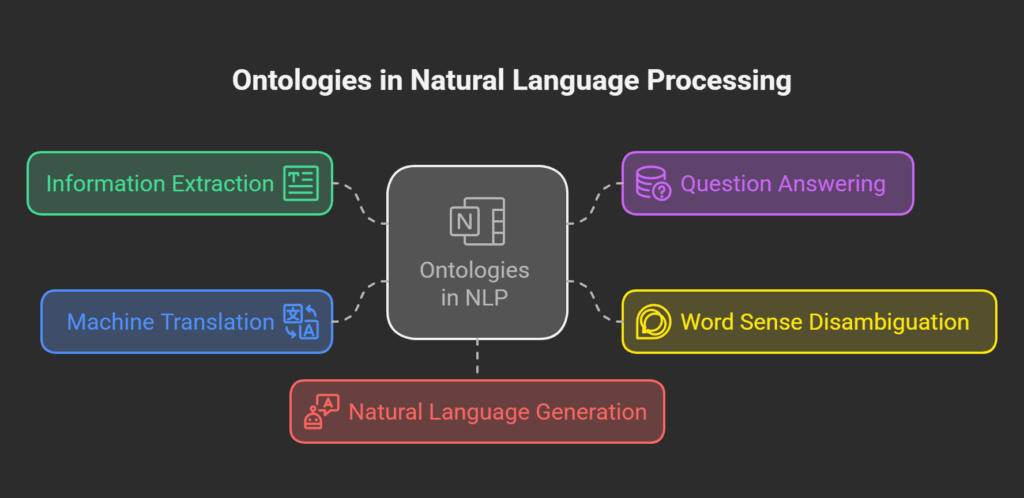

Ontologies are relevant to various NLP applications:

- Information Extraction (IE): Recognising proper names and multiword expressions is an important first step. IE tasks include ontology population and ontology creation. One key task is entity linking, which links textual mentions to entities in an ontology or knowledge base (such as Wikipedia). Relation extraction can be used to populate ontologies.

- Question Answering (QA): The knowledge bases used in QA can be viewed as ontologies, and semantic parsing can map questions onto queries over these knowledge bases.

- Word Sense Disambiguation (WSD): WSD draws on lexical resources such as WordNet, which have ontology-like features (e.g., hypernymy). It is also associated with the “one sense per collocation” hypothesis.

- Machine Translation (MT): Interlingua-based approaches may use language-neutral ontologies.

- Natural Language Generation (NLG): Report generators can employ ontologies as intermediate structures or as an initial input.
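Entity linking, mentioned under information extraction, can be sketched as dictionary lookup. The approach scans the text for the longest span that matches a known alias and links it to an ontology entity ID. The alias table and the `ENT:` identifiers are hypothetical, and matching here is purely lexical. Real linkers also rank candidate entities using context, which is how ambiguous aliases get resolved.

```python
import re

# Hypothetical alias table: lowercased surface form -> ontology entity ID.
ALIASES = {
    "new york": "ENT:NewYorkCity",
    "new york city": "ENT:NewYorkCity",
    "nyc": "ENT:NewYorkCity",
    "big apple": "ENT:NewYorkCity",
    "apple": "ENT:AppleFruit",
    "statue of liberty": "ENT:StatueOfLiberty",
}
MAX_LEN = max(len(alias.split()) for alias in ALIASES)

def link_entities(text):
    """Greedy longest-match linking: return (mention, entity_id) pairs in text order."""
    tokens = re.findall(r"\w+", text.lower())
    links = []
    i = 0
    while i < len(tokens):
        # Try the longest span first so "new york city" wins over "new york".
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            mention = " ".join(tokens[i:i + n])
            if mention in ALIASES:
                links.append((mention, ALIASES[mention]))
                i += n
                break
        else:
            i += 1  # no alias starts here; move to the next token
    return links

print(link_entities("I visited the Statue of Liberty in New York City, the Big Apple."))
```

Longest match is what keeps “Big Apple” from being linked to the fruit entity. It is also a blunt heuristic, which is why context-aware candidate ranking matters in practice.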

Challenges remain, particularly in combining multiple NLP techniques into reliable and effective text-driven ontology building.

Ontology Example in NLP

Ontology Example Breakdown:

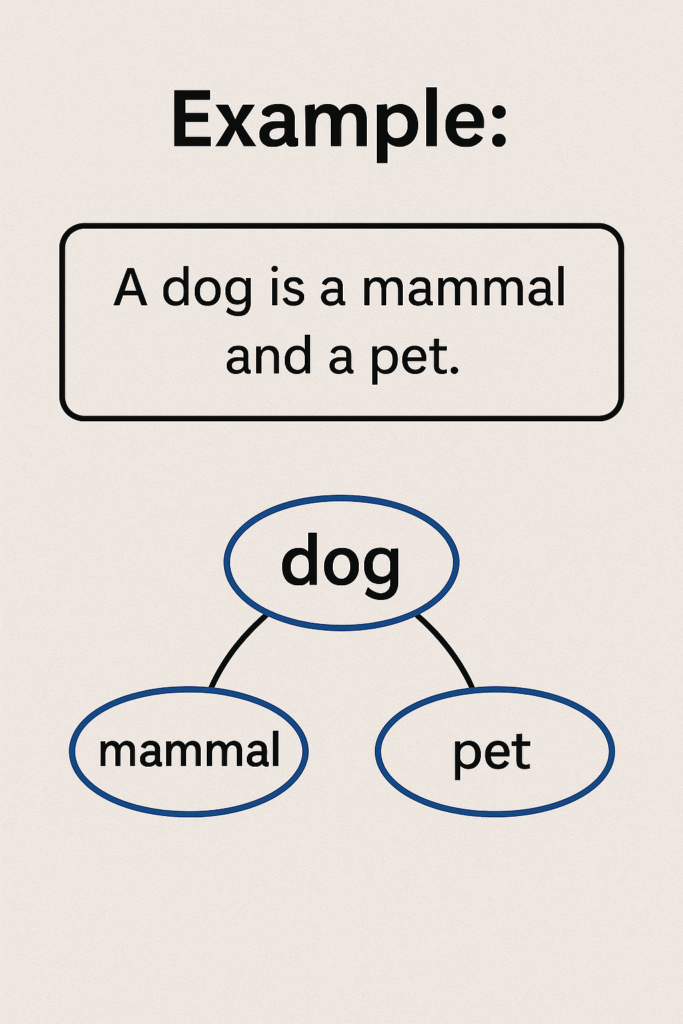

Sentence:

“A dog is a mammal and a pet.”

This sentence introduces a basic knowledge representation involving three concepts:

- Dog

- Mammal

- Pet

Ontology Structure:

The term “dog” is treated as an entity.

The entity “dog” is connected to two categories:

- Mammal (biological classification)

- Pet (human relationship/classification)

This illustrates how an ontology organises knowledge in NLP:

- It maps relationships between entities (like “dog”) and concepts or categories (like “mammal” or “pet”).

- These relationships help machines interpret context, meaning, and classification in language.

Why This Matters in NLP:

It enables machines to answer questions such as:

- “Is a dog a mammal?” → Yes

- “Can a dog be a pet?” → Yes

This is useful in semantic search, information retrieval, question answering, and AI assistants.
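The dog example can be turned into a toy question-answering sketch. The facts are stored as subject-relation-object triples, and two fixed question templates are mapped onto a transitive `is_a` lookup. The relation name `is_a`, the extra fact that mammals are animals, and the regular-expression templates are assumptions for illustration. A real system would use semantic parsing rather than fixed templates.

```python
import re

# Facts from the example sentence, plus one assumed extra fact (mammal is_a animal).
TRIPLES = {
    ("dog", "is_a", "mammal"),
    ("dog", "is_a", "pet"),
    ("mammal", "is_a", "animal"),
}

def is_a(x, y):
    """True if x equals y or reaches y through a chain of is_a facts."""
    stack, seen = [x], set()
    while stack:
        node = stack.pop()
        if node == y:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(o for s, r, o in TRIPLES if s == node and r == "is_a")
    return False

# Two fixed question templates, e.g. "Is a dog a mammal?" and "Can a dog be a pet?".
TEMPLATES = [
    re.compile(r"is an? (\w+) an? (\w+)\?"),
    re.compile(r"can an? (\w+) be an? (\w+)\?"),
]

def answer(question):
    """Answer yes/no questions by matching a template and checking is_a (closed world)."""
    q = question.lower().strip()
    for template in TEMPLATES:
        m = template.fullmatch(q)
        if m:
            return "Yes" if is_a(m.group(1), m.group(2)) else "No"
    return "I don't know"

print(answer("Is a dog a mammal?"))   # Yes
print(answer("Can a dog be a pet?"))  # Yes
print(answer("Is a dog an animal?"))  # Yes, via mammal
print(answer("Is a mammal a dog?"))   # No
```

Because the sketch treats anything not stated as false, it also answers “No” to “Is a cat a mammal?”. OWL reasoners instead make an open-world assumption, under which a missing fact is simply unknown.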

Consider the sentence “The polar bear is a mammal.” An ontology can represent this information as follows:

- Concepts: “polar bear” and “mammal”

- Relationship: “is a”

- Instance: A specific polar bear could be represented as an instance of the “polar bear” class.
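The difference between a concept and an instance can be shown in a small Python sketch. It keeps subclass links between concepts separate from instance-of links for individuals. The individual name `polar_bear_1` and the intermediate `Bear` concept are hypothetical additions. The sketch also assumes each concept has a single direct superclass.

```python
# Concept hierarchy: concept -> its single direct superclass (simplifying assumption).
SUBCLASS_OF = {"PolarBear": "Bear", "Bear": "Mammal"}
# Individuals: instance -> the concept it is directly an instance of.
INSTANCE_OF = {"polar_bear_1": "PolarBear"}

def concepts_of(individual):
    """All concepts an individual belongs to: its direct concept plus every superclass."""
    result = []
    concept = INSTANCE_OF.get(individual)
    while concept is not None:
        result.append(concept)
        concept = SUBCLASS_OF.get(concept)
    return result

print(concepts_of("polar_bear_1"))  # ['PolarBear', 'Bear', 'Mammal']
print(concepts_of("PolarBear"))     # []: a concept is not itself an instance
```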

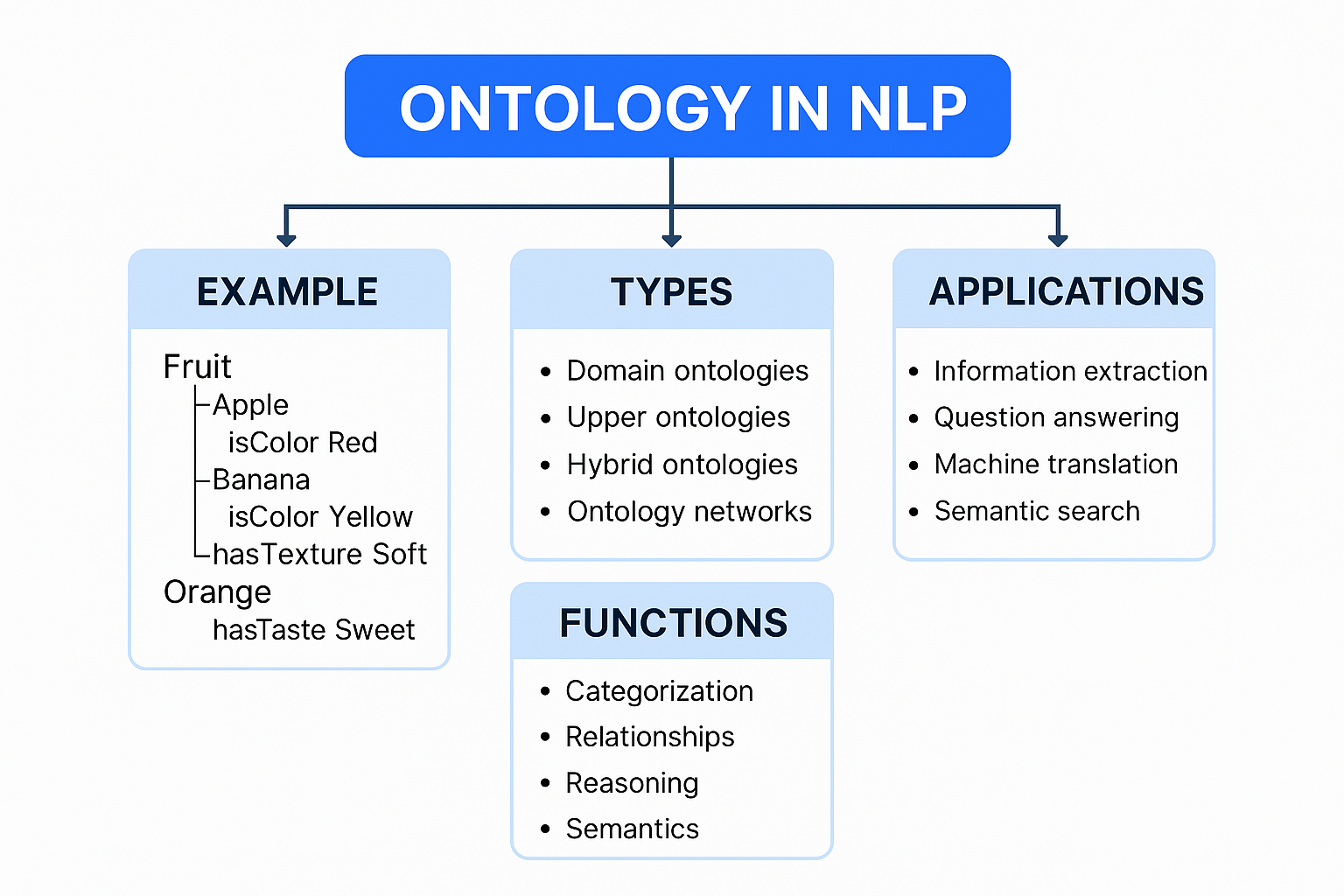

Simple Example: Fruit Ontology

Concepts (Things):

- Apple

- Banana

- Orange

Categories:

- All are types of Fruit

Properties:

- Apple isColor → Red

- Banana isColor → Yellow

- Orange hasTaste → Sweet

- Banana hasTexture → Soft

Relationships:

- Apple isA → Fruit

- Banana isA → Fruit

- Orange isA → Fruit

- Fruit isA → Food

How NLP Uses It:

If someone says:

“I like yellow fruit that is soft.”

An NLP system with this ontology can infer that they are probably talking about a banana, because the ontology states that:

- Banana is yellow

- Banana is soft

- Banana is a fruit
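The reasoning above amounts to filtering the ontology's entries by category and property values. The sketch below assumes the sentence has already been reduced to two constraints, `isColor` = Yellow and `hasTexture` = Soft. Extracting those constraints from the text is a separate NLP step not shown here. The property names follow the example, and the isA links for Banana and Orange follow from “All are types of Fruit”.

```python
# Property facts from the fruit example: (subject, property) -> value.
PROPERTIES = {
    ("Apple", "isColor"): "Red",
    ("Banana", "isColor"): "Yellow",
    ("Orange", "hasTaste"): "Sweet",
    ("Banana", "hasTexture"): "Soft",
}
# isA links: every listed fruit is a Fruit, and Fruit isA Food.
IS_A = {"Apple": "Fruit", "Banana": "Fruit", "Orange": "Fruit", "Fruit": "Food"}

def is_a(thing, category):
    """Follow isA links upward until the category is found or the chain ends."""
    while thing is not None:
        if thing == category:
            return True
        thing = IS_A.get(thing)
    return False

def find(category, **constraints):
    """Return entries under `category` whose properties match every constraint."""
    return [
        thing for thing in IS_A
        if thing != category and is_a(thing, category)
        and all(PROPERTIES.get((thing, prop)) == value for prop, value in constraints.items())
    ]

print(find("Fruit", isColor="Yellow", hasTexture="Soft"))  # ['Banana']
print(find("Food"))  # ['Apple', 'Banana', 'Orange', 'Fruit']
```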