Algorithm Analysis in Python

Algorithm analysis is the methodical assessment of algorithms’ effectiveness, with a primary focus on how much space and time they require to run as a function of input size. Designing “good” data structures and algorithms requires this procedure since time is a significant in computing and solutions should operate as quickly as possible.

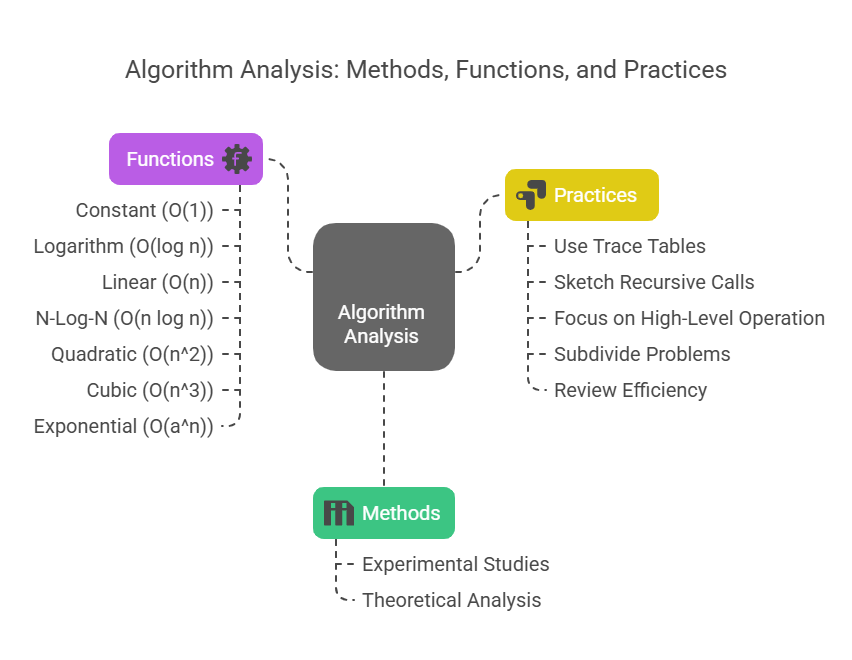

Methods of Algorithm Analysis

Algorithm analysis can be approached in two primary ways:

Experimental Studies

An algorithm’s running time can be examined by running it on different test inputs and timing the execution, if it has been implemented. For more complex analyses, use the timeit package or Python time module to quantify elapsed “wall clock” time. The constraints of experimental investigations are as follows. They can only be conducted on a small number of test inputs, they are reliant on hardware and software environments, and they necessitate a complete implementation of the algorithm, which may be inefficient if the method proves to be subpar.

Theoretical (Asymptotic) Analysis

This method examines a high-level description (such as pseudo-code) without requiring a complete implementation, it takes into account all potential inputs, and it enables the evaluation of algorithm efficiency irrespective of particular hardware and software. Primitive operations are low-level instructions that have a fixed execution time. Examples of these include arithmetic, number comparison, identifying, and index-based list element access. How many primitive operations are carried out in total depends on how long the algorithm takes to run.

A common topic of algorithm analysis is the worst-case running time, or the longest running time for all inputs of a certain size. Because it is usually simpler to determine the worst-case input, this method is favoured over average-case analysis, which necessitates the definition of a probability distribution on inputs and advanced probability theory. By planning for the worse, you can guarantee reliable performance for all inputs.

Algorithm Analysis Seven Crucial Functions (Big-Oh Notation)

It is common practice to use Big-Oh notation to describe the execution time of an algorithm as a function of input size n. By ignoring lower-order components and constant factors, it offers an abstract assessment that concentrates on the dominating growth rate. Developers can communicate with each other using this notation, which provides important efficiency data.

As the growth rate increases, the following seven functions are in order of importance:

Constant (O(1)): Regardless of input size, the running time remains constant. This is perfect for tasks like appending a node to a linked list’s tail when a pointer to the tail is kept up to date.

Logarithm (O(log n)): Running time increases relatively slowly with input size when using the logarithm (O(log n)). It is usually linked to techniques like binary search that constantly break the problem down into smaller parts.

Linear (O(n)): The size of the input directly affects the execution time. This happens when an algorithm finds the maximum element in a series, for example, by carrying out a single fundamental operation for each of n items.

N-Log-N (O(n log n)): Running time increases somewhat more quickly than linear growth but much more slowly than quadratic growth when N-Log-N (O(n log n)) is performed. This is a common behaviour of algorithms that divide a problem into smaller sections, solve them, and then aggregate the results; sorting algorithms such as Quick-Sort and Merge-Sort are excellent examples.

Quadratic (O(n^2)): The running time of a quadratic (O(n^2)) system is proportional to the square of the input size. Nestled loops that iterate linearly with n are frequently. An algorithm that operates in quadratic time is bubble sort.

Cubic (O(n^3)): The execution time is proportional to the input size cube. The Floyd-Warshall algorithm for transitive closure is one example of an algorithm that can use it, albeit it is less common than quadratic. With the exception of extremely minor issues, cubic algorithms are often avoided.

Exponential (O(a^n)): When ‘a’ is a constant above 1, the running time increases exponentially with the size of the input. Even moderately sized inputs are usually too big for these algorithms to work.

A lower growth rate algorithm (like O(n log n)) will always perform better for larger inputs than a higher growth rate algorithm (like O(n^2)), even if the latter has a smaller constant factor.

Example:

The prefix average1 algorithm’s computation of prefix averages for a sequence S will be examined.

def prefix_average1(S):

"""Return list such that, for all j, A[j] equals average of S[0], ..., S[j]."""

n = len(S)

A = [0] * n # create new list of n zeros

for j in range(n): # loop from 0 to n-1

total = 0 # begin computing S[0] + ... + S[j]

for i in range(j + 1):

total += S[i]

A[j] = total / (j + 1) # record the average

return A

# Example Usage:

input_list = [1, 2, 3, 4, 5]

output_list = prefix_average1(input_list)

print(f"Input list: {input_list}")

print(f"Prefix averages: {output_list}")

input_list_2 = [10, 20, 30]

output_list_2 = prefix_average1(input_list_2)

print(f"Input list: {input_list_2}")

print(f"Prefix averages: {output_list_2}")Output:

Input list: [1, 2, 3, 4, 5]

Prefix averages: [1.0, 1.5, 2.0, 2.5, 3.0]

Input list: [10, 20, 30]

Prefix averages: [10.0, 15.0, 20.0]This is an in-depth analysis:

- Line 3: n = len(S): This operation always takes O(1) time to complete.

- Line 4: A = * n: O(n) time is required to create and initialise a Python list of length n because each entry requires a fixed number of basic operations.

- Loop Outer Lines 5–9: for j in range(n): For j ranging from 0 to n-1, the outer loop iterates n times.

- Line 6: total is equal to zero: It takes O(n) time to execute this statement n times, once for each iteration of the outer loop.

- Lines 7-8: for i in range (j + 1) (Inner Loop): It is dependent on j to execute the inner loop.

- When j = 0, it executes once.

- There are two runs when j = 1.

- Runs n times when j = n-1. The body (total += S[i]) of the inner loop runs 1 + 2 +… + n times in total. This total is O(n^2), or n * (n + 1) / 2.

- Statement 9: A[j] = total / (j + 1): Additionally, this statement takes O(n) time to execute n times.

- Line 10 return A: Time for this procedure is O(1).

When combining these processes, the nested loop which performs O(n^2) operations becomes the major factor. Thus, O(n^2) time is required for the prefix average1 method to execute.

In contrast, an O(n) linear running time would be the outcome of a single loop that iterates n times while carrying out constant-time operations inside the loop for a more straightforward algorithm such as find max (finding the largest value in a series).

Tips for Practicing Algorithm Analysis

For efficient algorithm analysis practice:

Use Trace Tables: Create a table to record variable names and values as they are executed while examining algorithms, particularly iterative ones. By giving a history of mutations and aiding in the visualisation of changes, this facilitates pattern inference and accuracy verification.

Sketch Recursive Calls: In addition to trace tables, draw out the recursive calls for recursive algorithms to help visualise the call/return chain and gain a better understanding of parameter changes and control flow.

Focus on High-Level Operation: Before delving into specific implementations, comprehend how the algorithm functions at a high level. This creates well-thought-out solutions.

Subdivide Problems: If you’re faced with a complicated algorithm or a lot of worries, break the problem up into smaller, more manageable subproblems. Composing these smaller pieces after solving them is frequently simpler.

Review Efficiency: To obtain the most efficient run times, always examine the design of algorithms and optimise where you can, especially for loops and recursive calls. For quick algorithms, use recursion; however, for O(n^2), O(n^3), or O(2^n) difficulties, exercise caution because of the possibility of stack overflow and performance overhead.