Relation Extraction in NLP

Relation Extraction (RE) is one of the most important tasks in the Information Extraction (IE) area of Natural Language Processing (NLP). The main objective of an RE system is to find and categorize the semantic relations that hold between entities mentioned in text. This process turns unstructured natural-language text into structured data that can be used to populate knowledge graphs or databases.

RE usually concentrates on binary relations between two entities, although some methods also extract n-ary relations or events. In model-theoretic terms, a relation is a set of ordered tuples over the elements of a domain. These domain elements frequently correspond to named entities found in the text, entities resolved by coreference, or entities from a domain ontology.
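
As a minimal illustration of this model-theoretic view, the Python sketch below represents a small domain and two binary relations as sets of ordered tuples. The entity names, the relation names `employs` and `located_in`, and the helper `holds` are all hypothetical, chosen only for the example.

```python
# Hypothetical domain: entities found in text, resolved by coreference, or from an ontology.
domain = {"Alice", "Bob", "Acme Corp", "Paris"}

# Binary relations as sets of ordered pairs over the domain (illustrative names).
employs = {("Acme Corp", "Alice"), ("Acme Corp", "Bob")}
located_in = {("Acme Corp", "Paris")}

def holds(relation: set, *args: str) -> bool:
    """Hypothetical helper: True if the tuple of arguments is a member of the relation."""
    return tuple(args) in relation

# Order matters: employs(Acme Corp, Alice) holds, employs(Alice, Acme Corp) does not.
assert holds(employs, "Acme Corp", "Alice")
assert not holds(employs, "Alice", "Acme Corp")

# Every argument of every tuple is drawn from the domain.
assert all(arg in domain for pair in employs | located_in for arg in pair)
```

An RE system's job, in these terms, is to decide from the text which tuples belong in each relation.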

Types of Relations

In RE, several kinds of semantic relations are extracted:

- Generic relations, such as employs, part-of, and geospatial relations.

- Relations defined within specific evaluation campaigns or datasets, such as the 17 relations used in the ACE (Automatic Content Extraction) evaluations, the 9 directed relations between nominals in SemEval-2010 Task 8, or the 41 relation types (plus “no relation”) in the TACRED dataset.

- Relations from structured resources or ontologies, such as the hierarchical is-a (hypernymy) and part-whole (meronymy) relations in WordNet, relations between concepts in UMLS (Unified Medical Language System), or facts taken from Wikipedia infoboxes.

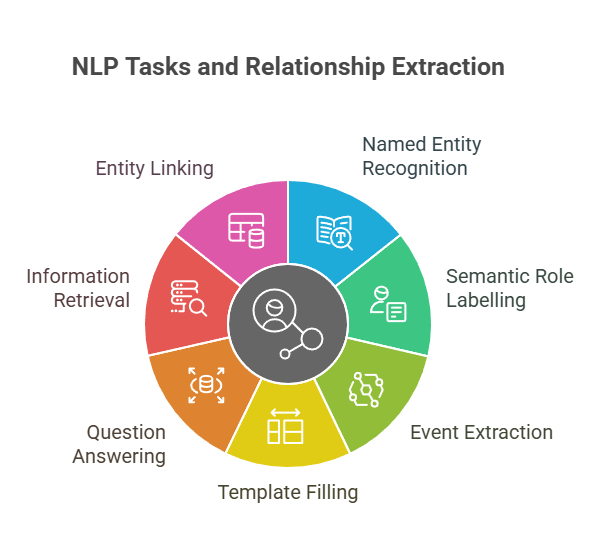

Relation Extraction and Other NLP Tasks

RE frequently builds on the output of other NLP tasks and is an essential part of many downstream applications:

- Named Entity Recognition (NER): Finding relations between entities usually requires identifying the entities first, so RE is frequently run on the output of NER.

- Semantic Role Labelling (SRL): SRL determines the participants (semantic roles) of predicates (often verbs or nouns) in a sentence and is closely related to RE. Compared to SRL, RE usually works with a smaller, predefined set of relation types.

- Event Extraction: Whereas RE concentrates on relations between entities, event extraction seeks to locate the events in which entities take part and to extract pertinent details about each event (e.g., participants, time, place).

- Template Filling: This task, which frequently overlaps with RE and event extraction, involves recognizing prototypical situations in text and extracting information from the text to fill the predefined slots of a template.

- Question Answering (QA): QA systems depend on knowledge of entities and the relations between them, and relation extraction supplies the relational information that QA reasoning requires.

- Information Retrieval (IR): Extracted relations can improve IR systems, and indexing based on semantic relations between concepts has been investigated, although one study found that using both concepts and relations decreased IR performance. Semantic similarity, which depends on understanding relations between words, can also be used for query expansion in IR.

- Entity Linking: This task links textual mentions of entities to entries in a knowledge base. Entities must be identified before they can be linked, and the knowledge base records relations between the linked entities.

Approaches and Techniques

RE systems are constructed using a variety of methods and strategies:

Pattern-Based Methods:

- These systems find and categorise relations using manually created linguistic rules or patterns.

- Lexico-syntactic patterns, such as the Hearst patterns originally proposed for hyponymy (e.g., "NP such as NP"), search for particular word sequences or grammatical structures between entities. The pattern “PERSON, a native of LOCATION,” for instance, may indicate an ENTITY-ORIGIN relation.

- These methods often have high precision but low recall, and building a thorough set of patterns takes a lot of work. Patterns can be implemented with finite-state automata and transducers, and generalised with techniques such as lemmatisation or WordNet synsets.
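
The following Python sketch shows how hand-written patterns can be applied with regular expressions. It assumes that capitalised word sequences approximate PERSON and LOCATION mentions (a real system would use NER output instead), and both patterns, including the Hearst-style "such as" pattern, are illustrative rather than taken from any particular system.

```python
import re
from typing import List, Tuple

# Assumption: capitalised word sequences stand in for PERSON/LOCATION mentions;
# a real system would match against NER output instead.
NAME = r"[A-Z][a-z]+(?: [A-Z][a-z]+)*"

# Illustrative hand-written patterns, each mapping two named groups to a relation.
PATTERNS = [
    # "PERSON, a native of LOCATION" -> ENTITY-ORIGIN(PERSON, LOCATION)
    (re.compile(rf"(?P<arg1>{NAME}), a native of (?P<arg2>{NAME})"), "ENTITY-ORIGIN"),
    # Hearst-style "<hypernym> such as <hyponym>" -> IS-A(hyponym, hypernym)
    (re.compile(r"(?P<arg2>\w+) such as (?P<arg1>\w+)"), "IS-A"),
]

def extract(text: str) -> List[Tuple[str, str, str]]:
    """Return an (arg1, relation, arg2) triple for every pattern match in text."""
    triples = []
    for pattern, relation in PATTERNS:
        for m in pattern.finditer(text):
            triples.append((m.group("arg1"), relation, m.group("arg2")))
    return triples
```

On "Marie Curie, a native of Warsaw, studied elements such as polonium." this returns ENTITY-ORIGIN(Marie Curie, Warsaw) and IS-A(polonium, elements). The low recall is easy to see: "Warsaw-born Marie Curie" expresses the same origin relation but matches neither pattern.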

Supervised Machine Learning Methods:

- Using hand-annotated datasets with entity pairs labelled with the appropriate relation type (or “no relation”), these techniques train classifiers.

- A common approach is to find pairs of named entities, usually within a single sentence, and apply a classifier to decide which relation, if any, holds between them.

- Classifiers can be conventional models (such as Random Forests, Logistic Regression, and Support Vector Machines) or deep learning models (such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), or Transformers like BERT).

- These models use a variety of features: the words in the entity mentions and their properties (such as POS tags), the words between the entities and the distance separating them, and syntactic features such as the dependency path linking the two entities.

- Neural models can use word embeddings and capture the context of the sentence. De-lexification, which replaces entity names in the input with their NER tags, helps prevent overfitting to particular words. Transformer variants such as RoBERTa or SpanBERT are well suited to the task.

- When enough hand-labeled training data is available and the test data resembles the training data, supervised approaches can achieve high accuracy. However, labelling is costly, and the models can be brittle when applied to other genres.
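
The candidate-pair step, de-lexification, and the simpler features described above can be sketched in Python as follows. The sketch assumes tokenisation, POS tagging, and NER have already been done, and that mentions arrive as hypothetical `(start, end, ner_type)` token spans; dependency-path features are omitted because they require a parser. In a full system, each feature dictionary would be paired with a gold label (or "no relation") and passed to one of the classifiers mentioned above.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical mention format: (start, end, ner_type) with token offsets [start, end).
Mention = Tuple[int, int, str]

def candidate_pairs(mentions: List[Mention]) -> List[Tuple[Mention, Mention]]:
    """Every pair of mentions in one sentence, first mention ordered before the second."""
    return list(combinations(sorted(mentions), 2))

def delexify(tokens: List[str], mentions: List[Mention]) -> List[str]:
    """Replace each (non-overlapping) entity mention with its NER tag."""
    starts = {s: (e, t) for s, e, t in mentions}
    out, i = [], 0
    while i < len(tokens):
        if i in starts:
            end, tag = starts[i]
            out.append(tag)
            i = end
        else:
            out.append(tokens[i])
            i += 1
    return out

def features(tokens: List[str], pos: List[str], m1: Mention, m2: Mention) -> Dict[str, object]:
    """Hand-crafted features for one pair; assumes m1 precedes m2, as candidate_pairs yields."""
    between = tokens[m1[1]:m2[0]]
    return {
        "m1_words": " ".join(tokens[m1[0]:m1[1]]),
        "m2_words": " ".join(tokens[m2[0]:m2[1]]),
        "m1_pos": " ".join(pos[m1[0]:m1[1]]),
        "m2_pos": " ".join(pos[m2[0]:m2[1]]),
        "m1_type": m1[2],
        "m2_type": m2[2],
        "between_words": between,   # bag of words between the mentions
        "distance": len(between),   # number of tokens separating them
    }
```

For "Tim Cook runs Apple in Cupertino ." with PER, ORG, and LOC mentions, this yields three candidate pairs, and the de-lexified sentence becomes "PER runs ORG in LOC .".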

Semi-Supervised Methods:

- These techniques need less hand-labeled data than fully supervised ones.

- Bootstrapping: Starts with a small number of seed tuples or high-precision seed patterns. It finds sentences containing the seed tuples, extracts and generalises the context around the entities to learn new patterns, and then uses these patterns to find more tuples, repeating the process. Newly learnt patterns and tuples are filtered by confidence scores.

- Distant supervision: Takes known relations between entities from existing knowledge bases (such as Freebase). In a large text corpus, every sentence that mentions two entities related in the knowledge base is assumed to express that relation. Features are collected over all sentences mentioning each entity pair, and a supervised classifier is trained on these pairs using the knowledge-base relations as labels. The classifier can learn patterns similar to hand-written ones. The main obstacle is noise: not every sentence mentioning a related entity pair actually expresses the relation, which can lower precision. Refinements such as multi-instance learning address this noise.
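
Both ideas can be sketched in a few lines of Python. To keep the example small, each sentence is reduced to a hypothetical `(entity1, middle, entity2)` triple, where `middle` is the text between two already-recognised mentions, and a pattern is simply an exact middle context. The `min_support` threshold (a pattern must connect at least that many known tuples) is a crude stand-in for the confidence scoring described above, and the `"NA"` label for pairs absent from the knowledge base is likewise an assumption of this sketch.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Pair = Tuple[str, str]
Sentence = Tuple[str, str, str]  # hypothetical (entity1, middle context, entity2)

def bootstrap(seeds: Set[Pair], sentences: List[Sentence],
              iterations: int = 2, min_support: int = 2) -> Tuple[Set[Pair], Set[str]]:
    """Alternate between learning patterns from known tuples and finding new tuples."""
    tuples: Set[Pair] = set(seeds)
    patterns: Set[str] = set()
    for _ in range(iterations):
        # Learn patterns: middle contexts seen with enough distinct known tuples.
        support: Dict[str, Set[Pair]] = defaultdict(set)
        for e1, middle, e2 in sentences:
            if (e1, e2) in tuples:
                support[middle].add((e1, e2))
        patterns |= {m for m, pairs in support.items() if len(pairs) >= min_support}
        # Apply patterns: any sentence with a learned middle context yields a tuple.
        for e1, middle, e2 in sentences:
            if middle in patterns:
                tuples.add((e1, e2))
    return tuples, patterns

def distant_supervision_bags(kb: Dict[Pair, str],
                             sentences: List[Sentence]) -> Dict[Pair, Tuple[str, List[str]]]:
    """Group sentences by entity pair and label each bag with its KB relation (or "NA")."""
    bags: Dict[Pair, List[str]] = defaultdict(list)
    for e1, middle, e2 in sentences:
        bags[(e1, e2)].append(middle)
    # Every sentence in a bag inherits the KB label: the source of distant-supervision noise.
    return {pair: (kb.get(pair, "NA"), contexts) for pair, contexts in bags.items()}

# Toy data, invented for illustration.
SENTENCES = [
    ("Apple", "is headquartered in", "Cupertino"),
    ("Google", "is headquartered in", "Mountain View"),
    ("Google", "is based in", "Mountain View"),
    ("Apple", "opened a store in", "Paris"),
    ("Microsoft", "is headquartered in", "Redmond"),
]
SEEDS = {("Apple", "Cupertino"), ("Google", "Mountain View")}
KB = {("Apple", "Cupertino"): "headquarters", ("Google", "Mountain View"): "headquarters"}
```

Here "is headquartered in" links both seed pairs, so it becomes a pattern and yields the new tuple (Microsoft, Redmond), while "opened a store in" is never learned. In the distant-supervision bags, the (Microsoft, Redmond) pair is labelled NA only because the toy KB omits it, a reminder that an incomplete knowledge base also introduces false negatives. Grouping sentences into one bag per entity pair is the starting point for multi-instance learning.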

Unsupervised Methods (Open IE – Open Information Extraction):

- Aims to extract relations from text (such as web data) without labelled training data or a predefined set of relation types.

- Relations and their arguments are usually extracted as text strings, with the relation phrase often beginning with a verb. Open IE can be viewed as a more portable form of SRL.

- Systems such as ReVerb use shallow syntactic analysis (POS tagging, chunking) to find candidate relation phrases and their arguments. They typically apply syntactic and lexical constraints and assign each extraction a confidence score, sometimes using a classifier trained on a small hand-labeled sample of extractions.

- Open IE can find a very large number of relations, but mapping the extracted relation strings onto the canonical relations of a knowledge base can be difficult. Current approaches mostly focus on relations expressed by verbs.
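
A much-simplified ReVerb-style extractor can be sketched in Python. It assumes the sentence has already been POS-tagged with Penn Treebank tags, approximates ReVerb's syntactic constraint with the pattern "one or more verbs, optionally followed by nouns, adjectives, adverbs, pronouns, or determiners and ending in a preposition or particle", and takes the nearest run of nouns on each side as the arguments. ReVerb's lexical constraint and its learned confidence function are left out, so this illustrates the idea rather than reproducing the actual system.

```python
import re
from typing import List, Optional, Tuple

Tagged = List[Tuple[str, str]]  # (word, Penn Treebank POS tag), assumed given

def _cls(tag: str) -> str:
    """Collapse a POS tag into one character: V(erb), P(rep/particle), W(ord), O(ther)."""
    if tag.startswith("VB"):
        return "V"
    if tag in ("IN", "RP", "TO"):
        return "P"
    if tag.startswith(("NN", "JJ", "RB", "PRP", "DT")):
        return "W"
    return "O"

# Simplified ReVerb-style constraint: verb(s), optionally followed by W* P.
REL = re.compile(r"V+(?:W*P)?")

def _noun_run(tagged: Tagged, idxs: range) -> Optional[str]:
    """Return the first maximal run of noun tokens met while walking idxs."""
    run: List[int] = []
    for j in idxs:
        if tagged[j][1].startswith("NN"):
            run.append(j)
        elif run:
            break
    return " ".join(tagged[j][0] for j in sorted(run)) if run else None

def open_ie(tagged: Tagged) -> List[Tuple[str, str, str]]:
    """Extract (arg1, relation phrase, arg2) triples from one tagged sentence."""
    classes = "".join(_cls(tag) for _, tag in tagged)
    triples = []
    i = 0
    while i < len(tagged):
        m = REL.match(classes, i)  # longest relation phrase starting at token i
        if not m:
            i += 1
            continue
        start, end = m.span()
        left = _noun_run(tagged, range(start - 1, -1, -1))
        right = _noun_run(tagged, range(end, len(tagged)))
        if left and right:
            triples.append((left, " ".join(w for w, _ in tagged[start:end]), right))
        i = end
    return triples
```

Given "United has a hub in Chicago" tagged as NNP VBZ DT NN IN NNP, the sketch extracts ("United", "has a hub in", "Chicago"). The relation is a plain string, which is exactly why mapping Open IE output onto knowledge-base relations is hard.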

Evaluation

How an RE system is evaluated depends on the approach it uses:

- Supervised Systems: Usually assessed on a held-out test set of human-annotated “gold standard” relations, using standard metrics such as precision, recall, and F1-score. Evaluation can be labelled, where a prediction counts only if the relation type is also correct, or unlabelled, where it suffices to identify that the two entities are related.

- Semi-supervised and unsupervised systems are harder to evaluate because they extract a large number of relations, many of them novel and absent from any annotated test set. A common method is to approximate precision by manually checking the accuracy of a random sample of extracted relation tuples. Precision can also be measured at different recall levels, for example by judging the top N extractions ranked by confidence. Recall is hard to measure directly with these methods, because the full set of correct relations in the corpus is unknown.
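
The metrics above can be sketched in Python. Gold and predicted relations are represented as hypothetical `(entity1, relation, entity2)` triples; unlabelled scoring drops the relation label but keeps the ordered entity pair (whether direction should also be ignored is an assumption that varies between evaluations). `precision_at_n` and `sampled_precision` take an `is_correct` function that stands in for human judgements.

```python
import random
from typing import Callable, List, Sequence, Set, Tuple

Triple = Tuple[str, str, str]  # hypothetical (entity1, relation, entity2)

def prf(gold: Set[Triple], pred: Set[Triple], labelled: bool = True) -> Tuple[float, float, float]:
    """Precision, recall and F1 against a gold standard."""
    # Unlabelled scoring keeps only the ordered entity pair.
    key = (lambda t: t) if labelled else (lambda t: (t[0], t[2]))
    g = {key(t) for t in gold}
    p_set = {key(t) for t in pred}
    tp = len(g & p_set)
    p = tp / len(p_set) if p_set else 0.0
    r = tp / len(g) if g else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

def precision_at_n(ranked: Sequence[Triple], is_correct: Callable[[Triple], bool], n: int) -> float:
    """Precision of the top-n extractions, ranked by descending confidence."""
    top = list(ranked[:n])
    return sum(map(is_correct, top)) / len(top) if top else 0.0

def sampled_precision(extractions: List[Triple], is_correct: Callable[[Triple], bool],
                      k: int, seed: int = 0) -> float:
    """Estimate precision by judging a random sample of k extractions."""
    sample = random.Random(seed).sample(extractions, k)
    return sum(map(is_correct, sample)) / k
```

Only `prf` reports recall, and it can do so only because it is handed a complete gold set; the two precision estimators have no such reference, which is why recall remains hard to measure for open-ended extraction.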

In summary, Relation Extraction is a fundamental IE task and a key component of many Natural Language Processing applications. It organizes information by recognizing and categorizing relations between entities, using approaches that range from rule-based patterns to weakly supervised methods and sophisticated deep learning models.