NLP system design

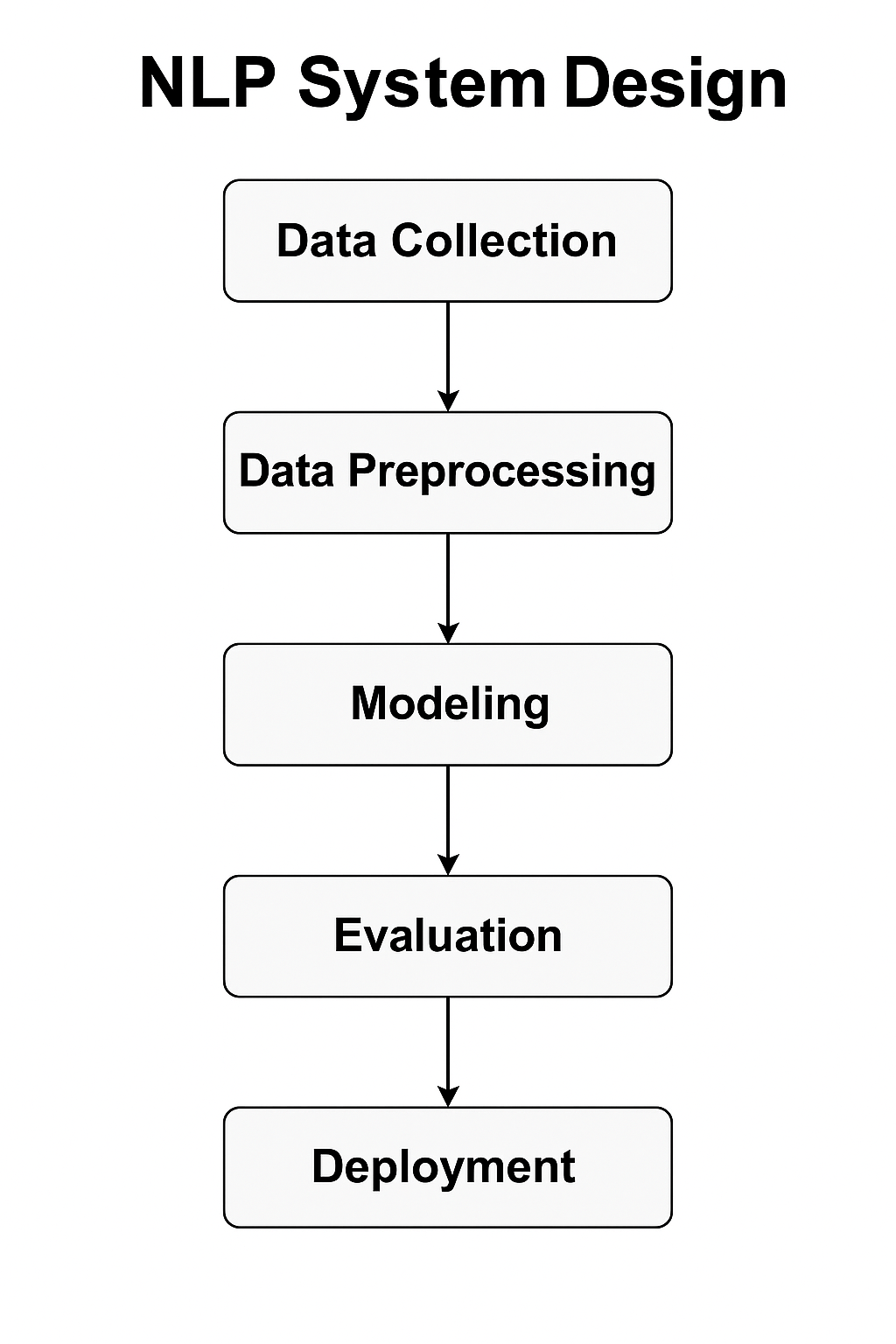

Developing and deploying Natural Language Processing (NLP) systems involves a number of interrelated steps. These systems convert unstructured speech or text into usable information, or generate human-like language, for a range of purposes. This process is a central concern of the field and draws heavily on concepts from software engineering, machine learning, and computational linguistics.

Problem Framing and Data Acquisition:

The first stage, problem framing and data acquisition, begins with understanding the precise problem or use case the NLP system is meant to address, because this determines what kind of data is required. Data acquisition is the process of locating and gathering relevant text or speech corpora. For applications such as dialogue systems, this may involve simulating interactions or running Wizard of Oz studies to collect realistic data. Corpora can be domain-specific datasets or broad collections such as the British National Corpus (BNC) or the Brown Corpus. Training supervised models often requires manually annotating corpora with linguistic information (such as POS tags or semantic roles), although this is usually costly.
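
Annotated corpora are commonly stored in simple column formats with one token per line and a blank line between sentences. The sketch below assumes a hypothetical two-column `word<TAB>tag` layout (a simplification of CoNLL-style formats, not the exact format of the BNC, the Brown Corpus, or any other specific corpus) and parses it into sentences of (word, tag) pairs that a supervised model could be trained on.

```python
from typing import List, Tuple

Sentence = List[Tuple[str, str]]

def read_tagged_corpus(text: str) -> List[Sentence]:
    """Parse a hypothetical 'word<TAB>tag' format.

    One token per line; a blank line ends a sentence.
    """
    sentences: List[Sentence] = []
    current: Sentence = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            # Blank line: close the current sentence, if any.
            if current:
                sentences.append(current)
                current = []
            continue
        word, tag = line.split("\t")  # raises ValueError on malformed lines
        current.append((word, tag))
    if current:  # the input may not end with a blank line
        sentences.append(current)
    return sentences

sample = "The\tDET\ncat\tNOUN\nsat\tVERB\n\nDogs\tNOUN\nbark\tVERB\n"
corpus = read_tagged_corpus(sample)
print(len(corpus))  # 2
print(corpus[1])    # [('Dogs', 'NOUN'), ('bark', 'VERB')]
```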

Text Extraction and Preprocessing:

To prepare raw data for processing, text must first be extracted and cleaned. This may involve handling different file formats, stripping HTML markup, and applying Unicode normalization to ensure consistent encoding. The basic preprocessing steps are:

- Text extraction and cleanup: removing noise and correcting errors, possibly including system-specific error correction. Spelling correction also belongs to this step.

- Tokenization: splitting text into smaller units such as words, subwords, phrases, or sentences. Sentence segmentation is regarded as a basic, low-level task.

- Normalization: converting text into a standard form, for example by lowercasing it.

- Filtering: removing extraneous elements such as punctuation or stop words.

- Stemming and lemmatization: reducing words to a base or root form to handle morphological variation. Stemming strips affixes heuristically, whereas lemmatization maps each word to its dictionary form (lemma). Stemming is a popular technique in information retrieval (IR).
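
The preprocessing steps above can be chained into a small pipeline. The sketch below is a minimal illustration in plain Python that assumes a regex tokenizer, a tiny hand-picked stop-word list, and a naive suffix-stripping stemmer; these are stand-ins chosen for brevity, not the Porter stemmer or any library's tokenizer.

```python
import re
import string
import unicodedata

# Assumptions for illustration: a tiny stop-word list and a naive suffix
# stripper. Real systems use larger lists and e.g. the Porter stemmer.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "and", "to", "in"}
SUFFIXES = ("ing", "ed", "es", "s")  # tried in this order
PUNCTUATION = set(string.punctuation)

def normalize_unicode(text: str) -> str:
    # NFKC maps compatibility characters to standard ones,
    # e.g. the ligature U+FB01 becomes the two letters "fi".
    return unicodedata.normalize("NFKC", text)

def tokenize(text: str) -> list[str]:
    # A word is a run of word characters/apostrophes; any other
    # non-space character becomes its own token.
    return re.findall(r"[\w']+|[^\w\s]", text)

def naive_stem(token: str) -> str:
    for suffix in SUFFIXES:
        # Require a stem of at least 3 characters to avoid over-stripping.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text: str) -> list[str]:
    tokens = tokenize(normalize_unicode(text))
    tokens = [t.lower() for t in tokens]                  # normalization
    tokens = [t for t in tokens if t not in PUNCTUATION]  # drop punctuation
    tokens = [t for t in tokens if t not in STOP_WORDS]   # drop stop words
    return [naive_stem(t) for t in tokens]                # stemming

print(preprocess("The \ufb01lters are loading, and the parser worked!"))
# ['filter', 'load', 'parser', 'work']
```

A rule list this simple also makes mistakes: `naive_stem("files")` returns `"fil"`, which is why practical systems use carefully designed stemmers or dictionary-based lemmatizers.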

Analysis and Feature Engineering:

Analysis and feature engineering convert the preprocessed text into a representation that computational models can understand and use. In the past this required extensive manual feature engineering, whereas more recent methods rely on models to learn representations automatically. Linguistic analysis provides structured information at several levels:

- Morphological analysis: analyzing word structure, identifying morphemes and lexemes, and linking word variants to their lemmas.

- Syntactic analysis: determining the grammatical structure of sentences through parsing (for example, constituency or dependency parsing). Part-of-speech (POS) tagging, which assigns a grammatical category to each word, is often a prerequisite for parsing.

- Semantic analysis: determining the meaning of language, including Word Sense Disambiguation (WSD), which resolves ambiguous word meanings from context, and identifying semantic roles.

- Discourse and pragmatic analysis: studying the relations between sentences or utterances (discourse relations), which are complex and often domain-dependent, and how meaning is interpreted in context.
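
POS tagging is often the first analysis step. A common sanity-check baseline is to give each word the tag it received most often in annotated training data, falling back to the overall most frequent tag for unseen words. The sketch below assumes a toy, hand-made training set with a simplified tag set; it illustrates the baseline, not a production tagger.

```python
from collections import Counter, defaultdict

def train_most_frequent_tag(tagged_sentences):
    """Learn a word -> most frequent tag lexicon from annotated sentences."""
    counts = defaultdict(Counter)  # word -> Counter of tags
    tag_totals = Counter()         # tag -> overall frequency
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
            tag_totals[tag] += 1
    lexicon = {word: tags.most_common(1)[0][0] for word, tags in counts.items()}
    # Unseen words get the most frequent tag in the whole training set.
    default_tag = tag_totals.most_common(1)[0][0]
    return lexicon, default_tag

def tag_tokens(tokens, lexicon, default_tag):
    return [(t, lexicon.get(t.lower(), default_tag)) for t in tokens]

# Toy annotated data with a simplified tag set (hypothetical).
training = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("a", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("the", "DET"), ("new", "ADJ"), ("book", "NOUN")],
    [("they", "PRON"), ("book", "VERB"), ("flights", "NOUN")],
    [("the", "DET"), ("book", "NOUN"), ("sells", "VERB")],
]
lexicon, default_tag = train_most_frequent_tag(training)
print(tag_tokens(["The", "book", "sings"], lexicon, default_tag))
# [('The', 'DET'), ('book', 'NOUN'), ('sings', 'NOUN')]
```

The output shows the limits of this baseline: the unseen verb "sings" receives the fallback tag NOUN, and "book" is always tagged NOUN regardless of context. Sequence models that condition on neighbouring words and tags are designed to address exactly this weakness.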

With the growth of machine learning, and deep learning in particular, feature engineering now often means learning dense vector representations (embeddings) from data, such as word embeddings or contextualized embeddings, which capture semantic and syntactic properties. Neural networks, in particular CNNs and RNNs, are widely used as feature extractors.
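
Once words are mapped to dense vectors, similarity in meaning can be approximated geometrically, most commonly with cosine similarity. The sketch below uses hypothetical three-dimensional vectors invented for illustration (real embeddings are learned from data and have hundreds of dimensions) and builds a crude sentence representation by averaging word vectors.

```python
import math

# Toy "embeddings": values are made up for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "dog": [0.8, 0.2, 0.1],
    "car": [0.1, 0.9, 0.3],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def sentence_vector(tokens):
    # Average the vectors of known tokens: a simple, order-insensitive baseline.
    vectors = [EMBEDDINGS[t] for t in tokens if t in EMBEDDINGS]
    if not vectors:
        raise ValueError("no known tokens")
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

# "cat" should be closer to "dog" than to "car" in this toy space.
print(round(cosine(EMBEDDINGS["cat"], EMBEDDINGS["dog"]), 3))
print(round(cosine(EMBEDDINGS["cat"], EMBEDDINGS["car"]), 3))
print(sentence_vector(["cat", "dog", "unknown"]))
```

Averaging discards word order, which is one reason contextualized embeddings, where a word's vector depends on its surrounding sentence, have largely replaced static ones.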

Modelling:

This stage builds the computational model that performs the core NLP task. There are several approaches:

- Heuristic or rule-based systems rely on predefined rules or scripts. They can achieve high precision but often have limited recall, and they are difficult to scale and maintain.

- Machine learning systems are trained to identify patterns in data. Learning can be supervised (using labelled data), unsupervised (finding patterns in unlabelled data), or semi-supervised (combining the two). Many NLP problems are framed as optimization problems.
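
The contrast between these two approaches can be made concrete on a toy sentiment task. The sketch below compares a hypothetical hand-written keyword rule with a supervised multinomial Naive Bayes classifier (add-one smoothing) learned from a four-sentence labelled training set. Both are illustrative stand-ins, not recommended models.

```python
import math
from collections import Counter

# Rule-based: a hypothetical hand-written keyword rule.
POSITIVE_WORDS = {"great", "excellent", "love"}

def rule_based(tokens):
    return "pos" if any(t in POSITIVE_WORDS for t in tokens) else "neg"

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.classes = sorted(set(labels))
        self.log_priors = {c: math.log(labels.count(c) / len(labels)) for c in self.classes}
        self.word_counts = {c: Counter() for c in self.classes}
        for tokens, c in zip(docs, labels):
            self.word_counts[c].update(tokens)
        self.vocab = set().union(*self.word_counts.values())
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def predict(self, tokens):
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            score = self.log_priors[c]
            for t in tokens:
                if t in self.vocab:  # words unseen in training are skipped
                    score += math.log((self.word_counts[c][t] + 1) / (self.totals[c] + v))
            scores[c] = score
        return max(scores, key=scores.get)

train_docs = [s.split() for s in [
    "great plot and excellent acting",
    "i love this film",
    "boring and far too long",
    "a dull predictable mess",
]]
train_labels = ["pos", "pos", "neg", "neg"]
model = NaiveBayes().fit(train_docs, train_labels)

review = "i adored this film".split()
print(rule_based(review), model.predict(review))  # neg pos
```

The rule falls back to "neg" because "adored" is not in its keyword list, the limited-recall problem described above, while the learned model generalizes from words such as "film" and "this" that co-occurred with positive labels. With only four training sentences, though, the classifier is just as fragile in other ways.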

Deep learning, a more recent trend, applies neural networks to NLP. Typical architectures include CNNs, RNNs, LSTM networks, and transformers (such as BERT, GPT, and T5). Encoder-decoder architectures are used for machine translation and text generation. A popular approach is to pre-train models on massive datasets and then fine-tune them for specific applications.
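
The core operation of transformer architectures is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V: each position computes a weighted average of value vectors, with weights given by a softmax over query-key similarities. The sketch below implements that formula in plain Python for readability; the inputs are made-up numbers, and the learned projections, multiple heads, and masking used in real models are omitted.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q, K, V are lists of row vectors, one row per token position.
    """
    d_k = len(K[0])
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = [[sum(q_i * k_i for q_i, k_i in zip(q, k)) / math.sqrt(d_k) for k in K]
              for q in Q]
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of the value vectors.
    output = [[sum(w * v[j] for w, v in zip(row, V)) for j in range(len(V[0]))]
              for row in weights]
    return output, weights

# Three positions with 2-dimensional vectors (made-up numbers; in a real
# transformer Q, K and V are learned projections of token embeddings).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = attention(X, X, X)
for row in weights:
    print([round(w, 2) for w in row])
```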

Evaluation:

System performance must be assessed throughout development. Evaluation can be intrinsic, assessing the quality of a component in its own right, or extrinsic, measuring how the component affects performance on an end-application task. Shared tasks and standardized metrics (such as BLEU for machine translation) have contributed greatly to the field's progress. Statistical significance testing is used to compare systems. Achieving consistent human annotation and evaluation methodology remains difficult.
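
BLEU scores a candidate translation by its modified (clipped) n-gram precision against reference translations, combining the precisions with a geometric mean and multiplying by a brevity penalty that punishes overly short output. The sketch below assumes a single reference, whitespace-tokenized input, n-grams up to length 4 with uniform weights, and no smoothing.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def bleu(candidate, reference, max_n=4):
    """Unsmoothed sentence-level BLEU against a single reference."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # unsmoothed BLEU is zero if any precision is zero
        log_precisions.append(math.log(overlap / total))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)

reference = "the cat is on the mat".split()
print(bleu(reference, reference))                              # 1.0
print(bleu("the cat sat on the mat".split(), reference))       # 0.0 (no matching 4-gram)
print(round(bleu("the cat is on the".split(), reference), 3))  # 0.819 (brevity penalty only)
```

Reported BLEU scores are normally computed at corpus level, pooling n-gram counts over the whole test set, often with standardized tokenization; the sentence-level, unsmoothed version here only shows how clipped precision and the brevity penalty interact.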

Deployment and Maintenance:

After training and evaluation, a model must be integrated into an application and made available for use. This involves considerations such as computational efficiency and the choice of system architecture (deep or shallow, data-driven or knowledge-based). Post-deployment work includes continuous performance monitoring, troubleshooting, and updating models with new data or improved methods. Developing and sustaining mature systems takes ongoing effort, including managing technical debt, understanding model behaviour (interpretability), ensuring reproducibility, and iterating on models. Parts of the machine learning workflow can be automated. Portability to new languages or domains can be a major obstacle. Building reliable, practical applications requires good software engineering practices.
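
Continuous monitoring can start simply. One possible signal, assumed here, is a shift in the distribution of the model's predictions: if a sentiment model that historically predicted roughly balanced labels suddenly predicts mostly one class, the incoming data may have changed. The sketch below compares two prediction windows using total variation distance; the threshold and label names are hypothetical.

```python
from collections import Counter

def label_distribution(predictions):
    counts = Counter(predictions)
    total = len(predictions)
    return {label: n / total for label, n in counts.items()}

def total_variation(p, q):
    """Total variation distance: 0 for identical, 1 for disjoint distributions."""
    labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(label, 0.0) - q.get(label, 0.0)) for label in labels)

def check_drift(baseline_preds, recent_preds, threshold=0.2):
    """Flag when recent predictions diverge from the baseline window.

    The threshold is a hypothetical value; in practice it would be tuned.
    """
    distance = total_variation(label_distribution(baseline_preds),
                               label_distribution(recent_preds))
    return distance, distance > threshold

baseline = ["pos"] * 50 + ["neg"] * 50
recent = ["pos"] * 80 + ["neg"] * 20
distance, drifted = check_drift(baseline, recent)
print(round(distance, 2), drifted)  # 0.3 True
```

A flagged window is a prompt for investigation, not proof that accuracy has dropped; confirming that usually requires fresh labelled data.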

Modularity is essential for managing the complexity of NLP systems: it allows components to be developed and evaluated fairly independently and, ideally, reused across many applications. In practice, however, true reusability can be hard to achieve.
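
One lightweight way to get this modularity is to give every component the same interface so that stages can be developed, tested, and recombined independently. The sketch below assumes a hypothetical token-list-in, token-list-out interface; real systems usually pass richer document objects between components, but the composition idea is the same.

```python
from typing import Callable, List

# A stage maps a list of tokens to a new list of tokens.
Stage = Callable[[List[str]], List[str]]

class Pipeline:
    """Runs independent stages in order; each can be tested or swapped alone."""

    def __init__(self, stages: List[Stage]):
        self.stages = stages

    def __call__(self, tokens: List[str]) -> List[str]:
        for stage in self.stages:
            tokens = stage(tokens)
        return tokens

def lowercase(tokens: List[str]) -> List[str]:
    return [t.lower() for t in tokens]

def drop_short(tokens: List[str]) -> List[str]:
    # Hypothetical filter: discard tokens of two characters or fewer.
    return [t for t in tokens if len(t) > 2]

# The same stages are reused in two differently configured pipelines.
search_pipeline = Pipeline([lowercase, drop_short])
display_pipeline = Pipeline([lowercase])

tokens = "An Example of Modular NLP".split()
print(search_pipeline(tokens))   # ['example', 'modular', 'nlp']
print(display_pipeline(tokens))  # ['an', 'example', 'of', 'modular', 'nlp']
```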

Numerous open-source toolkits and libraries support the development of NLP systems by providing access to data and implementations of common algorithms.

The process underpins many different applications, such as:

- Text classification

- Information extraction (NER, relation extraction, event extraction, and template filling)

- Machine translation

- Question answering

- Chatbots and conversational platforms

- Sentiment analysis

- Automatic speech recognition (ASR)

- Text-to-speech (TTS)

- Information retrieval through search engines

- Text summarization

- Semantic role labelling

- Coreference resolution

- Report generation

- Ontology construction

Although deep learning has greatly improved performance on a variety of tasks, it is not a “silver bullet”: problems with world knowledge, linguistic ambiguity, and the need for careful human supervision remain.