In this blog, we will go over BERT’s definition, architecture, training procedure, applications, comparison to GPT, and more.

BERT stands for Bidirectional Encoder Representations from Transformers. It belongs to the category of Large Language Models (LLMs): AI models trained on large volumes of text data in order to comprehend and produce human-like language. LLMs are typically built on the Transformer architecture.

BERT’s main features and key details are as follows:

Encoder-Only Transformer Architecture:

BERT was the first encoder-only model built on the Transformer architecture. Encoder-only models are designed to transform an input text sequence into a rich numerical representation, which makes them well suited to Natural Language Understanding (NLU) tasks. This architecture stands in contrast to encoder-decoder and decoder-only approaches (such as GPT).

Bidirectional Attention:

One of BERT’s distinguishing characteristics is its use of bidirectional attention: when processing a token, the model takes into account the context on both its left (before the token) and its right (after the token) at the same time. This differs from decoder-only models, which use causal (autoregressive) attention and consider only the left context. The Transformer’s self-attention mechanism lets a neural network assign each element (token embedding) in a sequence a different weight, or “attention”. The BertViz library can be used to visualise these attention weights and to show how query and key vectors combine to produce them.
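To make the contrast concrete, here is a minimal pure-Python sketch of scaled dot-product attention weights computed with and without a causal mask. The query and key vectors are made-up toy values (real models derive them from token embeddings through learned projections, across many heads), and `attention_weights` is a hypothetical helper, not a BertViz or library API.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax; scores of -inf receive weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries: Matrix, keys: Matrix, causal: bool) -> Matrix:
    """Scaled dot-product attention weights, one row per query token.

    causal=False: each token attends to every token (bidirectional, BERT-style).
    causal=True:  token i attends only to tokens 0..i (autoregressive, GPT-style).
    """
    d = len(queries[0])
    rows = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(-math.inf)  # hide right context (future tokens)
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        rows.append(softmax(scores))
    return rows


# Toy query/key vectors for a 3-token sequence (made-up numbers).
q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bi = attention_weights(q, k, causal=False)
ca = attention_weights(q, k, causal=True)
print([round(w, 2) for w in bi[0]])  # token 1 also weighs tokens 2 and 3
print([round(w, 2) for w in ca[0]])  # [1.0, 0.0, 0.0]: token 1 sees no right context
```

The only difference between the two modes is the mask: the bidirectional rows spread weight over the whole sequence, while each causal row is zero for every position to the right of the query token.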

Training Process:

BERT models are trained in two main phases: unsupervised pre-training and supervised fine-tuning. This procedure is an example of transfer learning, in which general knowledge gained during pre-training helps the model learn downstream tasks.

Pre-training Strategies:

The original BERT model was pre-trained with two distinct objectives:

- Masked Language Modelling (MLM): A portion of the input tokens is masked, and the model is trained to predict the original tokens at the masked positions. Because each prediction can draw on words on both sides of the mask, the model learns representations that take both left and right context into account.

- Next Sentence Prediction (NSP): The model is shown pairs of sentences and trained to determine whether the second sentence is the genuine next sentence from the original text or a random sentence. This objective was intended to capture the knowledge needed for applications involving relationships between sentences. However, later studies indicated that removing the NSP objective could improve performance on certain downstream NLP tasks.
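The MLM corruption step can be sketched in a few lines of Python. The selection rate and split used here follow the recipe described in the original BERT paper (about 15% of positions selected; of those, 80% replaced with `[MASK]`, 10% with a random token, 10% left unchanged). The per-token coin flip, whitespace tokenization, toy vocabulary and the `mask_tokens` helper are simplifying assumptions for illustration, not BERT’s actual preprocessing code, which works on WordPiece IDs and skips special tokens.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    """Apply BERT-style MLM corruption to a token list.

    Returns (corrupted_tokens, labels): labels[i] is the original token at
    positions selected for prediction and None elsewhere (no loss there).
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK  # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


sentence = "the cat sat on the mat because it was tired".split()
corrupted, labels = mask_tokens(
    sentence, vocab=sorted(set(sentence)), mask_prob=0.3, rng=random.Random(0))
print(corrupted)
print(labels)
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot assume that only `[MASK]` positions matter, which reduces the mismatch with fine-tuning inputs that never contain `[MASK]`.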

Pre-training Data:

The original BERT model was pre-trained on 3.3 billion words: the 800-million-word BooksCorpus plus 2.5 billion words taken from English Wikipedia. More recent models are trained on even larger corpora.

Fine-tuning:

After unsupervised pre-training, the model is adapted to specific downstream NLP tasks. This involves adding task-specific layers (commonly called the “head”) on top of the pre-trained model (the “body”) and then training the combined model on a labelled dataset for the target task.
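A minimal sketch of a “head” on top of a “body”: a linear layer plus softmax applied to a sentence vector, such as BERT’s `[CLS]` output, and trained with cross-entropy. As simplifying assumptions, the body is frozen and replaced by made-up vectors, and `ClassificationHead` is a hypothetical name. In actual fine-tuning, gradients also flow into the pre-trained encoder, so the whole combined model is updated.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


class ClassificationHead:
    """Task-specific head: a linear layer plus softmax over a sentence vector."""

    def __init__(self, hidden_size: int, num_labels: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Small random weights (illustrative init), one row per label.
        self.W = [[rng.gauss(0.0, 0.02) for _ in range(hidden_size)]
                  for _ in range(num_labels)]
        self.b = [0.0] * num_labels

    def probs(self, h: Vector) -> Vector:
        logits = [sum(w * x for w, x in zip(row, h)) + bias
                  for row, bias in zip(self.W, self.b)]
        m = max(logits)
        exps = [math.exp(z - m) for z in logits]
        total = sum(exps)
        return [e / total for e in exps]

    def sgd_step(self, h: Vector, label: int, lr: float = 0.5) -> float:
        """One cross-entropy gradient step on the head only; returns the pre-update loss."""
        p = self.probs(h)
        loss = -math.log(p[label])
        for c in range(len(self.W)):
            grad = p[c] - (1.0 if c == label else 0.0)  # dLoss/dlogit_c
            self.b[c] -= lr * grad
            self.W[c] = [w - lr * grad * x for w, x in zip(self.W[c], h)]
        return loss


# Made-up stand-ins for frozen [CLS] vectors (hidden size 4) with binary labels.
data: List[Tuple[Vector, int]] = [
    ([1.0, 0.9, 0.0, 0.1], 1), ([0.0, 0.1, 1.0, 0.8], 0),
    ([0.8, 1.0, 0.2, 0.0], 1), ([0.1, 0.0, 0.9, 1.0], 0),
]
head = ClassificationHead(hidden_size=4, num_labels=2)
first_epoch_loss = sum(head.sgd_step(h, y) for h, y in data)
last_epoch_loss = first_epoch_loss
for _ in range(50):
    last_epoch_loss = sum(head.sgd_step(h, y) for h, y in data)
print(round(first_epoch_loss, 3), round(last_epoch_loss, 3))  # loss shrinks with training
```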

How BERT Works

BERT first embeds a sequence of tokens (summing token, position, and segment embeddings) and then passes the result through its stack of Transformer encoder blocks. When processing each word, the encoder’s self-attention mechanism lets it attend to every word in the sequence at once, building contextual representations. The output is a sequence of vectors, one per input token, each encoding that token’s meaning in the particular context of the sentence.
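The embedding step can be sketched as follows for a sentence pair packed as `[CLS] A [SEP] B [SEP]`, with segment id 0 for the first sentence (including `[CLS]` and its `[SEP]`) and 1 for the second. The tables are random toy values with tiny dimensions (in BERT they are learned during pre-training), and the helper names are hypothetical; the real model also applies layer normalisation and dropout to the summed vectors before the encoder stack.

```python
import random
from typing import Dict, List, Tuple

Vector = List[float]


def make_table(rows: int, dim: int, rng: random.Random) -> List[Vector]:
    """Random toy embedding table (in BERT these are learned during pre-training)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(rows)]


def bert_input_embeddings(
    tokens_a: List[str], tokens_b: List[str], vocab: Dict[str, int],
    tok_emb: List[Vector], pos_emb: List[Vector], seg_emb: List[Vector],
) -> Tuple[List[str], List[int], List[Vector]]:
    """Pack a sentence pair as [CLS] A [SEP] B [SEP] and sum the three embeddings."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    segments = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    summed = []
    for pos, (tok, seg) in enumerate(zip(tokens, segments)):
        t, p, s = tok_emb[vocab[tok]], pos_emb[pos], seg_emb[seg]
        summed.append([a + b + c for a, b, c in zip(t, p, s)])  # token + position + segment
    return tokens, segments, summed


rng = random.Random(0)
words = ["[CLS]", "[SEP]", "the", "cat", "sat", "it", "purred"]
vocab = {w: i for i, w in enumerate(words)}
DIM, MAX_LEN = 8, 16  # toy sizes; BERT-Base uses 768 and 512
tok_emb = make_table(len(vocab), DIM, rng)
pos_emb = make_table(MAX_LEN, DIM, rng)
seg_emb = make_table(2, DIM, rng)
tokens, segments, x = bert_input_embeddings(
    ["the", "cat", "sat"], ["it", "purred"], vocab, tok_emb, pos_emb, seg_emb)
print(tokens)    # ['[CLS]', 'the', 'cat', 'sat', '[SEP]', 'it', 'purred', '[SEP]']
print(segments)  # [0, 0, 0, 0, 0, 1, 1, 1]
```

Because position and segment vectors are added in, the two `[SEP]` tokens enter the encoder with different input vectors even though they share a token embedding.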

Output: Contextual Embeddings

Pre-training yields learned token embeddings and the parameters of the bidirectional encoder, which together let the model generate contextual embeddings for new text inputs. Each embedding represents the meaning of a word or subword token in the particular context of its sentence. Visualizations of BERT’s attention weights show how the model focuses on particular context words to distinguish between a word’s different meanings, such as “flies” used as a verb or as a noun.

Architectural Details:

The original BERT model (BERT-Base) used a vocabulary of about 30,000 subword tokens built with WordPiece, one of a family of subword tokenization methods. It consisted of 12 Transformer encoder layers, each with a 12-head multi-head attention layer, and had a hidden size of 768, for a total of more than 100 million parameters. A practical restriction of Transformers such as BERT is that computation time and memory grow quadratically with input length, because self-attention compares every token with every other token; inputs are therefore limited to a fixed maximum length, typically 512 subword tokens for BERT.
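WordPiece’s segmentation of a single word can be sketched as greedy longest-match-first lookup, with non-initial pieces marked by a `##` prefix as in BERT’s vocabulary. This is a simplified sketch under stated assumptions: the tiny vocabulary is made up, learning the vocabulary itself is not shown, and BERT’s full tokenizer also lower-cases (for uncased models) and splits on whitespace and punctuation before this step. `wordpiece_tokenize` is a hypothetical helper name.

```python
from typing import List, Set


def wordpiece_tokenize(word: str, vocab: Set[str], unk: str = "[UNK]",
                       max_chars: int = 100) -> List[str]:
    """Greedy longest-match-first WordPiece segmentation of a single word."""
    if len(word) > max_chars:
        return [unk]  # safeguard against pathologically long inputs
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # try the longest remaining substring first
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # continuation pieces carry the ## prefix
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece matched: the whole word becomes [UNK]
        pieces.append(match)
        start = end
    return pieces


toy_vocab = {"un", "##aff", "##able", "play", "##ing", "##s", "the"}
print(wordpiece_tokenize("unaffable", toy_vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("playing", toy_vocab))    # ['play', '##ing']
print(wordpiece_tokenize("xyz", toy_vocab))        # ['[UNK]']
```

With a fixed vocabulary of roughly 30,000 pieces, rare words are broken into known fragments instead of being mapped wholesale to `[UNK]`.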

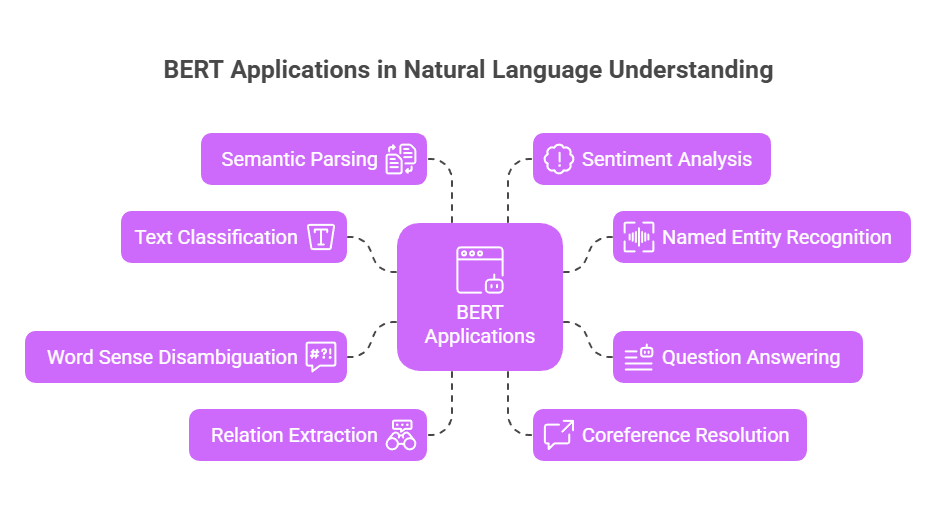

Applications of BERT

Encoder-only BERT models are especially successful at a variety of NLU tasks, including:

- Text classification.

- Named Entity Recognition (NER): Sequence labelling models for NER can employ BERT as an encoder.

- Question Answering (QA): In IR-based QA systems, BERT can be used as an encoder for the question and the passage, typically predicting the start and end positions of the answer span within the passage. BERT is also used in Dense Passage Retrieval (DPR) for QA.

- Word Sense Disambiguation (WSD): Modern WSD algorithms rely heavily on contextual embeddings such as those produced by BERT.

- Relation Extraction: By fine-tuning a linear layer on top of the sentence representation, BERT can be utilised as an encoder to classify the relationship between entities.

- Coreference Resolution: By using BERT as an encoder, span embeddings can be obtained.

- Semantic Parsing: Question tokens can be pre-encoded by BERT and then sent to an encoder-decoder model to be converted into logical forms.

- Sentiment Analysis/Affect Recognition: BERT embeddings can be used for tasks such as sentiment score prediction, although biases in the training data may affect performance.

At the time of its introduction, BERT considerably improved the state of the art on NLU benchmarks such as GLUE.
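For the span-prediction setup described under Question Answering, one common decoding step picks the pair of positions that maximises start score plus end score, subject to the end not preceding the start and a maximum answer length. The logits below are invented for illustration (in a fine-tuned model they come from a learned layer over BERT’s output vectors), and `best_answer_span` is a hypothetical helper.

```python
from typing import List, Tuple


def best_answer_span(start_logits: List[float], end_logits: List[float],
                     max_answer_len: int = 30) -> Tuple[int, int, float]:
    """Return (start, end, score) maximising start_logits[start] + end_logits[end],
    subject to start <= end and end - start < max_answer_len."""
    best = (0, 0, float("-inf"))
    for i, s in enumerate(start_logits):
        for j in range(i, min(i + max_answer_len, len(end_logits))):
            score = s + end_logits[j]
            if score > best[2]:
                best = (i, j, score)
    return best


# Question: "When was BERT released?"  Passage tokens with made-up logits.
passage = ["bert", "was", "released", "by", "google", "in", "2018"]
start = [0.1, 0.0, 0.2, 0.1, 0.3, 0.5, 2.5]
end = [0.0, 0.1, 0.1, 0.2, 0.4, 0.3, 3.0]
i, j, score = best_answer_span(start, end)
print(passage[i : j + 1])  # ['2018']
```

The `start <= end` constraint matters: an unconstrained argmax of each logit list separately can return an end position before the start.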

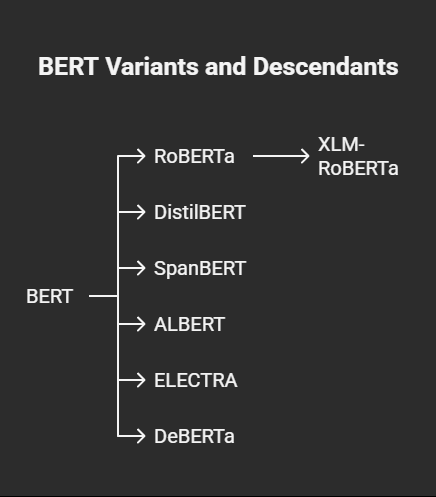

BERT Variants and Descendants:

RoBERTa, DistilBERT, SpanBERT, ALBERT, ELECTRA, and DeBERTa are just a few of the many variants and descendants of BERT, many of which are also LLMs. For instance, DistilBERT is a smaller, faster version produced with knowledge distillation. RoBERTa re-examined BERT’s pre-training recipe and found that removing NSP, training for longer, and using more data all led to improvements. XLM-RoBERTa extends this approach to multilingual pre-training. DeBERTa makes architectural changes to better model content and positional dependencies.

Utilisation in Assessment:

BERT embeddings are also used in metrics that assess the output of other NLP systems. For example, BERTScore, a metric used to evaluate machine translation, compares candidate translations with reference translations using BERT embeddings.
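The core of BERTScore is greedy matching of token embeddings by cosine similarity: precision averages, over candidate tokens, the best similarity to any reference token; recall does the reverse; and F1 combines the two. The sketch below uses made-up 2-dimensional vectors in place of real BERT embeddings and omits the optional idf weighting and baseline rescaling described by the BERTScore authors; `bertscore` here is a hypothetical function, not the API of any released package.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def bertscore(candidate: List[Vector], reference: List[Vector]) -> Tuple[float, float, float]:
    """Greedy-matching precision, recall and F1 over per-token embeddings."""
    sims = [[cosine(c, r) for r in reference] for c in candidate]
    # Precision: each candidate token matched to its most similar reference token.
    precision = sum(max(row) for row in sims) / len(candidate)
    # Recall: each reference token matched to its most similar candidate token.
    recall = sum(max(row[j] for row in sims) for j in range(len(reference))) / len(reference)
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy 2-d "embeddings": the candidate has one matching token and one extra token.
candidate = [[1.0, 0.0], [0.0, 1.0]]
reference = [[1.0, 0.0]]
p, r, f = bertscore(candidate, reference)
print(round(p, 3), round(r, 3), round(f, 3))  # 0.5 1.0 0.667
```

The extra candidate token lowers precision but not recall, mirroring how a translation that adds unsupported content is penalised.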

BERT vs GPT

Although both BERT and GPT (Generative Pre-trained Transformer) are well-known language models that have been trained on large datasets, their main use cases and designs are different.

| Feature | BERT | GPT |
| --- | --- | --- |
| Architecture | Encoder-only Transformer | Decoder-only Transformer |
| Context Approach | Bidirectional: processes context from both left and right simultaneously | Unidirectional (autoregressive): processes context from left to right |
| Pre-training Objectives | Masked Language Modelling (MLM) and Next Sentence Prediction (NSP) | Predicting the next word in a sequence |
| Primary Strength | Understanding context and relationships within text | Generating coherent and contextually relevant text |
| Typical Tasks | Text classification, NER, Question Answering, Sentiment Analysis | Text generation, Dialogue systems, Summarization, Creative writing |
| Adaptation | Often fine-tuned on specific downstream tasks with labelled data | Designed to perform few-shot learning with minimal task-specific data |

BERT’s encoder-only architecture and bidirectional training make it well suited to NLU tasks, whereas GPT’s decoder-only architecture and unidirectional training are better suited to text generation. Because BERT has no decoder, it is difficult to prompt it or use it to generate text directly.

Training BERT can be computationally expensive. For example, training BERT-Base on 16 TPU chips took 4 days and cost an estimated $500 USD, and training BERT-Large on 64 TPU chips also took 4 days. Training larger BERT models is highly parallelized and takes advantage of distributed GPU computing; NVIDIA, for example, has used its GPU supercomputers to set records for BERT training time.