What are Deep Generative Models?

A deep generative model (DGM) is a type of artificial intelligence (AI) and machine learning (ML) model built on deep neural networks. Its primary objective is to learn the underlying patterns or distributions of intricate, high-dimensional training datasets, and then to use what it has learnt to create new, comparable data instances. In materials science, for example, a DGM can propose novel material compositions, whereas conventional machine learning models typically only predict the properties of given ones. This capacity to model intricate data distributions and generate fresh data makes DGMs essential to contemporary AI and opens up applications that would otherwise be challenging or unattainable.

Neural networks known as “deep generative models” can create fresh, realistic samples by learning to mimic the underlying distribution of data. They incorporate architectures such as Normalizing Flows, Generative Adversarial Networks (GANs), and Variational Autoencoders (VAEs), and integrate deep learning with probabilistic modelling. These models, which learn patterns from data in an unsupervised or semi-supervised manner, are frequently employed in voice modelling, text generation, and image synthesis.

History

Since the 1950s, generative models have been an essential component of artificial intelligence. Gaussian Mixture Models and Hidden Markov Models (HMMs) were early examples. The growth of deep learning gave rise to a new family of techniques, DGMs, which blend deep neural networks with generative models. The discipline has advanced quickly, particularly with recent breakthroughs in creating lifelike voices, images, and videos, often referred to as “deepfakes”. Improvements in stochastic optimization techniques and in parameterizing these models with deep neural networks have been the main drivers of this success.

How Deep Generative Models Work

Deep generative models work by learning the probability distribution underlying their training dataset. With this knowledge they can synthesize new data that credibly resembles the real data. Generative Adversarial Networks (GANs) are one method for producing more realistic results. In a GAN, a “generator” network creates synthetic examples while a “discriminator” network is trained to tell them apart from real data. The generated instances serve as negative training examples for the discriminator, and the generator in turn improves by learning to produce data that the discriminator can no longer distinguish from real data.
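
The adversarial objective can be made concrete with a small sketch. It assumes the common binary cross-entropy formulation with a non-saturating generator loss, and the discriminator outputs below are hypothetical numbers rather than the result of any trained network.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples are labelled 1, generated samples 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when the discriminator rates generated samples as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs D(x) in (0, 1): the probability that x is real.
d_on_real = [0.9, 0.8, 0.95]
d_on_fake = [0.1, 0.3, 0.2]

print("discriminator loss:", discriminator_loss(d_on_real, d_on_fake))
print("generator loss:", generator_loss(d_on_fake))
```

In a full GAN the two losses are minimised in alternation, each with respect to its own network's parameters; that training loop is omitted here.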

In statistical classification, a generative model learns the joint probability distribution P(X,Y) of an observable variable X and a target variable Y. The conditional probability P(Y|X=x) needed for classification can then be calculated from it. One of the main advantages of generative algorithms is that they can use P(X,Y) to create new data comparable to existing data.
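
As a minimal illustration of going from the joint to the conditional, the sketch below normalises a joint table over the labels for a fixed x. The table values and the names `joint` and `conditional` are made up for the example.

```python
# Hypothetical joint distribution P(X, Y) over a weather feature X and a label Y.
joint = {
    ("sunny", "play"): 0.40,
    ("sunny", "stay"): 0.10,
    ("rainy", "play"): 0.15,
    ("rainy", "stay"): 0.35,
}

def conditional(joint, x):
    """Return P(Y | X = x) by dividing the joint by the marginal P(X = x)."""
    marginal_x = sum(p for (xi, _), p in joint.items() if xi == x)
    return {y: p / marginal_x for (xi, y), p in joint.items() if xi == x}

posterior = conditional(joint, "rainy")
prediction = max(posterior, key=posterior.get)
print(posterior, prediction)
```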

Architecture

Deep architectures with numerous layers of nonlinear processing are used to build DGMs. Many older machine learning algorithms, on the other hand, feature “shallow architectures” with simpler internal representations. For DGMs to function well, the scale of training data must usually rise in proportion to the scale of neural networks.

Examples of how DGMs are structured include:

Deep Belief Networks (DBNs)

Deep Belief Networks (DBNs) are probabilistic graphical models with multiple layers of hidden variables. The top two layers form a Restricted Boltzmann Machine (RBM), while the lower layers form a directed sigmoid belief network. Each nonlinear layer captures more intricate patterns in the data. DBNs can be trained efficiently one layer at a time.

Deep Boltzmann Machines (DBMs)

In contrast to DBNs, Deep Boltzmann Machines (DBMs) are undirected graphical models, also known as Markov random fields, in which all connections between layers are undirected. They have visible-to-hidden and hidden-to-hidden connections between adjacent layers, but no connections within a layer. DBMs can learn internal representations that capture intricate statistical structure at higher levels.

Features and Key Concepts

Data Distribution Learning: To produce new data points that are comparable to the original set, generative models learn the underlying data distribution of a training set. They are able to comprehend and reproduce the subtleties of the data.

Latent Space: Variational Autoencoders (VAEs) can generate variations of input data with seamless transitions by using a latent space to represent data continuously and smoothly.

In materials science, for example, deep generative models that use a latent space can help design materials with desired electrochemical properties.

Probability and Statistics: Generative modelling uses probability and statistics to describe observed events or target variables. By learning the joint probability distribution P(X,Y), these models can generate both input data X and target labels Y.

High-Level Representations: For tasks like object recognition, speech perception, and language comprehension, DGMs’ ability to extract high-level representations from high-dimensional sensory data is essential. A significant amount of unlabelled data is used to construct these representations.

Types of Deep Generative Models

Depending on the application, a variety of deep learning methods can be applied. Typical kinds include:

Variational Autoencoders (VAEs)

Variational Autoencoders (VAEs) learn to reconstruct the samples in a dataset, and to produce new ones, by way of a latent space. They encode and decode data using probabilistic models.
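
Three small pieces of VAE machinery can be sketched without a neural network: sampling a latent code with the reparameterization trick, the KL term that regularises the latent space towards a standard normal prior, and interpolation between two codes. The sketch assumes a diagonal Gaussian posterior; the `mu` and `log_var` values stand in for the outputs of a hypothetical encoder.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), element-wise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))

def interpolate(z_a, z_b, t):
    """Linear interpolation between two latent codes for t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

rng = random.Random(0)
# Hypothetical encoder outputs for two inputs (2-D latent space).
z_a = reparameterize([0.0, 1.0], [-2.0, -2.0], rng)
z_b = reparameterize([2.0, -1.0], [-2.0, -2.0], rng)
path = [interpolate(z_a, z_b, t / 4) for t in range(5)]
print(path)
```

Decoding each point along `path` with a trained decoder is what yields the smooth transitions between samples; the decoder itself is outside the scope of this sketch.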

Generative Adversarial Networks (GANs)

Generative Adversarial Networks are made up of two neural networks, a generator and a discriminator, that compete with each other to produce new data instances that are comparable to the training data yet distinct from it. They are particularly good at creating images.

Autoregressive Models

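These models predict each element of a sequence from the elements that precede it, which factorizes the joint distribution into a product of conditional distributions. The same idea underlies classical statistical models that use historical data to comprehend and forecast future values in a time series.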
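
Generating one element at a time, each conditioned on what came before, can be sketched with a deliberately simple model. The example assumes a first-order (bigram) character model fitted by counting; deep autoregressive models replace the count table with a neural network that conditions on the whole prefix. The training strings and function names are invented for illustration.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(sequences):
    """Count next-token frequencies for each previous token ('^' = start, '$' = end)."""
    counts = defaultdict(Counter)
    for seq in sequences:
        tokens = ["^"] + list(seq) + ["$"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def sample(counts, rng, max_len=20):
    """Generate a sequence token by token, each drawn from P(next | previous)."""
    out, prev = [], "^"
    while len(out) < max_len:
        options = counts[prev]
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        if nxt == "$":
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)

model = fit_bigram(["abab", "abba", "baba"])
print(sample(model, random.Random(0)))
```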

Normalizing Flow Models

These models use a sequence of invertible mappings to transform a simple probability density into a complex distribution.
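
The change-of-variables rule behind this can be shown with the simplest possible invertible mapping. The sketch assumes a single one-dimensional affine map applied to a standard normal base density; the class name `AffineFlow` and its parameters are illustrative, and real flows stack many learnt, more expressive layers.

```python
import math

def standard_normal_logpdf(z):
    """Log-density of the base distribution N(0, 1)."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))

class AffineFlow:
    """Invertible map x = scale * z + shift, with scale != 0."""

    def __init__(self, scale, shift):
        self.scale = scale
        self.shift = shift

    def forward(self, z):
        return self.scale * z + self.shift

    def inverse(self, x):
        return (x - self.shift) / self.scale

    def log_prob(self, x):
        # Change of variables: log p_x(x) = log p_z(f^{-1}(x)) - log|scale|
        return standard_normal_logpdf(self.inverse(x)) - math.log(abs(self.scale))

flow = AffineFlow(scale=2.0, shift=3.0)
print(flow.forward(0.5), flow.log_prob(3.0))
```

Because the map is invertible and its Jacobian is known, the model gives exact log-likelihoods as well as samples, which is the main appeal of this family.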

Energy-Based Models

Rooted in statistical physics, these models specify a scalar energy function that assigns low energy to training data points and high energy to improbable ones. New data is then generated to match the distribution learnt from the training set.
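
The link between energy and probability is the Boltzmann form p(x) = exp(-E(x)) / Z. The sketch below evaluates it over a small finite set of states, where the normalising constant Z can be summed exactly; the quadratic `energy` function is a hypothetical stand-in for a learnt one.

```python
import math

def energy(x, center=2.0):
    """Hypothetical quadratic energy, lowest at `center`."""
    return 0.5 * (x - center) ** 2

def boltzmann(states, energy_fn):
    """Return p(x) = exp(-E(x)) / Z over a finite collection of states."""
    states = list(states)
    weights = [math.exp(-energy_fn(x)) for x in states]
    z = sum(weights)  # partition function
    return {x: w / z for x, w in zip(states, weights)}

probs = boltzmann(range(5), energy)
print(probs)
```

For high-dimensional data Z cannot be summed like this, which is why training and sampling energy-based models typically rely on approximations such as Markov chain Monte Carlo.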

Score-Based Models

These models estimate the score, the gradient of the log-density of the data, from training samples. Following the estimated score lets them navigate data space according to the learnt distribution and produce fresh, comparable data.
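
Taking the score to be the gradient of the log-density, sampling can be sketched with Langevin dynamics: repeatedly step along the score and add noise. The example assumes the score is known in closed form for a one-dimensional Gaussian; in an actual score-based model a neural network estimates it from data. The step size and step count are arbitrary illustrative choices.

```python
import math
import random

def score(x, mean=3.0, std=1.0):
    """Score of N(mean, std^2): d/dx log p(x) = -(x - mean) / std^2."""
    return -(x - mean) / (std * std)

def langevin_sample(score_fn, rng, steps=500, step_size=0.01, x0=0.0):
    """Unadjusted Langevin dynamics: x <- x + eps * score(x) + sqrt(2 * eps) * noise."""
    x = x0
    for _ in range(steps):
        x = x + step_size * score_fn(x) + math.sqrt(2.0 * step_size) * rng.gauss(0.0, 1.0)
    return x

rng = random.Random(0)
samples = [langevin_sample(score, rng) for _ in range(200)]
mean_estimate = sum(samples) / len(samples)
print(mean_estimate)
```

Starting every chain at 0, the samples drift towards the high-density region around the target mean, so `mean_estimate` should land close to 3.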

Restricted Boltzmann Machines (RBMs)

Restricted Boltzmann machines (RBMs) are two-layer Markov random fields used to describe distributions over binary-valued data. They are the building blocks of DBNs and DBMs. Variations include Gaussian-Bernoulli RBMs for real-valued inputs and the replicated softmax model for sparse count data.

Deep Belief Networks (DBNs)

These are probabilistic generative models with numerous hidden variable layers. The top two layers form an RBM, while the lower layers form a directed sigmoid belief network.
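
For a binary RBM, the energy function and the conditional distribution of the hidden units can be written in a few lines. The sketch assumes the standard form E(v, h) = -b·v - c·h - vᵀWh; the weights, biases, and visible vector are arbitrary illustrative values, not learnt parameters.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def energy(v, h, W, b, c):
    """E(v, h) = -b.v - c.h - v^T W h for binary visible v and hidden h."""
    visible = sum(bi * vi for bi, vi in zip(b, v))
    hidden = sum(cj * hj for cj, hj in zip(c, h))
    interaction = sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return -visible - hidden - interaction

def p_hidden_given_visible(v, W, c):
    """P(h_j = 1 | v) = sigmoid(c_j + sum_i v_i W_ij); hidden units are independent given v."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(c))]

# Hypothetical parameters: 3 visible units, 2 hidden units.
W = [[1.0, -1.0], [0.5, 0.0], [0.0, 2.0]]
b = [0.0, 0.0, 0.0]
c = [0.0, -1.0]
v = [1, 0, 1]

print(p_hidden_given_visible(v, W, c))
```

The absence of within-layer connections is what makes each hidden unit conditionally independent given the visible layer, so the whole hidden layer can be sampled in one step.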

Deep Boltzmann Machines (DBMs)

Undirected graphical models with several hidden layers that have undirected connections between them are known as Deep Boltzmann Machines (DBMs).

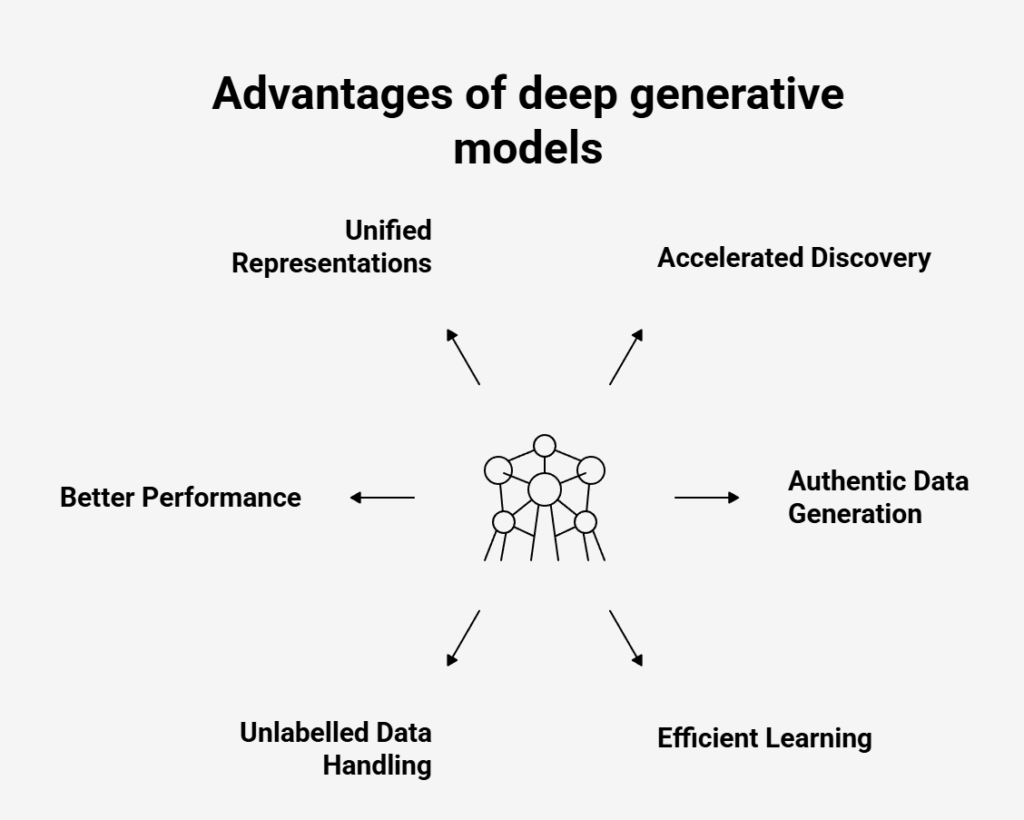

Advantages of Deep Generative Models

- Accelerated Discovery: New materials, such as electrode materials, can be found more quickly thanks to DGMs. They also make in-silico screening possible, which is quicker than conventional experiments.

- Generating Authentic Data: Because they have a thorough understanding of the training dataset’s underlying distribution and probability, they are crucial when more realistic or authentic generated data is required.

- Efficient Learning: Deep generative models can be learnt effectively in spite of their complexity.

- Handling of Unlabelled Data: The learning algorithms can make effective use of very large collections of unlabelled data for unsupervised pretraining of models.

- Better Performance: In a number of applications, such as classification, regression, object and speech recognition, and information retrieval, DBNs and DBMs have demonstrated notable gains in performance.

- Unified Representations: For classification and retrieval tasks, multimodal DBMs can combine data from many data modalities into a single, unified representation. Additionally, they are able to produce missing data modalities.

Challenges and Limitations

- Data Availability and Understanding: Suitable training data is not always available, and how these models operate internally is still not fully understood.

- Computational Complexity: Complex generative models, such as GANs, demand a lot of time and computing power to train, which calls for strong hardware.

- Output Quality: Inadequate training, a lack of data, or overly complicated models can all contribute to output that isn’t always accurate or error-free.

- Security and Trustworthiness: DGMs can be abused to produce propaganda, false information, and fraudulent material like deepfakes. It is crucial to ensure responsible use in order to stop fraud or misuse.

- Data Dependencies: Model output depends heavily on the quality of the training data, so biased or unrepresentative training data will result in biased output.

- Mathematical and Practical Issues: Developing and training a DGM for a given dataset is still difficult, and figuring out why a given model works or doesn’t is much more difficult.

- Local Optima: The loss functions of deep architectures are almost always nonconvex, which makes them challenging to optimize and prone to becoming stuck in poor local optima when gradient-based optimization is used.

Applications

There are numerous real-world uses for deep generative models in a variety of fields:

Materials Discovery

DGMs expedite the discovery of new materials, such as electrode materials, by proposing promising candidates.

Image Generation

Generative models such as GANs have transformed the process of creating realistic images for virtual reality, media, and entertainment. Widely used image generators include OpenAI DALL-E, Midjourney, and Stable Diffusion.

Text Generation

Essential to Natural Language Processing (NLP) for comprehending and producing text that is human-like, including summarising and text completion. Google PaLM, Meta LLaMA, OpenAI GPT-4, Mistral, and Zephyr are a few examples.

Audio and Music Generation

Used to create original music (e.g., MuseNet, Jukedeck) and synthesise speech (e.g., WaveNet, Tacotron).

Data Augmentation

Produce synthetic data to improve training datasets, particularly in situations where labelled data is limited, hence enhancing the effectiveness of machine learning algorithms. Data privacy in industries like healthcare and finance also depends on this. StyleGAN is one example.

Healthcare Applications

Used in drug discovery to create novel compounds with desired features and in medical image analysis to enhance and create images (such as MRIs and X-rays).

Self-Driving Car Systems

Analyse data from Lidar and visual sensors to forecast future actions and make proactive path adjustments.

Fraud Detection

Look for irregularities by contrasting recent transactions with past behaviour.

Virtual Assistants

Gain insight into individual preferences (such as musical tastes, schedules, and past purchases) in order to provide recommendations.

Entertainment Systems

Make movie recommendations based on past viewing.

Smartwatches

Alert users to possible illnesses, excessive exertion, and sleep deprivation.

Image Enhancement

Enhance digital or scanned photos by boosting sharpness, adjusting colour balance, and proposing cropping.

Caption Generation

Produce captions for meetings or films automatically, including in several languages.

Handwriting Style Generation

Create fresh text using a handwriting style you’ve learnt.

Photo Libraries

Add descriptions to photos to make finding and identifying duplicates simpler.

Information Retrieval and Classification

Visual object identification, information retrieval, classification, and regression are among the tasks that can be performed using high-level feature representations that DGMs have learnt.

Additional Details

Integration with Robotics

To speed up material discovery, deep generative models can be combined with autonomous robotics.

Discriminative versus Generative Models

- Generative models are able to produce random examples of observations and learn the complete joint probability distribution P(X,Y).

- Discriminative models concentrate on class distinction and learn the conditional probability P(Y|X=x).

- Generative models can also be employed for classification by first learning the data creation process, whereas discriminative models directly predict labels.

- Discriminative algorithms usually cannot produce fresh data, whereas generative algorithms can.
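
The contrast in the list above can be illustrated with one small generative model that both classifies and produces new examples. The sketch assumes one-dimensional data with a Gaussian fitted per class; the data values, labels, and function names are invented for the example.

```python
import math
import random
from statistics import mean, pstdev

# Hypothetical labelled 1-D training data.
data = {"small": [1.0, 1.2, 0.8, 1.1], "large": [4.0, 4.3, 3.8, 4.1]}

def fit(data):
    """Estimate P(Y) and a Gaussian P(X | Y) for each class, i.e. the joint P(X, Y)."""
    total = sum(len(xs) for xs in data.values())
    return {y: (len(xs) / total, mean(xs), pstdev(xs)) for y, xs in data.items()}

def log_joint(model, x, y):
    """log P(X = x, Y = y) = log P(y) + log N(x; mu_y, sigma_y^2)."""
    prior, mu, sigma = model[y]
    return (math.log(prior) - math.log(sigma * math.sqrt(2.0 * math.pi))
            - 0.5 * ((x - mu) / sigma) ** 2)

def classify(model, x):
    """Pick the label with the highest joint probability (equivalently, highest P(y | x))."""
    return max(model, key=lambda y: log_joint(model, x, y))

def generate(model, rng):
    """Draw a new (x, y) pair: first a label from P(Y), then x from P(X | Y)."""
    labels = list(model)
    y = rng.choices(labels, weights=[model[label][0] for label in labels])[0]
    _, mu, sigma = model[y]
    return rng.gauss(mu, sigma), y

model = fit(data)
print(classify(model, 1.3), classify(model, 3.9))
print(generate(model, random.Random(0)))
```

A discriminative model fitted to the same data would support only the `classify` step, since it never models how X itself is distributed.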

Pretraining Is Crucial

Deep Boltzmann Machines (DBMs) perform significantly better when their parameters are properly initialized. This is frequently accomplished with a greedy layerwise pretraining technique that uses a stack of RBMs. Pretraining helps build high-level representations from vast volumes of unlabelled data, after which a small amount of labelled data is used for fine-tuning.

Multimodal Data

By developing a joint density model, DGMs can be extended to model data with several modalities, such as text and images. Because the model can generate a missing modality from the others, this makes tasks like image annotation and retrieval possible.

Research Focus

One of the most active areas of artificial intelligence research is the development of DGMs.

Stanford Course

Deep Generative Models (CS236) is a course offered by Stanford University that covers the statistical underpinnings, learning algorithms, and application domains of these models. “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville is suggested as a textbook for the course.
