Hierarchical Clustering in Data Science

Introduction

Data scientists and machine learning practitioners use hierarchical clustering to build hierarchies of clusters. It is useful when the data has a nested structure or the number of clusters is unknown. There are two main variants: agglomerative and divisive. Agglomerative clustering is bottom-up, merging pairs of clusters as it moves up the hierarchy, while divisive clustering is top-down, splitting clusters as it moves down. This article focuses on divisive hierarchical clustering: its principles, advantages, disadvantages, and data science applications, along with a comparison to agglomerative clustering.

What is Divisive Hierarchical Clustering?

Divisive hierarchical clustering is a top-down clustering method that starts with all data points in one cluster and recursively splits it into smaller clusters until each data point is its own cluster or a stopping condition is reached. This method is less popular than agglomerative clustering but can be useful when the data is naturally hierarchical.

Divisive clustering involves:

- Starting with one cluster of data.

- Dividing the cluster into two or more subclusters using a splitting criterion.

- Applying the same splitting procedure to each subcluster.

- Repeating until a stopping condition is met (e.g., a target number of clusters or a similarity threshold).

How Does Divisive Hierarchical Clustering Work?

Steps of the divisive hierarchical clustering algorithm:

Step 1: Initialize the Cluster

Put all data points in one cluster.

Step 2: Choose a Splitting Criterion

The algorithm must decide how to separate a cluster. Common splitting criteria:

- Distance-based: splitting around the data points that are farthest apart.

- Variance-based: splitting to reduce within-cluster variance.

- Density-based: splitting along low-density regions between groups.

Step 3: Split Cluster

Split the cluster into two or more subclusters using the chosen criterion. This step often means finding the two data points that are farthest apart and assigning each remaining point to whichever of the two it is closer to.
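The farthest-pair split described above can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the function name `split_by_farthest_pair` is hypothetical, points are tuples of numbers, distance is Euclidean, and ties go to the first seed.

```python
import math
from itertools import combinations

def split_by_farthest_pair(points):
    """Split points into two groups seeded by the two farthest-apart points."""
    if len(points) < 2:
        raise ValueError("need at least two points to split")
    # Find the pair with the largest Euclidean distance (checks every pair, O(n^2)).
    seed_a, seed_b = max(combinations(points, 2), key=lambda pair: math.dist(*pair))
    left, right = [], []
    for p in points:
        # Assign each point to the closer seed; ties go to the first seed.
        if math.dist(p, seed_a) <= math.dist(p, seed_b):
            left.append(p)
        else:
            right.append(p)
    return left, right

points = [(0.0, 0.0), (0.5, 0.2), (1.0, 0.0), (9.0, 9.0), (9.5, 8.7), (10.0, 10.0)]
left, right = split_by_farthest_pair(points)
print(left)   # the three points near the origin
print(right)  # the three points near (10, 10)
```

If every point in the input is identical, the second group comes back empty, so a caller would normally check for that before splitting.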

Step 4: Recursively Apply Splitting

Apply the same splitting procedure to each newly produced subcluster.

Step 5: Stop When a Condition Is Met

When a predefined condition is met, the algorithm stops:

- The designated number of clusters is reached.

- Cluster homogeneity goals are met.

- No further splits are possible (e.g., each cluster has one data point).
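Taken together, the five steps might look like the following minimal sketch. It makes several assumptions that the steps above leave open: the widest cluster (largest pairwise distance) is always split next, each split is a two-way farthest-pair split, and the names `diameter`, `bisect`, `divisive_clustering`, and `min_diameter` are hypothetical.

```python
import math
from itertools import combinations

def diameter(cluster):
    """Largest pairwise distance in a cluster (0.0 for a single point)."""
    return max((math.dist(p, q) for p, q in combinations(cluster, 2)), default=0.0)

def bisect(cluster):
    """Step 3: seed two groups with the farthest pair, assign the rest by proximity."""
    a, b = max(combinations(cluster, 2), key=lambda pair: math.dist(*pair))
    left = [p for p in cluster if math.dist(p, a) <= math.dist(p, b)]
    right = [p for p in cluster if math.dist(p, a) > math.dist(p, b)]
    return left, right

def divisive_clustering(points, max_clusters, min_diameter=0.0):
    clusters = [list(points)]               # Step 1: one cluster holding everything
    while len(clusters) < max_clusters:     # Step 5: stop at the target count
        widest = max(clusters, key=diameter)  # Step 2: pick what to split (assumed rule)
        if diameter(widest) <= min_diameter:
            break                           # Step 5: every cluster is tight enough
        clusters.remove(widest)
        clusters.extend(bisect(widest))     # Steps 3 and 4: split, then loop again
    return clusters

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (20, 0), (21, 0)]
clusters = divisive_clustering(points, max_clusters=3)
for cluster in clusters:
    print(cluster)
```

Recomputing every diameter on each pass is wasteful; it is kept here only so the control flow stays easy to follow.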

Key Concepts in Divisive Hierarchical Clustering

Splitting Criteria

The quality of divisive clustering depends on the splitting criterion. Some common criteria are:

- Maximum Distance: Split the cluster by determining the two farthest points and partitioning the data by closeness.

- Minimum Variance: Split the cluster to reduce sub-cluster variance.

- Density-Based Splitting: Split non-spherical or irregular clusters using low density regions.
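As a small illustration of the minimum-variance criterion, the sketch below handles only one-dimensional data, where an optimal two-way split is contiguous in sorted order, so trying every cut point is enough. The helper names `sse` and `min_variance_split` are hypothetical, and "variance" is measured here as the within-cluster sum of squared deviations.

```python
def sse(values):
    """Within-cluster sum of squared deviations from the mean."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def min_variance_split(values):
    """Return the two-way split of 1-D values with the smallest total SSE."""
    if len(values) < 2:
        raise ValueError("need at least two values to split")
    ordered = sorted(values)
    # Try every cut point between neighbours and keep the one with the lowest cost.
    best_cut = min(
        range(1, len(ordered)),
        key=lambda i: sse(ordered[:i]) + sse(ordered[i:]),
    )
    return ordered[:best_cut], ordered[best_cut:]

values = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9, 5.1]
left, right = min_variance_split(values)
print(left, right)
print(sse(values), sse(left) + sse(right))  # total SSE before and after the split
```

In higher dimensions there is no such ordering shortcut, which is one reason heuristics such as a two-cluster k-means step are often used instead.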

Dendrogram

A dendrogram shows a cluster hierarchy as a tree. In divisive clustering, the dendrogram starts from a single root node representing the entire dataset and branches each time a cluster is split. Branch height indicates the distance or dissimilarity between the clusters being separated.

Stopping Conditions

The algorithm stops when the stopping condition is met. Common stop conditions:

- A predetermined number of clusters.

- A cluster similarity threshold.

- A minimum cluster size.

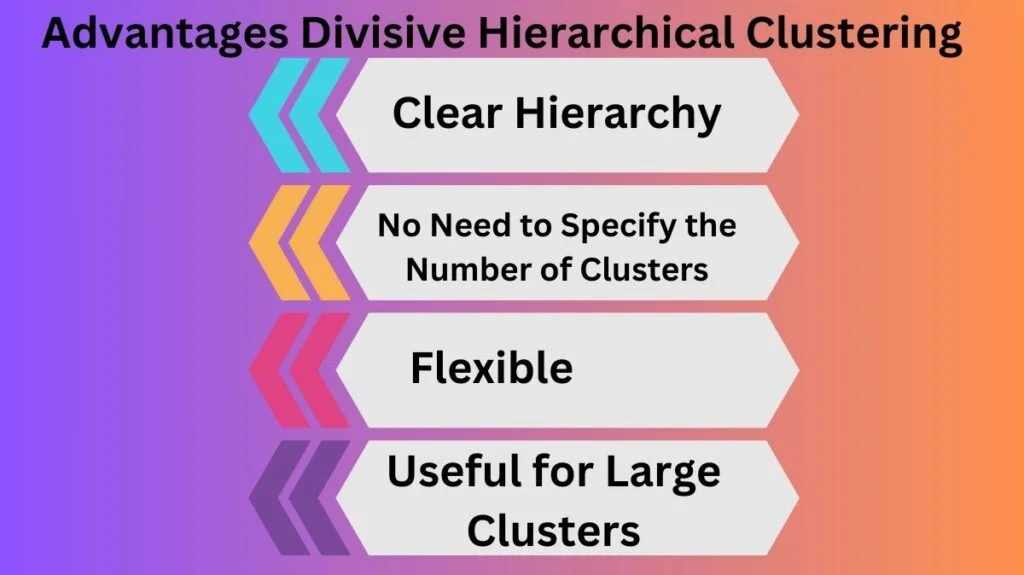

Advantages of Divisive Hierarchical Clustering

Clear Hierarchy: Divisive clustering helps explain data point relationships by creating a clear cluster hierarchy.

No Need to Specify the Number of Clusters: Unlike k-means clustering, divisive hierarchical clustering does not require a cluster count in advance. The hierarchy can be cut at any level afterward.

Flexible: The method can handle diverse data formats and splitting conditions.

Useful for Large Clusters: Divisive clustering works well for large clusters that need to be broken down into smaller, more meaningful groups.

Disadvantages of Divisive Hierarchical Clustering

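High Computational Complexity: A cluster of n points can be split into two non-empty groups in 2^(n-1) - 1 ways, so evaluating every possible split is infeasible for all but tiny datasets. Practical implementations rely on heuristics, which can still be costly for large datasets.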
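To make the cost concrete, the sketch below counts the two-way splits of a cluster and, for tiny inputs, enumerates them by brute force. The function names are hypothetical, and the enumeration assumes the items are distinct.

```python
from itertools import combinations

def count_bipartitions(n):
    """Number of ways to split n distinct points into two non-empty groups."""
    return 2 ** (n - 1) - 1 if n >= 2 else 0

def enumerate_bipartitions(items):
    """Brute-force enumeration, practical only for very small inputs."""
    items = list(items)
    if len(items) < 2:
        return []
    first, rest = items[0], items[1:]
    splits = []
    # Keep the first item on the left so each unordered split is listed once.
    # k counts the other items on the left; k < len(rest) keeps the right side non-empty.
    for k in range(len(rest)):
        for chosen in combinations(rest, k):
            left = [first, *chosen]
            right = [x for x in rest if x not in chosen]
            splits.append((left, right))
    return splits

print(len(enumerate_bipartitions("abcd")))  # agrees with count_bipartitions(4)
print(count_bipartitions(20))
print(count_bipartitions(50))
```

The count roughly doubles with each added point, which is why exhaustive search stops being an option almost immediately.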

Sensitivity to Splitting Criteria: The quality of the clusters depends on the splitting criterion, which can be difficult to choose.

Irreversible Splits: The method cannot revisit or merge split sub-clusters, which can produce poor results.

Difficulty with High-Dimensional Data: The algorithm may struggle with high-dimensional data because of the "curse of dimensionality," which makes distance metrics less meaningful.

Applications of Divisive Hierarchical Clustering

Divisive hierarchical clustering has many uses in data science and beyond. Common examples include:

Market segmentation

Businesses can use divisive clustering to segment customers by demographics, preferences, or purchase habits. The hierarchical structure supports both broad and fine-grained segmentation.

Biology/Genetics

Divisive clustering is used in bioinformatics to find groups of genes with similar expression patterns. This helps researchers understand gene functions and disease pathways.

Image Processing

Divisive clustering divides images into sections with comparable pixel intensities or textures. This aids medical imaging, object detection, and computer vision.

Social Network Analysis

In social networks, divisive clustering can discover user communities with similar interests or interactions. This aids targeted marketing and recommendation systems.

Document Grouping

Divisive clustering can organize massive document collections by content into hierarchical groups. This aids text mining and information retrieval.

Agglomerative vs. Divisive Hierarchical Clustering

Both divisive and agglomerative clustering are hierarchical, but their approaches differ:

Divisive Clustering: Starts with one cluster containing all data points and recursively splits it.

Agglomerative Clustering: Starts with each data point as its own cluster and iteratively merges the closest clusters.

Divisive clustering is generally more computationally costly, but it can work better when a dataset consists of a few major clusters that need to be broken down. Agglomerative clustering is more popular because of its simplicity and efficiency.
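For contrast with the top-down approach, here is a minimal bottom-up sketch. It assumes single linkage (the distance between two clusters is the distance between their closest members), which is only one of several possible linkage rules, and the function names are hypothetical.

```python
import math
from itertools import combinations

def single_linkage(a, b):
    """Distance between two clusters: the closest pair of points across them."""
    return min(math.dist(p, q) for p in a for q in b)

def agglomerative_clustering(points, n_clusters):
    clusters = [[p] for p in points]  # bottom-up: every point starts alone
    while len(clusters) > n_clusters:
        # Find the pair of clusters with the smallest linkage distance.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: single_linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]  # merge cluster j into cluster i
        del clusters[j]                          # j > i, so index i is unaffected
    return clusters

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (20, 0), (21, 0)]
merged = agglomerative_clustering(points, n_clusters=3)
for cluster in merged:
    print(cluster)
```

The merge loop only ever compares pairs of existing clusters, whereas a divisive step has to choose among many possible partitions of a single cluster.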

Implementing Divisive Hierarchical Clustering

scikit-learn and other machine learning libraries focus mainly on agglomerative clustering (scikit-learn's BisectingKMeans is one top-down variant), so divisive clustering is often implemented with custom code or specialized libraries. In summary, an implementation involves:

Select a Cluster Splitting Criterion: Maximum distance, minimum variance, etc.

Recursively Split Clusters: Use a recursive function to split clusters until the halting condition is met.

Visualize the Results: Create a dendrogram to show cluster hierarchies.
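One way these three steps could fit together is sketched below. It assumes the maximum-distance criterion, a nested-dict tree, and an indented text outline standing in for a graphical dendrogram; `build_tree`, `render`, and `min_size` are hypothetical names.

```python
import math
from itertools import combinations

def build_tree(points, min_size=2):
    """Recursively split points; return a nested dict describing the hierarchy."""
    node = {"points": list(points), "height": 0.0, "children": []}
    if len(points) < max(min_size, 2):
        return node                      # halting condition: cluster too small to split
    a, b = max(combinations(points, 2), key=lambda pair: math.dist(*pair))
    node["height"] = math.dist(a, b)     # dissimilarity at which this node splits
    if node["height"] == 0.0:
        return node                      # all points identical, nothing to split
    left = [p for p in points if math.dist(p, a) <= math.dist(p, b)]
    right = [p for p in points if math.dist(p, a) > math.dist(p, b)]
    node["children"] = [build_tree(left, min_size), build_tree(right, min_size)]
    return node

def render(node, depth=0):
    """Return an indented text outline of the tree, one line per node."""
    indent = "  " * depth
    lines = [f"{indent}{len(node['points'])} points, split height {node['height']:.2f}"]
    for child in node["children"]:
        lines.extend(render(child, depth + 1))
    return lines

tree = build_tree([(0, 0), (0, 1), (10, 10), (10, 11)])
print("\n".join(render(tree)))
```

Because each child is a subset of its parent, the split height never increases going down the tree, which matches how branch heights behave in a dendrogram.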

Conclusion

Divisive hierarchical clustering is a powerful and adaptable method for organizing data into hierarchies. It is less popular than agglomerative clustering because of its computational cost, but it is effective for datasets with a few large clusters that need to be broken into smaller, more meaningful groups. By understanding its principles, benefits, and drawbacks, data scientists can use divisive clustering to gain deeper insights and solve difficult problems across domains. As data science evolves, divisive hierarchical clustering remains a useful perspective on organizing and analyzing data.