Multimodal Data Fusion in Data Science

Introduction

Big data has greatly expanded both the volume and the variety of data available from many sources. Modern data includes text, images, audio, and sensor readings. Multimodal data offers several viewpoints on the same phenomenon. In healthcare, patient data includes electronic health records (EHRs), medical images, and readings from wearable devices. Autonomous vehicles use cameras, LiDAR, and radar to perceive their surroundings.

Multimodal data fusion combines multiple data sources into a single representation for analysis, modeling, and decision-making. By exploiting complementary data types, it can deliver more robust and accurate insights than any single modality. In this post, we discuss multimodal data fusion, its challenges, its methods, and its applications in data science.

The Need for Multimodal Data Fusion

Traditional data analysis focuses on a single data type, such as numerical data or unstructured text. However, many real-world problems demand a comprehensive understanding that only a combination of data types can provide. For example:

Healthcare: Medical imaging, patient history, and genomic data improve disease diagnosis and therapy.

Autonomous Vehicles: Camera, LiDAR, and radar data improve object recognition and navigation.

Social Media Analysis: Combining text, photos, and user behavior data can reveal user sentiment and trends.

Retail: Customer transaction data, reviews, and social media activity boost personalized recommendations.

Multimodal data fusion exploits synergies between data types to overcome the limitations of any single modality. This can lead to richer feature extraction, better generalization, and stronger predictive performance.

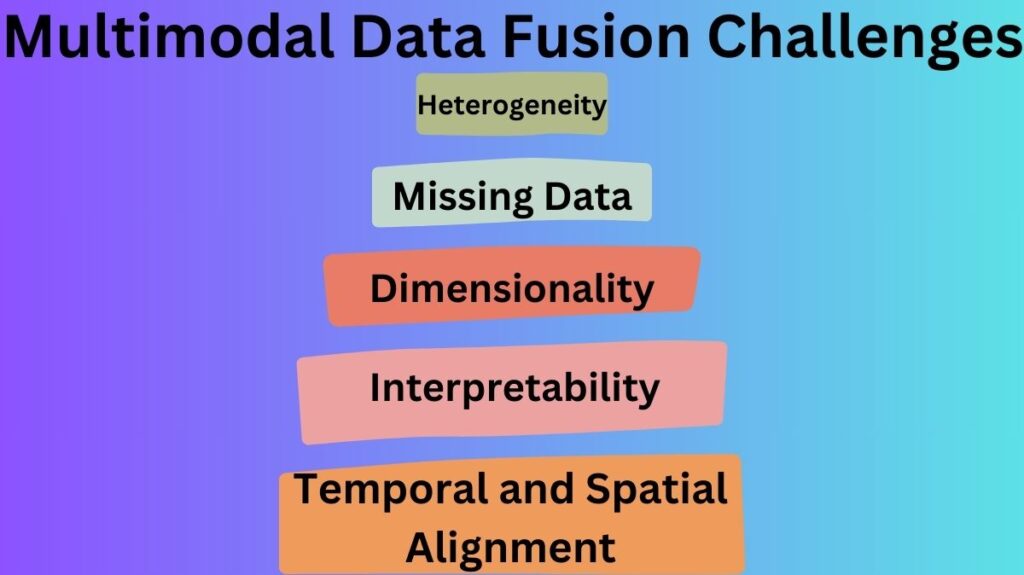

Multimodal Data Fusion Challenges

Despite its benefits, multimodal data fusion poses several challenges:

Heterogeneity: Data types differ in structure, scale, and format. Text is sequential and discrete, whereas visual data is spatial and continuous. Integrating such diverse data requires careful preprocessing and alignment.

Missing Data: Many samples do not have every modality available. Handling missing modalities while keeping the fused representation intact is difficult.
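One simple way to handle a missing modality is to zero-fill its features and append a binary flag recording whether the modality was observed, so a downstream model can learn to discount filled-in values. The sketch below assumes two NumPy feature arrays in which a row of NaNs marks a missing modality; that convention and the function name `fuse_with_missing` are illustrative assumptions, not a standard API.

```python
import numpy as np

def fuse_with_missing(text_feats, image_feats):
    """Concatenate two modalities, zero-filling missing ones and appending
    one availability flag per modality (1.0 = observed, 0.0 = missing).

    Assumed convention: each input is an (n_samples, dim) array, and a row
    that is entirely NaN means the modality is missing for that sample.
    """
    parts = []
    for feats in (text_feats, image_feats):
        observed = ~np.isnan(feats).all(axis=1)          # True if modality present
        filled = np.where(np.isnan(feats), 0.0, feats)   # zero-fill missing values
        parts.append(filled)
        parts.append(observed[:, None].astype(float))    # availability indicator column
    return np.hstack(parts)

text = np.array([[0.2, 0.4], [np.nan, np.nan]])    # sample 2 has no text
image = np.array([[1.0, 0.0, 0.5], [0.3, 0.1, 0.9]])
fused = fuse_with_missing(text, image)
print(fused.shape)  # (2, 7): 2 text + 1 flag + 3 image + 1 flag
```

Zero-filling plus an indicator is only one option; mean imputation or models that skip absent modalities are common alternatives.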

Dimensionality: Combining modalities can create high-dimensional feature spaces, leading to computational inefficiency and overfitting. Dimensionality reduction is often needed to manage this complexity.
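Principal component analysis (PCA) is one common choice for dimensionality reduction after concatenating modalities. The sketch below computes PCA directly with `numpy.linalg.svd`; the audio and video feature arrays are random placeholders, and the choice of 10 components is an arbitrary assumption for illustration.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project rows of X onto its top principal components using SVD."""
    X_centered = X - X.mean(axis=0)                 # PCA requires centered data
    # Rows of Vt are principal directions, ordered by decreasing variance
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T

rng = np.random.default_rng(0)
audio = rng.normal(size=(100, 40))    # hypothetical 40-dim audio features
video = rng.normal(size=(100, 60))    # hypothetical 60-dim video features
fused = np.hstack([audio, video])     # 100 samples x 100 concatenated features
reduced = pca_reduce(fused, n_components=10)
print(reduced.shape)  # (100, 10)
```

In practice the principal directions should be fitted on training data only and then reused to project validation and test data.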

Temporal and Spatial Alignment: When working with time-series or spatially distributed data, the data from different modalities must be aligned in time or space. Misalignment can lead to poor fusion and degraded model performance.
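A basic form of temporal alignment is resampling one stream onto another stream's timestamps. The sketch below assumes two hypothetical sensors sampled at 10 Hz and 4 Hz over the same one-second window, and uses linear interpolation via `numpy.interp`; real systems may also need clock-offset correction or more careful resampling.

```python
import numpy as np

# Hypothetical streams over the same 1 s window
t_fast = np.arange(0.0, 1.0, 0.1)     # 10 Hz sensor: 10 timestamps
x_fast = np.sin(2 * np.pi * t_fast)
t_slow = np.arange(0.0, 1.0, 0.25)    # 4 Hz sensor: 4 timestamps
x_slow = t_slow * 2.0

# Resample the slow stream onto the fast timestamps with linear interpolation.
# np.interp needs increasing t_slow and holds the end values constant outside
# its range, so t = 0.8 and t = 0.9 both receive the last slow value (1.5).
x_slow_aligned = np.interp(t_fast, t_slow, x_slow)

# One row per fast timestamp, one column per modality
aligned = np.column_stack([x_fast, x_slow_aligned])
```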

Interpretability: Fused representations learned by deep learning models are difficult to interpret. Making the fusion process transparent and explainable builds trust and accountability.

Multimodal Data Fusion Methods

Multimodal data fusion methods fall into three types: early, late, and hybrid fusion. Each has pros and cons, and the right choice depends on the application and the characteristics of the data.

- Early Fusion (Feature-Level Fusion)

Early fusion combines raw data or extracted features from several modalities at the input level, before a machine learning model is applied. This produces a single feature representation that can capture relationships between modalities.
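A minimal sketch of feature-level fusion: standardize each modality so that one with a large numeric scale does not dominate, then concatenate the results into a single feature matrix for one model. The function names and the small image and text arrays are hypothetical placeholders.

```python
import numpy as np

def standardize(X):
    """Scale each feature column to zero mean and unit variance."""
    std = X.std(axis=0)
    std[std == 0] = 1.0            # avoid division by zero for constant features
    return (X - X.mean(axis=0)) / std

def early_fusion(*modalities):
    """Feature-level fusion: standardize each modality, then concatenate."""
    return np.hstack([standardize(np.asarray(m, dtype=float)) for m in modalities])

image_feats = np.array([[120.0, 30.0], [80.0, 10.0], [100.0, 20.0]])  # hypothetical
text_feats = np.array([[0.1], [0.9], [0.5]])                           # hypothetical
X = early_fusion(image_feats, text_feats)
print(X.shape)  # (3, 3): one row per sample, ready for a single model
```

Here the scaling statistics are computed on the same data for brevity; in a real pipeline they would be computed on the training split only.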

Advantages:

- Captures interactions between modalities early.

- Can improve model performance when the modalities are highly correlated.

Disadvantages:

- Needs rigorous data preparation and alignment.

- May create high-dimensional feature spaces that are computationally expensive.

Techniques:

- Concatenation: The feature vectors of each modality are simply concatenated into one vector.

- Canonical Correlation Analysis (CCA): A statistical method that finds linear combinations of the features of two modalities that are maximally correlated with each other.

- Deep Learning: CNNs and RNNs can learn joint representations from raw data.
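To make CCA concrete, the sketch below implements the classical linear formulation in NumPy: whiten each modality's covariance, then take the SVD of the whitened cross-covariance; the singular values are the canonical correlations. The small ridge term `reg` is an added assumption for numerical stability, and the synthetic data simply plants a shared signal `z` in both modalities. Libraries such as scikit-learn also provide `sklearn.cross_decomposition.CCA`.

```python
import numpy as np

def inv_sqrt(C):
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(C)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T

def cca(X, Y, n_components=1, reg=1e-6):
    """Linear CCA: returns projection weights A, B and canonical correlations."""
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    n = X.shape[0]
    Cxx = X.T @ X / (n - 1) + reg * np.eye(X.shape[1])   # small ridge for stability
    Cyy = Y.T @ Y / (n - 1) + reg * np.eye(Y.shape[1])
    Cxy = X.T @ Y / (n - 1)
    Wx, Wy = inv_sqrt(Cxx), inv_sqrt(Cyy)                # whitening transforms
    U, s, Vt = np.linalg.svd(Wx @ Cxy @ Wy)              # singular values = correlations
    A = Wx @ U[:, :n_components]
    B = Wy @ Vt.T[:, :n_components]
    return A, B, s[:n_components]

rng = np.random.default_rng(0)
z = rng.normal(size=(500, 1))                    # shared latent signal
X = np.hstack([z, rng.normal(size=(500, 2))])    # modality 1: signal + noise features
Y = np.hstack([rng.normal(size=(500, 1)), -z])   # modality 2: noise + negated signal
A, B, corr = cca(X, Y)
print(corr)  # approximately 1.0, since both modalities contain z
```

The projections `(X - X.mean(axis=0)) @ A` and `(Y - Y.mean(axis=0)) @ B` can then be concatenated or compared as a shared, correlated representation.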

- Late Fusion (Decision-Level Fusion)

Late fusion processes each modality separately with its own model and then combines the outcomes (predictions or decisions). This approach is used when the modalities are weakly related or when processing them independently is easier.

Advantages:

- Allows modeling tailored to each modality, which suits heterogeneous data.

- Because each modality is processed separately, missing modalities are easier to handle.

Disadvantages:

- May miss important interactions between modalities.

- Merging the outputs can require a complex combination step.

Techniques:

- Voting: Combining model predictions by majority or weighted voting.

- Averaging: Averaging the models' predictions or predicted probabilities.

- Stacking: Combining model outputs with a meta-model.
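The voting and averaging strategies above can be sketched in a few lines of NumPy. The sketch assumes three hypothetical per-modality models that each output class probabilities; the probability values and the weights (imagined here as reflecting validation accuracy) are illustrative assumptions. Stacking is not shown, since it requires training an additional meta-model.

```python
import numpy as np

# Hypothetical class-probability outputs from three per-modality models
# (rows = samples, columns = classes)
p_image = np.array([[0.7, 0.3], [0.4, 0.6]])
p_text = np.array([[0.6, 0.4], [0.2, 0.8]])
p_audio = np.array([[0.3, 0.7], [0.5, 0.5]])
probs = np.stack([p_image, p_text, p_audio])   # shape (n_models, n_samples, n_classes)

# Averaging: mean of predicted probabilities, then pick the top class
avg_pred = probs.mean(axis=0).argmax(axis=1)

# Weighted averaging: assumed weights, e.g. from each model's validation accuracy
weights = np.array([0.5, 0.3, 0.2])
weighted_pred = np.tensordot(weights, probs, axes=1).argmax(axis=1)

# Majority voting: each model casts one vote for its top class;
# ties go to the lowest class index because argmax returns the first maximum
votes = probs.argmax(axis=2)                   # shape (n_models, n_samples)
n_classes = probs.shape[2]
majority_pred = np.array([np.bincount(v, minlength=n_classes).argmax() for v in votes.T])
```

Averaging keeps each model's confidence, whereas voting discards it; with well-calibrated models, probability averaging is usually the more informative of the two.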

- Hybrid Fusion

Hybrid fusion combines early and late fusion to draw on the strengths of both. Some modalities may be fused at the feature level and others at the decision level.
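As an illustration, the sketch below fuses image and text features at the feature level with one model, scores a sensor modality with a separate model, and then averages the two probabilities at the decision level. The inputs and the logistic-model weights `w_early` and `w_sensor` are hypothetical stand-ins for trained models, chosen only to make the example runnable.

```python
import numpy as np

def sigmoid(z):
    """Logistic function mapping scores to probabilities."""
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical inputs for 3 samples
image = np.array([[0.2, 1.0], [1.5, -0.3], [0.0, 0.4]])
text = np.array([[0.7], [-1.2], [0.1]])
sensor = np.array([[2.0], [-0.5], [0.3]])

# Feature level: image and text are concatenated and scored by one model.
# These weights stand in for a trained logistic model (illustrative values only).
w_early = np.array([0.8, -0.4, 1.1])
p_early = sigmoid(np.hstack([image, text]) @ w_early)

# The sensor modality gets its own separate model (again, illustrative weights)
w_sensor = np.array([0.9])
p_sensor = sigmoid(sensor @ w_sensor)

# Decision level: average the two probability estimates and threshold at 0.5
p_hybrid = (p_early + p_sensor) / 2
pred = (p_hybrid >= 0.5).astype(int)
```

Which modalities to fuse early is a design decision; grouping those that are tightly related and well aligned is a common heuristic.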

Advantages:

- Allows flexible processing of different data types and their relationships.

- Capturing both modality-specific and cross-modal interactions can improve performance.

Disadvantages:

- More difficult to tune and apply.

- Balancing different fusion levels requires careful planning.

Techniques:

- Multi-Stream Networks: Neural networks with a separate input stream for each modality, followed by a fusion layer.

- Attention Mechanisms: Dynamically weighting modalities according to their relevance to the task.
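Both techniques can be seen together in a minimal forward pass: each modality has its own stream that maps it into a shared embedding space, and an attention layer turns per-modality relevance scores into softmax weights that sum to 1. Everything here is an assumption for illustration: the encoders are random linear projections with `tanh` standing in for trained CNN or RNN branches, the query vector would be learned in a real model, and the embedding size `d = 8` is arbitrary.

```python
import numpy as np

def softmax(z):
    """Softmax over a 1-D array of scores."""
    z = z - z.max()                # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum()

rng = np.random.default_rng(0)
d = 8  # shared embedding size (assumed)

# Stream-specific encoders: random projections standing in for trained branches
W_image = rng.normal(size=(16, d))
W_audio = rng.normal(size=(12, d))
image_x = rng.normal(size=16)      # hypothetical 16-dim image features
audio_x = rng.normal(size=12)      # hypothetical 12-dim audio features

# Each stream maps its modality into the shared d-dimensional space
h = np.stack([np.tanh(image_x @ W_image), np.tanh(audio_x @ W_audio)])  # (2, d)

# Attention: score each modality embedding against a query vector
# (learned in a real model, random here), then normalize with softmax
query = rng.normal(size=d)
scores = h @ query / np.sqrt(d)
alpha = softmax(scores)            # one weight per modality, sums to 1

fused = alpha @ h                  # attention-weighted sum, shape (d,)
```

Because the weights are computed per input, the model can rely more on audio when the image is uninformative and vice versa, which fixed concatenation cannot do.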

Applications of Multimodal Data Fusion

Multimodal data fusion is used in many fields:

- Healthcare

Disease Diagnosis: Improving accuracy by combining medical imaging, patient history, and genomic data.

Personalized Medicine: Using wearable devices, EHRs, and genetic data to tailor treatments.

- Autonomous Vehicles

Enhanced Object Detection: Combining camera, LiDAR, and radar data for more reliable detection and tracking.

Path Planning: Safe and efficient route planning using sensor and map data.

- Social Media Analysis

Sentiment and Trend Analysis: Analyzing sentiment and trends using text, photos, and user behavior data.

Fake News Detection: Detecting fake news using linguistic features, user profiles, and network structure.

- Retail and E-Commerce

Recommendation Systems: Improving personalized recommendations using customer transaction data, reviews, and social media activity.

Customer Segmentation: Combining demographic, behavioral, and transactional data to segment customers for targeted marketing.

- Human-Computer Interaction

Emotion Recognition: Using facial expressions, speech tone, and physiological markers to identify user emotions.

Gesture Recognition: Interpreting user gestures using camera and wearable sensors.

Future Directions

Many trends and research directions are emerging in multimodal data fusion:

Self-Supervised Learning: Learning meaningful representations from unlabeled multimodal data without annotation.

Cross-Modal Transfer Learning: Transferring knowledge between modalities to improve performance when data is scarce.

Explainable AI: Making multimodal fusion models more interpretable and transparent.

Real-Time Fusion: Processing and fusing multimodal data in real time for applications such as autonomous driving and healthcare monitoring.

Conclusion

Multimodal data fusion is a powerful data science approach that integrates diverse data types to reach more accurate conclusions. Despite its challenges, advances in machine learning, deep learning, and data preparation are making multimodal data increasingly practical to use. As data volumes and types continue to grow, multimodal data fusion will help solve complex real-world problems across many disciplines. Combining the strengths of different data types opens new opportunities for data-driven innovation and discovery.