Feature engineering is a pre-processing step in machine learning that turns raw data into features that can be used to build a predictive model. The goal of feature engineering is to improve model performance.

What is a feature?

Machine learning algorithms require input data in order to produce an output. The input data is usually in tabular form, with rows representing instances or observations and columns representing variables or attributes. These attributes are frequently referred to as features. In computer vision, for instance, an image is an instance, and a line within the image may be a feature. Likewise, in natural language processing, a document may be an observation, and its word count may be a feature. We can therefore define a feature as an attribute that is relevant to the problem being solved.

What is Feature Engineering?

Feature engineering is the pre-processing stage in machine learning that derives features from raw data. It helps represent the underlying problem better to predictive models, which can improve the model’s accuracy on unseen data. Feature engineering is used to obtain the most effective predictor variables for the predictive model, which comprises predictor variables and an outcome variable. The four steps that make up feature engineering in machine learning are feature creation, transformations, feature extraction, and feature selection.

The processes are explained below:

Feature Creation: The process of constructing new variables that are likely to be useful in a prediction model is known as feature creation. The process is subjective, requiring human creativity and involvement. New features are formed by combining existing features through operations such as addition, subtraction, and ratios, which makes the approach quite flexible.
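
As a minimal sketch of creating a feature from a ratio of two existing ones, the example below derives a price-per-area column. The function name and the fields `price`, `area_sqm`, and `price_per_sqm` are hypothetical and chosen only for illustration.

```python
def add_ratio_feature(rows, numerator, denominator, new_name):
    """Return copies of the rows with new_name = numerator / denominator."""
    out = []
    for row in rows:
        new_row = dict(row)  # copy so the original rows are left unchanged
        denom = row[denominator]
        # Guard against division by zero; None marks an undefined ratio.
        new_row[new_name] = row[numerator] / denom if denom else None
        out.append(new_row)
    return out


# Hypothetical housing records used only for illustration.
houses = [
    {"price": 300000, "area_sqm": 100},
    {"price": 450000, "area_sqm": 150},
    {"price": 120000, "area_sqm": 0},
]
with_ratio = add_ratio_feature(houses, "price", "area_sqm", "price_per_sqm")
```

Whether such a ratio is actually useful depends on the problem, which is why this step relies on human judgment.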

Transformations: In the transformation step of feature engineering, predictor variables are adjusted to improve the model’s accuracy and performance. For example, a transformation can put all variables on the same scale, which makes the model easier to interpret and lets it handle a wide range of input values. It also keeps all features within an appropriate range, which helps avoid computational errors.
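
One common way to put variables on the same scale is min-max scaling, which maps each value into the range 0 to 1. The sketch below is only one possible transformation, and the function name and sample data are assumptions made for illustration.

```python
def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0.0 and the maximum to 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column has no spread; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


ages = [18, 30, 42, 66]  # illustrative data
scaled = min_max_scale(ages)
```

In practice the minimum and maximum would be computed on the training data only and then reused for new data.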

Feature Extraction: Feature extraction is an automated feature engineering technique that derives new variables from raw input. The primary goal of this stage is to reduce the volume of data so that it can be used and managed conveniently for modeling. Cluster analysis, text analytics, edge detection algorithms, and principal component analysis (PCA) are all methods for extracting features.
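
To illustrate the idea with a very simple form of text analytics, the sketch below reduces a raw document to a handful of numeric features. The chosen features and the function name are assumptions for illustration; methods such as PCA follow the same principle of summarizing raw input in fewer variables.

```python
def text_features(document):
    """Extract a few numeric features from a raw text document."""
    words = document.split()
    n = len(words)
    return {
        "word_count": n,
        # Average word length; 0.0 for an empty document.
        "avg_word_length": sum(len(w) for w in words) / n if n else 0.0,
        # Number of distinct words, ignoring case.
        "unique_words": len({w.lower() for w in words}),
    }


feats = text_features("the cat sat on the mat")
```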

Feature Selection: Often only some of the variables in a dataset are useful for building the machine learning model, with the rest being redundant or irrelevant. Including these redundant and irrelevant features may have a severe impact on the model’s performance and accuracy. As a result, it is critical to identify and keep the most relevant features while removing irrelevant or less important ones. In short, feature selection is the process of selecting a subset of the most relevant features from the original feature set while removing any redundant, superfluous, or noisy features.

The following are some advantages of utilizing feature selection in machine learning:

- It can help mitigate the curse of dimensionality.

- It simplifies the model, making it easier for researchers to interpret.

- It reduces training time.

- It reduces overfitting, which improves generalization.
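
The article does not name a specific selection method, so the sketch below uses a variance filter as one simple heuristic: a column whose values never change carries no information and can be dropped. The function name, threshold, and column names are hypothetical.

```python
from statistics import pvariance


def select_by_variance(columns, threshold=0.0):
    """Keep only the columns whose population variance exceeds the threshold."""
    return {
        name: values
        for name, values in columns.items()
        if pvariance(values) > threshold
    }


# Illustrative dataset stored column by column.
data = {
    "age": [25, 32, 47, 51],
    "country_code": [1, 1, 1, 1],  # constant, so it carries no information
    "income": [30, 45, 80, 62],
}
selected = select_by_variance(data)
```

A variance filter ignores the outcome variable, so it only catches uninformative columns. Other approaches score features by their relationship to the target.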

Why Feature Engineering is Necessary in Machine Learning

In machine learning, data handling and pre-processing strongly influence how well the model performs. A model built without any pre-processing or data handling may not achieve good accuracy, whereas applying feature engineering to the same model often improves its accuracy. The following points clarify why feature engineering is necessary:

Better features mean flexibility: In machine learning, we look for the best model to achieve the best outcomes. Nevertheless, we can sometimes obtain good predictions even with a less suitable model, thanks to better features. This flexibility lets you choose less complicated models, which are often preferable because they are quicker to run, simpler to understand, and easier to maintain.

Better features mean simpler models: Even if we choose suboptimal parameters, we can still get good results if we feed the model well-engineered features. With good features, it becomes less important to work hard at choosing the best model with the most optimized parameters. Good features represent the data more completely and describe the problem at hand better.

Better features mean better results: The results a machine learning model produces depend on the data we supply. Therefore, we must supply better features in order to get better results.

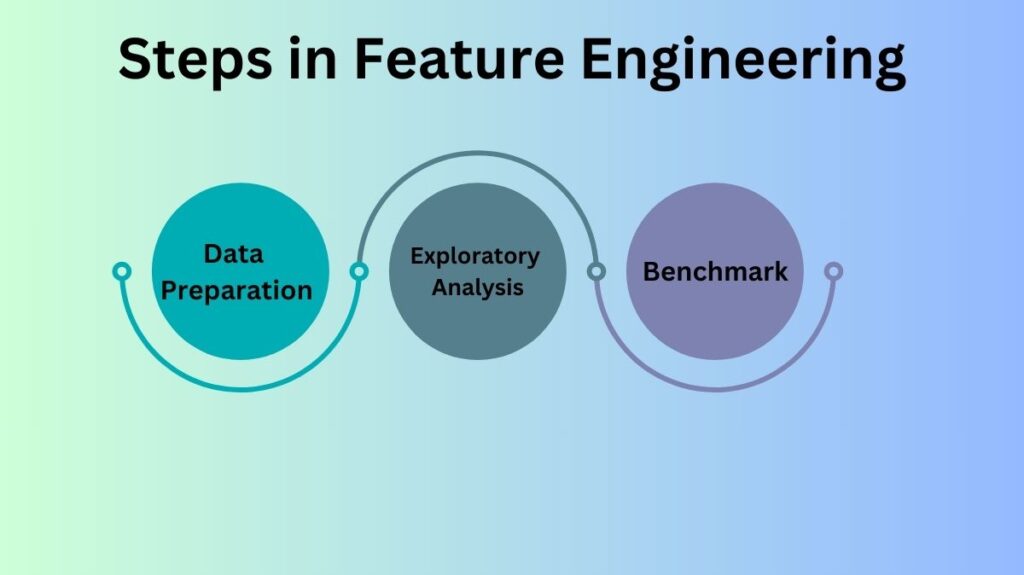

Steps in Feature Engineering

Feature engineering procedures can differ from one data scientist or machine learning engineer to another. However, most workflows include the following basic steps:

Data Preparation: Data preparation is the initial stage. In this step, raw data gathered from various sources is put into a format suitable for use in the machine learning model. Data cleaning, delivery, augmentation, fusion, ingestion, and loading are all possible components of data preparation.

Exploratory Analysis: Exploratory analysis, also known as exploratory data analysis (EDA), is a crucial phase in feature engineering that is primarily carried out by data scientists. In this step, the dataset is analyzed and investigated, and its key characteristics are summarized. Several data visualization approaches are employed to choose the best features, to select the most suitable statistical method for analysis, and to gain a better understanding of how the data sources can be manipulated.

Benchmark: Benchmarking means establishing a standard baseline for accuracy against which all variables can be compared. Comparing against a baseline helps lower the error rate and improve the model’s predictive performance.
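
One simple way to establish such a baseline for a classification task is to always predict the most frequent class seen in training. This is a sketch of one possible baseline rather than a prescribed method, and the function name and labels are hypothetical.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, test_labels):
    """Predict the most frequent training label for every test item."""
    majority, _ = Counter(train_labels).most_common(1)[0]
    hits = sum(1 for y in test_labels if y == majority)
    return majority, hits / len(test_labels)


# Illustrative labels.
train = ["no", "no", "yes", "no", "yes"]
test = ["no", "yes", "no", "no"]
label, accuracy = majority_baseline_accuracy(train, test)
```

A model or feature set that cannot beat this accuracy is adding little value.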

Techniques for Feature Engineering

- Imputation:

Feature engineering has to deal with human error, missing values, unsuitable data, general mistakes, inadequate data sources, and similar issues. Missing values in the dataset can have a significant impact on the algorithm’s performance, and the “Imputation” technique is employed to address them. Imputation is the process of filling in missing values with substitutes, such as the mean, median, or most frequent value of the column.
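
As a minimal sketch of handling missing values, the example below fills each gap with the median of the observed values. Using the median and representing missing entries as `None` are assumptions made for illustration; other fill strategies are equally possible.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


incomes = [40, None, 55, 70, None]  # illustrative data
filled = impute_median(incomes)
```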

- Handling Outliers:

Outliers are data points whose values lie so far from the other data points that they can negatively impact the model’s performance. This feature engineering technique first identifies the outliers and then removes them.

The standard deviation can be used to find outliers. Every value lies at some distance from the mean, and a value may be regarded as an outlier if that distance exceeds a chosen threshold, often a multiple of the standard deviation. The Z-score, which expresses this distance in units of standard deviation, can also be used for outlier detection.
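
The Z-score approach can be sketched as follows. The function name, the threshold, and the sample readings are assumptions for illustration, and the right threshold depends on the data.

```python
from statistics import mean, pstdev


def zscore_outliers(values, threshold=3.0):
    """Return the values whose absolute Z-score exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values are identical, so nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]


readings = [10, 12, 11, 13, 12, 11, 10, 12, 95]  # illustrative data
outliers = zscore_outliers(readings, threshold=2.0)
```

In a small sample, a single extreme value inflates the standard deviation itself, so a lower threshold such as 2 is used here. With the commonly quoted threshold of 3, the value 95 would not be flagged in this dataset.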

- Log-transformation:

The logarithm transformation, or log transform, is one of the most commonly used mathematical transformations in machine learning. It helps handle skewed data and brings the distribution closer to normal. It also lessens the impact of outliers, because compressing differences in magnitude makes the model more robust.

Note: The log transform is only defined for positive values; applying it to zero or negative values produces an error. A common workaround for data that contains zeros is to add 1 to each value before the transformation, which keeps every input positive as long as the original values are non-negative.
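
The add-1 approach described in the note can be sketched with `math.log1p`, which computes log(1 + x). The function name and the decision to reject negative inputs are assumptions made for illustration.

```python
import math


def log1p_transform(values):
    """Apply log(1 + x) to each value; inputs must be non-negative."""
    if any(v < 0 for v in values):
        # Adding 1 is only a safe shift when every value is at least 0.
        raise ValueError("log1p_transform expects non-negative values")
    return [math.log1p(v) for v in values]


incomes = [0, 9, 99, 999]  # illustrative skewed data
logged = log1p_transform(incomes)
```

The input values span three orders of magnitude, while the transformed values all fall between 0 and about 7.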

- Binning:

Overfitting, one of the primary problems that impairs model performance in machine learning, is brought on by an excessive number of parameters and noisy data. “Binning,” one of the popular feature engineering techniques, can smooth noisy data by grouping the values of a feature into bins.
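
A minimal sketch of binning with fixed edges is shown below. The function name, the bin edges, and the labels are hypothetical; each value is assigned to the bin whose range contains it, with a value equal to an edge falling into the upper bin.

```python
from bisect import bisect_right


def bin_values(values, edges, labels):
    """Map each value to a label; edges must be sorted in ascending order."""
    if len(labels) != len(edges) + 1:
        raise ValueError("need exactly one more label than edges")
    # bisect_right counts how many edges are <= v, which is the bin index.
    return [labels[bisect_right(edges, v)] for v in values]


ages = [5, 17, 18, 40, 65, 80]  # illustrative data
groups = bin_values(ages, edges=[18, 65], labels=["child", "adult", "senior"])
```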

- Feature Split:

Feature splitting, as the name implies, is the act of dividing a feature into two or more components, which can then be used or combined to create new features. This helps the algorithms understand and learn the patterns in the dataset.

By making it possible to cluster and bin the new features, feature splitting helps extract valuable information and improves the performance of the data models.
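
As an illustration, a single timestamp string can be split into several components that a model can use separately. The function name, the timestamp format, and the chosen components are assumptions for this sketch.

```python
from datetime import datetime


def split_timestamp(ts):
    """Split a 'YYYY-MM-DD HH:MM' string into separate numeric features."""
    dt = datetime.strptime(ts, "%Y-%m-%d %H:%M")
    return {
        "year": dt.year,
        "month": dt.month,
        "weekday": dt.weekday(),  # Monday is 0, Sunday is 6
        "hour": dt.hour,
    }


parts = split_timestamp("2024-03-15 14:30")
```

The resulting components, such as `hour`, can then be binned or grouped, for example into morning and afternoon.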

- One hot encoding:

One of the most commonly used encoding methods in machine learning is “one hot encoding.” This method transforms categorical data into a numeric format that machine learning algorithms can understand and use to generate predictions. It represents each category as its own binary column, so the categorical data is encoded without losing information.
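
A minimal sketch of one hot encoding is shown below. The function name and sample data are hypothetical, and sorting the categories is simply a choice made here to keep the column order predictable.

```python
def one_hot_encode(values):
    """Return the sorted categories and one binary row per input value."""
    categories = sorted(set(values))
    # Each row has a 1 in the column of its own category and 0 elsewhere.
    encoded = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, encoded


colors = ["red", "green", "blue", "green"]  # illustrative data
categories, rows = one_hot_encode(colors)
```

Each row contains exactly one 1, so the original category can always be recovered from its encoded form.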

Conclusion

This article has explained feature engineering in machine learning, including its steps and techniques. Feature engineering helps improve a model’s performance and accuracy, although it is not the only way to improve prediction accuracy. We have highlighted the most widely used feature engineering techniques, but there are many more than the ones listed above.