Introduction

Data science relies on clustering to group related data points. Among clustering algorithms, hierarchical clustering stands out for its versatility. This article examines the concepts and methods of hierarchical clustering and its applications in data science.

What is Hierarchical Clustering?

Hierarchical clustering is a method of cluster analysis that builds a hierarchy of clusters. Unlike k-means, it does not require the number of clusters to be specified in advance. Instead, it builds a dendrogram, a tree-like cluster structure that can be cut at different levels to obtain different numbers of clusters.
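As a minimal sketch of what cutting the tree at different levels can look like, the example below uses SciPy's `linkage` and `fcluster` functions. The toy points, the choice of average linkage, and the thresholds are assumptions made for illustration, not part of any particular workflow.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

# Toy data (made up for illustration): three tight pairs of points,
# two of the pairs close together and one far away.
points = np.array(
    [[0, 0], [0, 1], [4, 0], [4, 1], [20, 0], [20, 1]], dtype=float
)

# Build the hierarchy once; Z records every merge in the tree.
Z = linkage(points, method="average")

# Cut the same tree at two different levels.
labels_2 = fcluster(Z, t=2, criterion="maxclust")  # at most 2 clusters
labels_3 = fcluster(Z, t=3, criterion="maxclust")  # at most 3 clusters

# Alternatively, cut at a distance threshold instead of a cluster count.
labels_by_distance = fcluster(Z, t=2.0, criterion="distance")

print(labels_2, labels_3, labels_by_distance)
```

The hierarchy is computed only once. Asking for a different number of clusters just means cutting the same tree somewhere else.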

Hierarchical Clustering Types

There are two primary hierarchical clustering types:

Agglomerative Hierarchical Clustering: This “bottom-up” method starts with each data point in its own cluster. Moving up the hierarchy, the closest pairs of clusters are merged. The process continues until all data points belong to a single cluster or a stopping criterion is reached.

Divisive Hierarchical Clustering: This “top-down” method starts with all data points in a single cluster. That cluster is then recursively split into smaller clusters until each data point is in its own cluster or a stopping criterion is satisfied.

Due to its simplicity and computational efficiency, agglomerative clustering is the more popular of the two.

The Hierarchical Clustering Process

Step 1: Initialization

Agglomerative hierarchical clustering starts by treating each data point as its own cluster. If there are n data points, there will be n clusters at the start.

Step 2: Distance Matrix Calculation

Next, calculate the distance between every pair of clusters. The distance measure used depends on the data and the goals of the analysis. Common choices include Euclidean distance, Manhattan distance, and cosine distance (one minus cosine similarity).
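The sketch below shows one way these measures and a pairwise distance matrix could be computed in plain Python. The function names and sample points are illustrative assumptions. Cosine is expressed here as a distance, that is, one minus the cosine similarity.

```python
import math

def euclidean(p, q):
    # Straight-line distance between two points.
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def manhattan(p, q):
    # Sum of absolute coordinate differences.
    return sum(abs(a - b) for a, b in zip(p, q))

def cosine_distance(p, q):
    # One minus cosine similarity; undefined for zero-length vectors.
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return 1.0 - dot / (norm_p * norm_q)

def distance_matrix(points, metric):
    # Full symmetric n x n matrix of pairwise distances.
    n = len(points)
    return [[metric(points[i], points[j]) for j in range(n)] for i in range(n)]

# Made-up sample points; Euclidean is used because one point is the origin.
points = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
D = distance_matrix(points, euclidean)
print(D)
```

At the start every cluster holds a single point, so the initial cluster-to-cluster distances are simply these point-to-point distances.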

Step 3: Merge Closest Clusters

The two nearest clusters are merged into one. The distances between the new cluster and the remaining clusters are then recalculated.

Step 4: Update Distance Matrix

The distance matrix is updated with the distances to the newly formed cluster. This step is critical for correctly identifying the next pair of closest clusters.

Step 5: Repeat

Repeat steps 3 and 4 until all data points are in a single cluster or a stopping criterion is satisfied. The stopping criterion could be a target number of clusters or a distance cutoff.
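The merge loop described in the steps so far can be sketched in a few lines of plain Python. This is a deliberately naive illustration. It assumes the single-linkage rule (distance between the closest pair of points), stops at a target cluster count, and recomputes cluster distances on every pass instead of maintaining a distance matrix. The names `agglomerate` and `single_linkage` are hypothetical.

```python
import math
from itertools import combinations

def single_linkage(a, b, points):
    # Cluster distance = smallest distance between any pair of members.
    return min(math.dist(points[i], points[j]) for i in a for j in b)

def agglomerate(points, n_clusters=1):
    # Step 1: every point starts as its own cluster (stored as index lists).
    clusters = [[i] for i in range(len(points))]
    merges = []  # history of (cluster_a, cluster_b, distance)
    # Step 5: repeat until the stopping criterion (cluster count) is met.
    while len(clusters) > n_clusters:
        # Steps 2 and 4: compute distances for all current cluster pairs.
        (i, j), d = min(
            (
                ((i, j), single_linkage(clusters[i], clusters[j], points))
                for i, j in combinations(range(len(clusters)), 2)
            ),
            key=lambda item: item[1],
        )
        # Step 3: merge the closest pair into one cluster.
        merges.append((clusters[i], clusters[j], d))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return clusters, merges

# Made-up toy data: two nearby pairs and one distant point.
points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
clusters, merges = agglomerate(points, n_clusters=2)
print(clusters)
```

Library implementations avoid the repeated recomputation by updating a distance matrix in place. The loop structure is the same.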

Step 6: Dendrogram Construction

A dendrogram, a tree-like diagram of the merges (or, for divisive clustering, the splits), shows the full clustering process. The height at which two branches join shows the distance at which the corresponding clusters were merged.
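To make the link between merge distance and dendrogram height concrete, the sketch below inspects the linkage matrix that SciPy produces and builds the dendrogram layout without drawing it (`no_plot=True`). The toy points and the choice of single linkage are assumptions for illustration.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage, dendrogram

# Made-up toy data: two pairs of neighbours and one distant point.
points = np.array([[0, 0], [0, 1], [4, 0], [4, 1], [20, 0]], dtype=float)

Z = linkage(points, method="single")

# Each row of Z describes one merge:
# [index of cluster a, index of cluster b, merge distance, size of new cluster]
merge_heights = Z[:, 2]

# Compute the dendrogram layout only; no plotting backend is needed.
tree = dendrogram(Z, no_plot=True)
leaf_order = tree["ivl"]  # leaf labels in left-to-right order

print(merge_heights)
print(leaf_order)
```

Dropping `no_plot=True` draws the tree with Matplotlib, provided it is installed. The values in `merge_heights` are the heights at which the branches join.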

Linkage Criteria

The linkage criterion determines how the distance between two clusters is calculated. Different linkage criteria can give different clustering results. Common linkage criteria include:

Single Linkage: The distance between two clusters is the smallest distance between any two points, one from each cluster.

Complete Linkage: The distance between two clusters is the largest distance between any two points, one from each cluster.

Average Linkage: The distance between two clusters is the average distance over all pairs of points, one from each cluster.

Ward’s Method: Ward’s method aims to minimize within-cluster variance. The distance between two clusters is defined by the increase in the sum of squared errors (SSE) that results from merging them.
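The four criteria can be compared by computing them by hand for two small clusters. The sketch below is illustrative only. The clusters `A` and `B` and the helper names are made up, and the Ward value is computed directly as the increase in SSE, which may be scaled differently from what a given library reports.

```python
import math
from itertools import product

def pairwise(a, b):
    # All distances between a point in cluster a and a point in cluster b.
    return [math.dist(p, q) for p, q in product(a, b)]

def single(a, b):
    return min(pairwise(a, b))

def complete(a, b):
    return max(pairwise(a, b))

def average(a, b):
    d = pairwise(a, b)
    return sum(d) / len(d)

def centroid(c):
    return tuple(sum(x) / len(c) for x in zip(*c))

def sse(c):
    # Sum of squared distances from each point to the cluster centroid.
    m = centroid(c)
    return sum(math.dist(p, m) ** 2 for p in c)

def ward_increase(a, b):
    # Increase in total SSE caused by merging clusters a and b.
    return sse(a + b) - sse(a) - sse(b)

# Two made-up clusters of two points each.
A = [(0.0, 0.0), (0.0, 2.0)]
B = [(3.0, 0.0), (3.0, 2.0)]

print(single(A, B), complete(A, B), average(A, B), ward_increase(A, B))
```

Single linkage reports the smallest of the four pairwise distances and complete linkage the largest, with average linkage between them. The Ward value is on a squared-distance scale, so it is not directly comparable to the other three.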

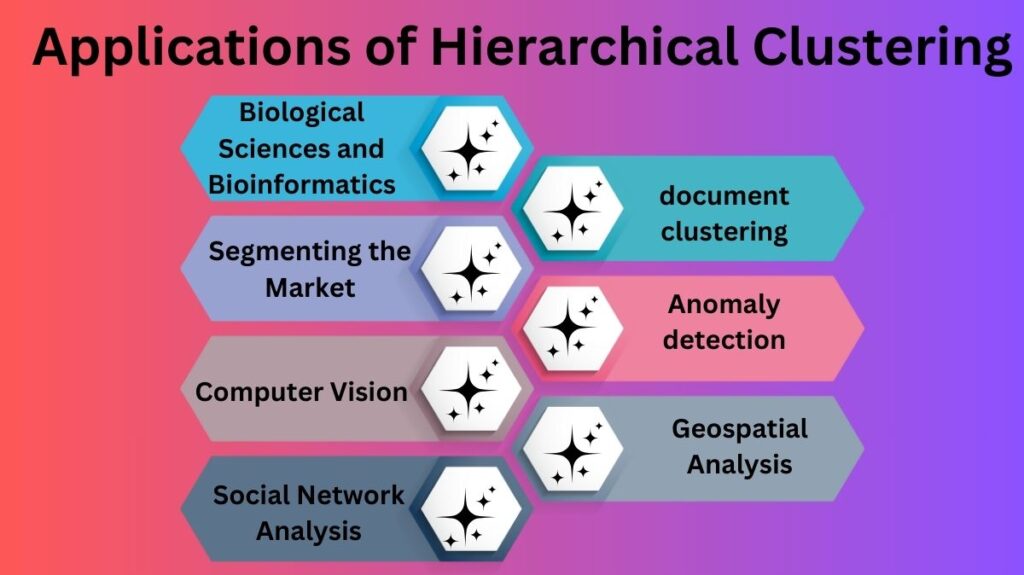

Applications of Hierarchical Clustering in Data Science

Hierarchical clustering is a versatile and widely used clustering approach in data science. Because it builds a hierarchy of clusters without requiring the number of clusters to be specified in advance, it suits a variety of applications across diverse disciplines. Below, we cover some of its important uses in data science.

1. Biological Sciences and Bioinformatics

In bioinformatics, hierarchical clustering is used to analyze gene expression data. Grouping genes with similar expression patterns helps researchers find co-regulated genes and the biological processes they take part in. This improves the understanding of gene function and supports the search for disease biomarkers and treatment targets. Hierarchical clustering is also applied to protein sequences, phylogenetic trees, and other biological data to reveal evolutionary relationships and functional connections.

2. Market Segmentation

In marketing, hierarchical clustering is used to segment customers by demographics, preferences, and purchasing behavior. By grouping customers with similar traits, businesses can improve customer targeting, satisfaction, and marketing effectiveness. For example, a retailer can segment its customers and create a targeted campaign for each segment.

3. Computer Vision and Image Processing

Hierarchical clustering is used for image segmentation and object recognition. By clustering pixels with similar color or intensity values, it can find regions of interest in an image. In medical imaging, this can help detect tumors and other abnormalities. Hierarchical clustering also supports computer vision tasks such as facial recognition and scene interpretation by grouping related objects or features.

4. Social Network Analysis

In social network analysis, hierarchical clustering finds groups of people with similar interests, habits, or relationships. By clustering people according to their interactions or social affiliations, researchers can identify communities, patterns of influence, and the overall structure of a social network. This helps with targeted advertising, recommendation systems, and the study of how diseases and information spread through a network.

5. Text Mining and Document Clustering

Many NLP and text mining applications use hierarchical clustering to group similar documents. This aids document organization, topic modeling, and information retrieval. For example, a news aggregator may use hierarchical clustering to group articles on similar subjects and help readers locate relevant content. Hierarchical clustering can also uncover themes and sentiments in customer reviews, social media messages, and other text data.
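A toy version of grouping articles by subject might look like the sketch below. It assumes simple bag-of-words counts, cosine distance, and average linkage with SciPy. The four headlines are invented for illustration, and a real pipeline would normally use a more robust text representation.

```python
from collections import Counter

import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster
from scipy.spatial.distance import pdist

# Invented headlines: two about finance, two about sport.
docs = [
    "stocks fall as markets slide",
    "markets rally as stocks rise",
    "team wins the football final",
    "football team loses the final",
]

# Bag-of-words: one row per document, one column per vocabulary word.
tokens = [d.split() for d in docs]
vocab = sorted({w for t in tokens for w in t})
X = np.array([[Counter(t)[w] for w in vocab] for t in tokens], dtype=float)

# Condensed vector of pairwise cosine distances, then the hierarchy.
D = pdist(X, metric="cosine")
Z = linkage(D, method="average")

# Cut the tree into at most two groups.
labels = fcluster(Z, t=2, criterion="maxclust")
print(labels)
```

Cosine distance is a common choice for text because it compares the mix of words in two documents rather than their length.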

6. Anomaly Detection

Hierarchical clustering can find outliers in a dataset. After clustering the data and inspecting the dendrogram, anomalies show up as data points that do not fit any cluster well or that form small, isolated clusters. This helps discover anomalous patterns or behaviors in fraud detection, network security, and quality control.
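One simple way to turn this idea into code is to cut the tree at a distance threshold and flag points that end up in very small clusters. The sketch below assumes single linkage, a threshold of 2.0, and a minimum cluster size of 2. All three are arbitrary choices for this made-up data, not recommended defaults.

```python
from collections import Counter

import numpy as np
from scipy.cluster.hierarchy import linkage, fcluster

# Made-up data: a tight square of four points and one far-away point.
points = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [10, 10]], dtype=float)

Z = linkage(points, method="single")

# Cut the tree wherever the merge distance exceeds the threshold.
labels = fcluster(Z, t=2.0, criterion="distance")

# Flag points whose cluster is smaller than the minimum size.
sizes = Counter(labels)
min_size = 2
outliers = [i for i, lab in enumerate(labels) if sizes[lab] < min_size]
print(outliers)
```

In practice the threshold and minimum size would be chosen by inspecting the dendrogram for the dataset at hand.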

7. Geospatial Analysis

In geospatial analysis, hierarchical clustering groups regions with similar climate, population density, or economic activity. These groupings aid urban planning, resource allocation, and environmental monitoring. For example, hierarchical clustering can help identify land-use patterns or analyze the geographic distribution of a disease.

Conclusion

Hierarchical clustering is a versatile data science tool. Gene expression analysis, market segmentation, image processing, social network analysis, document clustering, anomaly detection, and geospatial analysis benefit from its capacity to reveal hidden data patterns and structures. Hierarchical clustering helps data scientists get deeper insights into their data, enabling better decision-making and new solutions across disciplines.